freshidea - Fotolia

Why Spark DataFrame, lazy evaluation models outpace MapReduce

Learn how the Spark DataFrame execution plan works and why its lazy evaluation model helps the processing engine to avoid the performance issues inherent in Hadoop MapReduce.

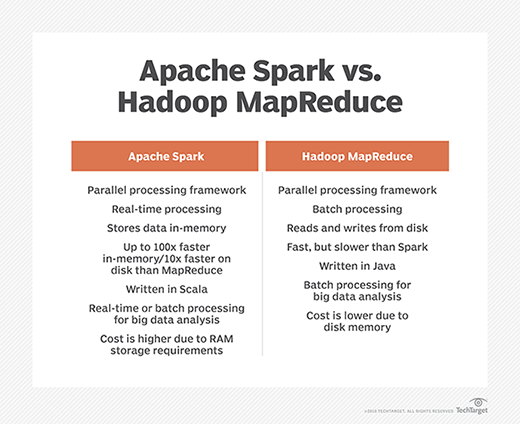

The Apache Spark programming model and execution engine is rapidly gaining popularity over Hadoop MapReduce as the foundation of high-performance applications for large-scale data processing for a number of reasons.

First, the Spark programming model is both simple and general, enabling developers to combine data streaming and complex analytics with a familiar SQL-based interface for data access and utilization.

Second, the execution environment is designed for optimization because it takes advantage of in-memory processing and parallel execution across a cluster of distributed processing nodes.

Third, the approach to composing parallel operations prior to execution is suited to additional optimizations where some computation costs can be reduced -- or even eliminated -- and to pipelining query results so users don't have to wait for the entire execution to finish to begin to see the data.

Spark DataFrame model built for speed

A typical approach to application software blends the use of data relegated to persistent storage loaded by computing nodes on-demand. For example, when an analyst executes a SQL query using a relational database, the CPU streams the records from the queried database in from stored tables. Then, the query's conditions are evaluated and the resulting filtered records are forwarded back to the requestor.

However, if the user wants to use the result set for a subsequent request, that data set would either need to be stored in a temporary table or the analyst must reconfigure the sequence of the queries into increasingly complex requests to get answers. Going back and forth to the disk to access the data, as Hadoop MapReduce does, introduces data latency delays, and as data sets grow in size, that performance hit increases, as well.

The Spark DataFrame is a data structure that represents a data set as a collection of instances organized into named columns. In essence, a Spark DataFrame is functionally equivalent to a relational database table, which is reinforced by the Spark DataFrame interface and is designed for SQL-style queries. However, the Spark model overcomes this latency challenge in two ways.

First, Spark programmers can direct the environment to cache certain DataFrames in memory. That means that instead of reading the data from the disk into a DataFrame data structure each time a query is requested, the data in the DataFrame remains in memory. As a result, there are no latency delays after the first time the DataFrame is populated. As long as the system has enough memory to hold the entire DataFrame, the performance of each subsequent query will be limited only by the time it takes to scan the data resident in memory.

Spark's lazy evaluation model acts fast

Second, Spark's execution model relies on what is called lazy evaluation. In Spark, operations are generally broken up into transformations applied to data sets and actions intended to derive and produce a result from that series of transformations.

Transformations on DataFrames are those types of operations that are applied to all of the instances in a DataFrame. These operations include things such as loading a data set; adding a new column; sorting; grouping by a column's values; mapping; set operations, such as intersecting or uniting DataFrames; and SQL operations, like joining two DataFrames or filtering a subset of records from the DataFrame.

Actions are operations that are intended to provide some results, and examples include aggregations, such as count, sum, minimum, maximum or average of values in a column; reduction operations; or collections, including the show and take operations that deliver a set of instances from the DataFrame to the user.

Lazy evaluation means the execution of transformations isn't triggered until there is an action that needs results. However, what that really means is that the sequence of transformation operations can be configured as an execution plan that can be analyzed for opportunities for optimization.

For example, let's say the user wants to join two DataFrames that contain customer data based on a common identifier, but then filter out the records for customers located in California from the joined table. In a procedural execution environment, the join would be performed first, and the result set would be scanned for those records with an address in California.

In Spark, the analysis might show that the number of records from California in each of the sets to be joined is relatively small; it can then switch the order of operations so that those subsets of the DataFrames are filtered first, and the interim result sets are joined afterward. The outcome is that the process runs much faster for two reasons: the filters are applied to separate tables and both can be done in parallel, and the resulting sets to be joined are much smaller, reducing the time it takes for the join to execute.

Lazy evaluation in Spark was designed to enable the processing engine to avoid the performance issues inherent in Hadoop's MapReduce engine, which executes each task in batch mode. By building an execution plan that isn't put into effect until a result must be delivered, the integrated query optimization algorithms can significantly speed Spark's performance.