5 principles of a well-designed data architecture

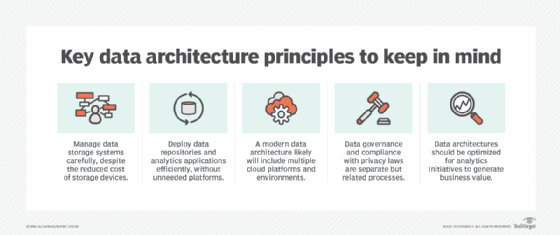

Here are five core data architecture principles to help organizations build a modern architecture that successfully meets their data management and analytics needs.

Have you seen real estate listings that describe a house as architect-designed? It adds a lot to the asking price, but aren't all houses designed by architects? Most are, but they're then rather generically churned out by a construction company. If your house counts as architecture, though, someone designed it for specific needs and tastes.

In the same way, your organization stands to benefit when your data architecture is put together based on your particular data management and analytics needs. But often, we inherit many of the generic components and integrations from the product designs of our technology vendors. If we've customized some of the structure of a data architecture, that may have happened ad hoc, over a long time.

It's hard to offer a prescriptive architecture to meet all your needs from the start. But I can suggest some rules of thumb and best practices that help define an effective modern data architecture. Here are five data architecture principles to keep in mind.

1. Storage is a commodity but still a consideration

Not very long ago, data storage was expensive. So much so that defining storage formats, backup strategies and archiving plans was an important part of the data architect's work. Even the data types of individual fields in a record might be chosen and tweaked specifically to reduce storage costs.

How things have changed. I no longer have to requisition a terabyte of storage from a specialist supplier, along with its rack mounting, power supply and cooling fans. Today I can save a terabyte to an SD card, or even a microSD one. At one time, that would have been enough to run the world's largest databases. Storage -- whether in the cloud or on premises- - is now a commodity.

That has three positive results. First, of course, we save a lot of money. Also, modern data architectures no longer involve complex processes just to reduce storage costs. If some data transformations in a data pipeline can be done more easily by using temporary cloud storage, do it. The added cost may prove negligible. Finally, we can store much more data than before, including aged or supplementary data sets that once would have been archived. Now, the full scope of our enterprise data is available for use.

Even so, data architecture best practices should still consider data storage. For example, storing data close to where it's processed offers performance advantages that may be necessary for real-time analytics and operations. Even on-premises storage is cheap enough to make that practical these days. Also, we may choose to keep large volumes of historical data continuously available, because we can afford to. But restoring such a system in the event of an outage may take much longer than if we partitioned current and older data.

2. Analytics should follow the data

From the early days of data warehouses through the emergence of business intelligence systems and on to today's machine learning pipelines, I've held this to be one of the most practical data architecture principles: Analytics follows the data. It's generally more effective to deploy an analytics tool close to the data source than to move the data to the analytics environment.

Most analytics applications reduce data to only the fields we need. If you know the SQL programming language, you'll remember how fast you were taught not to use SELECT *, a famously bad beginner's query that returns data from every column in a table. Likewise, in data analytics, we usually select specific records to analyze. A lot of our computational effort involves removing data from the process.

With that in mind, it makes little sense to move all your source data into a new environment when the first task of the data analyst is to reduce the data. It's more efficient to do the required data modeling, reduction and shaping work in the source system.

You could also leave source data in on-premises systems for analysis, instead of migrating it to the cloud. That's why BI applications moved to the cloud relatively slowly: When most data was processed and stored on premises, it made sense to do the analytics there, too.

Performance is better with less data movement, the data architecture is less complex with fewer environments to administer and data governance feels easier with fewer user access controls required.

3. Multi-cloud environments are the norm

There is no such thing as the cloud. OK, that's a little controversial, but I hope I got your attention. What I mean is that when we talk about the cloud, we're being lazy. There isn't one cloud. For most enterprises, there may be several -- indeed, many -- different cloud services in use. They often run on different cloud platforms, with more or less (often less) connectivity and integration between them.

For example, it's common today to use cloud-based CRM, expense management and HR systems. But if you want to analyze data across these applications -- to measure sales team costs and effectiveness, say -- you may have to integrate multiple platforms as part of your data architecture. The issues involved may not be as challenging as in the departmental data silos found in many on-premises systems. Cloud applications often have much better APIs and metadata than traditional applications. But you do still have to account for diverse data structures and differing system latency.

4. Don't confuse data governance with compliance

With growing public concern about data privacy and increasing legislation around the world, the use and abuse of enterprise data is no longer just a technical matter. Regulatory compliance worries everyone from the chief marketing officer, who has to find new ways to manage mailing lists and advertising, to the CFO, who frets about the onerous fines imposed for data breaches.

The architectural answer to these anxieties often involves data governance tools and other data management software. For example, an enterprise data catalog classifies and manages the use of data from all sorts of sources, including both operational and reporting systems. An analytics catalog also classifies items but focuses on the artifacts we build on top of the data, such as dashboards and data visualizations. It helps in governing data usage rather than the sources themselves.

Other governance tools incorporated into a data architecture may offer integrated security, such as single sign-on platforms for various technologies. Some tools track where data is stored, moved and used, because privacy laws vary between countries and regions.

Useful as these tools may be, we must bear in mind a critical principle: Governance and compliance are not the same thing, but there's an important bidirectional relationship between them.

Compliance is easy to describe: Does your organization's use of data follow the relevant laws, guidelines and rules? In other words, do your processes check all the right boxes? These checkboxes may be demanded by local, national and international laws, such as GDPR in Europe or CCPA and HIPAA in the U.S. They may also be required by standards bodies, such as ISO and NIST. Internal rules and procedures also represent a form of compliance. Miss even one box and you may not be compliant, with potential consequences. That's pretty straightforward in theory, if complex in practice.

Governance, on the other hand, feels a little paradoxical. It's concerned not with making the right decisions but with making decisions the right way: Do you follow the best processes? Data governance requires a set of policies and processes defined in advance, followed in action and auditable in retrospect. These include not only data architecture best practices but business ones, too.

It's possible to be compliant without being well-governed -- you may check all the boxes by good luck rather than good judgment. But that's unlikely to happen and even more unlikely to be sustainable. In this way, data governance and compliance go together, with governance as the more fundamental data architecture component.

5. Data that isn't analyzed is a wasted asset

It's a cliché of modern data management that data is a business asset. But data that just sits there is only a cost center, requiring maintenance without providing any business benefits. We start to realize its value when we use it, especially in new ways.

Some organizations have found new sources of revenue by monetizing their data. High-value data, such as detailed consumer information, can be an important revenue stream. But for most enterprises, data monetization is a side hustle.

Analytics, though, unlocks new business insights and thus new value. BI helps executives plan business strategies and track performance metrics. New augmented analytics tools built into BI software bring machine learning into the business mainstream to aid in data preparation and analysis. Data scientists use machine learning algorithms and other advanced analytics techniques to predict business issues and opportunities and to find patterns in data sets that are too complex, too big or too fast for human eyes.

It's worth optimizing your data architecture to support the analytics process. Consider how you move data around: Are there advantages to keeping it on premises? Are there security or governance concerns that require data to be stored in a particular country or state? These and other factors can help you find the architectural design that works best for your organization.

Data architecture design thinking

Of course, these five principles aren't all you need to build an effective data architecture. But they can point you to some useful patterns of thinking. In real estate listings, you often come across homes that started out with a generic architecture but were made unique by their owners. Your data architecture should go in the same direction. It may not have the romance of a dream home, but it's one of the most important investments your organization will ever make.