
Top -- and bottom -- 5 Apache Kafka use cases

Apache Kafka has many applications in big data, but what enterprise use cases are the best fit for the tool? Experts discuss where Kafka works best for your data.

Apache Kafka is a hot tool right now, and many enterprises are looking at it because of its prowess in big data applications, particularly streaming data. It's ideal for a variety of streaming data use cases such as ingesting and analyzing logs, capturing IoT data and analyzing social feed data.

Apache Kafka is at the core of many modern data pipelines and excels in use cases where data is created and/or handled in event or record formats.

"It's a very good fit as a central message bus, connecting software components and handling distinct processing steps of more complex workflows," said Heikki Nousiainen, CTO and co-founder of Aiven, a database as a service provider.

For engineering teams working with large amounts of data, Kafka offers unrivalled scale. The Kafka ecosystem is also particularly strong and allows users to integrate many systems for upstream data capture and downstream data processing.

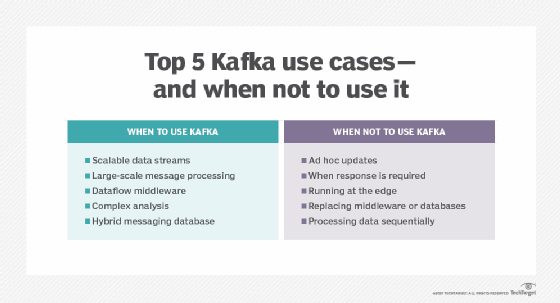

But while Kafka is a powerful tool, it's not the right messaging tool for all applications. Here are five Kafka use cases that make sense, and five places where it is not a good fit.

Strong Kafka use cases

Scalable and configurable streams. "Kafka is great for building data processing solutions that can scale easily [and] are efficient and resilient," said Kiran Chitturi, CTO Architect at Sungard Availability Services, a disaster recovery tools provider. This includes log aggregation, operational metrics or IoT stream processing, which need low latency and have various data sources and multiple consumers.

Large-scale message processing. "Kafka's biggest selling point for use cases is for large-scale message processing and website activity tracking," said Nousiainen. It's particularly well suited to cases where engineers struggle to process data in real time.

The most common Kafka use cases Nousiainen sees are retailers tracking customer sentiment and internet service providers processing messaging streams. In both examples, Kafka is deployed to store and analyze data in real time. Kafka is also used in IoT applications where manufacturers can use it to source data from IoT sensors and devices and transfer it to third-party systems to read.

A key differentiator from more traditional message brokers is that Apache Kafka can simplify data reuse and innovation by repurposing existing, available data flows.

Dataflow middleware. Enterprises are increasingly turning to dataflow solutions like Apache NiFi to scale their ability to ingest data. NiFi can simplify the process of getting data from 100,000 machines on an ongoing basis. But plugging in a solution like Apache NiFi by itself can overwhelm downstream applications like data warehouses or analytics engines, said Dinesh Chandrasekhar, head of product marketing for the Data-in-Motion business unit of Cloudera.

He sees Kafka acting as a streams messaging layer in the middle. NiFi can pump data into Kafka at high speed, and every other application that needs the data can pull from Kafka topics at a pace it's comfortable with.
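The buffering idea can be shown with a toy model. The sketch below is not Kafka client code: `TopicLog`, `append` and `poll` are hypothetical names for an in-memory stand-in. It assumes a topic behaves like an append-only log and that each consuming application keeps its own read position.

```python
from collections import defaultdict


class TopicLog:
    """Toy in-memory stand-in for one topic (hypothetical, not a Kafka API)."""

    def __init__(self):
        self._records = []                # append-only list of records
        self._offsets = defaultdict(int)  # next offset to read, per consumer name

    def append(self, record):
        """Producer side: add a record and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, consumer, max_records):
        """Consumer side: return up to max_records unread records for this consumer."""
        start = self._offsets[consumer]
        batch = self._records[start:start + max_records]
        self._offsets[consumer] = start + len(batch)
        return batch


log = TopicLog()
for i in range(5):
    log.append(f"event-{i}")

# A fast consumer drains everything at once; a slow one takes small batches.
fast = log.poll("warehouse", max_records=5)
slow = log.poll("analytics", max_records=2)
slow_rest = log.poll("analytics", max_records=10)
```

Because each consumer only advances its own offset, the slow reader neither blocks the producer nor affects what the fast reader sees.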

"Kafka can scale up to millions of messages per second easily," said Chandrasekhar. Having Kafka in the middle also allows for multiple applications to receive the same data at the same time, making the pipeline more open and pluggable.

Complex analysis. Many types of analytics need to combine real-time data with structured historical data. These kinds of Kafka use cases need persistence, redundancy and the potential for analytics in real time, said Keith Kohl, SVP of product management at Information Builders, an analytics tools provider.

Kafka behaves partially like a logging database and allows introspection of the most recent message or recent set of messages. This means in addition to looking for outliers, you can run complex analysis on the data as it relates to previous messages.
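As a rough illustration of analyzing a message against the ones before it, the sketch below keeps a small window of recent readings and flags values that deviate sharply from that window. It is plain Python over a list rather than a Kafka consumer; the function name, window size, threshold and sample readings are all assumptions made for the example.

```python
from collections import deque
from statistics import mean, pstdev


def flag_outliers(readings, window=5, threshold=3.0):
    """Return (index, value) pairs that deviate strongly from the preceding window."""
    recent = deque(maxlen=window)  # holds only the most recent `window` values
    flagged = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            # Skip the check when the window is flat, to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append((i, value))
        recent.append(value)
    return flagged


# Hypothetical sensor readings with one obvious spike at index 5.
readings = [20.0, 20.5, 19.5, 20.0, 20.5, 35.0, 20.0, 19.5]
outliers = flag_outliers(readings)
```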

"Kafka's architecture allows for this type of execution without the heavy processing requirements that would be necessary after loading the data into a data store or data lake," Kohl said.

Hybrid messaging database. Another one of Kafka's key strengths lies in combining the quality of a message queue with that of a database, Kohl said. Kafka use cases that play to these strengths involve:

- analytic or operational processes -- such as Complex Event Processing (CEP) -- that use streaming data but need flexibility to analyze the trend rather than just the event; and

- data streams with multiple subscribers.

"The Kafka architecture is designed to help solve the problems associated with these types of use cases that require streaming data with fault-tolerant storage, high performance and scalability," Kohl said. Additionally, it's designed to manage data efficiently through compression.

How LinkedIn uses Kafka

Apache Kafka was originally developed at LinkedIn and was open sourced in 2011; it became a top-level Apache project in 2012. The platform is still widely used inside LinkedIn.

"At LinkedIn, we use Kafka for everything from metrics to logs to click data to database replication," said Nacho Solis, senior engineering manager at LinkedIn. In most cases, Kafka is being wrapped by another infrastructure service.

"When building a new service, engineers will reach out to the Kafka team, and we will provide feedback on their design," Solis said. If engineers need to exchange data between various components in their online and nearline systems, Kafka ends up being the solution. This is true whether all the components are part of the same service or different services.

LinkedIn uses Kafka to provide a durable communication queue. It could be thought of as a publish/subscribe system or an inter-process communication service. It solves queuing problems between producers and consumers, acting as a reliable buffer. The LinkedIn Kafka ecosystem also provides tools to enable message schema evolution, which allows the different components of a system to evolve independently.
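The idea behind schema evolution can be sketched in a few lines. This is not LinkedIn's tooling, which the article does not describe in detail. It is a minimal example that assumes JSON messages and a hypothetical `region` field added in a later version: the reader supplies a default so it can handle messages from both old and new producers.

```python
import json

# Hypothetical defaults for fields added after the first version of the message.
DEFAULTS = {"region": "unknown"}


def decode(raw):
    """Decode a JSON message, filling in fields that older producers did not send."""
    message = json.loads(raw)
    return {**DEFAULTS, **message}  # values in the message override the defaults


old_message = json.dumps({"user": "u1", "action": "click"})
new_message = json.dumps({"user": "u2", "action": "view", "region": "eu"})
decoded = [decode(old_message), decode(new_message)]
```

With a reader like this, producers can start sending the new field before every consumer has been upgraded, and the reverse.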

Places where Kafka is not a good fit

Random updates. Kafka is well suited for handling events that are processed as part of the data pipeline, but it doesn't offer very good capabilities for updates or random access to individual records, Nousiainen said.

For these use cases, however, Kafka can be paired with a transactional or NoSQL database. Although Kafka can store data logs, long-term data storage isn't a common use case for it; users typically deploy Kafka for data processing within a larger cloud environment that supplies scalable storage.
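One way to picture that pairing: a consumer replays the event stream and folds it into a keyed store, which then serves the random reads and updates. In the sketch below a plain dictionary stands in for the database, and the event shape (`op`, `key`, `value`) is a hypothetical format chosen for the example.

```python
def materialize(events):
    """Fold an ordered stream of change events into a key-value view."""
    view = {}
    for event in events:
        if event["op"] == "delete":
            view.pop(event["key"], None)  # ignore deletes for unknown keys
        else:  # treat anything else as an upsert
            view[event["key"]] = event["value"]
    return view


events = [
    {"op": "upsert", "key": "user-1", "value": {"plan": "free"}},
    {"op": "upsert", "key": "user-2", "value": {"plan": "pro"}},
    {"op": "upsert", "key": "user-1", "value": {"plan": "pro"}},
    {"op": "delete", "key": "user-2"},
]
view = materialize(events)
```

Looking up `view["user-1"]` is a direct keyed read, while answering the same question from the log alone would mean scanning the events.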

When response is required. Kafka is not a good solution for blocking, real-time or request-response situations, Solis said. Kafka doesn't offer the ability to represent the relationship between messages or provide a return channel for acknowledgments. Consequently, it can't guarantee delivery to a specific set of recipients. It is each client's responsibility, whether producer or consumer, to ensure it gets what it needs from the Kafka service.

Running at the edge. Kafka is not built to be run at the edge, said Chandrasekhar. This includes edge processing in IoT. Additional use cases where Kafka is not an ideal choice are ETL-type data movement and batch processing of warehouse data.

In theory, Kafka Connect should be able to help in these scenarios. But Chandrasekhar said it can be cumbersome to build connectors for the various edge protocols, implement lightweight agents within a sensor or do edge processing of sensor data within devices.

Replacing middleware and databases. Kafka should not completely replace traditional middleware or databases, said Kohl. Although Kafka does allow users to query like a database, it lacks many of the optimizations for storing historical information that databases provide. In terms of traditional middleware, Kafka is complementary. It provides the data stream, but if you want to transform or analyze data, you typically need to write code.

Processing data in order. Kafka might not make sense if you need to process data in order or if you intend to use it as a database for queries like joining or sorting, Chitturi said. It's also not ideal for situations where you need real-time audio or video content or large messages such as pictures.
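Some context on the ordering caveat: Kafka keeps records in order within a single partition, not across all partitions of a topic, and records that share a key are normally routed to the same partition. The toy model below illustrates this with plain lists. `partition_for` and `route` are hypothetical helpers, and CRC32 is only a stand-in for whatever hash a real partitioner uses.

```python
import zlib


def partition_for(key, num_partitions):
    """Pick a partition from the key (CRC32 is a stand-in hash for illustration)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(records, num_partitions):
    """Append (key, value) records to per-partition lists in arrival order."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions


records = [("order-a", 1), ("order-b", 1), ("order-a", 2), ("order-b", 2), ("order-a", 3)]
partitions = route(records, num_partitions=3)

# Every record for a given key lands in one partition, in the order it was sent.
a_values = [v for part in partitions for k, v in part if k == "order-a"]
b_values = [v for part in partitions for k, v in part if k == "order-b"]
```

Per-key order survives, but nothing here says how records in different partitions interleave, which is why a workload that needs one strict global order is a poor fit.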