Establish big data integration techniques and best practices

A big data integration strategy departs from traditional techniques, embraces several data processes working together and accounts for data's volume, variety and velocity.

To create business value, improve analytics insights and machine learning outcomes, and maximize AI capabilities, organizations need an effective big data integration strategy, which may require a shift away from traditional structured data integration techniques. Big data management must handle not only the many and varied types of data collected and stored but also the larger volumes of data that need to be integrated at a faster pace.

Unstructured data provides new opportunities for deriving insights from text, internal communications and various operational systems. But traditional database management and integration techniques fall short when teams attempt to deal with large amounts of unstructured data, said Rosaria Silipo, principal data scientist at data science platform maker Knime. Big data tools and practices can manage the lifecycle of this data more effectively when guided by a strategy for integrating big data.

Why a big data integration strategy is necessary

Digital transformation initiatives are often driven by new ways to combine collected and stored data. These combinations are generically referred to by the all-encompassing term big data.

"Data has become the competitive differentiator, and it's become even more apparent in the time of COVID," noted David Mariani, founder and CTO of data virtualization platform provider AtScale. "Investing in a data integration strategy that drives agility and self-service data consumption at scale has become a major revenue driver for enterprises."

Traditional data integration vs. big data integration

The three V's -- data volume, variety and velocity -- factor heavily into a big data integration strategy.

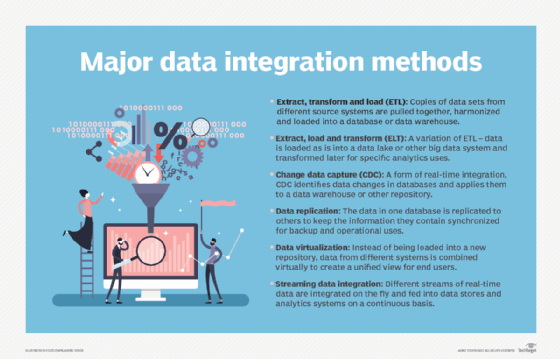

Large volumes of data often make a batch approach to data integration a nonstarter, Mariani said. Traditional extract, transform and load (ETL) techniques struggle to keep pace with new types of data, and traditional integration practices have trouble keeping data current. Data freshness is often the critical factor in deriving trusted, actionable insights, Mariani explained, so organizations often need to invest in real-time data collection and processing infrastructure to make more timely decisions.
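To make the batch-versus-real-time distinction concrete, here is a minimal Python sketch, assuming a hypothetical stream of device readings. The batch function recomputes averages by rescanning the full stored data set, while the incremental class updates per-device state as each event arrives, so a current result is available immediately. It illustrates the processing model only and is not a substitute for a real streaming platform.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Reading:
    device_id: str  # hypothetical device identifier
    value: float


def batch_average(readings: list[Reading]) -> dict[str, float]:
    """Batch style: rescan the full stored data set to recompute every average."""
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for r in readings:
        totals[r.device_id] += r.value
        counts[r.device_id] += 1
    return {d: totals[d] / counts[d] for d in totals}


class IncrementalAverage:
    """Streaming style: keep running state and update it as each event arrives."""

    def __init__(self) -> None:
        self.totals: dict[str, float] = defaultdict(float)
        self.counts: dict[str, int] = defaultdict(int)

    def update(self, r: Reading) -> float:
        """Fold one event into the state and return that device's current average."""
        self.totals[r.device_id] += r.value
        self.counts[r.device_id] += 1
        return self.totals[r.device_id] / self.counts[r.device_id]

    def snapshot(self) -> dict[str, float]:
        """Current averages for every device seen so far."""
        return {d: self.totals[d] / self.counts[d] for d in self.totals}


events = [Reading("pump-1", 10.0), Reading("pump-2", 5.0), Reading("pump-1", 30.0)]
live = IncrementalAverage()
for event in events:
    # A fresh result is available after every event, with no rescan of history.
    print(event.device_id, live.update(event))
```

Both approaches arrive at the same averages; the difference is that the incremental version never has to reprocess stored data to stay current, which is what makes it suitable for high-volume, time-sensitive feeds.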

Data variety often goes beyond rows and columns, with schemas frequently embedded in semistructured formats such as JSON and XML. Data integration tools need to handle these formats natively, and data platforms need to store these complex data types as is for flexible access. Data velocity, the speed at which data is generated and must be processed, presents another challenge to traditional data integration techniques.
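As an illustration of handling semistructured formats while keeping the original documents intact, the following minimal sketch uses only Python's standard `json` and `xml.etree.ElementTree` modules to derive a flat, column-like view from nested JSON and XML. The `flatten` and `xml_to_dict` helpers and the sample customer documents are hypothetical, and the XML conversion deliberately ignores attributes and repeated sibling tags to stay short.

```python
import json
import xml.etree.ElementTree as ET
from typing import Any


def flatten(obj: Any, prefix: str = "") -> dict[str, Any]:
    """Flatten nested dicts/lists into dotted column names such as 'address.city'."""
    out: dict[str, Any] = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            out.update(flatten(value, f"{prefix}{key}."))
    elif isinstance(obj, list):
        for i, value in enumerate(obj):
            out.update(flatten(value, f"{prefix}{i}."))
    else:
        out[prefix[:-1]] = obj  # drop the trailing dot
    return out


def xml_to_dict(elem: ET.Element) -> Any:
    """Convert an element to nested dicts; leaves become their text.
    Simplification: attributes are ignored and repeated tags overwrite each other."""
    children = list(elem)
    if not children:
        return elem.text
    return {child.tag: xml_to_dict(child) for child in children}


# The raw documents are kept as is; the flat views are derived on read.
json_doc = '{"id": 7, "address": {"city": "Austin", "zip": "78701"}, "tags": ["vip", "new"]}'
xml_doc = "<customer><id>7</id><address><city>Austin</city></address></customer>"

json_row = flatten(json.loads(json_doc))
xml_row = flatten(xml_to_dict(ET.fromstring(xml_doc)))
print(json_row)
print(xml_row)
```

Note that XML text comes back as strings (`"7"`), while JSON preserves the number `7`; reconciling such type differences is part of the integration work the paragraph describes.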

Fundamental differences exist between traditional data processes and those required for new types of architectures built on composable services, said David Romeo, vice president of technology and architecture at app development consultancy Allata. Traditional data integration often focuses on application states and the transactional nature of applications. In contrast, big data integration can take advantage of techniques like data streaming, event-driven architecture and heavy intermediate processing.

"Most organizations don't have a solid data strategy or one that takes into account the recent app and microservice explosion," Romeo explained. As a result, teams fill the gaps with traditional point-to-point integration, which adds to technical debt. This approach, along with the tools and engineering talent it requires, must frequently be retrofitted to handle semistructured and unstructured data at scale.

Big data integration challenges

Data variety and velocity often pose the greatest big data integration challenges for data managers, said Faisal Alam, emerging technology leader at consultancy EY Americas. Issues surrounding data volume have largely been solved by elastically scalable cloud storage and in-memory computing capabilities, which breathe new life into traditional integration patterns.

Data variety. Data variety issues revolve around managing modern data architectures that combine multiple best-in-class data repositories, each suited to a different type of data.

For example, an organization might store IoT data in a time series database, transactional data in a relational database and relationship data in a graph database. Traditional integration, which relies on ETL, database-to-database connections and enterprise service buses, is geared to relational databases and often assumes a fixed schema in the underlying database. Changing that schema creates a ripple effect that can be costly and time-consuming, Alam cautioned.

Big data integration requires managers to build in flexibility and agility across different schemas. For example, the U.S. government's Real ID Act of 2005 required all states to capture a minimum mandatory set of data on each person seeking a driver's license or identity card. Using traditional integration processes, states took more than a decade to migrate their systems to a consistent schema. Implementing big data platforms and integration techniques from the start would have simplified and shortened the process, Alam noted: each state would only have needed to maintain a schema definition in a schema registry external to the data.
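The schema registry idea can be sketched in a few lines. The in-memory `SchemaRegistry` class below is hypothetical, as are the subject name and field names; production registries such as Confluent Schema Registry add persistence, serialization formats and compatibility rules. The sketch shows records being checked against a schema version held outside the data, so adding a mandatory field creates a new version instead of forcing every stored record to change at once.

```python
from dataclasses import dataclass, field


@dataclass
class SchemaRegistry:
    """Hypothetical in-memory registry mapping a subject to its schema versions."""
    schemas: dict[str, list[dict[str, type]]] = field(default_factory=dict)

    def register(self, subject: str, schema: dict[str, type]) -> int:
        """Store a new schema version and return its number (starting at 1)."""
        versions = self.schemas.setdefault(subject, [])
        versions.append(schema)
        return len(versions)

    def validate(self, record: dict, subject: str, version: int) -> list[str]:
        """Return a list of problems; an empty list means the record conforms."""
        schema = self.schemas[subject][version - 1]
        problems = []
        for name, expected_type in schema.items():
            if name not in record:
                problems.append(f"missing field: {name}")
            elif not isinstance(record[name], expected_type):
                problems.append(f"wrong type for field: {name}")
        return problems


registry = SchemaRegistry()
subject = "state-a/license"  # hypothetical subject name
base = {"full_name": str, "date_of_birth": str, "license_number": str}  # hypothetical fields
v1 = registry.register(subject, base)
v2 = registry.register(subject, {**base, "document_type": str})

record = {"full_name": "A. Driver", "date_of_birth": "1990-01-01", "license_number": "X123"}
print(registry.validate(record, subject, v1))
print(registry.validate(record, subject, v2))
```

The same record conforms to version 1 and is flagged against version 2, so each system can migrate on its own schedule while the registry documents exactly what changed.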

Data velocity. Issues of data velocity arise when moving from a batch integration process to the integration of data streams.

In a hospital setting where time is of the essence, for example, delays in processing data from IoT-enabled devices with traditional batch ETL techniques could have catastrophic consequences. In this situation, data managers should consider integration patterns that use a streaming platform such as Apache Kafka to send real-time alerts to nurses when intervention is required. From there, a workflow orchestration tool such as Apache Airflow could use directed acyclic graphs (DAGs) to coordinate data movement across the raw, integrated and curated zones of an on-premises or cloud-based data lake. The hospital could also create a business semantic layer using data virtualization techniques to help administrators analyze and improve the processes used to treat patients.
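DAG-based coordination of lake zones can be illustrated without Airflow itself. This sketch uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to order three hypothetical tasks that move sample heart-rate readings from the raw zone to the integrated and curated zones of an in-memory stand-in for a data lake. It shows only the dependency-ordering idea; it does not use Airflow's API, and the zone contents and cleansing rules are assumptions.

```python
from graphlib import TopologicalSorter

# Hypothetical in-memory stand-in for a data lake with three zones.
lake: dict[str, list[dict]] = {"raw": [], "integrated": [], "curated": []}


def ingest_raw() -> None:
    # Land device readings unchanged, including a malformed one.
    lake["raw"] = [
        {"patient": "p1", "heart_rate": "72"},
        {"patient": "p2", "heart_rate": "n/a"},
        {"patient": "p1", "heart_rate": "130"},
    ]


def integrate() -> None:
    # Standardize types and drop records that cannot be parsed.
    lake["integrated"] = [
        {"patient": r["patient"], "heart_rate": int(r["heart_rate"])}
        for r in lake["raw"]
        if r["heart_rate"].isdigit()
    ]


def curate() -> None:
    # Publish an analysis-ready view: peak heart rate per patient.
    peaks: dict[str, int] = {}
    for r in lake["integrated"]:
        peaks[r["patient"]] = max(peaks.get(r["patient"], 0), r["heart_rate"])
    lake["curated"] = [{"patient": p, "max_heart_rate": v} for p, v in sorted(peaks.items())]


tasks = {"ingest_raw": ingest_raw, "integrate": integrate, "curate": curate}
# Each key lists the tasks it depends on; together they form a DAG.
dependencies = {"integrate": {"ingest_raw"}, "curate": {"integrate"}}

order = list(TopologicalSorter(dependencies).static_order())
for name in order:
    tasks[name]()  # run each task only after its upstream tasks have finished
print(order, lake["curated"])
```

Declaring dependencies rather than hard-coding a call sequence is what lets an orchestrator add, reorder or parallelize steps safely as the pipeline grows.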

But techniques like schema registries, DAG-based tools and data virtualization are likely to fail if implemented in isolated parts of an organization, Alam warned. Instead, they require a companywide shift in data processing practices and maturity.

Best practices for big data integration

The following practices can help smooth the process of integrating sets of big data:

Plan a coherent strategy. A big data integration strategy needs to account for several data functions working in harmony, including data distributed across multiple locations; data transport, preparation and storage; security; performance; disaster recovery; and other operating concerns, according to Rick Skriletz, global managing principal at digital transformation consultancy RCG Global Services. Data management teams also need to address the topology of data movement, spanning sources, storage, presentation and data processing components. "Without a big data integration strategy," Skriletz advised, "each aspect is typically addressed separately without a clear picture of how it needs to all operate quickly, correctly and smoothly."

Think of data as a product. When integration is considered an IT function, data is often viewed as a byproduct of applications and infrastructure. However, a cultural shift toward treating data as the product should form the basis for a big data integration process, said Jeffrey Pollock, vice president of products at Oracle. Companies will also need to adopt technologies and practices that enable data engineers and analysts to yield productive results from big data integration. The adoption of self-service and no-code tools can help business teams discover new insights and opportunities with less reliance on experts who may be further removed from the data and its purpose.

Clarify the metadata. The key to understanding data integrated into a big data lake is a contextual data model that describes the actual data elements flowing into the lake, said Bas Kamphuis, chief growth officer at data management platform maker Magnitude Software Inc. A metadata model can help business users connect sources of data to their own projects and clarify concepts that different domains might interpret differently.
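A contextual metadata model can start as little more than a catalog that maps physical fields to shared business concepts and records how each domain interprets those concepts. The Python sketch below assumes a hypothetical catalog; the sources, column names and definitions are illustrative, not drawn from any real product.

```python
from dataclasses import dataclass


@dataclass
class DataElement:
    """One catalog entry describing a data element that flows into the lake."""
    source: str                  # system the element comes from
    column: str                  # physical field name in that source
    concept: str                 # shared business concept the field maps to
    definitions: dict[str, str]  # business domain -> that domain's meaning


# Hypothetical catalog entries; names and definitions are illustrative only.
CATALOG = [
    DataElement("crm", "cust_id", "customer",
                {"sales": "account with a signed contract",
                 "support": "account with an active support plan"}),
    DataElement("billing", "acct_no", "customer",
                {"finance": "account that has been invoiced at least once"}),
    DataElement("web", "visitor_id", "prospect",
                {"marketing": "identified site visitor without a contract"}),
]


def sources_for(concept: str) -> list[str]:
    """List the physical fields that feed a business concept."""
    return [f"{e.source}.{e.column}" for e in CATALOG if e.concept == concept]


def definitions_of(concept: str) -> dict[str, str]:
    """Collect every domain's interpretation of a concept to expose differences."""
    merged: dict[str, str] = {}
    for e in CATALOG:
        if e.concept == concept:
            merged.update(e.definitions)
    return merged


print(sources_for("customer"))
print(definitions_of("customer"))
```

A business user looking for "customer" data sees both contributing fields and the three competing definitions side by side, which is exactly the ambiguity a metadata model is meant to surface.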

Plan for an integration lifecycle. DevOps harmonizes application development with deployment, and DataOps combines data capture and management with the use of data. Consider big data integration a part of the data app development lifecycle, suggested Zeev Avidan, chief product officer at data integration tool provider OpenLegacy.
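One way to treat integration as part of a development lifecycle, sketched here under the assumption that checks run automatically before data is promoted, is to express data quality rules as small testable functions, much as DevOps pipelines gate deployments on unit tests. The check names and the `promote` gate below are hypothetical.

```python
from typing import Callable

Rows = list[dict]
Check = Callable[[Rows], bool]


def not_empty(rows: Rows) -> bool:
    return len(rows) > 0


def ids_present(rows: Rows) -> bool:
    return all(r.get("id") is not None for r in rows)


def ids_unique(rows: Rows) -> bool:
    ids = [r.get("id") for r in rows]
    return len(ids) == len(set(ids))


# Hypothetical rule set; in practice it would be versioned alongside pipeline code.
CHECKS: dict[str, Check] = {
    "not_empty": not_empty,
    "ids_present": ids_present,
    "ids_unique": ids_unique,
}


def failed_checks(rows: Rows) -> list[str]:
    """Names of every check the batch fails."""
    return [name for name, check in CHECKS.items() if not check(rows)]


def promote(rows: Rows, target: Rows) -> bool:
    """Promote a batch to the target only if every check passes, like a CI gate."""
    if failed_checks(rows):
        return False
    target.extend(rows)
    return True


published: Rows = []
print(promote([{"id": 1}, {"id": 2}], published))  # passes all checks
print(promote([{"id": 3}, {"id": 3}], published))  # duplicate id, rejected
print(failed_checks([]))
```

Because the rules are ordinary functions, they can be reviewed, versioned and tested with the same tooling as application code.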

Migrate first, transform later. Data management teams have typically transformed data before loading it into a data warehouse for analytics. For big data integration, it makes more sense to load data into a warehouse or lake first and transform it later, an approach commonly called extract, load and transform (ELT). "It's not practical to transform terabytes or more of data for every new report or warehouse," reasoned David Nilsson, senior vice president at enterprise software provider Adapt2 Solutions. A strategy that keeps big data intact is critical.
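The load-first, transform-later pattern can be shown with Python's built-in `sqlite3` module standing in for a warehouse. In this minimal sketch, which assumes a hypothetical `raw_orders` table with amounts in cents, source rows land unchanged, including a malformed value and inconsistent region casing, and cleansing happens later in a view defined for one specific report.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Load: land source rows exactly as received, with no cleansing.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount_cents TEXT, region TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [("1", "1999", "east"), ("2", "500", "EAST"), ("3", "bad", "west"), ("4", "4001", "west")],
)

# Transform later: a report-specific view normalizes and filters on read,
# leaving raw_orders intact for other uses.
conn.execute(
    """
    CREATE VIEW sales_by_region AS
    SELECT LOWER(region) AS region_name,
           SUM(CAST(amount_cents AS INTEGER)) AS total_cents
    FROM raw_orders
    WHERE amount_cents <> '' AND amount_cents NOT GLOB '*[^0-9]*'
    GROUP BY LOWER(region)
    """
)

rows = conn.execute(
    "SELECT region_name, total_cents FROM sales_by_region ORDER BY region_name"
).fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
print(rows, raw_count)
```

Other reports could define their own views over the same untouched raw table, so no single transformation has to be rerun across the full data set whenever a new report is needed.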