Dissecting data measurement: Key metrics for assessing data quality

In a book excerpt, data quality architect Laura Sebastian-Coleman explains data assessment terminology and details a framework for measuring quality.

This is part one of an excerpt from Chapter 4: Data Quality and Measurement, from the book Measuring Data Quality for Ongoing Improvement: A Data Quality Assessment Framework by Laura Sebastian-Coleman. Sebastian-Coleman is a data quality architect at Optum Insight, which provides analytics, technology and consulting services to health care organizations. In this section of the chapter, she explains data quality assessment terminology in detail and breaks down data measurement concepts and techniques that can aid in assessing quality levels. In the second part of the excerpt, she discusses data profiling and data quality issues management.

Data quality assessment

To assess is to "evaluate or estimate the nature, ability or quality" of something, or to "calculate or estimate its value or price." Assess comes from the Latin verb assidere, meaning "to sit by." As a synonym for measurement, assessment implies the need to compare one thing to another in order to understand it. However, unlike measurement in the strict sense of "ascertain[ing] the size, amount, or degree of something by using an instrument," the concept of assessment also implies evaluating the object of the assessment: drawing a conclusion about it.

Arkady Maydanchik defines the purpose of data quality assessment: to identify data errors and erroneous data elements and to measure the impact of those errors on various data-driven business processes. Both components, identifying errors and understanding their implications, are critical. Data quality assessment can be accomplished in different ways, from simple qualitative assessment to detailed quantitative measurement. Assessments can be based on general knowledge, guiding principles or specific standards. Data can be assessed at the macro level of general content or at the micro level of specific fields or values. The purpose of data quality assessment is to understand the condition of data in relation to expectations, particular purposes or both, and to draw a conclusion about whether it meets those expectations or satisfies the requirements of those purposes. This process also always implies the need to understand how effectively data represents the objects, events and concepts it is designed to represent.

While the term data quality assessment is often associated with the process of gaining an initial understanding of data, within the context of the DQAF [data quality assessment framework] the term refers to a set of processes directed at evaluating the condition and value of data within an organization. These processes include initial, one-time assessment of data and the data environment (metadata, reference data, system and business process documentation); automated process controls; in-line data quality measurement; and periodic reassessment of data and the data environment. Process controls and in-line data quality measurement are defined briefly later in this chapter.

Copyright information

This excerpt is from the book Measuring Data Quality for Ongoing Improvement: A Data Quality Assessment Framework, by Laura Sebastian-Coleman. Published by Morgan Kaufmann Publishers, Burlington, Mass. ISBN 9780123970336. Copyright 2013, Elsevier BV. To download the full book for 25% off the list price until the end of 2014, visit the Elsevier store and use the discount code PBTY14.

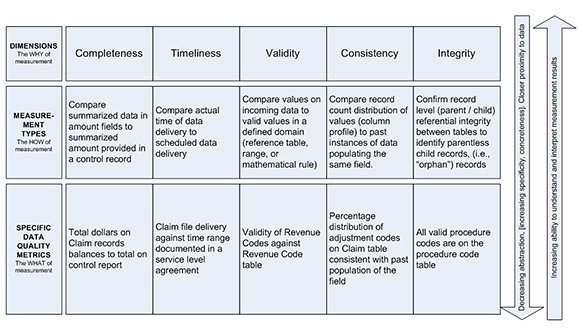

Data quality dimensions, DQAF measurement types, specific data quality metrics

As noted in the discussion of data quality, the word dimension is used to identify aspects of data that can be measured and through which data's quality can be described and quantified. As high-level categories, data quality dimensions are relatively abstract. The dimensions explored in the DQAF include completeness, validity, timeliness, consistency and integrity. Data quality dimensions are important because they enable people to understand why data is being measured.

Specific data quality metrics are somewhat self-explanatory. They define the particular data that is being measured and what is being measured about it. For example, in health care data, one might have a specific metric that measures the percentage of invalid procedure codes in the primary procedure code field in a set of medical claim data. Specific measurements describe the condition of particular data at a particular time. My use of the term metric does not include any threshold against which a measurement result is judged; a metric describes only what is being measured.

The DQAF introduces an additional concept to enable data quality measurement, that of the measurement type (see Figure 4.1). A measurement type is a category within a dimension of data quality that allows for a repeatable pattern of measurement to be executed against any data that fits the criteria required by the type, regardless of specific data content. (I will sometimes also use the noun measure to refer to measurement types.) Measurement types bridge the space between dimensions and specific measurements. For example, if it is valuable to measure the percentage of invalid procedure codes in a set of medical claims, it is probably also valuable to measure the percentage of invalid diagnosis codes or invalid adjustment codes. And these can be measured in essentially the same way. Each value set is present on a column or columns in a table. The valid values for each are contained in a second table. The sets can be compared to identify valid and invalid values. Record counts can be taken and percentages calculated to establish levels of invalid values.

FIGURE 4.1 Dimensions, measurement types and specific metrics. The figure illustrates the relationship between data quality dimensions, DQAF measurement types and specific data quality metrics. Dimensions are central to most discussions about data quality. They describe aspects of data quality that can be measured and provide the why of measurement. Measurement types are central to the DQAF. They describe generic ways to measure the dimensions -- the how of measurement. They bridge the distance between dimensions and specific metrics that describe what particular data will be measured -- the what of measurement.
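The three levels in Figure 4.1 can be pictured as a small data model. The following Python sketch is an illustration only, not a structure defined by the DQAF: the class names and the table and column names (`medical_claim`, `primary_procedure_code` and so on) are hypothetical. It shows one dimension (validity), one measurement type within it, and several specific metrics that share that type. Following the usage described above, a metric carries no threshold.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    """An abstract aspect of data quality: the *why* of measurement."""
    name: str


@dataclass(frozen=True)
class MeasurementType:
    """A generic, repeatable way to measure a dimension: the *how*."""
    name: str
    dimension: Dimension


@dataclass(frozen=True)
class SpecificMetric:
    """The particular data being measured: the *what*.

    Deliberately holds no threshold; it describes only what is measured.
    """
    measurement_type: MeasurementType
    table: str   # hypothetical table name
    column: str  # hypothetical column name


validity = Dimension("validity")
invalid_pct = MeasurementType("percentage of invalid values in a column", validity)

# Several specific metrics can share a single measurement type.
metrics = [
    SpecificMetric(invalid_pct, "medical_claim", "primary_procedure_code"),
    SpecificMetric(invalid_pct, "medical_claim", "diagnosis_code"),
    SpecificMetric(invalid_pct, "medical_claim", "adjustment_code"),
]

for m in metrics:
    mt = m.measurement_type
    print(f"{mt.dimension.name} / {mt.name} / {m.table}.{m.column}")
```

Adding a new metric of the same kind means creating one more `SpecificMetric`; the dimension and measurement type are reused unchanged.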

A measurement type is a generic form into which specific metrics fit. In the example of diagnosis and adjustment codes, the type is a measurement of validity that presents the level (expressed as a percentage) of invalid codes in a specific column in a database table. Any column that has a defined domain or range of values can be measured using essentially the same approach, and the results of similar measures can be expressed in the same form. Measurement types therefore enable people to understand any instance of a specific metric taken using the same measurement type. Once a person understands how validity is being measured (as a percentage of all records whose values for a specific column do not exist in a defined domain), he or she can understand any given measure of validity, just as once one understands the concept of a meter, one can understand the relation between an object that is one meter long and an object that is two meters long.
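The validity measurement type described above (the percentage of records whose value in a given column is not in a defined domain) can be sketched as a single reusable function. This is a minimal Python sketch under stated assumptions: records are plain dictionaries, the domain is an in-memory set rather than a reference table, a missing column is counted as invalid, and the function name `percent_invalid` and all codes are hypothetical.

```python
from collections.abc import Iterable, Mapping


def percent_invalid(
    records: Iterable[Mapping[str, object]],
    column: str,
    valid_values: set,
) -> float:
    """Return the percentage of records whose `column` value is not in `valid_values`.

    Nothing here is specific to procedure or diagnosis codes, so the same
    function can be reused for any column that has a defined domain.
    """
    total = 0
    invalid = 0
    for record in records:
        total += 1
        # A missing column yields None, which counts as invalid unless the
        # domain explicitly includes None (an assumption of this sketch).
        if record.get(column) not in valid_values:
            invalid += 1
    if total == 0:
        raise ValueError("cannot measure an empty data set")
    return 100.0 * invalid / total


# Hypothetical claim records and reference domains, for illustration only.
claims = [
    {"procedure_code": "P01", "diagnosis_code": "D10"},
    {"procedure_code": "P02", "diagnosis_code": "D99"},
    {"procedure_code": "XXX", "diagnosis_code": "D10"},
    {"procedure_code": "P01", "diagnosis_code": "D11"},
]
valid_procedures = {"P01", "P02", "P03"}
valid_diagnoses = {"D10", "D11"}

# Two specific metrics, one measurement type.
print(percent_invalid(claims, "procedure_code", valid_procedures))  # 25.0
print(percent_invalid(claims, "diagnosis_code", valid_diagnoses))   # 25.0
```

Because both results come from the same procedure and are expressed in the same unit, a reader who understands one of them can interpret the other.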

Measurement types also allow for comparison across data sets. For example, if you measure the level of invalid procedure codes in two different sets of similar claim data, you have the basis for a comparison between them. If you discover that one set has a significantly higher incidence of invalid codes when you expect the two to be similar, you have a measure of the relative quality of the two sets.
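The text does not say how "significantly higher" should be judged. One common option, swapped in here as an assumption rather than presented as the DQAF's method, is a pooled two-proportion z-test on the invalid-code rates of the two sets. In this Python sketch the function names, the code domain and both data sets are hypothetical, and the 1.96 cutoff (a two-sided test at the 5% level) is a conventional choice, not one taken from the source.

```python
import math


def count_invalid(codes: list[str], valid: set[str]) -> tuple[int, int]:
    """Return (invalid_count, total_count) for one data set."""
    return sum(1 for code in codes if code not in valid), len(codes)


def two_proportion_z(x1: int, n1: int, x2: int, n2: int) -> float:
    """Pooled two-proportion z statistic for (x1/n1) - (x2/n2).

    Both data sets must be non-empty (n1 > 0 and n2 > 0).
    """
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0  # both sets are all-valid or all-invalid: no difference
    return (x1 / n1 - x2 / n2) / se


# Hypothetical data: two sets of similar claims sharing one code domain.
valid_codes = {"P01", "P02", "P03"}
set_a = ["P01"] * 980 + ["BAD"] * 20   # 2% invalid
set_b = ["P02"] * 940 + ["BAD"] * 60   # 6% invalid

xa, na = count_invalid(set_a, valid_codes)
xb, nb = count_invalid(set_b, valid_codes)
z = two_proportion_z(xb, nb, xa, na)

print(f"set A: {100 * xa / na:.1f}% invalid, set B: {100 * xb / nb:.1f}% invalid")
# 1.96 is the usual cutoff for a two-sided test at the 5% level.
if abs(z) > 1.96:
    print(f"difference is statistically significant (z = {z:.2f})")
```

The comparison is only meaningful because both rates were produced by the same measurement type against the same domain; a test like this then helps separate a real quality gap from ordinary sampling variation.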
