Data quality for big data: Why it's a must and how to improve it

As data volumes increase exponentially, methods to improve and ensure big data quality are critical in making accurate, effective and trusted business decisions.

Data quality can be a major challenge in any data management and analytics project. Issues can creep in from sources like typos, different naming conventions and data integration problems. But data quality takes on even greater importance in big data applications, which involve a much larger volume, variety and velocity of data.

And because quality issues with big data can create various contextual concerns related to different applications, data types, platforms and use cases, Faisal Alam, emerging technology lead at consultancy EY Americas, suggested adding a fourth V for veracity in big data management initiatives.

Why data quality for big data is important

Big data quality issues can lead not only to inaccurate algorithms, but also to serious accidents and injuries as a result of real-world system outcomes. At the very least, business users will be less inclined to trust data sets and the applications built on them. In addition, companies may be subject to government regulatory scrutiny if data quality and accuracy play a role in frontline business decisions.

Data can be a strategic asset only if there are enough processes and support mechanisms in place to govern and manage data quality, said V. "Bala" Balasubramanian, senior vice president of life sciences at digital transformation services provider Orion Innovation.

Data that's of poor quality can increase data management costs as a result of frequent remediation, additional resource needs and compliance issues. It can also lead to impaired decision-making and business forecasting.

How data quality differs with big data

Data quality has been an issue for as long as people have been gathering data. "But big data changes everything," said Manu Bansal, co-founder and CEO of data stability platform maker Lightup Data.

Bansal works with 100-person teams that generate and process a few terabytes of customer data each day. Managing this amount of information totally changes the approach to ensuring data quality, which must take into account these key factors:

- Scaling issues. It's no longer practical to use an import-and-inspect design that worked for conventional data files or spreadsheets. Data management teams need to develop big data quality practices that span traditional data warehouses and modern data lakes, as well as streams of real-time data.

- Complex and dynamic shapes of data. Big data can consist of multiple dimensions across event types, user segments, application versions and device types. "Mapping out the data quality problem meaningfully requires running checks on individual slices of data, which can easily run into hundreds or thousands," Bansal said. The shape of data can also change when new events and attributes are added and old ones are deprecated.

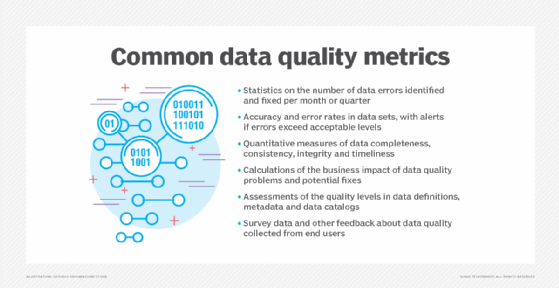

- High volume of data. In big data systems, it's impossible to manually inspect new data. Ensuring data quality for big data requires developing quality metrics that can be automatically tracked against changes in big data applications and use cases.
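The slice-level checks and automatically tracked metrics described above can be sketched in a few lines. This is only an illustration, not a method attributed to Bansal or Lightup Data: the event fields (`event_type`, `device`, `amount`), the function names and the 25% threshold are all assumptions made for the example.

```python
from collections import defaultdict

def null_rate_by_slice(events, slice_keys, field):
    """Return {slice: fraction of records in that slice where `field` is missing}."""
    totals = defaultdict(int)
    missing = defaultdict(int)
    for event in events:
        # A slice is one combination of dimension values, e.g. (event type, device).
        key = tuple(event.get(k) for k in slice_keys)
        totals[key] += 1
        if event.get(field) is None:
            missing[key] += 1
    return {key: missing[key] / totals[key] for key in totals}

def failing_slices(rates, threshold):
    """List the slices whose missing-value rate exceeds the threshold."""
    return [key for key, rate in rates.items() if rate > threshold]

# Hypothetical sample events; a real system would read these from a stream or table.
events = [
    {"event_type": "purchase", "device": "ios", "amount": 12.5},
    {"event_type": "purchase", "device": "ios", "amount": None},
    {"event_type": "purchase", "device": "android", "amount": 8.0},
    {"event_type": "purchase", "device": "android", "amount": 3.0},
]

rates = null_rate_by_slice(events, ("event_type", "device"), "amount")
alerts = failing_slices(rates, threshold=0.25)  # threshold is an assumed value
```

Here the overall missing rate is 25%, which might pass a global check, while the per-slice view shows that every missing value comes from one device type.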

Big data quality challenges and issues

Merging disparate data taxonomies. Merged companies or individual business units within a company may have created and fine-tuned their own data taxonomies and ontologies that reflect how they each work. Private equity investments, for example, can accelerate the pace of mergers and acquisitions, often combining multiple companies into one large organization, noted Chris Comstock, chief product officer at data governance platform provider Claravine. Each of the acquired companies typically has its own CRM, marketing automation, marketing content management, customer database and lead qualification methodology data. Combining these systems into a single data structure to orchestrate unified campaigns can create immense challenges for big data quality.

Maintaining consistency. Cleansing, validating and normalizing data can also introduce big data quality challenges. One telephone company, for example, built models that correlated network fault data, outage reports and customer complaints to determine whether issues could be tied to a geographic location. But some addresses were recorded inconsistently, appearing as "123 First Street" in one system and "123 1ST STREET WEST" in another.
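As a rough illustration of the address problem, the sketch below normalizes case and a few common tokens, then builds a looser matching key that ignores directionals. The token map and function names are assumptions for this example; production address matching usually relies on a postal reference data set rather than a hand-written map.

```python
import re

# Hypothetical, deliberately tiny token map; real systems need far more entries.
TOKEN_MAP = {
    "FIRST": "1ST", "SECOND": "2ND", "THIRD": "3RD",
    "ST": "STREET", "AVE": "AVENUE",
    "N": "NORTH", "S": "SOUTH", "E": "EAST", "W": "WEST",
}
DIRECTIONALS = {"NORTH", "SOUTH", "EAST", "WEST"}

def normalize_address(raw):
    """Uppercase, strip punctuation and map known variants to one spelling."""
    cleaned = re.sub(r"[^\w\s]", " ", raw.upper())
    return " ".join(TOKEN_MAP.get(token, token) for token in cleaned.split())

def match_key(raw):
    """Looser key that drops directionals, used only to flag candidate matches."""
    tokens = normalize_address(raw).split()
    return " ".join(t for t in tokens if t not in DIRECTIONALS)

a = normalize_address("123 First Street")     # "123 1ST STREET"
b = normalize_address("123 1ST STREET WEST")  # "123 1ST STREET WEST"
same_candidate = match_key("123 First Street") == match_key("123 1ST STREET WEST")
```

Normalization alone does not make the two records equal, because one carries a directional the other lacks. The looser key only flags them as a likely match for review; whether they really are the same location still needs a rule or a reference source.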

Encountering data preparation variations. A variety of data preparation techniques is often required to normalize and cleanse data for new use cases. This work is manual, monotonous and tedious. Data quality issues can arise when data prep teams working with data in different silos calculate similar-sounding data elements in different ways, said Monte Zweben, co-founder and CEO of AI and data platform provider Splice Machine. One team, for example, may calculate total customer revenue by subtracting returns from sales, while another team calculates it from sales only. The result is inconsistent metrics in different data pipelines.
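The revenue example can be made concrete with a small sketch. The two functions mirror the two team definitions described above; the shared registry is one possible way to keep pipelines aligned and is an assumption of this example, as are all names and sample amounts. Picking the net-of-returns definition as the shared one is arbitrary here.

```python
def revenue_sales_only(sales_cents, returns_cents):
    """One team's definition: total sales, ignoring returns."""
    return sum(sales_cents)

def revenue_net_of_returns(sales_cents, returns_cents):
    """Another team's definition: sales minus returns."""
    return sum(sales_cents) - sum(returns_cents)

# Hypothetical shared registry: every pipeline looks the metric up by name
# instead of re-implementing it, so there is one agreed definition.
METRIC_DEFINITIONS = {"total_customer_revenue": revenue_net_of_returns}

def compute_metric(name, sales_cents, returns_cents):
    return METRIC_DEFINITIONS[name](sales_cents, returns_cents)

# Made-up amounts in cents, to avoid floating-point rounding.
sales = [12000, 8000]
returns = [1500]

team_a = revenue_sales_only(sales, returns)      # 20000
team_b = revenue_net_of_returns(sales, returns)  # 18500
shared = compute_metric("total_customer_revenue", sales, returns)
```

The same customer shows two different "total revenue" figures until both pipelines call the shared definition.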

Collecting too much data. Data management teams sometimes get fixated on collecting more and more data. "But more is not always the right approach," said Wilson Pang, CTO at AI training data service Appen. The more data collected, the greater the risk of errors in that data. Irrelevant or bad data needs to be cleaned out before training the data model, but even cleaning methods can negatively impact results.

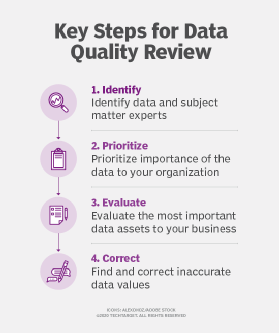

Lacking a data governance strategy. Poor data governance and communications practices can lead to all sorts of quality issues. A big data quality strategy should be supported by a strong data governance program that establishes, manages and communicates data policies, definitions and standards to support effective data usage and build data literacy. With such a program in place, the rules and details of the data are known and respected by the data community once the data is decoupled from its source environments, said Kim Kaluba, senior product marketing manager at data management and analytics software provider SAS Institute.

Finding the proper balance. There's a natural tension between wanting to capture all available data and ensuring that the collected data is of the highest quality, said Arthur Lent, senior vice president and CTO at Dell EMC's data protection division. It's also important to understand the purpose of acquiring certain data, the processes used to collect big data and its intended downstream analytics applications in the rest of the organization. Otherwise, custom practices typically evolve that are error-prone, brittle and not repeatable.

Best practices for managing big data quality

Best practices that consistently improve data quality for big data, according to Orion's Balasubramanian, include the following:

- Gain executive sponsorship to establish data governance processes.

- Create a cross-functional data governance team that includes business users, business analysts, data stewards, data architects, data analysts and application developers.

- Set up strong governance structures, including data stewardship, proactive monitoring and periodic reviews of data.

- Define data validation and business rules embedded in existing processes and systems.

- Assign data stewards for various business domains and establish processes for the review and approval of data and data elements.

- Establish strong master data management processes so there's only one inclusive and common way of defining product or customer data across an organization.

- Define business glossary data standards, nomenclature and controlled vocabularies.

- Increase adoption of controlled vocabularies established by organizations like the International Organization for Standardization, World Health Organization and Medical Dictionary for Regulatory Activities.

- Eliminate data duplication by integrating sets of big data through interfaces to other systems wherever possible.
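Two of the practices above, data validation rules and controlled vocabularies, lend themselves to a short sketch. The rule set, field names and sample records below are hypothetical. The country codes are ISO 3166-1 alpha-2 values, used here as a small example of a controlled vocabulary.

```python
# A small controlled vocabulary: ISO 3166-1 alpha-2 country codes.
# A real deployment would load the full, maintained list.
ALLOWED_COUNTRIES = {"US", "DE", "IN"}

# Hypothetical validation rules, one predicate per field.
RULES = {
    "customer_id": lambda v: isinstance(v, str) and v.strip() != "",
    "country": lambda v: v in ALLOWED_COUNTRIES,
    "age": lambda v: isinstance(v, int) and 0 <= v <= 130,
}

def validate(record):
    """Return the names of the fields that break a rule (empty list if clean)."""
    return [field for field, rule in RULES.items() if not rule(record.get(field))]

# Made-up records for illustration.
records = [
    {"customer_id": "c1", "country": "US", "age": 34},
    {"customer_id": "c2", "country": "USA", "age": 29},  # not in the vocabulary
    {"customer_id": "", "country": "DE", "age": 200},    # empty ID, implausible age
]

report = {index: validate(record) for index, record in enumerate(records)}
rejected = {index: fields for index, fields in report.items() if fields}
```

Keeping the rules in one declarative table makes them easy for data stewards to review and approve, which fits the governance practices in the list.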