Data fabrics help data lakes seek the truth

Data fabrics can play a key role in aligning business goals with the integration, governance, reliability and democratization of information collected in massive data lakes.

Data lakes are getting assistance from an emerging technology framework that helps streamline the management and reuse of data for new applications, analytics and AI workloads. Data fabrics essentially add a semantic layer to data lakes to smooth the process of modeling data infrastructure, reliability and governance.

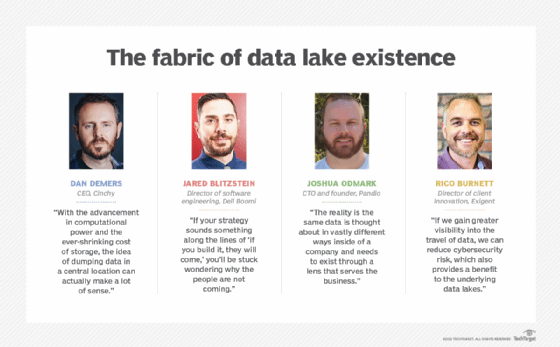

Data lakes serve as a central repository for storing copies of raw data sourced from several, and often thousands of, operational systems. "With the advancement in computational power and the ever-shrinking cost of storage, the idea of dumping data in a central location can actually make a lot of sense," said Dan DeMers, CEO of data fabric provider Cinchy.

But data lakes aren't typically involved in operational and transactional data flows, so the data still has to be interpreted first, which adds incremental steps whenever new apps and AI models need to interact with it. Data fabric overlays can reduce the need for point-to-point integrations among data lakes, operational applications, and new analytics and data science apps.

Putting data strategy ahead of technology

One big challenge companies face is thinking about a data lake as a technology rather than a strategy for using data more efficiently, said Jared Blitzstein, director of software engineering at Dell Boomi. "If your strategy sounds something along the lines of 'if you build it, they will come,' you'll be stuck wondering why the people are not coming," he explained. "The organization will find itself overwhelmed with managing massive amounts of data that fail to capitalize on the potential and flexibility of a data lake."

A major criticism of data lakes has been their tendency to degrade into a "data swamp," a dumping ground for information of questionable quality and relevance. When the lake is flooded this way, only the people closest to the data can use it effectively. That puts the data lake at direct odds with the self-service model most organizations seek when pursuing a big data strategy, Blitzstein said.

Traditional data lake architectures typically fail when they're implemented as a novelty or academic IT project with no direct link to data management strategies or business plans, said Sam Tawfik, senior marketing manager at cloud consultancy 2nd Watch. Data fabrics help align the storage and use of data with business goals and result in more consistent data lake funding by C-suite executives. They also can simplify the provisioning and sharing of data in the data lake, creating a single source of truth.

"The reality is the same data is thought about in vastly different ways inside of a company and needs to exist through a lens that serves the business," said Joshua Odmark, CTO and founder of distributed messaging service Pandio.

Creating specialized data models

Data fabrics can allow individual departments within a company to create specialized data models, with each team applying its own enforcement policies and guarantees to a model. For example, HR may want two data models combined into one, and the accounting department may need a subset of a data model for privacy purposes. A data scientist may need a guarantee that the invoice amount in a data model is typed as a floating-point number, and the security team may need a data model that helps identify personally identifiable information. Data fabrics provide the semantics to understand each of these needs.
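To make those four needs concrete, here is a minimal Python sketch, assuming a data model can be represented as a mapping of field names to declared types plus per-field semantic tags. `DataModel`, `combine`, `subset`, `type_violations` and `pii_fields` are hypothetical names chosen for illustration, not any data fabric product's API.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from declared type names to Python types.
TYPES = {"str": str, "float": float, "int": int}


@dataclass
class DataModel:
    """A hypothetical semantic data model: declared field types plus per-field tags."""
    name: str
    fields: dict  # field name -> declared type name, e.g. "float"
    tags: dict = field(default_factory=dict)  # field name -> set of tags, e.g. {"pii"}


def combine(name, a, b):
    """HR's need: merge two models into one (b's definitions win on name clashes)."""
    return DataModel(name, {**a.fields, **b.fields}, {**a.tags, **b.tags})


def subset(name, model, keep):
    """Accounting's need: expose only the listed fields, e.g. for privacy."""
    fields = {f: t for f, t in model.fields.items() if f in keep}
    tags = {f: t for f, t in model.tags.items() if f in keep}
    return DataModel(name, fields, tags)


def type_violations(model, record):
    """The data scientist's need: list fields whose values break the declared type."""
    return [
        f for f, type_name in model.fields.items()
        if f in record and not isinstance(record[f], TYPES[type_name])
    ]


def pii_fields(model):
    """The security team's need: find fields tagged as personally identifiable."""
    return sorted(f for f, tags in model.tags.items() if "pii" in tags)
```

Each team's requirement becomes a separate, declarative rule over the same underlying model; a real data fabric would enforce such rules at query or ingestion time rather than in ad hoc helper functions.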

A large, publicly traded media company, Odmark reported, was using Amazon Simple Storage Service (S3) as its data lake. The company incorrectly assumed that Amazon Athena, Amazon Relational Database Service and Amazon Redshift would seamlessly use any data placed in S3. Different users indiscriminately dropped comma-separated values files, transformed JSON lists, Salesforce customer reports and PDF sales reports into S3 without any organizational structure, such as folder naming conventions or file metadata.
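For contrast, a folder naming convention of the kind the company skipped can be checked mechanically. The Python sketch below assumes a hypothetical key layout of `<domain>/<source>/<YYYY>/<MM>/<DD>/<name>.<ext>` for objects in S3; the pattern and the `parse_key` and `audit` helpers are illustrative only, and the sketch works on plain key strings rather than calling any AWS API.

```python
import re

# Hypothetical convention: <domain>/<source>/<YYYY>/<MM>/<DD>/<name>.<ext>
KEY_PATTERN = re.compile(
    r"^(?P<domain>[a-z0-9_-]+)/(?P<source>[a-z0-9_-]+)/"
    r"(?P<year>\d{4})/(?P<month>\d{2})/(?P<day>\d{2})/"
    r"(?P<name>[^/]+)\.(?P<ext>csv|json|pdf)$"
)


def parse_key(key):
    """Return the convention's parts as a dict, or None if the key doesn't follow it."""
    match = KEY_PATTERN.match(key)
    return match.groupdict() if match else None


def audit(keys):
    """Split object keys into those that follow the convention and those that don't."""
    ok, bad = [], []
    for key in keys:
        (ok if parse_key(key) else bad).append(key)
    return ok, bad
```

Because every conforming key encodes its domain, source system and date, a catalog can be built from the keys alone; nonconforming keys surface as a to-do list rather than silently accumulating.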

The company quickly discovered that its data lake had simply shifted the data access problem it was trying to solve elsewhere, Odmark said. The company used a data fabric retroactively to make the stored data usable and help solve its data accessibility dilemma.

Traditional data lake architectures are designed to access all the data from a single platform and allow users to do some computing on top of the data without dedicated compute power, said Roman Kucera, CTO of data management and governance platform maker Ataccama. That requires moving the data to a centralized repository and documenting how the data source changes.

With data fabrics, the data isn't necessarily moved across the enterprise. If, for example, the current storage is suitable for analytics, the data can stay there and be accessed transparently from the same compute resources. Data fabrics also help ensure data quality and capture the meaning of a data set. "It is not just a copy of some data source that we know nothing about," Kucera explained. "It's a specific data set with a known and accepted state."
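A minimal Python sketch of that idea, under simplifying assumptions: two in-memory dictionaries stand in for existing storage systems, a catalog entry records where a data set lives plus a SHA-256 fingerprint of its accepted contents, and reads go to the original location rather than to a copy. `STORES`, `Fabric`, `register` and `read` are hypothetical names; real products track state with far richer metadata.

```python
import hashlib
import json

# Hypothetical in-place storage: two separate systems, nothing copied to a central lake.
STORES = {
    "warehouse": {"sales_2020": [{"region": "EU", "revenue": 120.0}]},
    "crm_db": {"accounts": [{"id": "A1", "tier": "gold"}]},
}


def fingerprint(rows):
    """Stable hash of a data set's contents, used here as its 'accepted state'."""
    return hashlib.sha256(json.dumps(rows, sort_keys=True).encode()).hexdigest()


class Fabric:
    """Toy catalog that resolves logical names to data where it already lives."""

    def __init__(self):
        self.catalog = {}  # logical name -> (store, table, accepted fingerprint)

    def register(self, name, store, table):
        rows = STORES[store][table]
        self.catalog[name] = (store, table, fingerprint(rows))

    def read(self, name):
        store, table, accepted = self.catalog[name]
        rows = STORES[store][table]  # read in place; no copy into a central repository
        if fingerprint(rows) != accepted:
            raise ValueError(f"{name} has drifted from its accepted state")
        return rows
```

Rejecting drifted data is only one possible policy; a fabric could instead re-validate the new contents and record a new accepted state before serving them.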

Turning lakes into islands

Many enterprise data lakes are implemented as a data island that's disconnected from other repositories like data warehouses and data marts that support reporting and analytics, said David Talaga, director of product marketing at data integration and governance platform provider Talend. In that situation, only a small number of skilled data scientists and data engineers can access the data lake, with other users dependent on them to prepare and export data.

Writing manual scripts to normalize this data into a standardized format for broader access can be extremely time-consuming and costly. Data fabrics can provide a unified service for ingesting data from multiple sources into a data lake, ensuring data quality and governance, and allowing more users access to the governed data.
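A unified ingestion service replaces one-off scripts with declared per-source mappings. The Python sketch below is a minimal illustration under assumed inputs: the source names (`crm_csv`, `billing_json`), the field mappings and the single quality rule are hypothetical, and a production pipeline would add schema versioning, logging and error routing.

```python
import csv
import io
import json

# Hypothetical mappings from each source's field names to one standard schema.
FIELD_MAPS = {
    "crm_csv": {"Customer ID": "customer_id", "Amount": "amount"},
    "billing_json": {"custId": "customer_id", "total": "amount"},
}
REQUIRED = ("customer_id", "amount")


def read_source(source, payload):
    """Parse a raw payload: CSV text for *_csv sources, a JSON array otherwise."""
    if source.endswith("_csv"):
        return list(csv.DictReader(io.StringIO(payload)))
    return json.loads(payload)


def normalize(source, payload):
    """Map records to the standard schema, rejecting ones that fail basic quality checks."""
    mapping = FIELD_MAPS[source]
    clean, rejected = [], []
    for raw in read_source(source, payload):
        record = {std: raw.get(src) for src, std in mapping.items()}
        if any(record[f] in (None, "") for f in REQUIRED):
            rejected.append(raw)  # missing a required value
            continue
        try:
            record["amount"] = float(record["amount"])
        except (TypeError, ValueError):
            rejected.append(raw)  # amount is not numeric
            continue
        record["source"] = source  # keep lineage for governance
        clean.append(record)
    return clean, rejected
```

Adding a new source then means adding one mapping entry rather than writing another script, and rejected records are kept for review instead of silently polluting the lake.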

Using data fabrics to complement a data lake is not so much about the inherent architecture as it is about improving the execution of the data strategy, said Rico Burnett, director of client innovation at legal services consultancy Exigent. Many organizations, he observed, run into challenges when focused on pulling, extracting and retrieving data of varying quality from different locations. A significant amount of time is invested in mapping the data journey but not the destination. "Once the lake is created," he noted, "the focus tapers off, and execution of the complete vision becomes a challenge."

Create a marketplace for data reuse

Data fabrics allow businesses to create a data marketplace, noted Manoj Karanth, vice president and global head of data science and engineering at IT consultancy Mindtree. A data marketplace ensures that data set discovery is widespread and seamless, which, in turn, can reinforce the centrality of the data catalog. Once discovered, the data can be exposed through APIs so it can be used in analytics and application systems.

If, for example, there's a data set on product sales data, exposing the data set on an enterprise marketplace shows the interest users have in consuming it. Initially, it could be exposed on a traditional database through a data refresh once a day. If more users are interested in the data set, then it could be upgraded to a modern architecture with lower latency and better performance.
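The discovery-then-upgrade cycle can be sketched as a toy, in-memory marketplace in Python. Everything here is hypothetical: the `Marketplace` class, the tier names and the `promote_at` demand threshold are illustrative assumptions, not any vendor's API, and counting distinct subscribers is just one plausible demand signal.

```python
from dataclasses import dataclass, field


@dataclass
class Listing:
    name: str
    description: str
    tier: str = "daily_refresh"  # starts on a traditional database refreshed once a day
    subscribers: set = field(default_factory=set)


class Marketplace:
    """A toy, in-memory data marketplace (hypothetical; not any vendor's API)."""

    def __init__(self, promote_at=3):
        self.listings = {}
        self.promote_at = promote_at  # hypothetical demand threshold for upgrading a data set

    def publish(self, name, description):
        self.listings[name] = Listing(name, description)

    def search(self, term):
        """Discovery: match the term against names and descriptions."""
        term = term.lower()
        return sorted(
            n for n, listing in self.listings.items()
            if term in n.lower() or term in listing.description.lower()
        )

    def subscribe(self, name, user):
        listing = self.listings[name]
        listing.subscribers.add(user)
        # Demand signal: once enough distinct users consume it, move to a lower-latency tier.
        if listing.tier == "daily_refresh" and len(listing.subscribers) >= self.promote_at:
            listing.tier = "low_latency"
        return listing.tier
```

Upgrading only in response to measured demand keeps the cost of a lower-latency architecture tied to data sets people actually use.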

Data fabrics can help organize the data into four key products: the actual data, associated data insights with micro-visuals, signals associated with these insights to personalize them for the end user, and actions necessary to automate some of these signals.

It's important to establish a standard for data quality and consistency across the business. "Without a generally accepted process for the treatment of data, it's really difficult to deliver continuous value," Burnett added. An effective data strategy that includes the format, frequency of updates and treatment of external or benchmarking data should increase the value of the data.
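One way to make such a standard enforceable is to express it as data and check each data set's metadata against it. The Python sketch below assumes a hypothetical standard covering the three elements Burnett names (format, update frequency and treatment of external data); the allowed formats, frequencies and metadata keys are illustrative choices, not recommendations.

```python
from datetime import datetime, timedelta

# Hypothetical company-wide standard for how data sets are treated.
STANDARD = {
    "allowed_formats": {"parquet", "csv"},
    "max_age": {"daily": timedelta(days=1), "weekly": timedelta(weeks=1)},
}


def check_against_standard(meta: dict, now: datetime) -> list:
    """Return the ways a data set's metadata breaks the standard (empty means compliant)."""
    problems = []
    if meta["format"] not in STANDARD["allowed_formats"]:
        problems.append(f"format {meta['format']!r} is not allowed")
    max_age = STANDARD["max_age"].get(meta["frequency"])
    if max_age is None:
        problems.append(f"unknown update frequency {meta['frequency']!r}")
    elif now - meta["last_updated"] > max_age:
        problems.append("stale for its declared update frequency")
    # External or benchmarking data must say where it came from.
    if meta.get("external") and not meta.get("provenance"):
        problems.append("external data has no provenance note")
    return problems
```

Running the same check across every data set gives the business one shared definition of "usable" data instead of team-by-team judgment calls.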

Adoption of more effective data structures can help drive data recycling companywide. Key delivery teams would have greater incentives to find innovative ways to represent and curate data, and stakeholders would have more confidence in the entire process.

Burnett also pointed to improved integration of new or previously undiscovered data sources, which provides greater detail and visibility. When integration speeds up, data lakes gain more widespread use within an organization, and their continued maintenance becomes a higher priority.

Data fabrics can play a role in data security as well. "If we gain greater visibility into the travel of data," Burnett reasoned, "we can reduce cybersecurity risk, which also provides a benefit to the underlying data lakes."