What is stream processing? Introduction and overview

Stream processing is a data management technique that involves ingesting a continuous data stream to quickly analyze, filter, transform or enhance the data in real time. Once processed, the data is passed off to an application, data store or another stream processing engine.

Data sources for stream processing can include transactions, stock feeds, website analytics, connected devices, operational databases, weather reports and others. Stream processing services and architectures are growing in popularity because they allow enterprises to combine data feeds from various sources and get useful, real-time insights for better and faster decision-making.

How does stream processing work?

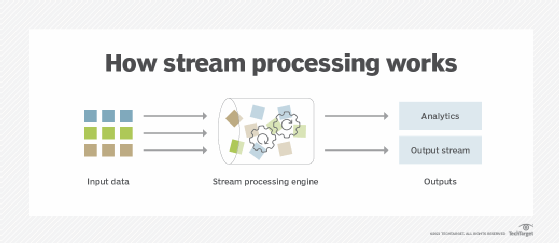

Stream processing starts by ingesting data from sources such as publish-subscribe services, social media feeds and sensors into the stream processing engine. The engine then processes the data in real time.

The engine might perform an action on the data, such as analyzing, filtering, transforming, combining or cleaning it, before publishing insights and results back to the publish-subscribe service or another data store. Users can then query the data store for insights that inform their decisions and further actions. The results may also be made available on dashboards or alert systems for further analysis or action.
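The ingest, process and publish steps described above can be sketched as a chain of Python generators. This is a minimal illustration rather than the design of any particular engine: the sensor readings, the `celsius` and `fahrenheit` field names and the in-memory list standing in for a data store are all assumptions made for the example.

```python
from typing import Any, Dict, Iterable, Iterator, List

Event = Dict[str, Any]


def ingest(source: Iterable[Event]) -> Iterator[Event]:
    """Yield events one at a time, as a subscriber would receive them."""
    for event in source:
        yield event


def clean(events: Iterable[Event]) -> Iterator[Event]:
    """Filtering/cleaning step: drop events that have no reading."""
    for event in events:
        if event.get("celsius") is not None:
            yield event


def transform(events: Iterable[Event]) -> Iterator[Event]:
    """Transform/enrich step: add a Fahrenheit field to each event."""
    for event in events:
        yield {**event, "fahrenheit": event["celsius"] * 9 / 5 + 32}


def publish(events: Iterable[Event], sink: List[Event]) -> None:
    """Publish step: append each result to a list standing in for a data store."""
    for event in events:
        sink.append(event)


# Hypothetical sensor readings; the second one is missing its value.
readings = [
    {"sensor": "a", "celsius": 20.0},
    {"sensor": "b", "celsius": None},
    {"sensor": "a", "celsius": 25.0},
]
store: List[Event] = []
publish(transform(clean(ingest(readings))), store)
```

Because each stage is a generator, an event flows through every step as soon as it arrives instead of waiting for the whole input to be collected.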

Stream processing is also known as streaming analytics or real-time analytics, although real time is a relative term. It could mean five minutes for a weather analytics app, millionths of a second for an algorithmic trading app or a billionth of a second for a physics researcher.

Although real time has no standardized meaning in stream processing, the notion points to something important about how the stream processing engine packages data for different applications. The engine organizes arriving data events into short batches and processes them continuously, as soon as they are generated. Then, it presents the results of the processing to other applications as a continuous feed. This simplifies the logic for combining and recombining data from various sources and from different time scales. It also makes it possible to derive instantaneous insights for fast decision-making, even from large data volumes, or big data.
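One way to picture the short batches and the continuous feed of results is the sketch below. It is an illustration under stated assumptions: batches are cut by event count rather than by time, and the function names `micro_batches` and `running_totals` are hypothetical, not part of any framework.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(events: Iterable[int], batch_size: int) -> Iterator[List[int]]:
    """Group a stream of events into short batches of at most batch_size items."""
    iterator = iter(events)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # the source is exhausted
        yield batch


def running_totals(events: Iterable[int], batch_size: int) -> Iterator[int]:
    """Emit an updated total after every short batch, as a continuous feed."""
    total = 0
    for batch in micro_batches(events, batch_size):
        total += sum(batch)
        yield total
```

A downstream application can iterate over `running_totals(...)` and see a fresh result after every short batch, without knowing how the events were grouped.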

Why is stream processing needed?

Stream processing is needed in situations where large quantities of data must be processed quickly to facilitate fast actions and decisions. Traditional batch processing, in which data is stored and then processed in discrete batches, cannot keep up with data that typically emerges as a continuous stream of events. For big data, stream processing is a more viable data processing strategy.

Stream processing is suitable in the following situations:

- Time-series data is to be processed to detect patterns over some time period.

- Approximate but fast answers are preferable to more precise but slower insights.

- Data processing must use less hardware.

- The data almost always arrives in streaming format, e.g., financial transactions or website visits.
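As a small illustration of the first situation in the list, a moving average over the most recent values is one simple way to follow a pattern in time-series data while keeping only a bounded amount of state. The window size and the function name `moving_average` are assumptions chosen for this sketch.

```python
from collections import deque
from typing import Deque, Iterable, Iterator


def moving_average(values: Iterable[float], window: int) -> Iterator[float]:
    """Yield the mean of the most recent `window` values after each arrival."""
    recent: Deque[float] = deque(maxlen=window)
    running_sum = 0.0
    for value in values:
        if len(recent) == window:
            # The oldest value is about to be evicted by the append below.
            running_sum -= recent[0]
        recent.append(value)
        running_sum += value
        yield running_sum / len(recent)
```

Only `window` values are held in memory at any time, regardless of how long the stream runs.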

In the stream processing pipeline, streaming data is generated, processed and delivered to its final location. Between generation and delivery, the data might also be ingested, aggregated, transformed, enriched and analyzed.

This process allows applications to respond to new events as soon as they occur. This is why the stream processing approach is ideal for applications that use a wide variety of data sources or require real-time data analysis, instantaneous insights and very fast responses.

Stream processing architecture

Stream processing architectures help simplify the data management tasks required to consume, process and publish the data securely and reliably. There are many architectures available and they handle and process real-time data in different ways.

Popular stream processing architectures include the following:

Lambda architecture

The Lambda architecture combines real-time stream processing with conventional batch processing. Real-time stream processing provides fast data access and instant insights, while batch processing is suitable for performing historical analysis. This architecture is suitable for processing big data.

The main components of the Lambda architecture are the data sources, batch layer, serving layer and speed layer. The batch layer holds the master data in an immutable, append-only format and periodically computes batch views from it. The serving layer indexes the latest batch views so they can be queried. Meanwhile, the speed layer uses stream processing software to index the newly arriving data that the batch views do not yet cover and makes it available for querying by end users.
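The interplay of the batch, serving and speed layers can be sketched with a page-view counter. This is a deliberately simplified, single-process illustration: the class name `LambdaCounts`, the use of `Counter` as a view and the assumption that a batch run completes instantly are all choices made for the example.

```python
from collections import Counter
from typing import List


class LambdaCounts:
    """Toy page-view counter with batch, serving and speed layers."""

    def __init__(self) -> None:
        self.master_log: List[str] = []  # batch layer: append-only master data
        self.batch_view: Counter = Counter()  # serving layer: indexed batch view
        self.speed_view: Counter = Counter()  # speed layer: events since last batch run

    def ingest(self, page: str) -> None:
        """Record a new event in the master data and in the speed layer."""
        self.master_log.append(page)
        self.speed_view[page] += 1

    def run_batch(self) -> None:
        """Recompute the batch view from all master data, then reset the speed view."""
        self.batch_view = Counter(self.master_log)
        self.speed_view.clear()

    def query(self, page: str) -> int:
        """Answer a query by merging the batch view with the speed view."""
        return self.batch_view[page] + self.speed_view[page]
```

A query stays correct between batch runs because the speed view covers exactly the events that the batch view has not yet absorbed.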

Kappa architecture

The Kappa architecture includes a messaging engine, a stream processing engine, and an analytics database. The messaging engine stores the incoming series of data. An example of such an engine is Apache Kafka. Next, the stream processing engine reads the data, converts it into an analyzable format, and then feeds it into the analytics database. Users can query this database to retrieve relevant information.

Once the data enters the messaging engine, it is read and immediately transformed for analytics, which speeds up analytics and data retrieval for end users. The Kappa architecture supports both real-time and historical analytics with a single technology stack. Historical results are produced by replaying the stored series of data through the same stream processing engine rather than through a separate batch layer. This is why the Kappa architecture is simpler than the Lambda architecture.
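The sketch below illustrates the single-stack idea, assuming that historical analytics is done by rereading the stored series of data from the beginning. The `EventLog` class is a hypothetical in-memory stand-in for a messaging engine, not the API of Apache Kafka or any other product, and the event fields are invented for the example.

```python
from typing import Callable, Dict, Iterator, List

Event = Dict[str, object]
View = Dict[str, int]


class EventLog:
    """In-memory stand-in for a messaging engine that retains events in order."""

    def __init__(self) -> None:
        self._events: List[Event] = []

    def append(self, event: Event) -> None:
        self._events.append(event)

    def read_from(self, offset: int = 0) -> Iterator[Event]:
        """Read stored events starting at the given position."""
        return iter(self._events[offset:])


def build_view(log: EventLog, process: Callable[[View, Event], None], offset: int = 0) -> View:
    """Feed events from the log through one processing function to build a view."""
    view: View = {}
    for event in log.read_from(offset):
        process(view, event)
    return view


def sum_amounts(view: View, event: Event) -> None:
    """Processing logic, version 1: total amount per user."""
    view[event["user"]] = view.get(event["user"], 0) + event["amount"]


def count_events(view: View, event: Event) -> None:
    """Processing logic, version 2: number of events per user."""
    view[event["user"]] = view.get(event["user"], 0) + 1
```

When the processing logic changes, the same code path rebuilds the view by reading the log again from offset 0, so no separate batch system is needed.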

Benefits of stream processing

Modern stream processing tools are an evolution of various publish-subscribe frameworks that make it easier to process data in transit. Stream processing provides fast data analysis and generates real-time (or near real-time) insights that are critical for many modern applications. Also, by distributing processing across edge computing infrastructure, stream processing can reduce data transmission and storage costs.

Streaming data architectures can make it easier to integrate data from multiple business applications or operational systems in order to generate better insights faster. Parallel data processing also accelerates and enhances decision-making in different industries and applications.

For example, telecom service providers use stream processing tools to combine data from numerous operations support systems. Healthcare providers use them to integrate applications that span multiple medical devices, sensors and electronic medical records systems.

How is stream processing used?

Anomaly detection and fraud detection are two of the most common use cases of stream processing. In financial settings, for example, stream processing is used to analyze credit card transactions to recognize and raise alerts on fraudulent charges. Stream processing also supports more responsive applications for internet of things (IoT) data analytics, real-time ad personalization, context-aware marketing promotions, market trend spotting and root cause analysis.
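A very simple form of streaming anomaly detection flags a value that lies far from the running mean of the values seen so far. The sketch below uses Welford's online algorithm to keep the mean and variance up to date without storing past values. The threshold of three standard deviations, the warm-up length and the function name `flag_anomalies` are assumptions for illustration; real fraud detection relies on far richer signals than the amount alone.

```python
import math
from typing import Iterable, Iterator


def flag_anomalies(
    amounts: Iterable[float], threshold: float = 3.0, warmup: int = 5
) -> Iterator[float]:
    """Yield amounts that deviate strongly from the running mean seen so far."""
    count = 0
    mean = 0.0
    m2 = 0.0  # running sum of squared deviations from the mean
    for amount in amounts:
        # Only judge a value once enough history exists for a sample deviation.
        if count >= max(warmup, 2):
            std = math.sqrt(m2 / (count - 1))
            if std > 0 and abs(amount - mean) > threshold * std:
                yield amount
        # Welford's online update; flagged values are included in the statistics.
        count += 1
        delta = amount - mean
        mean += delta / count
        m2 += delta * (amount - mean)
```

Each value is checked the moment it arrives, using only three numbers of state, which is what makes this style of check practical on a continuous stream.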

Other common use cases for stream processing include the following:

- Tuning business application features.

- Personalizing customer experience.

- Stock market trading and surveillance.

- Analyzing and responding to IT infrastructure events.

- Digital experience monitoring.

- Customer journey mapping.

- Predictive analytics and maintenance.

- Network monitoring.

- Geofencing.

- Traffic monitoring.

- Supply chain optimization.

In general, the stream processing pipeline and process are best suited to do the following:

- Develop adaptive and responsive applications.

- Provide real-time business analytics.

- Improve and accelerate decision-making with increased context.

- Improve user experiences.

What are the stream processing frameworks?

The core ideas behind stream processing have been around for decades but are getting easier to implement with open source tools, cloud services and frameworks.

Spark Streaming, Flink, Kafka, Samza and Storm are some popular open source stream processing frameworks from Apache.

Apache Spark Streaming is a stream and batch processing system. It can process real-time data from multiple sources like Apache Kafka, Flume and Amazon Kinesis.

Kafka is a distributed event streaming platform that simplifies data integration across multiple applications.

Flink is a distributed processing engine and framework for unbounded and bounded data streams.

Apache Samza is a distributed stream processing framework that allows users to build stateful applications. These applications can process large volumes of data in real time from multiple sources.

Apache Storm is a distributed real-time computation system for processing unbounded streams of data. It is suitable for many data-dependent use cases, including online machine learning and extract, transform, load (ETL) data processing.

In addition, all the primary cloud service providers have native services that simplify stream processing development on their respective platforms, such as Amazon Kinesis, Azure Stream Analytics, and Google Cloud Dataflow.

These frameworks often go hand in hand with other publish-subscribe frameworks used for connecting applications and data stores.

Stream processing vs. batch processing

Stream processing and batch processing represent two different data management and application development paradigms.

Batch processing originated in the days of legacy databases, in which data management professionals would schedule batches of updates from a transactional database into a report or business process. This approach is suitable for regularly scheduled data processing tasks with well-defined boundaries. Examples include the following:

- Pull out transactional numbers from a sales database.

- Generate a quarterly report.

- Tally employee hours to calculate monthly salaries.

In contrast, stream processing is based on ingesting data as a continuous data stream. Although the data may still arrive in small batches, it is processed continuously, without first storing it or waiting for a large enough batch to accumulate.

Instead, the stream processing engine processes incoming data in parallel. It filters out data updates and keeps track of the data that's already been uploaded into the feed. It also manages other processes like data transformation, enrichment and cleaning. This frees up more time for data engineering and developer teams to code the analytics and application logic.
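The difference between the two paradigms can be seen by computing the same average both ways. This is a minimal sketch with hypothetical names: `batch_average` needs the complete stored data set before it can answer, while `StreamingAverage` refines its answer with every arriving value.

```python
from typing import List


def batch_average(stored: List[float]) -> float:
    """Batch style: all data is stored first, then processed in one pass."""
    return sum(stored) / len(stored)


class StreamingAverage:
    """Stream style: the result is updated as each value arrives."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> float:
        """Fold one new value into the average and return the current result."""
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean
```

Both approaches arrive at the same final value, but the streaming version has a usable answer after every event and never needs to hold the full data set.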

History of stream processing

Since the dawn of computers, computer scientists have explored various frameworks for processing and analyzing data from multiple sensors. In the early days, this was called sensor fusion. Then, in the early 1990s, Stanford University professor David Luckham coined the term complex event processing (CEP).

CEP is a method for analyzing and correlating data streams to identify meaningful patterns and generate real-time insights about relevant events. CEP's fundamental principles included abstractions for characterizing the synchronous timing of events, managing hierarchies of events and considering the causal aspects of events. The emergence of the CEP approach helped fuel the development of service-oriented architectures (SOAs) and enterprise service buses (ESBs).

The rise of cloud services and open source software led to more cost-effective approaches for managing event data streams, using publish-subscribe services built on Apache Kafka. Over time, many stream processing frameworks emerged that reduced the cost and complexity of correlating data streams into complex events. Multiple stream processing methodologies also emerged, including event stream processing (ESP) and data stream processing (DSP).

With the rise of the cloud, the terms SOA, ESB and CEP are approaching obsolescence, and infrastructure built on microservices, publish-subscribe services and stream processing is becoming more popular.
