What is data transformation? Definition, types and benefits

Data transformation is the process of converting data from one format -- such as a database file, Extensible Markup Language document (XML) or Excel spreadsheet -- into another format.

Transformations typically involve converting a raw data source into a cleansed, validated and ready-to-use format. The main purpose of transforming data is to improve its quality to make it more usable for enterprise decision-making.

Importance of data transformation

Data transformation is crucial to processes such as data integration, management, migration, warehousing and wrangling. These processes are vital for any organization seeking to use its data to generate timely data-driven business insights, such as data analytics and decision-making.

As the volume of data has proliferated, organizations must find an efficient way to harness data to effectively use it for business purposes. Data transformation is one element of harnessing this data, particularly using automation.

The data transformation process typically includes steps to remove duplicates, convert data from one type to another, and improve and enrich the overall data set. When these steps are done properly and consistently, the data becomes easy to access and use. It is also more consistent and secure, and trusted by the intended business users.

Types of data transformation (data transformation techniques)

Data transformation is not a single technique. Rather, users can apply numerous techniques as needed to meet specific business goals. They might also combine various techniques, depending on data types or business requirements.

Some of the most common data transformation techniques include the following:

- Integration. Integration unifies data elements from different data sets, such as combining two different databases. This ensures the indexes and values for every data element are the same, which supports easier, more accurate analytics.

- Deduplication. Deduplication removes redundant or duplicate data, such as duplicate customer entries, from the data set. Deduplication can be part of a broader data cleaning technique to improve data quality.

- Aggregation. Aggregation combines data in different ways to make it more manageable and easier to use. For example, daily data can be aggregated to represent weekly, monthly or quarterly averages.

- Normalization. Normalization is a way to standardize data to improve its usability and minimize errors. It aims to guarantee that all data elements use the same scale or format. For example, if one data set uses three digits of precision and another data set uses only two digits of precision, normalization requires that all data is processed to two or fewer digits of precision so that all data is represented the same way.

- Discretization. Discretization makes data more discrete, breaking it into finer or more detailed elements or buckets. This can make larger data sets easier to analyze and help users extract more detailed insights from the data set.

- Imputation. If a data set is missing some values, imputation can be used to replace those values with other plausible values to improve the quality of the data set.

- Visualization. This is a technique to represent data graphically to make it easier to analyze and use. Visualization can reveal useful patterns or insights in the data that might not be immediately obvious in its original form.

- Feature engineering. Feature engineering, sometimes called data enrichment, creates new data elements or features from existing data elements and is frequently applied to enhance data used to train machine learning models.

ETL data transformation vs. ELT data transformation

The data transformation process can be referred to as Extract, Transform, Load (ETL) or Extract, Load, Transform (ELT).

Organizations with large, highly integrated data sets, often from multiple sources, must perform an enormous amount of data transformation to make data useful for business tasks. They use the following fundamental phases of this data pipeline:

- Extract (E). This phase uses data aggregation to collect raw data from disparate sources. It involves identifying and pulling data from the various source systems that create data and then moving it to a single repository. Next, the raw data is cleansed if needed.

- Transform (T). In this phase, data is processed to make it accurate and integral so that it can be combined. Simply put, the cleansed data is transformed into a target format that can be fed into the target system.

- Load (L). This means to combine the transformed data in a database where it can be processed. Post-transformation, the data is loaded into the target system. This might be an operational system or a data warehouse, a data lake or other repository for use in business intelligence (BI) and analytics applications.

While both ETL and ELT processes employ the same steps, they have different data pipeline techniques.

ETL data transformation employs the above sequence of events, often applying detailed business rules to process data closer to its source before integrating it into a single set; in this case, more processing is performed upfront.

ELT data transformation works slightly differently. It holds off on the data transformation until the data has been combined. In effect, the raw data is collected and loaded first, and then the entire combined data set is transformed.

ELT is generally considered to be the default approach today. Combining raw data into a single data set can transform the data in various ways. Also, the extracted data is typically loaded into a cloud-based target system. This allows for faster, more efficient data processing. It also makes the ELT approach more flexible, allowing the raw database to be used in different business tasks by simply running different or customized transformations against the entire raw data set.

What are the key steps in data transformation?

The process of data transformation involves identifying data sources and types, determining the structure of transformations that need to occur, and defining how fields will be changed or aggregated. It includes extracting data from its original source, transforming it, and sending it to the target destination, such as a database or data warehouse. Extractions can come from many locations, including structured sources, streaming sources or log files from web applications.

Data analysts, data engineers and data scientists are typically in charge of data transformation within an organization. They identify the source data, determine the required data formats and perform data mapping. They then execute the actual transformation process before moving the data into appropriate databases for storage and use.

Their work involves the following six main steps:

- Data discovery. Data professionals use data profiling tools or profiling scripts to understand the source data's structure and characteristics and determine how it should be transformed.

- Data cleaning. Raw source data frequently includes errors, duplicates and inconsistencies that reduce its usability and usefulness. Data cleaning removes these issues, improving data quality.

- Data mapping. Data professionals connect or match data fields from the source system to data fields in the target format. They determine the current structure of the current data set and how the data fields would be modified. Such mapping guides the transformation process and minimizes the potential for errors.

- Code generation. Data professionals use either data transformation tools or write scripts to create the software code required to transform the data.

- Code execution. The code is applied to the source data to transform it into the desired format. The transformed data is then loaded into the target system.

- Data review. Data professionals or end users confirm that the output data meets the established transformation requirements. They check that it is correct and consistent. If not, they address and correct any anomalies and errors.

These steps fall within the ETL process for organizations that use on-premises warehouses. However, scalable cloud-based data warehouses have given rise to ELT processes, which organizations use to load raw data into data warehouses and then transform data at the time of use.

Benefits of data transformation

Organizations must analyze their data for various business operations, from sales, marketing and customer service, to engineering, cybersecurity and supply chain management. They also need data to feed their enterprise's increasing number of automated and intelligent systems. To gain the insights that can help improve all these processes, they need high-quality data in formats compatible with the systems consuming it.

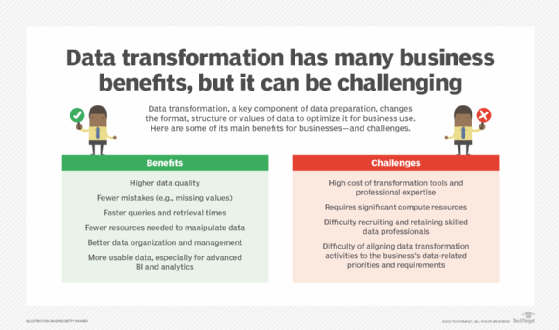

Data transformation is a critical component of enterprise data programs because it delivers the following benefits:

- Higher data quality with fewer mistakes, such as duplicates or missing values.

- More usable data for a wide range of use cases, including advanced BI, big data analytics, machine learning, data warehousing and data mining.

- Faster business queries and retrieval times through data standardization.

- Unifies information from various sources to break down data siloes and speed up decision-making.

- Fewer resources needed to manipulate data and extract insights from it.

- Easier data organization and management.

- Enhanced data security and compliance with data privacy regulations by using techniques like encryption and anonymization.

Data transformation enables organizations to convert existing data into a desired format to enable analysis and decision-making. Transformation techniques also allow raw data from different sources to be integrated, stored and mined to generate useful business intelligence and insights for various purposes.

Challenges of data transformation

The data transformation process can be complex and complicated, particularly for organizations that deal with large data volumes. The challenges organizations face might include the following:

- High cost of transformation tools and professional expertise.

- Significant compute resources, with the intensity of some on-premises transformation processes potentially slowing down other operations.

- Difficulty recruiting and retaining the skilled data professionals required for this work, with data professionals some of the most in-demand workers today.

- Difficulty of properly aligning data transformation activities to the business's data-related priorities and requirements.

Reasons to do data transformation

Organizations must be able to mine their data for insights to successfully compete in the digital marketplace, optimize operations, cut costs and boost productivity. They also require data to feed systems that use AI, machine learning, natural language processing and other advanced technologies.

Data transformation has one simple goal -- to make data better and more useful for business tasks. When approached properly, a successful data transformation process can enhance various data attributes, including the following:

- Quality. Removing errors, duplicates, missing entries or gaps and improper indexing or categorization can improve data accuracy and reliability for data analytics.

- Portability. Transformation uses formats and data types that make data consistent and more easily portable between disparate systems or platforms.

- Integration. Transformations can help integrate or combine different data sources into a single uniform data set.

- Usability. Proper transformations can make the data set more readable by humans and systems, making it easier to access, process and use for tasks such as analytics and visualization.

- Flexibility. Transformations can make data sets simpler and easier to scale or adapt to expanding use cases for the business.

- Security. Transformations can employ actions, such as data anonymization and encryption, to improve data security, data privacy and regulatory compliance issues for the business.

Data transformation tools

Manual data transformation is a time- and resource-intensive endeavor, and the costs involved can overshadow the benefits. Fortunately, data professionals have numerous tools to select from to support the process. These technologies automate many of the transformation steps, replacing much, if not all, of the manual scripting and hand coding that are a major part of the manual process.

Both commercial and open source data transformation tools are available. Some offerings are designed for on-premises transformation processes and others for cloud-based transformation activities. Moreover, some tools are part of platforms that offer a broad range of capabilities for managing and transforming enterprise data.

Examples of data transformation tools include the following:

- Alteryx AI Platform.

- AWS Glue.

- Apache Airflow.

- Denodo Platform.

- Google Cloud Dataform.

- IBM InfoSphere DataStage.

- Informatica Intelligent Data Management Cloud (IDMC).

- Matillion ETL.

- Microsoft Azure Data Factory.

- Oracle Cloud Infrastructure (OCI) GoldenGate.

- Pentaho+ Platform.

- Qlik Replicate.

- SAP Data Services.

- Talend Open Studio.

How to find the right data transformation tool for your organization

There are many data transformation tools are available to assist organizations of all sizes and needs and each presents different features and functionalities, resource demands and staff requirements. To get the best results from a tool, carefully consider these important factors:

- Feature set. The tool should support the types of data transformation that the business needs, such as aggregation, cleaning, normalization and data enrichment. Different tools focus on different strengths, so consider any special focus or transformation use cases that the tool provides.

- Data sources. The tool should support the varied data sources the business requires, such as database files, cloud storage, flat files, or general or third-party formats such as Excel.

- Data management. The tool should provide data management capabilities such as tagging, indexing and other discoverability features that let the business easily correlate raw data with transformed and processed data.

- Automation. The tool should support a full range of automation, allowing transformation workflows to be handled with a high degree of autonomy and consistency.

- Testing and validation. The tool should provide some level of testing so that data passes through the workflow properly and provides valid, useful data for analysis.

- Coding. Most tools require some level of coding to set up transformation processes, workflows, interfaces and automation. However, the best tools are those that minimize or simplify coding requirements and do not burden the development team.

- Scalability. The tool should support current data volumes and workflow complexity and have the potential to handle additional data volumes and more complex workflows in the future.

- Support. Comprehensive and responsive vendor support can help maximize product usage and satisfaction. If the tool is open source, it should have an active, responsive and helpful user community to help simplify troubleshooting.

As with the selection of any enterprise tool, it's worth planning a series of cross-departmental proof-of-concept (POC) tests to evaluate potential data transformation tools. These allow users to get firsthand experience before the organization makes a final decision to purchase a specific product.

Learn what a POC is and how an organization can create one to help lay out the criteria needed when purchasing a new product. Examine how to write a POC using these free templates.