What is data profiling?

Data profiling refers to the process of examining, analyzing, reviewing and summarizing data sets to gain insight into the quality of data. Data quality is a measure of the condition of data based on factors such as its accuracy, completeness, consistency, timeliness and accessibility.

Data profiling also involves reviewing source data to understand the data's structure, content and interrelationships. This review process delivers two high-level benefits to the organization:

- It provides a high-level view of the quality of its data sets.

- It helps the organization identify potential data projects.

Given those benefits, data profiling is a key component of data preparation programs. Because it helps organizations identify quality data, it is an important precursor to data processing and data analytics activities.

Moreover, an organization can use data profiling and the insights it produces to continuously improve its data quality and measure the results of that effort.

Data profiling is also known as data archeology, data assessment, data discovery or data quality analysis.

Organizations use data profiling at the beginning of a project to determine if enough data has been gathered, if any data can be reused or if the project is worth pursuing. The process of data profiling itself can be based on specific business rules that uncover how the data set aligns with business standards and goals.

Types of data profiling

There are three types of data profiling:

- Structure discovery. This focuses on the formatting of the data, making sure everything is uniform and consistent. It uses basic statistical analysis to return information about the validity of the data.

- Content discovery. This process assesses the quality of individual pieces of data. For example, ambiguous, incomplete and null values are identified.

- Relationship discovery. This detects connections, similarities, differences and associations among data sources.
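As a rough illustration of the first two types, the sketch below profiles a single column using only Python's standard library. The sample phone numbers, the `PLACEHOLDERS` set and the helper names `shape` and `is_missing` are assumptions made for this example, not part of any particular profiling tool.

```python
import re
from collections import Counter

# Hypothetical sample column: phone numbers as entered by users.
phones = ["555-0100", "555-0101", "5550102", "", None, "555-0103", "N/A"]

# Strings treated as missing or ambiguous (an assumption for this sketch).
PLACEHOLDERS = {"", "n/a", "unknown", "tbd"}


def shape(value: str) -> str:
    """Reduce a value to its format pattern: digits become 9, letters become A."""
    value = re.sub(r"[0-9]", "9", value)
    return re.sub(r"[A-Za-z]", "A", value)


def is_missing(value) -> bool:
    """True for None, empty strings and known placeholder strings."""
    return value is None or value.strip().lower() in PLACEHOLDERS


# Content discovery: separate null, empty and placeholder values from real ones.
missing = [v for v in phones if is_missing(v)]
present = [v for v in phones if not is_missing(v)]

# Structure discovery: count how often each format pattern occurs.
patterns = Counter(shape(v) for v in present)

print("missing:", len(missing), "of", len(phones))
print("patterns:", patterns.most_common())
```

A dominant pattern with a few outliers, as in this sample, usually points to inconsistent formatting rather than to genuinely different kinds of data.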

Data profiling techniques

The following five methods, or techniques, are used in data profiling:

- Column profiling. This assesses tables and quantifies entries in each column.

- Cross-column profiling. It is used to analyze relationships between columns by identifying unique values (through key analysis) and finding attribute dependencies (through dependency analysis).

- Cross-table profiling. It uses key analysis to identify stray data as well as semantic and syntactic discrepancies.

- Data rule validation. It assesses data sets against established rules and standards to validate that they're being followed.

- Metadata discovery. It helps understand data structures and relationships across systems. Metadata discovery is often automated and covers column, cross-column and cross-table profiling.
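Key analysis, dependency analysis and data rule validation can each be sketched in a few lines. The example below is a minimal illustration over hypothetical customer rows; the function names and the age rule are assumptions chosen for the example, and real tools apply the same ideas at much larger scale.

```python
from collections import defaultdict

# Hypothetical customer rows for illustration.
rows = [
    {"id": 1, "zip": "10001", "city": "New York", "age": 34},
    {"id": 2, "zip": "10001", "city": "New York", "age": 51},
    {"id": 3, "zip": "60601", "city": "Chicago", "age": -5},
    {"id": 4, "zip": "60601", "city": "Chicgo", "age": 28},
]


def is_candidate_key(rows, column):
    """Key analysis: a column can act as a key only if all its values are unique."""
    values = [r[column] for r in rows]
    return len(set(values)) == len(values)


def dependency_violations(rows, determinant, dependent):
    """Dependency analysis: find determinant values that map to more than
    one dependent value, which breaks the expected dependency."""
    seen = defaultdict(set)
    for r in rows:
        seen[r[determinant]].add(r[dependent])
    return {k: v for k, v in seen.items() if len(v) > 1}


def rule_violations(rows, rule):
    """Data rule validation: return the rows that fail a rule (a predicate)."""
    return [r for r in rows if not rule(r)]


print(is_candidate_key(rows, "id"))    # True
print(is_candidate_key(rows, "zip"))   # False
print(dependency_violations(rows, "zip", "city"))
print(rule_violations(rows, lambda r: 0 <= r["age"] <= 120))
```

Here the ZIP code 60601 maps to two city spellings, which flags a likely typo, and the row with a negative age fails the assumed business rule.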

What are the steps in the data profiling process?

Data profiling helps organizations identify and fix data quality problems before the data is analyzed, so data professionals don't have to deal with inconsistencies, null values or incoherent schema designs as they process data to make decisions.

Data profiling statistically examines and analyzes data at its source and when loaded. It also analyzes the metadata to check for accuracy and completeness.

It typically involves either writing queries or using data profiling tools.
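When profiling is done by writing queries, a single aggregate query often covers the basics. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `orders` table and its columns are hypothetical and exist only for this example.

```python
import sqlite3

# In-memory database with a hypothetical orders table.
conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row  # lets result columns be read by name
conn.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "acme", 120.0), (2, "acme", 80.0), (3, None, 45.5), (4, "globex", None)],
)

# One pass over the table: row count, null count, distinct count and basic
# statistics. COUNT(column) and the aggregates skip NULL values.
profile_sql = """
SELECT
    COUNT(*)                   AS row_count,
    COUNT(*) - COUNT(customer) AS customer_nulls,
    COUNT(DISTINCT customer)   AS customer_distinct,
    MIN(amount)                AS amount_min,
    MAX(amount)                AS amount_max,
    AVG(amount)                AS amount_mean
FROM orders
"""
profile = dict(conn.execute(profile_sql).fetchone())

for name, value in profile.items():
    print(f"{name}: {value}")
```

The same query shape generally carries over to other SQL databases, although function names and NULL handling should be checked against the specific engine.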

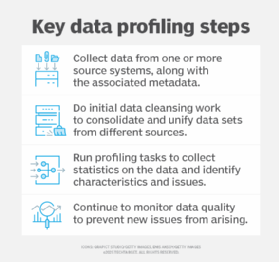

A high-level breakdown of the process is as follows:

- The first step of data profiling is gathering one or multiple data sources and the associated metadata for analysis. A centralized data profiling tool that can analyze all of the collected data should also be chosen.

- The data is then cleaned to unify structure, eliminate duplications, identify interrelationships and find anomalies. This step can use structure, content and relationship discovery.

- Once the data is cleaned, data profiling tools will return various statistics to describe the data set. This could include the mean, minimum/maximum value, frequency, recurring patterns, dependencies or data quality risks.

- Once the results are documented, data quality should be continually monitored to prevent new issues from arising; maintaining data quality is not a one-time task.

For example, by examining the frequency distribution of different values for each column in a table, a data analyst could gain insight into the type and use of each column. Cross-column analysis is used to expose embedded value dependencies; inter-table analysis allows the analyst to discover overlapping value sets that represent foreign key relationships between entities.
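The frequency and inter-table analyses described above might look like the following sketch. The `customers` and `orders` tables are hypothetical, and treating a high overlap ratio as evidence of a foreign key is a heuristic assumed for this example, not a rule.

```python
from collections import Counter

# Hypothetical tables, represented as lists of dictionaries.
customers = [{"customer_id": 1}, {"customer_id": 2}, {"customer_id": 3}]
orders = [
    {"order_id": 10, "customer_id": 1, "status": "shipped"},
    {"order_id": 11, "customer_id": 1, "status": "shipped"},
    {"order_id": 12, "customer_id": 2, "status": "pending"},
    {"order_id": 13, "customer_id": 9, "status": "shipped"},
]

# Frequency distribution: a handful of repeated values suggests that the
# column holds a category or status code rather than free text.
status_freq = Counter(o["status"] for o in orders)

# Inter-table analysis: measure how much of the child column's values
# appear in the parent column. A high ratio hints at a foreign key.
parent_keys = {c["customer_id"] for c in customers}
child_keys = [o["customer_id"] for o in orders]
overlap = sum(k in parent_keys for k in child_keys) / len(child_keys)

# Values with no match in the parent table are stray (orphaned) references.
orphans = sorted(set(child_keys) - parent_keys)

print("status frequencies:", dict(status_freq))
print("overlap ratio:", overlap)
print("orphaned customer_id values:", orphans)
```

In this sample, three of the four orders reference an existing customer, and the remaining `customer_id` of 9 is the kind of stray value that cross-table profiling is meant to surface.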

Benefits of data profiling

Data profiling returns a high-level overview of data that can result in the following benefits:

- Provides higher-quality, more credible data. Data duplicates and inaccuracies can make data harder to work with and cost an organization money. Data profiling helps find and eliminate many of these issues to create more reliable data. It is one of many methods that improve data quality.

- Supports predictive analytics and decision-making. More reliable data improves the accuracy of machine learning models and forecasting, which in turn leads to better-informed decisions.

- Makes better sense of the relationships between different data sets and sources. Understanding the relationships between data sets helps with processes like optimizing databases and integrating data.

- Keeps company information centralized and organized. Data profiling can create a better-organized data environment, improving how teams access data.

- Eliminates errors. Data profiling can identify and help eliminate errors, such as missing values or outliers, that add costs to data-driven projects.

- Reduces recurring errors. Data profiling highlights areas within a system that experience the most data quality issues, such as data corruption or user input errors, so that their root causes can be addressed.

- Produces insights surrounding risks, opportunities and trends. Data profiling can help organizations detect potential data patterns that could indicate compliance risks or market opportunities.

Data profiling challenges

Although the objectives of data profiling are straightforward, the process comes with several challenges:

- Complexity. The actual work involved in data profiling is quite complex, with multiple tasks occurring from data ingestion through its warehousing. That complexity is one of the challenges organizations encounter when trying to implement and run a successful data profiling program.

- Volume of data. The sheer volume of data being collected by a typical organization is another challenge, as is the range of sources -- from cloud-based systems to endpoint devices deployed as part of an internet-of-things ecosystem -- that produce data.

- Velocity. The speed at which data enters an organization creates further challenges to having a successful data profiling program, as it creates hurdles like having to process constantly changing data at large volumes.

- Data prep challenges. Data preparation is even more difficult in organizations that have not adopted modern data profiling tools and still rely on manual processes for large parts of this work.

- Resources. Organizations that lack adequate resources -- including trained data professionals, tools and funding for them -- will have a harder time overcoming these challenges.

Those same elements, however, make data profiling more critical than ever to ensure that the organization has the quality data it needs to fuel intelligent systems, customer personalization, productivity-boosting automation projects and more.

Examples of data profiling

Data profiling can be implemented in various use cases where data quality is important.

For example, projects involving data warehousing or business intelligence might require gathering data from multiple disparate systems or databases for one report or analysis. Applying data profiling to these projects can help identify potential issues and corrections that need to be made in extract, transform and load (ETL) jobs and other data integration processes before proceeding.

Additionally, data profiling is crucial in data conversion or data migration initiatives that involve moving data from one system to another. Data profiling can help identify data quality issues that might get lost in translation or adaptations that must be made to the new system prior to migration.

Any organization that deals with large volumes of data can also benefit from data profiling. For example, a storefront could use data profiling to collect and analyze data from its point-of-sale systems.

Data profiling tools

Data profiling tools replace much, if not all, of the manual effort of this function by discovering and investigating issues that affect data quality, such as duplication, inaccuracies, inconsistencies and incompleteness.

These technologies work by analyzing data sources and linking them to their metadata to allow further investigation of errors.

Furthermore, they offer data professionals quantitative information and statistics around data quality, typically in tabular and graph formats.

Data management applications, for example, can manage the profiling process through tools that eliminate errors and apply consistency to data extracted from multiple sources without the need for hand coding.

Such tools are essential for many, if not most, organizations today as the volume of data they use for their business activities significantly outpaces even a large team's ability to perform this function through mostly manual efforts.

Data profiling tools also generally include data wrangling, data gap and metadata discovery capabilities, as well as the ability to detect and merge duplicates, check for data similarities and customize data assessments.

A sampling of data profiling tools includes the following:

- Apache Griffin.

- Ataccama ONE.

- DataCleaner.

- Datameer.

- Informatica.

- SAP Data Services.

Numerous other data profiling tools are also available.