A close look at different types of hyper-converged architectures

Learn more about the major kinds of hyper-converged infrastructure available as we explore the evolution of HCI from basic storage-compute nodes to newer disaggregated types.

What started as a basic conglomeration of servers and storage has fractured into a variety of options. I'm talking about hyper-converged infrastructure, which first came on the scene in relatively simple packaging but, with the passage of time, has gone from a one-size-fits-all offering to a series of options for enterprises to consider.

This article presents an overview of the evolution of hyper-converged architectures. It defines the various HCI types available, shows what each looks like and presents the pros and cons of each.

Original HCI architecture

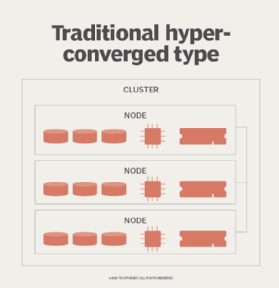

In its original form, HCI smashed together servers and storage, essentially replacing stand-alone storage arrays with servers -- called nodes -- infused with a lot of disks. In these conglomerations, the storage layer went from acting as an independent resource with separate management tools to one brought under the capable auspices of the hypervisor or some other software-defined paradigm. The figure above shows a cluster of three such nodes. Notice that storage, compute and RAM peacefully coexist in the same server node.

The two big choices these early HCI buyers had to make revolved around the following:

- How would storage be managed? Would the hyper-converged platform use a controller virtual machine of some kind, or would it use a kernel-integrated storage management system? The former provided more flexibility in hypervisor choice, while the latter, at the time, eked out a little more performance.

- Hardware or software? The choice here is whether to buy an HCI in a done-for-you appliance form factor or as just a piece of software to install on existing servers.

Of course, no matter the era of hyper-converged architectures, the hardware vs. software hyper-convergence question has remained, even as HCI itself has evolved. Evolved? Yes. Read on.

The evolutionary splintering of HCI

Homo sapiens weren't the only humanoids on the planet at one time, and likewise, that original hyper-converged architecture has continued to evolve beyond its original form. Although the primordial HCI type remains a force to reckon with, newer variations provide some interesting options for people to consider.

Let's start with a look at what's considered to be traditional HCI. We still have servers and storage combined and ruling the data center, but vendors have brought a lot more to the hyper-converged equation than what was originally available.

For example, Scale Computing takes its own approach to hyper-convergence with Scribe, a storage management layer managed by the OS. Scribe provides many of the same benefits as other HCI systems but with a fraction of the typical resource overhead.

Nutanix, meanwhile, has brought its AHV hypervisor to the forefront of the market. The HCI vendor also enables customers to mix and match AHV and vSphere to add storage capacity to their clusters without incurring VMware licensing penalties. VMware has quickly brought its vSAN offering to the forefront of storage technology by, for example, providing software-defined storage to several different types of HCI clusters, including the stretched cluster.

Pivot3, one of the more distinctive HCI players, brings to the market a top-to-bottom NVMe data path that improves storage performance. Hewlett Packard Enterprise's (HPE) SimpliVity offers customers an HCI and data protection mashup that brings enviable levels of data reduction.

And that's just with traditional approaches to hyper-converged architectures.

New evolutionary lines of hyper-convergence

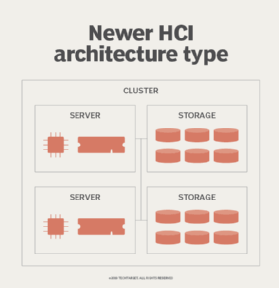

Today, we're seeing the emergence of a new class of hyper-convergence some are calling disaggregated HCI. Others refer to this new class as Tier 1 hyper-convergence. Whatever you choose to call it, these newer hyper-converged architectures look different.

In these HCI platforms, servers and storage are once again physically separate entities. They're bound together with a software layer that makes them appear to be a single entity, however -- at least in theory.

The vendors that have chosen this new hyper-converged architecture route -- Datrium, NetApp and, most recently, HPE with its Nimble-centric dHCI platform -- have done so to enable customers to once again independently scale compute and storage. By doing this, these companies do away with HCI's linear scalability, which has been a common complaint for some but a key benefit for others.

Original HCI scaled all resources in lockstep. When you added storage, for example, you also added RAM and compute, whether you needed them or not. In the figure above, you can see that HCI systems based on this newer architecture look more like traditional approaches to infrastructure than their original HCI cousins do.
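
To make the lockstep-versus-independent scaling difference concrete, here is a minimal sizing sketch in Python. Everything in it is an assumption for illustration: the `Demand` class, the two planning functions and the per-node capacities are hypothetical and don't come from any vendor's sizing tool. It counts whole nodes and ignores real-world factors such as replication overhead and failure headroom.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Demand:
    """Hypothetical workload requirement (illustrative only)."""
    cores: int
    ram_gb: int
    storage_tb: int


def lockstep_plan(demand, node_cores, node_ram_gb, node_storage_tb):
    """Original HCI: every node adds compute, RAM and storage together,
    so the scarcest resource dictates the node count."""
    nodes = max(
        math.ceil(demand.cores / node_cores),
        math.ceil(demand.ram_gb / node_ram_gb),
        math.ceil(demand.storage_tb / node_storage_tb),
    )
    return {
        "nodes": nodes,
        "cores": nodes * node_cores,
        "ram_gb": nodes * node_ram_gb,
        "storage_tb": nodes * node_storage_tb,
    }


def disaggregated_plan(demand, node_cores, node_ram_gb, storage_node_tb):
    """Disaggregated HCI: compute nodes and storage nodes are sized separately."""
    compute_nodes = max(
        math.ceil(demand.cores / node_cores),
        math.ceil(demand.ram_gb / node_ram_gb),
    )
    storage_nodes = math.ceil(demand.storage_tb / storage_node_tb)
    return {
        "compute_nodes": compute_nodes,
        "storage_nodes": storage_nodes,
        "cores": compute_nodes * node_cores,
        "ram_gb": compute_nodes * node_ram_gb,
        "storage_tb": storage_nodes * storage_node_tb,
    }


# A storage-heavy workload with made-up numbers: modest compute, lots of capacity.
demand = Demand(cores=64, ram_gb=1024, storage_tb=200)
print(lockstep_plan(demand, node_cores=32, node_ram_gb=512, node_storage_tb=20))
print(disaggregated_plan(demand, node_cores=32, node_ram_gb=512, storage_node_tb=20))
```

With these made-up figures, the lockstep plan needs 10 nodes to reach 200 TB and ends up with 320 cores against a 64-core requirement. The disaggregated plan meets the same demand with two compute nodes and 10 storage nodes. The flip side is that the lockstep plan involves one kind of building block to buy and manage, which is the simplicity some buyers value.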

Choosing the right hyper-converged architecture

So, which type of HCI is the right solution for you? It depends on your needs. If you want ultrasimplicity, the original hyper-converged architecture provides that in abundance. If you need more resource flexibility, newer architectural options might fit the bill.

Analyze your workloads and your resourcing situation, and get proofs of concept up and running from your HCI vendor shortlist. Then, it will be possible to make the decision that's right for your workloads and your company.