Hyper-converged edge infrastructure targets carriers, ROBOs

Hyper-converged systems provide a way to locally process IoT data and deliver streaming content at the network's edge while maintaining service and application performance.

Edge infrastructure has been on the minds of many IT professionals, and hyper-convergence has stood out among infrastructure paradigms as a way to meet the technology requirements that make edge computing possible. In this article, we will explore the benefits of hyper-convergence, as well as the vendors and hyper-converged edge products available to help you meet the computing needs at the periphery of your network.

IT has a decades-long history of oscillating between enterprise architectural archetypes, shifting from extremely centralized to highly distributed infrastructure. As with most things in life, the ideal usually lies somewhere in the middle -- shifting over time depending upon the available technology, networking capabilities and workload characteristics. We've been going through an era of centralization over the past decade, fueled by the emergence, evolution and unrelenting efficiency of cloud services coupled with the globalization of customers, suppliers and employees, thanks to the internet's ubiquity.

As we entered a new decade, there were already signs of strain in the cloud-centric model, even before the coronavirus crisis caused the dissolution of the workplace and made remote, distributed operations a business requirement. The proximate cause of cloud fatigue is a deluge of data as digitally transformed enterprises struggle to cope with an explosion in device, application and transaction telemetry. With most of the data created and eventually used outside corporate data centers or cloud environments, the cost and network capacity required to consolidate, analyze and redistribute it grew increasingly prohibitive.

Instead, organizations began looking for ways to store, process and summarize data closer to its source and to the eventual consumers of data-hungry applications. Thus, edge computing was born, and, with it, two of its most logical usage scenarios: remote offices or worksites, and telco carrier infrastructure.

IT's shifting center of gravity to edge infrastructure

Analyst estimates and vendor product and marketing activity demonstrate the rising interest in edge computing, with hyper-converged infrastructure (HCI) being the preferred platform. For example, Gartner predicted the number of so-called micro data centers will quadruple over the next five years, driven by the nexus of HCI, 5G and virtual infrastructure technology. Furthermore, Gartner estimated that over the same period, 80% of enterprises will decommission traditional data centers in favor of a combination of cloud and edge infrastructure.

These trends bolster an already vibrant hyper-convergence market, with IDC estimating total sales of hyper-converged systems at $2 billion in Q3 of 2019, up almost 19% from the prior year. Research and Markets projected HCI equipment and software sales to grow at more than 33% annually for the next several years, reaching $27 billion in global sales by 2025.
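To put these growth figures in perspective, the following Python sketch works through the arithmetic behind them. It is only an illustration: the function names are hypothetical, the rates are the rounded figures quoted above, and a constant annual growth rate is assumed, which the analysts do not claim.

```python
def prior_period_value(current: float, growth_rate: float) -> float:
    """Value one period earlier, given the current value and its growth rate."""
    return current / (1.0 + growth_rate)


def compound(value: float, annual_rate: float, years: int) -> float:
    """Value after compounding at a constant annual rate for `years` years."""
    return value * (1.0 + annual_rate) ** years


def implied_annual_rate(multiple: float, years: int) -> float:
    """Constant annual rate that multiplies a quantity by `multiple` in `years`."""
    return multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # $2 billion in Q3 2019, up almost 19% year over year (IDC figure above).
    print(f"Implied Q3 2018 sales: ${prior_period_value(2.0, 0.19):.2f} billion")

    # 33% annual growth, compounded over five years (assumed horizon).
    print(f"Five-year multiple at 33%: {compound(1.0, 0.33, 5):.2f}x")

    # Gartner's quadrupling of micro data centers over five years.
    print(f"Annual rate implied by 4x in 5 years: {implied_annual_rate(4.0, 5):.1%}")
```

Seen this way, a quadrupling of micro data centers over five years and a market compounding at roughly 33% a year describe much the same pace of growth.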

Depending on whether the market is measured by system sales or by the underlying software stack, IDC estimated the Dell EMC-VMware family garners 35% to 44% of total revenue, followed by Nutanix and Cisco. However, the market remains diverse, with 37% of hyper-converged system sales going to OEM or white box manufacturers.

Over the past year, virtually all of these vendors have introduced new HCI systems for the edge. For example, Lenovo in April announced a ThinkSystem variant, the MX1021, that is designed for edge workloads and bundles a single-socket 1U server with Microsoft Azure Stack HCI.

Use cases and benefits of hyper-converged edge infrastructure

Two of the main catalysts and use cases for hyper-converged growth are VDI -- the first enterprise HCI use case -- and, more recently, container infrastructure. The focus here is edge infrastructure, however, and the primary force behind hyper-convergence at the edge is data. As a result, most edge computing scenarios involve equipment, applications and users that remotely generate and consume a lot of data.

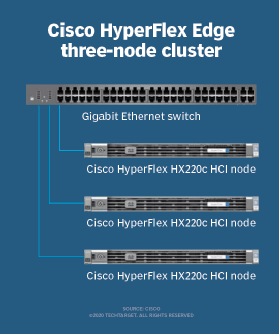

Perhaps the most common edge scenario for HCI is the remote office/branch office (ROBO), in which one to three compact systems with associated virtualization or container stacks serve as what Gartner calls a micro data center; three systems are used in situations requiring high availability. Indeed, the addition of SD-WAN and virtual network appliances, aka virtual network functions (VNFs), means that a hyper-converged system can also replace a branch office router and firewall. Here, hyper-converged edge systems are used to locally deliver centrally managed applications and cache data stored on large data center arrays or public cloud services.

Outside the office, the density of hyper-converged systems makes them ideal in many industrial environments, such as the following:

- Manufacturing and warehouse control, quality assurance and data analysis

- Resource extraction (oil and gas wells, mines) site monitoring

- Power distribution, control and analysis

Hyper-converged infrastructure for edge computing purposes is also appealing for smart city infrastructure and heavy users of road infrastructure, such as delivery and ride-sharing companies. Here, HCI can power applications such as traffic management, which increasingly uses machine learning; route planning; and, eventually, networks of autonomous vehicles. In these scenarios, hyper-converged infrastructure serves as a gateway for intelligent sensors and vehicles, collecting and analyzing data to optimize system operations.

For carriers, hyper-convergence at the edge can offload increasingly busy backbone networks by positioning systems in cellular base stations, office or apartment complexes, or central offices. As in the ROBO scenario, HCI systems can deliver network services (aka VNFs), streaming content (aka content delivery networks) and real-time gaming applications.

Typical hyper-converged edge infrastructure system configuration

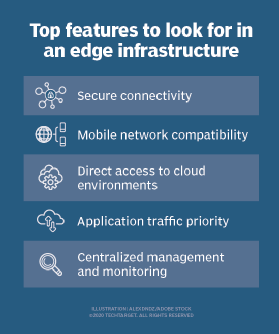

Edge infrastructure systems are reasonably representative of other HCI deployments in that the system configuration can vary according to the workload. Edge implementations also adopt a scale-out design that adds nodes to increase capacity and redundancy. Thus, most hyper-converged edge infrastructure configurations include the following:

- 1U or 2U server, sometimes with dual half-width nodes per chassis.

- One or two sockets (1S or 2S) per node using multi-core x86 (Intel Xeon or AMD EPYC) processors with up to 28 (Intel) or 48 (AMD) cores per node.

- 16 to 24 DDR4 memory slots supporting up to 192 GB.

  - New Xeon-based systems also support non-volatile persistent memory (Intel Optane).

- Eight to 24 small form factor (2.5-inch) drive bays, typically using SAS, SATA or NVMe SSDs.

  - Often includes one or two internal M.2 NVMe storage slots for boot device and drive caching.

- Two to four 10 Gigabit Ethernet or dual 25 GbE network interfaces.

- Two to six Gen3 PCIe slots.

  - One to two typically used for Nvidia GPUs to accelerate machine or deep learning workloads.

- Integrated baseboard management controller with a single 1 GbE interface for out-of-band management.

- Dual power supplies and fans.
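As a rough illustration of the scale-out design described above, the following Python sketch models a node with a few of the listed attributes and totals the resources of a small cluster, both intact and with one node offline. The `EdgeNode` class, its field names and the 2 TB drive size are hypothetical choices for the example, not a vendor specification.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class EdgeNode:
    """One hyper-converged node (hypothetical model, illustrative values only)."""
    sockets: int              # one or two per node
    cores: int                # total cores in the node
    memory_gb: int            # installed DDR4 memory
    drive_bays: int           # populated 2.5-inch bays
    drive_capacity_tb: float  # assumed capacity of each drive

    @property
    def raw_storage_tb(self) -> float:
        return self.drive_bays * self.drive_capacity_tb


def cluster_totals(nodes: List[EdgeNode]) -> Dict[str, float]:
    """Sum the resources of all nodes; scale-out means adding entries to the list."""
    return {
        "nodes": len(nodes),
        "cores": sum(n.cores for n in nodes),
        "memory_gb": sum(n.memory_gb for n in nodes),
        "raw_storage_tb": sum(n.raw_storage_tb for n in nodes),
    }


if __name__ == "__main__":
    node = EdgeNode(sockets=1, cores=28, memory_gb=192,
                    drive_bays=8, drive_capacity_tb=2.0)
    cluster = [node] * 3  # three nodes, as in a high-availability ROBO setup

    print("All nodes up:  ", cluster_totals(cluster))
    # Resources that remain available if any single node fails.
    print("One node down: ", cluster_totals(cluster[:-1]))
```

The totals are raw figures. Usable capacity will be lower once the HCI software reserves space for replication or erasure coding, which varies by product.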

HCI as edge infrastructure for telecom

As mentioned, a promising scenario for hyper-convergence at the edge is to offload carrier backbone networks and provide network services to mobile devices consuming rich content like HD and 4K video, online games for vehicles, and remote equipment using 5G networks for streaming telemetry. Carriers see traditional HCI configurations as appropriate in cellular base stations and corporate offices, with smaller form factors useful for universal customer premises equipment devices at remote sites, offices and even homes. Indeed, the versatility of a hyper-converged system is a significant benefit in a post-pandemic world where most employees are working outside traditional offices yet require the same set of enterprise network services and level of security.

For example, the Dell Virtual Edge Platform includes both 1U devices, with four- to 18-core Xeon D-2100 processors, and small form factor devices, approximately 8 inches square with four- to 16-core Atom C3000 processors, that can act as a branch office router, VNF host and SD-WAN endpoint. Similar systems in ruggedized housings are designed as IoT gateways that aggregate and process data from scores of local sensors, eliminating the need to send raw data to the cloud or a central data center.
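The gateway idea of aggregating locally and forwarding only summaries can be sketched in a few lines of Python. This is a minimal illustration under assumed conditions: the `summarize` function, the sensor names and the synthetic readings are all hypothetical, and a real gateway would also handle time windows, buffering and transport.

```python
import json
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple


def summarize(readings: Iterable[Tuple[str, float]]) -> Dict[str, dict]:
    """Collapse raw (sensor_id, value) readings into one summary per sensor."""
    by_sensor = defaultdict(list)
    for sensor_id, value in readings:
        by_sensor[sensor_id].append(value)
    return {
        sensor_id: {
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": round(mean(values), 3),
        }
        for sensor_id, values in by_sensor.items()
    }


if __name__ == "__main__":
    # Synthetic data: three sensors, 100 samples each.
    raw = [(f"sensor-{i % 3}", 20.0 + (i % 7) * 0.5) for i in range(300)]
    summary = summarize(raw)

    # Compare what would cross the WAN in each case, serialized as JSON.
    raw_bytes = len(json.dumps(raw))
    summary_bytes = len(json.dumps(summary))
    print(f"Raw payload:     {raw_bytes} bytes")
    print(f"Summary payload: {summary_bytes} bytes")
```

The summary payload stays the same size however many samples arrive in a window, while the raw payload grows with every reading. That gap is the bandwidth saving that makes local processing attractive.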

Enterprises and carriers must plan how to handle the network and computing capacity demands of an exploding population of remote workers, 5G phones and smart devices. As organizations look for ways to locally process IoT data and deliver streaming content, hyper-converged edge infrastructure systems will prove invaluable to maintaining superior network service and application performance.