Dive into the history of server hardware

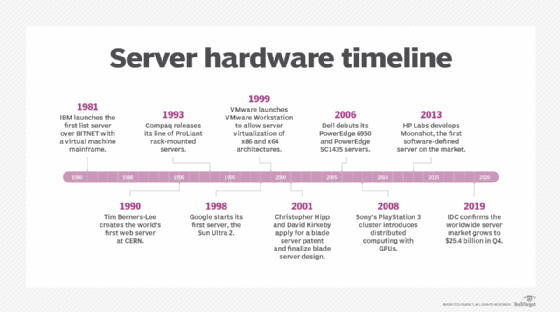

Server hardware has consistently evolved since the first web server in 1990. Here's a look at major advancements in management, virtualization and green initiatives.

Millions of servers exist worldwide, many of which are hidden away in server farms and data centers. But have you ever considered the technology's origins?

The history of server hardware is fascinating because it reveals how rapidly technology evolves and the role servers have played in the development of data centers.

Before reviewing the history of servers, it's important to understand what a server is and does. In its most basic form, a server is a computer program or device that provides a service to another program or device, known as the client.

A server can perform a range of tasks, from sharing hardware or software resources with a client to securely moving files between computers. Servers are built with powerful processing, memory and storage components, but what separates a server from an ordinary computer is the service it provides, not the hardware that makes up the machine.

Today, there are many types of servers, such as application servers, proxy servers, file servers, policy servers and virtual servers. This history, however, begins with one of the most notable events in the field: the invention of the world's first web server in 1990.

1990: The world's first web server

In 1989, Tim Berners-Lee, a British engineer and computer scientist, invented the World Wide Web at the European Organization for Nuclear Research (CERN). He developed the World Wide Web to meet the need for automated information sharing between scientists across the world.

By Dec. 25, 1990, Berners-Lee had set up the world's first web server on a NeXT computer; the machine had a 2 GB disk, a grayscale monitor and a 25 MHz CPU. The computer is still at CERN and bears a big white label on its front that reads, "This machine is a server. Do not power it down!"

The first webpage ever created contained information about the World Wide Web project and technical details on how to set up a web server.

In December 1991, the first web server outside Europe was installed in California at the Stanford Linear Accelerator Center, and by late 1992, the World Wide Web project had expanded to include a list of other web servers available at the time.

In 1993, CERN put the World Wide Web software into the public domain, which accelerated its growth. More than 500 known web servers existed worldwide by December 1993, and the number kept multiplying: by the end of 1994, there were more than 10,000 web servers and more than 10 million users.

1993: The development of rack servers

The proliferation of server technology led to the development of rackmounted servers, one of the earliest being Compaq's ProLiant series, released in 1993. A rack framework comprises multiple mounting slots, each designed to hold a server. Because a single rack can stack multiple servers, the machines take up less floor space.

This helped organizations fit even more servers into smaller spaces. However, packing racks into a confined area can cause excessive heat buildup, which requires specialized cooling systems to maintain optimal temperatures.

Around this time, companies acquired more technology and consolidated their servers and equipment into single rooms, colloquially called server rooms. These were often unused or older spaces within a company's building. Eventually, organizations started to design rooms specifically for servers, with temperature monitoring and security in mind. This change in infrastructure paved the way for the modern data center.

2001: The first commercialized blade server

In 2000, Christopher Hipp and David Kirkeby applied for the blade server patent. One year later, RLX Technologies, the company where Hipp and Kirkeby worked, released the first commercially available blade server.

Blade servers were a step forward in the history of server hardware because they addressed several limitations of rackmounted servers. Each blade omits components such as power supplies and cooling fans, relying on shared infrastructure instead, which reduces power consumption and saves space.

Blade servers fit within a blade enclosure, or chassis, which can hold multiple blade servers at once. The enclosure provides shared services such as power, cooling and networking, and each enclosure can be rackmounted.

With blade servers, the hardware became smaller but just as powerful, and companies could increase the density of dedicated servers within a data center. These efficiency gains enabled organizations to use computing resources more effectively and strategically.

2005: The emergence of different server management techniques

After the blade server's invention, the focus shifted from creating new hardware to managing it for better performance and efficiency. Server clusters, for example, provide higher uptime. A server cluster is a group of servers that work together as a single system. If one server experiences an outage, the cluster transfers its workload to another server, avoiding downtime on the front end.

Out-of-band management, also known as remote management or lights-out management, also gained traction. With lights-out management, an IT team can manage, configure and monitor servers without physically stepping into a data center. This approach further improves efficiency and reduces the number of IT administrators required to manage a server room.

2013: The world's first software-defined server

In 2013, HP Labs developed Moonshot, the world's first software-defined server. Compared to traditional servers, Moonshot servers run on low-energy microprocessors and use less power and space. They were designed to handle specific data center workloads, such as large-scale data processing and high-performance cloud computing.

Around this time, another trend became widespread: virtualization. Virtualization software divides a single physical server into multiple virtual servers, each with the capabilities of a hardware-based server; cloud servers are virtual servers hosted by a provider. Virtual servers suit highly variable workloads, so organizations with fluctuating needs might prefer the flexible scaling that cloud servers provide. The technology also removes much of the burden of physical server management.

The future of servers

As data centers grow to address more diverse IT infrastructures, servers must evolve to meet increased demands in volume, performance and efficiency.

The future of data centers includes green initiatives. Data centers consume large amounts of electricity and nonrenewable resources, which has an environmental impact. Companies like Microsoft, AWS and Google have made commitments to green energy, and they recycle and reuse computing components to build new ones.

Green data centers aim to minimize their building footprints and e-waste while relying on alternative energy sources. As a result, server hardware will likely become smaller, more compact and simpler, with a big focus on virtualization. It will be an interesting space to watch, and if the history of servers is any guide, the next big step forward is already in development.

Jacob Roundy is a freelance writer and editor, specializing in a variety of technology topics, including data centers and sustainability.