What is a kernel?

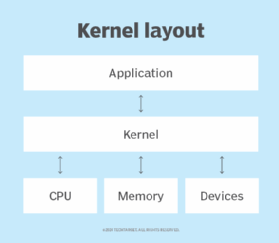

A kernel is the essential foundation of a computer's operating system (OS). It's the core that provides basic services for all other parts of the OS. It's the main layer between the rest of the OS, along with the applications it runs, and the underlying computer hardware, and it helps with tasks such as process and memory management, inter-process communication, file system management, device control and networking.

During normal system startup, a computer's basic input/output system, or BIOS, completes a hardware bootstrap or initialization. It then runs a bootloader, which loads the kernel from a storage device -- such as a hard drive -- into a protected memory space. Once the kernel is loaded into computer memory, the bootloader transfers control to the kernel. The kernel then initializes itself and starts other OS components to complete the system startup and make control available to users through a desktop or other user interface.

If the kernel is damaged or can't load successfully, the computer won't be able to start completely -- if at all. Service will be required to correct hardware damage or to restore the OS kernel to a working version.

What is the purpose of the kernel?

In broad terms, an OS kernel performs the following three primary jobs:

- Provides the interfaces needed for users and applications to interact with the computer.

- Launches and manages applications.

- Manages the underlying system hardware devices.

In more granular terms, accomplishing these three kernel functions involves a range of computer tasks, including the following:

- Loading and managing less-critical OS components, such as device drivers.

- Organizing and managing threads and the various processes spawned by running applications.

- Scheduling which processes get to run on the CPU, and for how long, and supervising them while they run.

- Deciding which nonprotected user memory space each application process uses.

- Handling conflicts and errors in memory allocation and management.

- Managing and optimizing hardware resources and dependencies, such as central processing unit (CPU) and cache use, file system operation and network transport mechanisms.

- Managing and accessing I/O devices such as keyboards, mice, disk drives, USB ports, network adapters and displays.

- Handling application system calls and hardware interrupts from devices, often with the help of device drivers.

Scheduling and management are central to the kernel's operation. Each CPU core can execute only one stream of instructions at a time. However, a computer's OS components and applications can spawn dozens and even hundreds of processes that the computer must host. It's impossible for all those processes to use the computer's hardware -- such as a memory address or CPU instruction pipeline -- at the same time. The kernel is the central manager of these processes. It knows which hardware resources are available and which processes need them. It then allocates time for each process to use those resources, switching among processes quickly enough that they appear to run simultaneously.
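To make the time-slicing idea concrete, here is a minimal sketch of round-robin scheduling, one common policy in which each ready process gets a fixed time slice (quantum) in turn. It assumes a single CPU core and describes each process only by the CPU time it still needs; the process names and the quantum value are hypothetical, and real kernel schedulers, such as Linux's, use considerably more elaborate policies.

```python
from collections import deque


def round_robin(burst_times: dict[str, int], quantum: int) -> list[tuple[str, int, int]]:
    """Simulate round-robin scheduling on one CPU core.

    burst_times maps a (hypothetical) process name to the CPU time it still needs.
    Returns the timeline as a list of (process, start, end) slices.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    ready = deque(burst_times.items())  # ready queue, in arrival order
    clock = 0
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)  # run until the slice expires or the process finishes
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            ready.append((name, remaining - run))  # preempted: back of the queue
    return timeline


for name, start, end in round_robin({"editor": 5, "browser": 3, "backup": 4}, quantum=2):
    print(f"{start:2}-{end:2}: {name}")
```

Each process is preempted when its slice runs out and rejoins the back of the queue, so no single process can monopolize the core.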

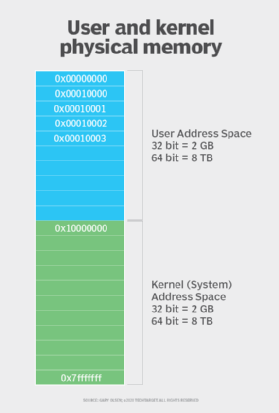

The kernel is critical to a computer's operation and requires careful protection within the system's memory. The kernel space it loads into is a protected area of memory. That protected memory space ensures other applications and data don't overwrite or impair the kernel, causing performance problems, instability or other negative consequences. Instead, applications are loaded and executed in a generally available user memory space.
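One way to picture how that protection is enforced: on most modern processors, the memory management hardware checks permission flags on each page of memory, and pages belonging to the kernel are marked as accessible only when the processor is in kernel (supervisor) mode. The following is a toy model of that check, not a description of any specific processor; the page table layout, the 4 KiB page size and the `PageFault` exception are simplifications assumed for illustration.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096  # a common page size; assumed for this toy model


class PageFault(Exception):
    """Toy stand-in for the hardware fault raised on an illegal access."""


@dataclass
class PageEntry:
    present: bool
    user_accessible: bool  # False means a supervisor-only (kernel) page
    writable: bool


def check_access(page_table: dict[int, PageEntry], address: int, *, write: bool, kernel_mode: bool) -> None:
    """Raise PageFault if the access would be rejected; return None if it's allowed."""
    entry = page_table.get(address // PAGE_SIZE)
    if entry is None or not entry.present:
        raise PageFault(f"page not mapped: {address:#x}")
    if not kernel_mode and not entry.user_accessible:
        raise PageFault(f"user mode cannot access kernel page: {address:#x}")
    if write and not entry.writable:
        raise PageFault(f"page is read-only: {address:#x}")


# Hypothetical layout: page 0 holds user data, page 1 holds kernel data.
table = {0: PageEntry(True, True, True), 1: PageEntry(True, False, True)}
check_access(table, 0x0010, write=True, kernel_mode=False)  # user touching its own page: allowed
check_access(table, 0x1010, write=True, kernel_mode=True)   # kernel touching its own page: allowed
try:
    check_access(table, 0x1010, write=True, kernel_mode=False)  # user touching a kernel page
except PageFault as err:
    print("fault:", err)
```

In a real system, the fault is raised by the hardware and handled by the kernel, which typically terminates the offending application rather than letting it touch kernel memory.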

A kernel is often contrasted with a shell, which is the outermost part of an OS that interacts with user commands. Kernel and shell are terms used more frequently in Unix OSes than in IBM mainframe and Microsoft Windows systems.

A kernel isn't to be confused with a BIOS, which is an independent program stored on a chip within a computer's circuit board.

What are device drivers?

A key part of kernel operation is communication with hardware devices inside and outside of the physical computer. However, it isn't practical for a kernel to include built-in support for every possible device in existence. Instead, kernels rely on device drivers, which add kernel support for specific devices, such as printers and graphics adapters.

When an OS is installed on a computer, the installation adds device drivers for any specific devices detected within the computer. This helps tailor the OS to the specific system with just enough components to support the devices present. When a new or better device replaces an existing device, the device driver also needs to be updated or replaced.

There are several types of device drivers. Each addresses a different data transfer type. Some of the main driver types include the following:

- Character device drivers. These implement open, close, read and write operations and give user space access to data as a stream of bytes.

- Block device drivers. These provide device access for hardware that transfers randomly accessible data in fixed blocks.

- Network device drivers. These transmit data packets for hardware interfaces that connect to external systems.
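On Unix-like systems, character and block devices usually appear as special files under /dev, and their type is recorded in the file's mode bits. This sketch reads those bits with Python's `os.stat` and the `stat` module's `S_ISCHR` and `S_ISBLK` helpers. Which device paths exist depends on the system; /dev/null is used because it's a character device on typical Linux and macOS installations, and the other paths are only tried if present. Network interfaces generally have no /dev entry on Linux, so this check doesn't cover network device drivers.

```python
import os
import stat


def device_kind(path: str) -> str:
    """Report whether path is a character device, a block device or something else."""
    mode = os.stat(path).st_mode  # follows symlinks, as most tools do
    if stat.S_ISCHR(mode):
        return "character device"
    if stat.S_ISBLK(mode):
        return "block device"
    if stat.S_ISREG(mode):
        return "regular file"
    return "other"


for candidate in ["/dev/null", "/dev/sda", "/etc/hostname"]:
    if os.path.exists(candidate):  # device names vary between systems
        print(candidate, "->", device_kind(candidate))
```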

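Device drivers are also classified by the processor mode they run in: kernel or user. A kernel mode device driver runs with the kernel's full privileges, either built into the kernel or loaded into it as a module. Drivers for core hardware, such as storage controllers and motherboard chipsets, typically run this way, and a bug in one can crash the entire system.

User mode device drivers run outside the kernel with restricted privileges and must go through the kernel to reach the hardware. They're commonly used for devices such as printers and some USB peripherals, and a failure usually affects only the driver rather than the whole OS. Some devices, such as graphics adapters, use drivers with both a user mode and a kernel mode component.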

Because the OS needs the kernel's code continuously, that code is usually loaded into a protected area of memory so that it won't be overwritten by less frequently used parts of the OS.

Kernel mode vs. user mode

Computer designers have long understood the importance of security and the need to protect critical aspects of the computer's behavior. Long before the internet, or even the emergence of networks, designers carefully managed how software components accessed system hardware and resources. Processors were developed to support at least two operating modes: kernel mode and user mode.

Kernel mode

Kernel mode refers to the processor mode that enables software to have full and unrestricted access to the system and its resources. Kernel mode is used by the OS kernel and its core components, including kernel mode device drivers, system call handlers and interrupt handlers.

User mode

All user applications and processes that aren't part of the OS kernel use user mode. This enables user-based applications, such as word processors or video games, to load and execute. The kernel prepares the memory space and resources for that application's use and launches the application within that user memory space.

User mode applications are less privileged and can't access system resources directly. Instead, an application running in user mode must make system calls to the kernel to access system resources. The kernel then acts as a manager, scheduler and gatekeeper for those resources and works to prevent conflicting resource requests.

When an application makes a system call, the processor switches to kernel mode while the kernel handles the request, then switches back to user mode so the application can continue running.
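Applications rarely issue system calls by hand; language runtimes and C libraries wrap them. On POSIX systems, Python's `os.open`, `os.write`, `os.read` and `os.close` are thin wrappers around the POSIX functions of the same names, each of which traps into the kernel through a system call. This sketch writes a file and reads it back using only those calls; the file name is arbitrary and the file is created in a temporary directory.

```python
import os
import tempfile


def write_then_read(path: str, data: bytes) -> bytes:
    """Write data to path and read it back using low-level, syscall-backed os functions."""
    # open: the kernel checks permissions and returns a small integer file descriptor.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        written = 0
        while written < len(data):  # write may accept fewer bytes than requested
            written += os.write(fd, data[written:])
    finally:
        os.close(fd)  # close: the kernel releases the descriptor

    fd = os.open(path, os.O_RDONLY)
    try:
        chunks = []
        while chunk := os.read(fd, 4096):  # read returns b"" at end of file
            chunks.append(chunk)
    finally:
        os.close(fd)
    return b"".join(chunks)


with tempfile.TemporaryDirectory() as tmp:
    print(write_then_read(os.path.join(tmp, "demo.txt"), b"hello, kernel"))
```

The application never touches the disk itself: it only passes a descriptor and a buffer, and the kernel does the privileged work in kernel mode.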

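Kernel and user modes are processor states and have nothing to do with the physical memory chips themselves. Kernel memory is protected only because the processor's memory management hardware marks it as accessible in kernel mode alone; the memory itself is no different from any other. And because code running in kernel mode has full access, kernel driver crashes and memory failures within the kernel memory space can still impair the OS and the computer.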

Types of kernels

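Kernels fall into the following architectures. The main difference among these types is how they divide OS services between kernel and user address spaces, which in turn affects their flexibility and performance:

- A microkernel keeps only minimal core functions in the kernel and runs most services, such as device drivers and file systems, in separate user space address spaces.

- A monolithic kernel runs the kernel and all OS services in the same kernel address space.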

- A hybrid kernel, such as the Microsoft Windows NT and Apple XNU kernels, attempts to combine the behaviors and benefits of microkernel and monolithic kernel architectures.

- A nanokernel focuses on providing minimal services limited to low-level hardware management, delegating most other services to higher-level modules.

- An exokernel exposes hardware resources directly to applications, giving them more control over hardware.

- A multikernel runs multiple kernel instances, each managing a different set of hardware resources, such as a processor core or a machine, an approach suited to multicore and distributed systems.

Overall, these kernel architectures present tradeoffs. For example, microkernels offer flexibility and fault isolation at the cost of message-passing overhead, while monolithic kernels offer speed at the cost of isolation and flexibility.

Some specific differences among kernel types include the following:

Microkernels

Microkernels run their services in address spaces separate from the kernel. The kernel and services communicate through message passing, which delivers data, signals and requests to the correct processes. Microkernels also provide greater flexibility than monolithic kernels; to add a new service, admins can run it as a new user space process instead of modifying the kernel.

Because of this isolation, microkernels can be more secure and more resilient than monolithic kernels. If one service fails, the kernel and the other services keep running.
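The message-passing style can be sketched in a few lines. In this toy model, which illustrates the idea rather than how any particular microkernel is implemented, the "kernel" only routes messages to registered servers, each represented by a handler function. Real microkernels isolate servers with separate hardware address spaces; here an exception in one handler stands in for a server crash, and the kernel reports the failure instead of going down with it. The `ToyMicrokernel` class, the server names and the message format are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Message:
    dest: str  # name of the destination server (hypothetical naming scheme)
    op: str  # requested operation
    payload: Any = None


class ToyMicrokernel:
    """Routes messages between servers; holds no file system or driver logic itself."""

    def __init__(self) -> None:
        self.servers: dict[str, Callable[[Message], Any]] = {}

    def register(self, name: str, handler: Callable[[Message], Any]) -> None:
        self.servers[name] = handler  # adding a service doesn't change the kernel code

    def send(self, msg: Message) -> tuple[str, Any]:
        handler = self.servers.get(msg.dest)
        if handler is None:
            return ("error", f"no server named {msg.dest!r}")
        try:
            return ("ok", handler(msg))
        except Exception as exc:  # stand-in for an isolated server crash
            del self.servers[msg.dest]  # drop the failed server; the others keep running
            return ("error", f"{msg.dest} crashed: {exc}")


def fs_server(msg: Message) -> Any:
    """Hypothetical file server holding one in-memory file."""
    files = {"/motd": "welcome"}
    if msg.op == "read":
        return files.get(msg.payload)
    raise ValueError(f"unsupported op {msg.op!r}")


def buggy_driver(msg: Message) -> Any:
    """Hypothetical driver that always fails."""
    raise RuntimeError("null pointer in driver")


kernel = ToyMicrokernel()
kernel.register("fs", fs_server)
kernel.register("net", buggy_driver)
print(kernel.send(Message("net", "send", b"ping")))  # the driver fails
print(kernel.send(Message("fs", "read", "/motd")))  # the file server still answers
```

In a monolithic kernel, the equivalent components would call each other directly inside one address space, which is faster but offers no such containment.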

Monolithic kernels

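Monolithic kernels are larger than microkernels because they run the kernel and all OS services, such as device drivers, file systems and networking, in the same kernel address space. Because these components call each other directly instead of exchanging messages, monolithic kernels avoid much of the communication overhead of microkernels and are generally faster. They're less flexible than microkernels, however. Traditionally, admins had to rebuild the entire kernel to support a new service, although modern monolithic kernels such as Linux can load some services, including many device drivers, as kernel modules at runtime.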

Monolithic kernels pose a greater security and stability risk to systems than microkernels because every service runs with full kernel privileges, so a fault in any one of them, such as a buggy driver, can crash the entire system. Microkernels, by contrast, keep far less code in kernel mode, which leaves less privileged code to audit and debug.

The Linux kernel is a monolithic kernel that's constantly growing; it had 20 million lines of code in 2018. At a high level, it's layered into a variety of subsystems. These main groups include a system call interface, process management, network stack, memory management, virtual file system and device drivers.

Developers can port the Linux kernel into their own OSes, and administrators can apply some kernel updates through live patching without rebooting. These features, along with the fact that Linux is open source, make it well suited to server systems and other environments that require maintenance without downtime.

Hybrid kernels

The XNU kernel was originally developed by NeXT for its NeXTSTEP OS as a hybrid of the Mach and Berkeley Software Distribution (BSD) kernels, and it came to Apple through Apple's acquisition of NeXT, announced in 1996. NeXTSTEP paired it with Objective-C application programming interfaces (APIs). Because it's a combination of the monolithic kernel and microkernel, it has increased modularity, and parts of the OS gain memory protection.

Hybrid kernels are used in most commercial OSes, including Windows and macOS. They're similar to microkernels but include additional code in kernel space meant to increase performance. They also enable faster development for third-party software.

Nanokernels

Nanokernels provide a minimal set of services limited to just low-level hardware management functions. This approach delegates almost all other operating system services, even basic ones such as managing interrupt controllers or timers, to device drivers. Nanokernels are designed to be portable, enabling them to run on several different hardware architectures. They also have a smaller attack surface, which can improve security.

Exokernels

Exokernels are unique in that they expose hardware resources directly to applications. Instead of abstracting hardware functionality like other kernel types, exokernels enable applications to implement their own abstractions and management policies. This means that application developers can manage resources in whatever way is most efficient for each program. Exokernels are typically paired with library OSes, which run in user space alongside applications and can export different APIs.

Exokernels give applications more control and flexibility, which can improve performance.

Multikernels

Multikernels use several different kernels to manage hardware resources. They're used either in multi-core machines or in distributed systems. In multi-core machines, multikernels manage each core as a separate entity with its own kernel instance.

In distributed systems, multikernels manage separate nodes, with each node running its own kernel instance. Kernel instances communicate over a network, providing a cohesive operating environment.

A multikernel approach optimizes the use of multi-core processors and distributed systems, which improves scalability and fault tolerance.
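The multikernel idea of kernel instances that coordinate only by exchanging messages can be illustrated with a toy model. Here each hypothetical `KernelInstance` keeps its own private replica of a piece of OS state (a table of which process runs on which core or node, invented for this sketch) and updates it only from messages in its inbox; no instance reads another's memory. This is a simplification that assumes reliable, in-order delivery and a single updater, and it ignores the agreement protocols a real system would need.

```python
from collections import deque


class KernelInstance:
    """One toy kernel instance per core or node, with private state and a message inbox."""

    def __init__(self, ident: int) -> None:
        self.ident = ident
        self.inbox: deque = deque()
        self.placement: dict[str, int] = {}  # local replica: process name -> core/node id

    def deliver(self) -> None:
        """Apply every pending update message to this instance's own replica."""
        while self.inbox:
            process, location = self.inbox.popleft()
            self.placement[process] = location


def broadcast(instances: list[KernelInstance], update: tuple[str, int]) -> None:
    """Send an update to every instance as a message instead of writing shared memory."""
    for inst in instances:
        inst.inbox.append(update)


kernels = [KernelInstance(i) for i in range(4)]
broadcast(kernels, ("db", 2))
broadcast(kernels, ("web", 0))
for k in kernels:
    k.deliver()
print(all(k.placement == {"db": 2, "web": 0} for k in kernels))  # True: the replicas agree
```

Because instances share nothing but messages, the same structure works whether the instances sit on cores of one machine or on separate nodes connected by a network.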

History and development of the kernel

Before the kernel, developers wrote programs that controlled the processor and hardware directly, instead of relying on an OS to handle interactions between hardware and software.

The first attempt to create an OS built around a message-passing kernel was the RC 4000 Multiprogramming System in 1969. Its designer, Per Brinch Hansen, found it was easier to create a small nucleus and then build up an OS on top of it, instead of converting existing OSes to be compatible with new hardware. This nucleus, or kernel, provided the basic mechanisms for process communication and coordination that the rest of the system relied on, so higher-level components no longer had to program the hardware directly.

Also in 1969, Bell Labs researchers began working on Unix, which radically changed OS and kernel development and integration. A core Unix goal was to build small utilities that each perform a specific task well, instead of system utilities that try to do many things at once. From a user standpoint, this simplifies creating shell scripts that combine simple tools.

As Unix adoption increased, the market started to see a variety of Unix-like computer OSes, including BSD, NeXTSTEP in the 1980s and Linux in 1991. Unix's structure, a compact kernel beneath a large set of reusable tools, written mostly in C so it could be moved to new hardware, became a model that many later systems followed.

Unix brought OSes to more individual systems, while researchers at Carnegie Mellon University pushed kernel technology further. From 1985 to 1994, they developed the Mach kernel. Unlike BSD, the Mach kernel was designed to be OS-agnostic and to support multiple processor architectures. Researchers made it binary-compatible with existing BSD software, enabling it to be available for immediate use and continued experimentation.

The Mach kernel's original goal was to be a cleaner version of Unix and a more portable version of Carnegie Mellon's Accent interprocess communication (IPC) kernel. Over time, the kernel brought new features, such as ports and IPC-based programs, and ultimately evolved into a microkernel.

Shortly after work on the Mach kernel began, in 1987, Vrije Universiteit Amsterdam professor Andrew Tanenbaum released MINIX (mini-Unix) for educational and research uses. This distribution contained a microkernel-based structure, multitasking, protected mode, extended memory support and an American National Standards Institute C compiler.

The next major advancement in kernel technology came in 1991 with the release of the Linux kernel. Linus Torvalds started it as a hobby project, and in 1992 he relicensed it under the GNU General Public License (GPL), making it open source.

Most OSes, and their kernels, can be traced back to Unix, but there's one major outlier: Windows. Early versions of Windows ran on top of Microsoft's DOS, which was popular on IBM-compatible PCs. Microsoft later developed the Windows NT kernel, first released in 1993, as a new design rather than one derived from Unix. Windows' DOS heritage is one reason writing commands for Windows differs from Unix-based systems.

In 2001, Apple released Mac OS X, which, unlike Windows, has Unix roots: it was built on the XNU kernel, which combines the Mach microkernel with BSD code.

The rise of smartphones in the late 2000s and 2010s also brought an increase in mobile OSes and mobile-specific kernels. Android is based on the Linux kernel, and Apple iOS is based on a variant of the Mac OS X kernel.
