Liquid cooling vs. air cooling in the data center

The quest to maintain operating temperatures for increasing computing densities has firms transitioning from air cooling to liquid cooling. We assess both methods.

Data centers continue to pack more computing power into smaller spaces to consolidate workloads and accommodate processing-intensive applications, such as AI and advanced analytics. As a result, each rack consumes more energy and generates more heat, putting greater pressure on cooling systems to ensure safe and efficient operations.

In the past, when rack power requirements remained well below 20 kilowatts (kW), data centers could rely on air cooling to maintain safe operating temperatures. But today's high-performing racks can easily exceed 20 kW, 30 kW or more. This is, in large part, because the computing systems within these racks are configured with CPUs and GPUs that have much higher thermal power densities than previous generations. Although some air cooling systems can support racks that require more than 20 kW of power, they are inefficient and complicated to maintain, causing organizations to look into liquid cooling.

There are many factors to consider when debating liquid cooling vs. air cooling. This article describes these two main types of data center cooling methodologies, compares their benefits and drawbacks, and then discusses what factors to consider when choosing between the two.

What is air cooling?

Data centers have used air cooling since their inception and continue to use it extensively. Although technologies have evolved over the years, with cooling systems growing ever more efficient, the basic concept has remained the same. Cold air is blown across or circulated around the hardware, carrying heat away as the warmed air is replaced with cooler air.

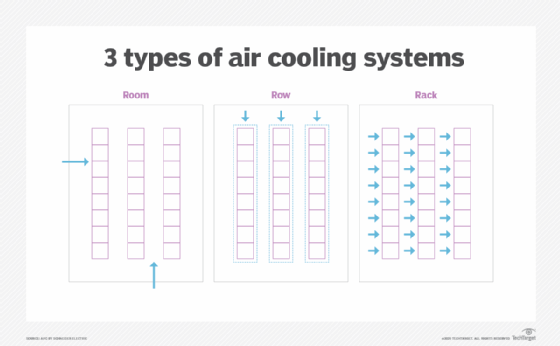

The main differences between air cooling systems lie in how they control airflow. The systems are generally categorized into three types: room-, row- and rack-based.

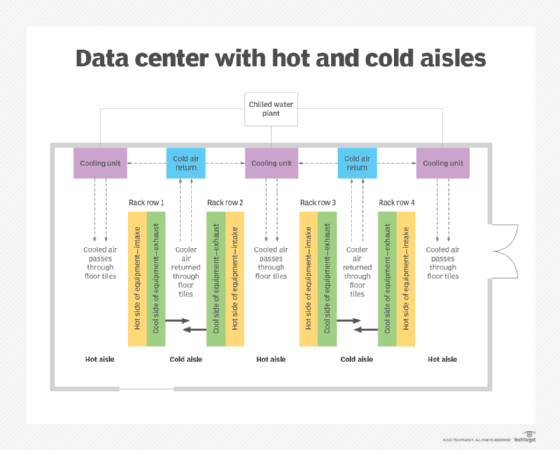

A room-based system uses computer room AC units to push chilled air into the equipment room. The air might be circulated around the entire room or vented through raised floors near the equipment. Many room-based systems now incorporate a hot and cold aisle configuration to better control airflow and target the equipment, helping conserve energy and lower costs. The configuration might also use some form of containment to better isolate the hot and cold aisles from each other.

Row-based cooling is more targeted than a room-based system. Each row contains dedicated cooling units that focus the airflow at specific equipment. Sometimes referred to as in-row cooling, the row-based approach improves cooling efficiency and reduces the amount of fan power required to direct airflow, helping to lower energy usage and costs. Row-based cooling can be implemented in different ways, such as positioning the cooling units between server racks or mounting them overhead.

A rack-based system takes this a step further by dedicating cooling units to specific racks, achieving even greater precision and efficiency than the other approaches to air cooling. The cooling units are often mounted on or within those racks. In this way, cooling capacity can be configured to meet a rack's specific requirements, leading to more predictable performance and costs. However, a rack-based system requires many more cooling units and increases overall complexity.

Pros of air cooling

Over the years, air cooling has proven to be an invaluable tool for protecting data center equipment. The technologies behind it are well understood, widely deployed and still used extensively in data centers around the world. Data center personnel are familiar with air cooling and what it takes to keep it running. Maintaining these systems is a straightforward process with plenty of industry experience behind it.

Cons of air cooling

Unfortunately, air cooling also presents several challenges. At the top of the list is its inability to meet modern workload demands. Air cooling simply can't keep up with the increased densities and heavy processing loads. At some point, the capital outlay for air cooling -- along with the added complexity -- can no longer be justified. Already, air cooling represents a significant percentage of data center Opex. Rising energy costs only exacerbate the issue.

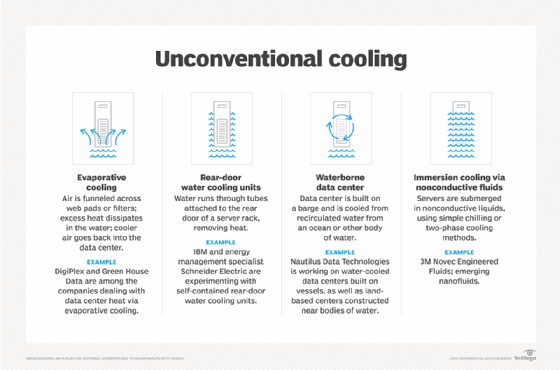

Water restrictions and costs can also present a challenge for air cooling systems that rely on evaporative cooling or cooling towers. In addition, higher computing densities translate to more cooling fans and pumps, making data centers so noisy that personnel must wear hearing protection.

The underlying problem is that air isn't an effective heat transfer medium, despite its widespread use, and a better cooling method is needed to meet modern workload demands.

What is liquid cooling?

Data centers have started to adopt liquid cooling for more than just mainframes and supercomputers. Water and other liquids are far more efficient at transferring heat than air -- anywhere between 50 and 1,000 times more efficient. Liquid cooling promises to help address many of the challenges that come with air cooling systems, especially as computing densities increase.
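To make the difference concrete, the following is a minimal sketch, not a sizing tool. It uses the standard sensible-heat relation Q = ρ · V̇ · c_p · ΔT to estimate how much air or water must flow past a 20 kW rack to carry its heat away. The fluid properties are approximate textbook values near room temperature, and the 12 K temperature rise is an assumed figure chosen only for illustration. The resulting flow ratio reflects volumetric heat capacity alone, so it is not the same measure as the efficiency range quoted above.

```python
# Rough sensible-heat estimate: Q = rho * V_dot * c_p * delta_T
# Fluid properties are approximate textbook values near room temperature.
AIR = {"rho": 1.2, "cp": 1005.0}      # density kg/m^3, specific heat J/(kg*K)
WATER = {"rho": 997.0, "cp": 4186.0}  # density kg/m^3, specific heat J/(kg*K)


def volume_flow_m3_per_s(heat_w, delta_t_k, fluid):
    """Volume flow needed to carry away heat_w watts at a given temperature rise."""
    if heat_w < 0 or delta_t_k <= 0:
        raise ValueError("heat must be non-negative and delta_t must be positive")
    return heat_w / (fluid["rho"] * fluid["cp"] * delta_t_k)


rack_heat_w = 20_000  # a 20 kW rack, the threshold discussed in this article
delta_t_k = 12.0      # ASSUMPTION: coolant temperature rise across the rack

air_flow = volume_flow_m3_per_s(rack_heat_w, delta_t_k, AIR)
water_flow = volume_flow_m3_per_s(rack_heat_w, delta_t_k, WATER)

print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Water: {water_flow * 60_000:.1f} L/min")  # m^3/s -> liters per minute
```

Under these assumptions, the rack needs roughly 1.4 cubic meters of air per second but only about 24 liters of water per minute. Real systems also depend on heat exchanger design, pumping and fan power, and inlet temperatures, none of which this sketch models.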

The liquid-based cooling technologies used in data centers are commonly divided into three categories: direct-to-chip cooling, rear-door heat exchangers and immersion cooling.

Direct-to-chip cooling -- sometimes referred to as direct-to-plate cooling -- integrates the cooling system directly into the computer's chassis. Cool liquid is piped to cold plates that sit directly next to components such as CPUs, GPUs or memory cards. Small tubes carry the cool liquid to each plate, where the liquid draws off the heat from the underlying components. The warm liquid is then circulated to a cooling device or heat exchanger. After it's been cooled, the liquid is circulated back to the cold plates.

A similar concept can be applied at the rack level using rear-door heat exchangers. In this scenario, an exchanger is mounted on the back of the rack in place of its back door. Server fans blow the warm air through the exchanger, which dissipates the heat. Liquid is circulated through a closed-loop system that carries out the heat exchange. Although the exact process varies from one system to the next, a rear-door cooling approach typically includes a contained coolant that runs through the exchanger and a system for lowering the coolant temperature as it circulates. This system might be nothing more than a local cooling unit, but it could also be part of a much bigger operation. For example, the coolant might be piped underground to lower its temperature.

A newer technology making headway is immersion cooling. In this approach, all internal server components are submerged in a nonconductive dielectric fluid. The components and fluid are encased in a sealed container to prevent leakage. The heat from the components is transferred to the coolant, a process that requires far less energy than other approaches. Immersion cooling can be single- or two-phase. With single-phase cooling, the coolant is continuously circulated and cooled to dissipate the heat. In a two-phase system, a coolant with a low boiling point is used. When the coolant boils, it turns to vapor and rises to the container lid, where it's cooled and condensed back to liquid.

Pros of liquid cooling

Because liquids transfer heat better than air, liquid cooling can handle a data center's growing densities more effectively, helping accommodate compute-intensive applications. In addition, liquid cooling significantly reduces energy consumption, and it uses less water than many air cooling systems, resulting in lower Opex and a more sustainable data center. Liquid cooling also takes up less space, produces less noise and helps extend the life of computer hardware.

Cons of liquid cooling

Despite these advantages, liquid cooling has its downsides. In addition to the potential for a much higher Capex, the risk of leakage is a big concern for many IT professionals, especially with direct-to-chip cooling. If leakage were to occur, it could have a devastating effect on the hardware.

Liquid cooling also requires IT and data center operators to learn new skills and adopt a new management framework, which can represent a significant undertaking and additional operating costs.

It might also mean bringing in new personnel or consultants, undermining the Opex advantage even more. In addition, the liquid cooling market is still maturing, with a wide range of technologies, resulting in proprietary products and the risk of vendor lock-in.

Factors to consider when choosing air cooling vs. liquid cooling

Organizations setting up new data centers or updating existing ones might be evaluating whether it's a good time to implement liquid cooling or to stick with tried-and-true air cooling. To decide between the two, they need to factor in several important considerations.

Price

Cost is a deciding factor in choosing a data center cooling method, but arriving at a true total cost of ownership (TCO) can be a complex process. Liquid cooling is typically thought to have a much higher Capex; however, some in the industry are beginning to question this assumption. According to a cost study conducted by Schneider Electric, the Capex for chassis-based immersion cooling for a 10 kW rack is comparable to air cooling the rack using hot aisle containment. The greater efficiency that comes with liquid cooling can also translate to lower Opex, especially as densities grow.

In addition, liquid cooling uses less power and water, which can be especially important in areas where water is in short supply. On the other hand, the risk of vendor lock-in could affect long-term TCO. Plus, liquid cooling typically requires special training or personnel to implement and maintain, and managing the system is more complex and time-consuming, which can increase Opex. IT administrators and site operators are familiar with air-cooled systems, and the cost of supporting them is generally lower.

The computers themselves should also be considered when evaluating TCO. Liquid cooling makes it possible to support greater computing densities, while reducing the data center footprint, leading to better space utilization and lower costs. The support for greater densities can benefit an organization that's been unable to implement processing-intensive workloads because of air cooling limitations. Supporting these workloads could translate into additional revenue, helping offset both Capex and Opex.
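One way to keep these cost factors straight is to model them side by side. The following is a rough sketch, assuming a simple undiscounted TCO of Capex plus yearly Opex. The `CoolingOption` class, the `breakeven_year` helper and every figure in it are hypothetical placeholders, not data from the Schneider Electric study or any vendor. The sketch also ignores discounting, hardware lifespan and any revenue from newly supported workloads.

```python
from dataclasses import dataclass


@dataclass
class CoolingOption:
    """Hypothetical cost model for one cooling approach (all costs in one currency)."""
    name: str
    capex: float            # up-front equipment and installation cost
    annual_energy: float    # yearly power cost attributable to cooling
    annual_water: float     # yearly water cost
    annual_staffing: float  # training, personnel and vendor maintenance

    def annual_opex(self) -> float:
        return self.annual_energy + self.annual_water + self.annual_staffing

    def tco(self, years: int) -> float:
        """Undiscounted total cost of ownership after the given number of years."""
        if years < 0:
            raise ValueError("years must be non-negative")
        return self.capex + years * self.annual_opex()


def breakeven_year(a: CoolingOption, b: CoolingOption, horizon: int = 20):
    """First year in which a's cumulative cost is at or below b's, else None."""
    for year in range(horizon + 1):
        if a.tco(year) <= b.tco(year):
            return year
    return None


# PLACEHOLDER FIGURES ONLY: substitute numbers from your own quotes and bills.
air = CoolingOption("air", capex=100_000, annual_energy=40_000,
                    annual_water=5_000, annual_staffing=10_000)
liquid = CoolingOption("liquid", capex=160_000, annual_energy=22_000,
                       annual_water=1_000, annual_staffing=18_000)

print(breakeven_year(liquid, air))
```

With these made-up inputs, the higher staffing cost for liquid cooling eats into its energy and water savings, which pushes the breakeven point out to year five. Changing any one input can move that point substantially, which is why a real analysis needs site-specific numbers.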

Ease of installation and maintenance

Another important consideration is what it takes to deploy and maintain a cooling system, which goes hand in hand with Opex considerations. With air cooling, operating the equipment and swapping out components are generally straightforward, and they seldom affect the computer components themselves. That's not to say air cooling doesn't present its own challenges, such as ongoing water treatment or mechanical maintenance, but it's a known entity with a long history to back it up.

Liquid cooling requires a new mindset and new way of working. IT and data center teams will have a steep learning curve and, in some cases, might be dependent on a vendor for routine maintenance. For example, what if IT must replace the memory board in a server that uses immersion cooling? The server must be lifted out of the dielectric liquid -- no small task in itself -- and the fluid cleaned off the components. The fluid might also require special handling because it's hazardous or raises environmental concerns, resulting in further complexity. When analyzing costs, organizations must evaluate all the implications of deploying and maintaining a cooling system.

Sustainability

Data center operators are under greater pressure than ever to make their data centers more sustainable. This pressure comes not only from customers, but from employees, stockholders, investment firms, governments and the public at large. At the same time, operators are trying to negotiate the challenges that come with increasing workload densities and growing data volumes, which can affect resource usage.

Organizations moving toward greener data center practices should consider liquid cooling over air cooling because it uses less electricity and water and can more easily accommodate denser workloads and data volumes. Given the increasing pressure to support greater sustainability, liquid cooling could become the only viable option, so organizations should prepare for the transition.

Location

Location can be a significant factor in choosing between air and liquid cooling. A data center near the Arctic, for example, can use the plentiful cold air to reduce operating temperatures. However, it must still filter the outside air and regulate its humidity, which offsets some of the benefits of using that air. On the other hand, a data center in a warmer climate or near factories or other harsh settings -- where outside air can't be used -- might have difficulty maintaining its air cooling systems as rack densities increase, making liquid cooling a more viable option. The same goes for a data center in a crowded urban setting that must increase computing density to maximize floor space. Local regulations, tax advantages or similar issues can also play a role in choosing between air and liquid cooling.

Future

Some organizations don't support the type of advanced workloads that require high processing density, so a switch to liquid cooling might not be warranted. That said, densities are only likely to grow in the coming years as data centers scramble to better utilize floor space and IT consolidates workloads to improve efficiency. In addition, most organizations will likely be moving toward more sustainable data centers, which brings its own set of challenges. At some point, liquid cooling might become the only viable option. That doesn't mean organizations have to rush into it, but they should be prepared for its arrival.

The role of technology maturity in cooling selection

Rear-door heat exchangers are becoming more commonplace in the data center, and other forms of liquid cooling have begun making important strides. However, liquid cooling is still a relatively young industry when it comes to anything other than mainframes and supercomputers. Therefore, it's hard to gauge which technologies will emerge as leaders, how the technologies might be standardized, or what to expect four or five years from now.

With air cooling, organizations know what they're getting into, but its long-term practicality will likely be limited. Organizations that don't need to rush into a decision might want to give liquid cooling more time to mature. Those already feeling the crunch might consider a phased approach toward liquid cooling.