
How a backup administrator can manage large volumes in 3 steps

Backup administrators face a tall order: managing a vast amount of data. Even if the volume seems to be growing out of control, it's possible to keep a handle on it.

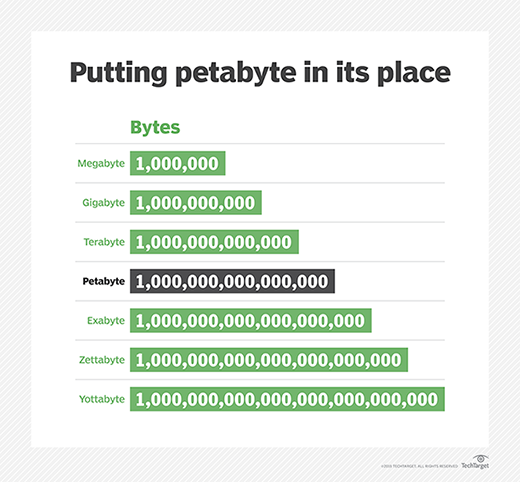

The volume of backup data is increasing so dramatically that those tasked with managing backups may find themselves responsible for overseeing multiple petabytes. This kind of uncontrolled growth can leave a backup administrator constantly playing catch-up, and it will likely place a major strain on IT budgets.

Fortunately, there are best practices that IT professionals can follow to get a handle on their backup data. Backup administrators who find themselves in possession of petabytes of backup data should take a three-step approach to get that data under control.

Step 1: Verify the data protection requirements

The process of evaluating a large enterprise's data protection efforts is not easy. It involves determining what resources are currently being backed up and who owns each of those protected resources.

To whatever extent possible, the backup administrator must meet with stakeholders to determine the protection requirements for each workload. The goal behind this process isn't simply to find out what service-level agreements (SLAs) may already be in place -- although that is important, too. The larger goal should be to determine each workload's backup retention requirements and anticipated future data growth, both of which are essential to long-term capacity planning efforts.

Simply put, it is nearly impossible to gain control over a large and unwieldy backup data set without knowing the specific data protection requirements and anticipated data growth.
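The information gathered from stakeholders can be recorded per workload and used for a rough capacity projection. The following is a minimal sketch, assuming a simple compound annual growth model; the `Workload` fields, the sample workloads and their figures are all hypothetical and only illustrate the kind of data worth capturing.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """One protected resource and the requirements agreed with its owner."""
    name: str
    owner: str
    protected_tb: float    # current size of the protected data, in TB
    annual_growth: float   # anticipated yearly growth, e.g. 0.25 for 25%
    retention_days: int    # how long backups of this workload must be kept


def projected_size_tb(workload: Workload, years: int) -> float:
    """Compound the workload's protected data size over the given years."""
    return workload.protected_tb * (1 + workload.annual_growth) ** years


# Hypothetical inventory built from stakeholder interviews.
workloads = [
    Workload("erp-db", "finance", 40.0, 0.25, 2555),
    Workload("file-shares", "it-ops", 120.0, 0.10, 365),
]

for w in workloads:
    size = projected_size_tb(w, years=3)
    print(f"{w.name} ({w.owner}): {size:.1f} TB protected in 3 years, "
          f"retained for {w.retention_days} days")
```

The projection covers only the protected source data. The backup footprint itself also depends on retention, backup type and data reduction, so figures like these are a starting point for planning rather than a forecast.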

Step 2: Examine the current backup architecture

A second important step in evaluating an organization's current data protection efforts is to examine existing backup jobs for inefficiencies. It is common to find overlapping backup jobs, which can contribute greatly to backup data growth. A backup administrator may even discover that an incorrectly configured job is rapidly growing the backup data footprint by performing a daily full backup of a protected resource -- as opposed to backing that resource up incrementally.
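A review like this can start from an export of the job list. The sketch below assumes a hypothetical export in which each job is a tuple of job name, protected resource, backup type and schedule; real backup products expose this information in their own formats, so the field layout here is an assumption.

```python
from collections import defaultdict

# Hypothetical job export: (job name, protected resource, backup type, schedule)
jobs = [
    ("nightly-sql", "sql01", "incremental", "daily"),
    ("legacy-sql", "sql01", "full", "weekly"),
    ("fileserver", "fs01", "full", "daily"),
    ("mail", "mail01", "incremental", "daily"),
]


def find_overlaps(jobs):
    """Return resources that are protected by more than one job."""
    by_resource = defaultdict(list)
    for name, resource, _backup_type, _schedule in jobs:
        by_resource[resource].append(name)
    return {res: names for res, names in by_resource.items() if len(names) > 1}


def find_daily_fulls(jobs):
    """Return jobs that take a full backup every day."""
    return [name for name, _resource, backup_type, schedule in jobs
            if backup_type == "full" and schedule == "daily"]


print(find_overlaps(jobs))     # {'sql01': ['nightly-sql', 'legacy-sql']}
print(find_daily_fulls(jobs))  # ['fileserver']
```

A flagged job is a candidate for review, not necessarily a mistake. Two jobs covering the same resource may be intentional, and a daily full backup may be required for a particular workload.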

As the backup administrator works through this process, it is important to make sure backups are being created in a way that complies with any existing SLAs. This is also a good time to make sure the organization is using deduplication to reduce the size of the backup data.
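To illustrate why deduplication shrinks backup data, here is a minimal sketch that splits data into fixed-size chunks and counts how many are unique by SHA-256 hash. The 4 KB chunk size and the sample data are assumptions chosen for the example; commercial backup products implement deduplication in their own ways, often with variable-size chunking.

```python
import hashlib


def dedup_ratio(data: bytes, chunk_size: int = 4096) -> float:
    """Return total chunks divided by unique chunks for fixed-size chunking."""
    unique_hashes = set()
    total_chunks = 0
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        unique_hashes.add(hashlib.sha256(chunk).digest())
        total_chunks += 1
    # Empty input has nothing to deduplicate; report a neutral ratio.
    return total_chunks / len(unique_hashes) if unique_hashes else 1.0


# Four chunks, of which only two are distinct.
sample = b"A" * 4096 * 3 + b"B" * 4096
print(dedup_ratio(sample))  # 2.0
```

A ratio of 2.0 means only half of the chunks need to be stored. Repeated full backups of slowly changing data contain many identical chunks, which is why deduplication tends to pay off on backup targets.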

Step 3: Develop a data lifecycle management policy

Once backup administrators have firmly established data protection requirements, they can begin creating data lifecycle management policies for the backup data. Such policies ensure the working backup set is kept at a manageable size, while also guaranteeing backup data is retained for the required period of time.

A data lifecycle management policy will typically move aging data off the primary backup target and into an archive. This archive can exist on premises or in the cloud. If the organization decides to move some of the existing archive data to the cloud, however, then the backup administrator will need to work with the cloud provider to determine the best way to migrate such a large collection of data.
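Some quick arithmetic shows why migrating a large archive needs planning. The sketch below estimates the transfer time over a network link; the link speeds, the 80% utilization figure and the use of decimal terabytes are assumptions for illustration.

```python
def transfer_days(data_tb: float, link_gbps: float, utilization: float = 0.8) -> float:
    """Estimate days needed to move data_tb (decimal TB) over a network link."""
    bits = data_tb * 1e12 * 8                        # TB -> bytes -> bits
    usable_bits_per_second = link_gbps * 1e9 * utilization
    seconds = bits / usable_bits_per_second
    return seconds / 86400                           # seconds per day


# 1 PB (1,000 TB) over 1 Gbps and 10 Gbps links at 80% utilization.
print(f"{transfer_days(1000, 1):.0f} days")    # 116 days
print(f"{transfer_days(1000, 10):.0f} days")   # 12 days
```

At these timescales, a network copy of a full petabyte may not be practical on a slower link, which is one reason to discuss the migration method with the cloud provider before committing to a plan.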

A data lifecycle management policy is about more than just migrating data to the archives. Backup data eventually reaches the end of its useful lifespan. A good data lifecycle management system should be able to automatically purge expired data in accordance with the organization's data retention policy.
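The core of such a policy can be expressed as a rule that maps a backup's age to an action. The following is a minimal sketch, assuming a two-threshold policy; the function name and the 90-day and 365-day thresholds are hypothetical and would come from the organization's actual retention requirements.

```python
from datetime import date, timedelta


def lifecycle_action(backup_date: date, today: date,
                     archive_after_days: int, retention_days: int) -> str:
    """Decide what to do with a backup based on its age in days."""
    age_days = (today - backup_date).days
    if age_days >= retention_days:
        return "purge"     # past the retention period
    if age_days >= archive_after_days:
        return "archive"   # move off the primary backup target
    return "keep"          # stays in the working backup set


today = date(2024, 1, 31)
for days_old in (10, 120, 400):
    backup_date = today - timedelta(days=days_old)
    action = lifecycle_action(backup_date, today,
                              archive_after_days=90, retention_days=365)
    print(days_old, action)
# Prints: 10 keep, 120 archive, 400 purge (one pair per line)
```

Checking the purge condition first means expired data is never archived by mistake. In practice, the thresholds would likely differ per workload, in line with the retention requirements gathered in step 1.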

Dealing with multiple petabytes of backup data is no easy task. By taking a methodical approach, however, a backup administrator can better organize that data and shrink the organization's backup data footprint.