8 data protection challenges and how to prevent them

Businesses contend with a combination of issues spawned by data overload, privacy regulations, access rights, cyberattacks, cloud environments, generative AI and human error.

Data protection requires administrative and operational controls; technical controls, such as access management, authorization and encryption; and physical controls that protect the computing hardware and work environments.

Many organizations focus on technical controls and protections, said Rebecca Herold, founder and CEO of consultancy Rebecca Herold & Associates, but they're not addressing administrative protections, such as documented policies and procedures that keep employees aware of their responsibilities, or sufficiently monitoring the physical aspects. "So, it is just piling into the ongoing breaches that you read about every day in many industries around the world," she added.

Everyone rightly focuses on regulated data, including personal, cardholder and healthcare information. But there's a lot more that needs attention. "It's expanded," said Heidi Shey, analyst at Forrester Research. "It's not just the source code that people have historically been worried about or secret formulas."

Data protection challenges mount

New data protection challenges can involve IoT sensor data collection, algorithms, APIs, and machine learning and AI models that companies have developed. "Many times, consumer IoT devices such as smart cameras and smart vehicles are being used to support the business," Herold explained. "And there is data that is shared that is collected by those products that usually the business isn't even aware of."

With the potential for financial loss, reputational harm and penalties for noncompliance with data privacy regulations, security leaders need to track the location and ownership of the company's data at rest, in transit and in use, and understand the security risks. That includes each data set's encryption status and sensitivity level, the potential impact on the business if the data is compromised and the dependencies between the data and the applications that use it.

Globally, the 35 highest data privacy violation fines in 2023 totaled $2.6 billion, according to Forrester. The EU issued 19 of those fines to companies that failed to comply with the General Data Protection Regulation (GDPR); federal and state regulatory bodies in the U.S. issued 15; and South Korea issued one.

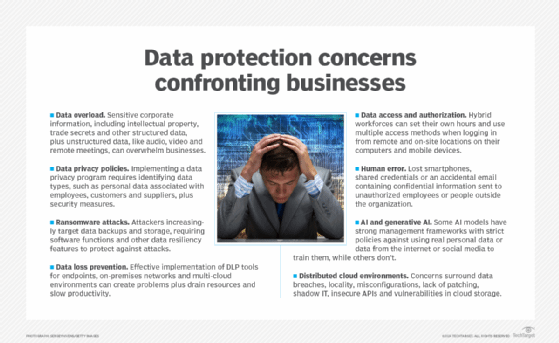

Today's businesses are facing a complex combination of data protection challenges, including data overload, risk management, cyberattacks, access rights, distributed cloud environments, AI and generative AI, human error, and new and stricter privacy regulations.

1. Too much data

In addition to sensitive corporate information such as intellectual property, trade secrets and other structured data in the form of formatted text and data sets, there's a lot of unstructured data, like audio and video, that's not necessarily considered sensitive but might need to be safeguarded.

"People are working in a remote or in a hybrid way," Shey said. "You're having Teams meetings or Zoom meetings and you are recording these meetings. The recordings and the transcripts of these meetings may have sensitive information." Companies need to establish the retention period of those files, even though those who missed a meeting might still want access to them.

"Data lifecycle management," Shey explained, "is challenging because companies sometimes struggle with making decisions about data retention, what should that policy look like, how long should we hold on to this data?" It's not uncommon to see internal struggles between security leaders who consider data a potential liability and business stakeholders who want to hold onto data to mine more information from it. Some regulations set specific records retention requirements, but others are not that specific. "It's based on what the business decides," Shey said, "so you do find that a lot of companies are hesitant to delete data."
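Whatever period a company settles on, the policy eventually has to be applied to real files. The following is a minimal sketch, assuming a hypothetical 90-day policy for meeting recordings and a simple legal-hold flag (neither comes from the article), of how a periodic sweep might select recordings for deletion:

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical policy: how long each kind of file may be kept.
RETENTION = {"meeting-recording": timedelta(days=90)}


@dataclass(frozen=True)
class StoredFile:
    name: str
    kind: str                  # key into RETENTION
    created: date
    legal_hold: bool = False   # files under legal hold are never auto-deleted


def due_for_deletion(files, today: date) -> list[str]:
    """Return the names of files whose retention period has expired."""
    expired = []
    for f in files:
        period = RETENTION.get(f.kind)
        if period is None or f.legal_hold:
            continue  # no policy for this kind of file, or deletion is blocked
        if today - f.created > period:
            expired.append(f.name)
    return expired


files = [
    StoredFile("q1-all-hands.mp4", "meeting-recording", date(2024, 1, 1)),
    StoredFile("standup-0520.mp4", "meeting-recording", date(2024, 5, 20)),
    StoredFile("board-review.mp4", "meeting-recording", date(2023, 12, 1), legal_hold=True),
]
print(due_for_deletion(files, today=date(2024, 6, 1)))  # ['q1-all-hands.mp4']
```

Files with no matching policy are kept rather than deleted, the conservative default; a stricter program might flag them for review instead.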

2. Data privacy risks

Implementing an enterprise privacy program requires identifying data types, such as personal data (name, address, birth date, and account information) associated with employees, customers and suppliers, as well as developing security measures to protect sensitive information and ensure compliance.

It's critical to map out the business environment, including data the company collects, creates and stores, and to communicate the various regulations that apply. What data, for example, is protected by the GDPR or the California Consumer Privacy Act (CCPA) and should not be acquired? When data is stored for an extended period after its intended use, transferred across borders or belongs to a child, companies can face stiff penalties. Meta and TikTok were hit with fines totaling about $1.7 billion in 2023 for GDPR-related violations.

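Currently, the GDPR and U.S. federal data protection regulations don't directly address AI. Privacy-enhancing techniques such as differential privacy and federated learning can protect individuals when personal data is used in data sets. Differential privacy adds calibrated statistical noise so that results reveal little about any one person, while federated learning trains models where the data resides instead of pooling raw records centrally. Private data, for example, might be used to train AI models without the underlying records being exposed or searchable.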
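To make the differential privacy idea concrete, here is a minimal Python sketch of the classic Laplace mechanism applied to a counting query. The function names are illustrative; a production system would rely on a vetted library and track a privacy budget across queries rather than use toy code like this.

```python
import math
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) by inverting the CDF of a uniform draw."""
    u = rng.random() - 0.5          # uniform on [-0.5, 0.5)
    while u == -0.5:                # avoid log(0) at the boundary
        u = rng.random() - 0.5
    return -scale * math.copysign(1.0, u) * math.log(1 - 2 * abs(u))


def private_count(records, predicate, epsilon: float, rng=None) -> float:
    """Count matching records with epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon suffices. Smaller epsilon means more
    noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


ages = [23, 35, 67, 71, 45, 80, 52, 66]
print(private_count(ages, lambda a: a >= 65, epsilon=0.5))  # true count is 4, plus noise
```

Each answered query spends part of the privacy budget: asking the same question many times and averaging the answers would erode the protection, which is why real deployments cap the total epsilon.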

3. Ransomware attacks

Ransomware, which encrypts a company's files and makes them unreadable until the victim pays a ransom for the decryption key, remains a major concern for businesses, with the threat of financial loss and reputational damage from sensitive data exposure. Attackers are increasingly targeting data backups and storage, forcing tool vendors to provide functions like immutable backup and other data resiliency features to protect against attacks.

Ransomware was a factor in 24% of breaches, with system intrusion in 94% of those attacks, according to Verizon's 2023 Data Breach Investigations Report (DBIR). The top three vectors for these attacks were email, desktop sharing software and web applications.

"How do we reduce the capability for malware on our networks to perform reconnaissance, to move laterally and to do a broad-based infection across a network?" said Jason Garbis, principal and founder of consultancy Numberline Security, who co-chairs the Cloud Security Alliance's Zero Trust Working Group. Companies need to make devices and services resilient to ransomware attacks, he advised, by keeping the "blast radius" small enough that only a single user device or server gets infected. "If you've got hundreds of systems or thousands of systems infected," he explained, "you are in a much worse place than if you have one or two."
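One way to reason about the "blast radius" is as reachability: which hosts can malware reach by moving laterally along network paths that are allowed? The sketch below is a simplified model, assuming every allowed flow can be exploited and using hypothetical host names; it compares a flat network with a segmented one.

```python
from collections import deque


def blast_radius(allowed_flows: dict[str, set[str]], infected: str) -> set[str]:
    """Return every host reachable from `infected` by following allowed flows (BFS)."""
    reached = {infected}
    queue = deque([infected])
    while queue:
        host = queue.popleft()
        for neighbor in allowed_flows.get(host, set()):
            if neighbor not in reached:
                reached.add(neighbor)
                queue.append(neighbor)
    return reached


hosts = ["laptop-1", "laptop-2", "file-server", "db-server", "backup"]

# Flat network: any host can open connections to any other host.
flat = {h: {other for other in hosts if other != h} for h in hosts}

# Segmented network: laptops may reach only the file server, and no
# server may initiate connections out to other hosts.
segmented = {
    "laptop-1": {"file-server"},
    "laptop-2": {"file-server"},
    "file-server": set(),
    "db-server": set(),
    "backup": set(),
}

print(len(blast_radius(flat, "laptop-1")))          # 5
print(sorted(blast_radius(segmented, "laptop-1")))  # ['file-server', 'laptop-1']
```

In the flat network a single compromised laptop can reach all five hosts; with segmentation the same infection reaches two. Real attack-path analysis would also weigh credentials, vulnerabilities and which services listen on each port.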

4. Data loss prevention

A data loss prevention (DLP) strategy consists of policies, tools and techniques to help security teams increase data visibility and protect sensitive data against unauthorized use in enterprise and cloud environments. DLP software monitors data entering and leaving the network, sending alerts when it detects suspicious activity, such as unauthorized data transfers. It can help security leaders enforce data protection regulatory compliance.
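Content inspection is at the heart of DLP. As a minimal sketch of one common kind of rule (not any vendor's actual engine), the Python below scans outbound text for payment card numbers by pairing a pattern match with the Luhn checksum, which filters out most arbitrary digit strings such as order numbers.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return Luhn-valid card numbers found in text, with separators removed."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


print(find_card_numbers("Refund to 4111 1111 1111 1111, ref 4111-1111-1111-1112"))
# ['4111111111111111']
```

A DLP tool would typically feed such hits into a policy that blocks, quarantines or alerts on the transfer, rather than simply printing them.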

Modern DLP logs files and events and might include real-time data analysis and user behavior analytics. Some cloud providers offer DLP services that scan and classify different types of data, offering masking, tokenization and redaction for highly sensitive information, like credit card numbers. Deploying a DLP solution is one of the safeguards under the data protection control of the CIS Critical Security Controls. But effective implementation across endpoints, on-premises networks and multi-cloud environments can be challenging, especially when it comes to integration, scaling and the use of endpoint agents, which can drain resources and slow productivity.
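Masking and tokenization solve different problems: masking permanently hides most of a value for display, while tokenization swaps the value for a surrogate that only an authorized service can map back. The sketch below is a simplified, in-memory illustration with hypothetical class and function names; a real token vault would be a hardened, access-controlled store.

```python
import secrets


def mask_pan(pan: str, visible: int = 4) -> str:
    """Show only the last `visible` digits, e.g. on receipts or support screens."""
    if len(pan) <= visible:
        return "*" * len(pan)
    return "*" * (len(pan) - visible) + pan[-visible:]


class TokenVault:
    """Toy vault: maps random tokens to original values and back."""

    def __init__(self) -> None:
        self._by_token: dict[str, str] = {}
        self._by_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so the same card always maps to the same surrogate.
        if value in self._by_value:
            return self._by_value[value]
        token = "tok_" + secrets.token_hex(8)  # random, so it reveals nothing about the value
        self._by_token[token] = value
        self._by_value[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # In practice only a small set of authorized services would be allowed to call this.
        return self._by_token[token]


vault = TokenVault()
card = "4111111111111111"
print(mask_pan(card))                    # ************1111
token = vault.tokenize(card)
print(vault.detokenize(token) == card)   # True
```

Downstream systems such as analytics can store and join on the token without ever holding the card number, which can reduce how many systems handle the sensitive value.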

"How do I classify my data?" said Garbis, raising questions about the challenges of implementing an effective DLP strategy. "How do I protect it, and what does that even mean to protect it, while keeping users productive?"

5. Access and authorization

Enterprises today deal with a hybrid workforce that sets its own hours and logs in to the company's network environments and resources through multiple access methods, from remote and on-site locations, on computers and mobile devices. Third-party suppliers might also access the company's resources. Access control management requires enforcing processes for revoking data access, such as automatic device lockout and remote wipe capabilities if portable devices are lost or stolen.

An untethered workforce can complicate privileged access management and access rights -- who needs access to the data at what time. DBIR research found 406 incidents of privilege misuse, 288 of which resulted in data disclosure, mostly for financial gain. Credentials are even harder to control. Stolen credentials from weak passwords and brute-force attacks were the entry point for 86% of web application breaches. Of the 1,404 web application attack incidents in the DBIR, 1,315 (94%) resulted in data disclosure, primarily for financial gain.
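The question of who needs access to the data at what time can be expressed as a default-deny rule set. The Python below is a minimal sketch with hypothetical roles, resources and hours: a request is allowed only if some grant matches the user's role, the resource and the time of day, including windows that wrap past midnight.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class Grant:
    role: str
    resource: str
    start: time  # window start (inclusive)
    end: time    # window end (exclusive)


def in_window(t: time, start: time, end: time) -> bool:
    if start <= end:
        return start <= t < end
    return t >= start or t < end  # window wraps past midnight, e.g. 22:00-06:00


def is_allowed(roles: set[str], resource: str, when: datetime, grants: list[Grant]) -> bool:
    """Default deny: allow only when a grant matches role, resource and time of day."""
    return any(
        g.role in roles and g.resource == resource and in_window(when.time(), g.start, g.end)
        for g in grants
    )


# Hypothetical policy
GRANTS = [
    Grant("payroll-clerk", "payroll-db", time(8), time(18)),
    Grant("night-ops", "backup-console", time(22), time(6)),
]

print(is_allowed({"payroll-clerk"}, "payroll-db", datetime(2024, 5, 6, 9, 30), GRANTS))  # True
print(is_allowed({"payroll-clerk"}, "payroll-db", datetime(2024, 5, 6, 20, 0), GRANTS))  # False
```

A real deployment would combine rules like these with identity, device and session checks; the sketch covers only the role-and-time dimension.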

6. Human error

Sensitive data exposure often comes down to human error: lost smartphones, shared credentials or an accidental email containing confidential information sent to unauthorized employees or people outside the organization. The top reasons for human error-related breaches, according to DBIR, were misdeliveries -- sending information to the wrong person (43%); publishing errors -- showing physical documents to the wrong audience (23%); and misconfigurations of hardware, software and cloud services (21%). Developers caused the most error-related breaches, followed by system administrators and end users.
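Because misdelivery tops the list, one common mitigation is a pre-send check. The sketch below assumes a hypothetical internal domain, example.com, and uses Python's difflib to flag recipient domains that look like typos of it, plus any external recipient on a message marked confidential. The function names and the 0.8 similarity threshold are illustrative choices.

```python
from difflib import SequenceMatcher

INTERNAL_DOMAINS = {"example.com"}  # hypothetical company domain


def domain_of(address: str) -> str:
    return address.rsplit("@", 1)[-1].strip().lower()


def review_recipients(recipients: list[str], confidential: bool, internal=INTERNAL_DOMAINS) -> list[str]:
    """Return warnings to show the sender before the message goes out."""
    warnings = []
    for addr in recipients:
        domain = domain_of(addr)
        if domain in internal:
            continue
        # A near-miss of an internal domain is a likely autocomplete or typing error.
        if any(SequenceMatcher(None, domain, d).ratio() >= 0.8 for d in internal):
            warnings.append(f"{addr}: looks like a typo of an internal domain")
        elif confidential:
            warnings.append(f"{addr}: external recipient on a confidential message")
    return warnings


print(review_recipients(["ana@example.com", "raj@examp1e.com", "lee@partner.org"], confidential=True))
```

A warning prompts the sender to confirm rather than blocking outright, which keeps legitimate external mail flowing while catching the wrong-recipient mistakes that cause most misdeliveries.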

Failure to back up data can make a bad situation worse. Global management consulting firm KPMG lost its Teams chat history in August 2020 when a Microsoft 365 admin attempted to remove an individual's retention policy and inadvertently deleted employee communications across the company. Without a separate backup of its Microsoft 365 data, there was no way to recover the information.

7. AI and generative AI threats

Like cloud services, AI tools and algorithms are more different than alike, Herold noted. Some AI models have strong management frameworks with strict policies against using real personal data or data from live environments to train them. "Others are going out there and scraping data as much as they can off of social media sites and everywhere else," Herold said, creating a huge number of issues surrounding exposure of personal data and intellectual property when the data is used in training AI and machine learning models. "AI," she said, "is really highlighting the fact that a lot of intellectual property might be going out and being exposed."

Threat actors are also using AI capabilities. An unidentified intruder used stolen credentials and an API with AI capabilities to infiltrate T-Mobile's computer systems in November 2022, the company disclosed in an SEC filing in January 2023. The attacker managed to steal 37 million customer records, containing addresses, phone numbers and dates of birth. The attack followed a series of high-profile data breaches and settlements against the besieged carrier.

A shareholder filed a lawsuit in September 2022 claiming that in 2018 T-Mobile had started an "aggressive and reckless plan" at the behest of its largest stockholder, Deutsche Telekom, to centralize customer data and credentials for use in the training of AI models.

8. Secure cloud data

Distributed cloud environments offer greater flexibility and potential cost savings for hosting data and applications. But data protection issues, such as data breaches, data locality, misconfigurations, lack of patching, shadow IT, insecure APIs and vulnerabilities in cloud storage, cause concerns for many companies.

"The cloud has so many advantages," said W. Curtis Preston, technology evangelist at consultancy Sullivan|Strickler and host of the Backup Wrap-up podcast, "but because you never actually see the company and you never actually see the service, you don't get to verify that they are doing things in a normal way." It's also important to ask basic questions about data protection, he added: "Where is my data located? Is my data encrypted? Is it encrypted in my environment or is it encrypted when it leaves my environment? How are you protecting my data against ransomware attacks?"

The capability to discover and classify data using machine learning and AI is increasingly built into tools and platforms. "Especially with the cloud environments, we are seeing data security posture management," Shey said. "It's the new shiny that people are turning to to help them identify what data they have in cloud environments."
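Once discovery tools have produced an inventory of data stores and their classifications, posture management largely comes down to checking each store against policy. The inventory format, labels and three rules below are hypothetical, a minimal sketch rather than how any particular DSPM product works.

```python
from dataclasses import dataclass
from typing import Optional

SENSITIVE = {"confidential", "restricted"}  # hypothetical classification labels


@dataclass
class DataStore:
    name: str
    classification: str
    publicly_accessible: bool
    encrypted_at_rest: bool
    owner: Optional[str] = None


def posture_findings(stores: list[DataStore]) -> list[tuple[str, str]]:
    """Return (store, problem) pairs for stores that break the example policy."""
    findings = []
    for s in stores:
        sensitive = s.classification in SENSITIVE
        if sensitive and s.publicly_accessible:
            findings.append((s.name, "sensitive data is publicly accessible"))
        if sensitive and not s.encrypted_at_rest:
            findings.append((s.name, "sensitive data is not encrypted at rest"))
        if s.owner is None:
            findings.append((s.name, "no accountable owner"))
    return findings


inventory = [
    DataStore("marketing-assets", "public", True, False, owner="marketing"),
    DataStore("crm-export", "confidential", True, False),
    DataStore("hr-records", "restricted", False, True, owner="hr"),
]
for name, problem in posture_findings(inventory):
    print(f"{name}: {problem}")
```

Public marketing assets pass even though they are unencrypted and exposed, because the rules only tighten for sensitive classifications; getting the classification right is what makes the rest of the checks meaningful.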

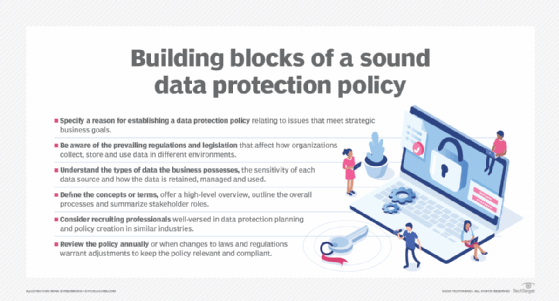

How to prevent data protection challenges

Despite the numerous data protection challenges confronting today's businesses, a measure of relief comes from advancements in data backup and recovery, encryption, multifactor authentication (MFA), secure access service edge (SASE) and AIOps. Companies are also adopting corporate AI policies, although these will depend heavily on data privacy regulations eventually providing more specific guidance on generative AI's impact.

Data backup and recovery systems. Companies should require modern storage and backup capabilities that support immutable copies of data and distributed copies across locations, and, most importantly, they should test recovery scenarios. "Many organizations only do the first part," Garbis acknowledged. "They have never tested a particular scenario like, 'Our on-prem data center is compromised, how do we restore this up into a cloud environment?' Or 'This machine is compromised. We didn't have a plan for restoring the application that houses the data.'"
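Testing a restore means proving the data came back intact, not just that the job finished. As a minimal sketch, assuming files are restored to a local directory, the Python below records SHA-256 digests at backup time and compares them with the restored copy. The function names are illustrative.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large files don't need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_restore(manifest: dict[str, str], restored_root: Path) -> list[str]:
    """Compare a test restore against the manifest recorded at backup time."""
    restored = build_manifest(restored_root)
    problems = []
    for rel, digest in manifest.items():
        if rel not in restored:
            problems.append(f"missing: {rel}")
        elif restored[rel] != digest:
            problems.append(f"changed: {rel}")
    for rel in sorted(restored.keys() - manifest.keys()):
        problems.append(f"unexpected: {rel}")
    return problems
```

Run `build_manifest` when the backup is taken and store the manifest alongside the immutable copy; after a test restore, an empty list from `verify_restore` means every file came back byte-for-byte, while any entry points to missing, altered or unexpected files.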

Encryption. Even if data is leaked or stolen, encryption can prevent it from being read. Symmetric (AES) and asymmetric (RSA, ECC) encryption algorithms can protect data at rest and in transit, such as communications over the internet. Effective encryption depends on proper implementation and secure key storage. Homomorphic encryption enables mathematical operations such as addition and multiplication on encrypted data (ciphertext); when the result is decrypted, it matches the result of performing the same operations on the unencrypted data (plaintext). Post-quantum, or quantum-resistant, cryptography is designed to protect data and keys against future quantum computers, which could break widely used public-key algorithms such as RSA and ECC.
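Homomorphic encryption is easiest to see in a partially homomorphic scheme. The sketch below is textbook Paillier encryption in Python, which supports adding encrypted numbers and multiplying them by plaintext constants, but not multiplying two ciphertexts together, which requires a fully homomorphic scheme. The tiny primes make it insecure; it only illustrates the math.

```python
import math
import secrets


def keygen(p: int, q: int):
    """Textbook Paillier keys from two primes (toy sizes, never use in practice)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # with g = n + 1, L(g^lam mod n^2) = lam mod n, so mu = lam^-1 mod n
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random r in [1, n-1] coprime to n
        if math.gcd(r, n) == 1:
            break
    # g = n + 1, so g^m mod n^2 == 1 + m*n mod n^2
    return (1 + m * n) * pow(r, n, n2) % n2


def decrypt(n: int, priv, c: int) -> int:
    lam, mu = priv
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """E(m1) * E(m2) mod n^2 decrypts to m1 + m2 mod n."""
    return c1 * c2 % (n * n)


def scale_encrypted(n: int, c: int, k: int) -> int:
    """E(m)^k mod n^2 decrypts to k * m mod n."""
    return pow(c, k, n * n)


n, priv = keygen(1009, 1013)
c_sum = add_encrypted(n, encrypt(n, 20), encrypt(n, 22))
print(decrypt(n, priv, c_sum))                                  # 42
print(decrypt(n, priv, scale_encrypted(n, encrypt(n, 7), 3)))   # 21
```

The party that adds the ciphertexts never sees 20, 22 or 42; only the key holder can decrypt the total. Because encryption uses a fresh random `r` each time, encrypting the same number twice almost always produces different ciphertexts.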

Multifactor authentication. MFA requires two or more verifiable factors to achieve authentication. Those factors are based on something the user knows (static password, PIN), something the user has (one-time password token, digital certificate) or something the user is (biometrics such as fingerprints or facial recognition). Companies should require MFA for external use of applications and remote network access. It's also important to understand how the authentication and authorization systems of cloud service providers (CSPs) work and how CSPs keep them up to date. "MFA is huge," Preston said, "so if [CSPs] are not using a modern MFA system, then there is really no point in talking to them."
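One-time password tokens and authenticator apps commonly implement TOTP (RFC 6238), which derives a short code from a shared secret and the current time using HMAC. The sketch below is a minimal standard-library implementation; accepting one time step on either side of the current one is an assumption made to tolerate clock drift.

```python
import hashlib
import hmac
import struct
import time


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                      # dynamic truncation
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP with counter = Unix time // step."""
    at = time.time() if at is None else at
    return hotp(key, int(at // step), digits)


def verify_totp(key: bytes, code: str, at=None, step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from up to `window` steps before or after the current one."""
    at = time.time() if at is None else at
    counter = int(at // step)
    return any(
        hmac.compare_digest(hotp(key, c, digits), code)
        for c in range(counter - window, counter + window + 1)
        if c >= 0
    )


secret = b"12345678901234567890"  # RFC 6238 test secret; real secrets are random per user
print(totp(secret, at=59, digits=8))  # 94287082 (RFC 6238 test vector)
```

`hmac.compare_digest` is used for the comparison so that verification time doesn't leak how many leading digits of a guess were correct.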

Greater network visibility. Logging systems can help establish a baseline of enterprise activity so that anomalies in network traffic or user behavior stand out. But IP addresses and device identifiers such as media access control (MAC) addresses can re-identify users in data that has been anonymized, raising privacy concerns. A continuous monitoring strategy uses tools and processes to implement and monitor security controls, such as those cataloged in NIST SP 800-53, which can help support compliance with GDPR, HIPAA and other data protection regulations.
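A baseline can be as simple as each user's own history. The minimal sketch below flags unusual upward spikes and keys users by a keyed-hash pseudonym instead of a raw IP address or device ID. The field names, the sample data and the three-standard-deviation threshold are hypothetical choices for illustration. Pseudonymized data generally still counts as personal data under GDPR, because the key holder can link pseudonyms back to individuals.

```python
import hashlib
import hmac
from statistics import mean, stdev


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace an IP address or device ID with a stable keyed-hash pseudonym."""
    return hmac.new(key, identifier.encode(), hashlib.sha256).hexdigest()[:16]


def spike_alerts(history: dict[str, list[float]], today: dict[str, float], threshold: float = 3.0) -> dict[str, float]:
    """Flag entities whose value today is `threshold` standard deviations above their own baseline."""
    alerts = {}
    for entity, value in today.items():
        past = history.get(entity, [])
        if len(past) < 2:
            continue  # too little history to form a baseline
        mu, sigma = mean(past), stdev(past)
        z = (value - mu) / sigma if sigma > 0 else (float("inf") if value > mu else 0.0)
        if z >= threshold:
            alerts[entity] = z
    return alerts


key = b"rotate-me"  # hypothetical secret; store and rotate it like any other key
alice = pseudonymize("10.0.0.15", key)
bob = pseudonymize("10.0.0.27", key)

# Daily megabytes uploaded per pseudonymous user over recent days (made-up sample data).
history = {alice: [100, 110, 95, 105, 90], bob: [20, 25, 22, 18, 21]}
today = {alice: 108, bob: 400}
print(spike_alerts(history, today))  # only bob's pseudonym is flagged
```

Because each user is compared with their own history, a heavy but steady uploader is not flagged, while a normally quiet account that suddenly moves far more data is.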

Generative AI policies. Organizational teams that include data protection officers are developing companywide policies for generative AI. "It needs to be a first step to give employees guidance about what they should be doing and what they should not be [doing]," Shey said. "This is what this is, here is the purpose of this, here is how we use this as an organization, here's how we won't." The policies should outline social media and personal device use, such as bring your own AI like ChatGPT or Gemini. From there, companies are branching out, Shey said, reviewing licensing agreements and contracts to account for these changes and updating acceptable use, privacy and data retention policies.

Voluntary frameworks. Global companies can work toward implementing information security management systems that meet the requirements for ISO/IEC 27001 certification. The standard addresses data stored electronically, physical copies and third-party suppliers, and it is built on confidentiality, integrity and availability, also known as the CIA triad. Certification involves audits, including annual surveillance audits, and must be renewed every three years. ISO/IEC 27017 offers guidance on security controls for cloud services, including moving or sharing data in the cloud. In the U.S., businesses that handle customer data may benefit from SOC 2 compliance, an attestation report developed by the American Institute of CPAs (AICPA) and typically renewed annually. It evaluates data protection practices against five Trust Services Criteria: security, availability, confidentiality, processing integrity and privacy.

Kathleen Richards is a freelance journalist and industry veteran. She's a former features editor for TechTarget's Information Security magazine.