Assess managed Kubernetes services for your workloads

Managed services from cloud providers can simplify Kubernetes deployment but create some snags in a multi-cloud model. Follow three steps to see if these services can benefit you.

Managed Kubernetes services from public cloud providers offer resilient and highly available Kubernetes control plane deployments. These services integrate with the native features of their respective cloud provider and, in some cases, with on-premises Kubernetes deployments. However, they don't always integrate with other cloud providers' offerings, at least not easily or well.

It's best to use managed Kubernetes services, such as Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS) and Google Kubernetes Engine (GKE), when you commit to a single cloud provider and tie all your orchestration processes to deployments on that provider's platform. The more exceptions to this rule your applications present, the less likely it is that a single managed Kubernetes service will work for you.

Organizations that choose to work with multiple cloud providers should expect that integrating container orchestration tasks across a multi-cloud deployment will be difficult.

To determine if a managed Kubernetes service is right for your cloud deployment, follow these three steps.

1. Map out deployment

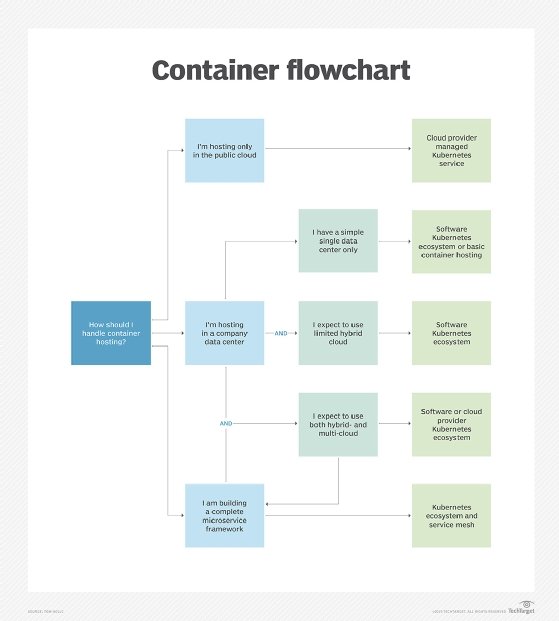

The first step in any container orchestration strategy is to map out the hosting space -- meaning the complete resource set you'll use to host applications. This might include an on-premises data center and multiple public cloud providers. For each application, determine the scope of its deployment, including where you will host its components. This exercise will frame how diverse your Kubernetes deployment will be.

Strong candidates for managed Kubernetes services will have an orchestration map that shows two important things. First, they plan to use only one cloud provider and are prepared to rework their operations strategy if they change providers. Second, any application with components hosted both in the cloud and in the data center requires little to no failover or bursting across that boundary.

At the other extreme, poor candidates for managed Kubernetes services are those who plan to use several public cloud providers and move applications quickly between them. In addition, companies that plan to use all hosting resources, including an on-premises data center, as a large resource pool from which application components can draw are not a good fit for these managed services.
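
The candidacy criteria above can be sketched as a simple check over a hosting map. This is a minimal, hypothetical sketch rather than a vendor tool: the `App` record, the site labels (`"aws"`, `"gcp"`, `"on-prem"` and so on) and the exact thresholds for "strong" and "poor" are assumptions made for illustration.

```python
from dataclasses import dataclass

ON_PREM = "on-prem"  # hypothetical label for the on-premises data center


@dataclass
class App:
    """One entry in a hypothetical hosting map."""
    name: str
    sites: set[str]                    # where components run, e.g. {"aws", "on-prem"}
    moves_between_sites: bool = False  # fails over, bursts or migrates across sites


def assess(apps: list[App]) -> str:
    """Rough fit for a single managed Kubernetes service (illustrative rules only)."""
    clouds_used = {s for a in apps for s in a.sites if s != ON_PREM}
    mobile = [a for a in apps if a.moves_between_sites]

    # Strong: at most one public cloud, and no workload fails over or
    # bursts across the cloud/data-center boundary.
    if len(clouds_used) <= 1 and not mobile:
        return "strong candidate"

    # Poor: a workload moves among several public clouds, or treats the
    # cloud and the data center as one shared resource pool.
    for a in mobile:
        app_clouds = a.sites - {ON_PREM}
        if len(app_clouds) > 1 or (ON_PREM in a.sites and app_clouds):
            return "poor candidate"

    # Everything else falls between the two extremes.
    return "in the middle: define multi-cloud goals"


hosting_map = [App("web", {"aws"}), App("billing", {"aws", "on-prem"})]
print(assess(hosting_map))  # strong candidate
```

Keeping the map as plain data makes it easy to revisit the assessment as applications are added or moved.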

2. Determine multi-cloud goals

Most companies fall somewhere in the middle of these two extremes. If this is the case, the next step is to define your multi-cloud strategy. Determine whether you have a static multi-cloud model -- where you place application components into fixed cloud provider hosting groups -- or a dynamic multi-cloud model -- where components move freely across different cloud providers' platforms.

For organizations with a static model, using a managed Kubernetes service in each of your public clouds is likely justified, but only if each cloud provider tightly integrates Kubernetes with a tool, such as Istio, that can distribute work and manage distributed processes. In that case, using each cloud provider's tools would likely improve your container hosting capabilities.

Those with a dynamic multi-cloud model, however, most likely won't benefit from cloud providers' managed Kubernetes services. Instead, they need an overarching orchestration approach that crosses cloud boundaries freely. These enterprises should look to deploy their own Kubernetes orchestration platform with cloud-agnostic tools.
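
The static-versus-dynamic distinction, and the recommendation that follows from it, can be expressed as a short rule. This is a hypothetical sketch: the placement map (component name to the set of providers it may run on) and the per-provider `integrated` flag, which stands in for tight Kubernetes integration with a tool such as Istio, are assumptions rather than anything a cloud provider exposes.

```python
from typing import Mapping


def multicloud_model(placement: Mapping[str, set[str]]) -> str:
    """'static' if every component is pinned to exactly one provider, else 'dynamic'."""
    return "static" if all(len(p) == 1 for p in placement.values()) else "dynamic"


def recommend(placement: Mapping[str, set[str]], integrated: Mapping[str, bool]) -> str:
    """Map the multi-cloud model to a hosting approach (illustrative rules only).

    integrated[provider] is an assumed flag meaning the provider tightly
    integrates its managed Kubernetes with a work-distribution tool such as Istio.
    """
    if multicloud_model(placement) == "dynamic":
        # Components move across clouds, so orchestration must span providers.
        return "self-deployed, cloud-agnostic Kubernetes"
    providers = {p for allowed in placement.values() for p in allowed}
    if all(integrated.get(p, False) for p in providers):
        return "managed Kubernetes service in each cloud"
    return "managed service not clearly justified"


placement = {"frontend": {"gcp"}, "orders": {"aws"}}
print(recommend(placement, {"gcp": True, "aws": True}))
# managed Kubernetes service in each cloud
```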

3. Choose how you want to commit

Cloud-hosted managed Kubernetes services won't integrate with the native features of other cloud providers. This means that if you commit to these services in a multi-cloud model, you will, in most cases, also commit to orchestrating each of your public clouds separately.

Alternatively, if you select a software distribution of Kubernetes, such as Red Hat OpenShift, you'll have to deploy both your applications and Kubernetes itself in each of your cloud domains. You'll also have to manage the availability of Kubernetes components and their connectivity to the control plane.
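
Managing control plane availability across clouds usually starts with confirming that each cluster's API server is reachable. The sketch below is an illustration under stated assumptions: it relies on the Kubernetes API server's `/readyz` endpoint (Kubernetes 1.16 and later), and it assumes anonymous requests to that endpoint are permitted and the cluster certificate is trusted by Python's default TLS context. Production clusters often require a CA bundle or credentials instead.

```python
import ssl
import urllib.request
from typing import Callable, Mapping


def http_probe(endpoint: str, timeout: float = 5.0) -> bool:
    """Return True if GET <endpoint>/readyz answers with HTTP 200."""
    # Assumes anonymous access to /readyz and a certificate trusted by the
    # default SSL context; real clusters may need a CA bundle or a token.
    ctx = ssl.create_default_context()
    try:
        with urllib.request.urlopen(f"{endpoint}/readyz", timeout=timeout, context=ctx) as resp:
            return resp.status == 200
    except OSError:  # covers DNS, TLS, timeout and HTTP error responses
        return False


def check_control_planes(
    endpoints: Mapping[str, str],
    probe: Callable[[str], bool] = http_probe,
) -> dict[str, bool]:
    """Probe each cluster's control plane and report reachability by cluster name."""
    return {name: probe(url) for name, url in endpoints.items()}
```

You would call `check_control_planes` with one entry per cloud domain, mapping a cluster name you choose to its API server URL. The `probe` parameter is a deliberate seam: you can swap in a check that uses your clusters' own credentials, or a stub for testing without network access.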

Stackpoint.io is one common multi-cloud framework for Kubernetes. It supports the three major public cloud providers (AWS, Azure and Google) as well as on-premises deployments. With Stackpoint.io, enterprises can create a universal, multi-cloud Kubernetes control plane that unifies deployment.

Editor's note: Stackpoint.io was acquired by NetApp in December 2018, and is now called NetApp Kubernetes Service. Support for public cloud providers remains the same, and on-premises support via VMware is in public preview and functional, according to the company.

Lastly, consider your cloud provider's commitment to containers and Kubernetes, and its strategy for evolving the Kubernetes ecosystem. Hybrid cloud users need a federation tool to oversee Kubernetes both in the cloud and in the data center, and currently this comes mostly from data center giants, including Red Hat, VMware and IBM. Cloud providers are now looking to offer services that fuse managed Kubernetes and hybrid cloud; Google Anthos is one example. Microsoft will likely address this in its Azure platform via Azure Arc, although Arc extends the Azure control plane over other environments, absorbing them rather than federating with them. AWS, meanwhile, continues to favor a cloud-specific approach.