
The hybrid cloud challenge CIOs need to address

Your IT team needs a standardized approach to application development to truly benefit from a hybrid cloud. Containers and Kubernetes may be the key to making that happen.

CIOs are confronted with a real challenge when they seek to deploy hybrid clouds. They need to create an application model that addresses their data center, security and compliance rules but also takes full advantage of the elasticity of the public cloud.

The biggest benefit of cloud computing is agility -- the ability to replace broken components or scale overloaded ones. But CIOs are finding that cloud front ends are less resilient than expected when incorporated into hybrid cloud architectures. That's because the back-end data center portions of these applications can't respond to problems with the same agility. In fact, scalable cloud front ends can overload on-premises, legacy applications and negate the public cloud benefits.
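The overload risk is simple arithmetic. The sketch below uses made-up figures (a back end that handles 500 requests per second and front-end replicas that each forward 40) to show how one extra replica can push a fixed-capacity back end past its limit. The function names and numbers are illustrative assumptions, not measurements.

```python
import math


def backend_load(front_end_replicas: int, requests_per_replica: float) -> float:
    """Requests per second the front end forwards to the back end."""
    return front_end_replicas * requests_per_replica


def max_safe_replicas(backend_capacity: float, requests_per_replica: float) -> int:
    """Largest front-end replica count a fixed-capacity back end can absorb."""
    return math.floor(backend_capacity / requests_per_replica)


# Hypothetical figures: a legacy back end that handles 500 requests/second,
# and front-end replicas that each forward 40 requests/second at peak.
BACKEND_CAPACITY = 500.0
REQUESTS_PER_REPLICA = 40.0

limit = max_safe_replicas(BACKEND_CAPACITY, REQUESTS_PER_REPLICA)
for replicas in (limit, limit + 1):
    load = backend_load(replicas, REQUESTS_PER_REPLICA)
    status = "overloaded" if load > BACKEND_CAPACITY else "ok"
    print(f"{replicas} replicas -> {load:.0f} req/s ({status})")
```

In practice the per-replica request rate varies, so a ceiling like this is only a starting point for setting autoscaling limits on the cloud side.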

Third-party vendors and cloud providers have added more container and Kubernetes services to respond to these hybrid cloud challenges, but this disconnect between on-premises and public cloud models remains one of the central obstacles for CIOs today.

Why CIOs turn to containers, Kubernetes and PaaS models

A combination of containerized applications and Kubernetes deployments works for all kinds of scenarios, from legacy monolithic applications to microservices-based cloud applications. This strategy can unify deployment and redeployment practices across a hybrid cloud. And since all major public cloud providers support containers and Kubernetes, and offer managed Kubernetes services, a multi-domain Kubernetes approach can also unify deployment across multiple public clouds.

However, the container/Kubernetes approach is still incomplete. When you consider the complexity of cloud-native component relationships, application deployment really requires a complete model for workflow connection and service discovery. But in order to implement such a model, you need a development framework, which around the mid-2010s would have been called a PaaS.

Today, PaaS is being redefined for hybrid and multi-cloud, since programming still requires a language and a set of APIs that provide applications with access to hardware, software and network resources. The APIs define a platform, a kind of virtual computer and operating system that, in this case, represents a combination of data center and public cloud resources. Until we clearly define this new PaaS model, hybrid cloud users will have to optimize the evolving set of add-ons to build a unified model of their own.

The most immediate disadvantage of the revamped PaaS model is a lack of middleware consistency. Organizations need a standardized way to discover services, bind containers into workflows and monitor conditions to allow applications to scale under load.

It's not uncommon to find different applications in use across a business that rely on different tools and practices. This increases overall operations cost and complexity, and eventually results in compatibility problems if these applications share services and microservices.

Centralize hybrid and multi-cloud controls with Kubernetes domains

The best way to synchronize on-premises and cloud-based assets is to consider your hybrid cloud infrastructure as a series of Kubernetes "domains" -- Kubernetes clusters that are aligned to the hybrid cloud relationship, rather than to data center locations. Components will normally scale and redeploy within a domain, so if you want to scale across multiple clouds or between a cloud and data center, define a set of boundary domains. These will hold the resources you'll use at the boundary between your public and private clouds to handle aspects such as host scaling or resilience. Be sure to select tools that support this kind of separation.
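As a rough illustration of the boundary-domain idea, the sketch below places new replicas in a component's home domain first and spills the remainder into a boundary domain. The `Domain` class, the capacity figures and the `place_replicas` helper are hypothetical and only model the placement logic. They are not part of Kubernetes or of any multi-cluster tool.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Domain:
    """A Kubernetes domain (cluster) with a simple replica capacity."""
    name: str
    capacity: int      # maximum replicas the domain can host
    running: int = 0   # replicas currently placed

    @property
    def free(self) -> int:
        return self.capacity - self.running


def place_replicas(requested: int, home: Domain, boundary: Domain) -> Dict[str, int]:
    """Fill the home domain first, then overflow into the boundary domain."""
    in_home = min(requested, home.free)
    in_boundary = min(requested - in_home, boundary.free)
    home.running += in_home
    boundary.running += in_boundary
    return {
        home.name: in_home,
        boundary.name: in_boundary,
        "unplaced": requested - in_home - in_boundary,
    }


# Hypothetical domains: a nearly full data center and a cloud-edge boundary pool.
data_center = Domain(name="data-center", capacity=10, running=8)
cloud_boundary = Domain(name="cloud-boundary", capacity=5)

print(place_replicas(6, data_center, cloud_boundary))
```

Keeping the overflow target explicit makes it easier to see, and to limit, which workloads are allowed to cross between the data center and the public cloud.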

Operations teams face their own challenges in this emerging PaaS approach. Kubernetes doesn't require users to define specific resource needs or compliance risks during development, and it's nearly impossible for ops to classify and test policies on these containers after the fact. The lack of a complete PaaS layer means ops teams have to hybridize their policy controls.

Domain-separated clusters can start to address this problem, as it's easier to define and enforce resource policies for similar containerized applications. If you have containers with unique security or compliance requirements within your domains, label your nodes and use policies to filter pods to the right nodes, or use taints and tolerations to enforce avoidance policies.
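To make the label and taint mechanics concrete, here is a deliberately simplified model of the placement check. A pod is eligible for a node only if every label in its node selector matches and it tolerates every taint on the node. The real Kubernetes scheduler considers much more (taint effects, affinity rules, resources), and the node, pod and label names below are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Taint = Tuple[str, str]  # (key, value); every taint is treated as NoSchedule here


@dataclass
class Node:
    name: str
    labels: Dict[str, str] = field(default_factory=dict)
    taints: List[Taint] = field(default_factory=list)


@dataclass
class Pod:
    name: str
    node_selector: Dict[str, str] = field(default_factory=dict)
    tolerations: List[Taint] = field(default_factory=list)


def fits(pod: Pod, node: Node) -> bool:
    """Simplified check: selector labels must match and all taints be tolerated."""
    labels_match = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    taints_tolerated = all(taint in pod.tolerations for taint in node.taints)
    return labels_match and taints_tolerated


def eligible_nodes(pod: Pod, nodes: List[Node]) -> List[str]:
    return [node.name for node in nodes if fits(pod, node)]


# Hypothetical cluster: one general node and one reserved for regulated workloads.
nodes = [
    Node("general-node", labels={"compliance": "standard"}),
    Node("pci-node", labels={"compliance": "pci"}, taints=[("dedicated", "pci")]),
]
payments = Pod("payments", node_selector={"compliance": "pci"},
               tolerations=[("dedicated", "pci")])
web = Pod("web")  # no selector, no tolerations

print(eligible_nodes(payments, nodes))
print(eligible_nodes(web, nodes))
```

The label steers the regulated pod toward the right node, while the taint keeps every other pod off it. Using both covers the "filter to" and "avoid" halves of the policy.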

Build a consistent Kubernetes ecosystem

Managed Kubernetes services, such as Amazon Elastic Kubernetes Service or Google Kubernetes Engine, are attractive tools for deploying cloud front ends for on-premises applications as part of a mobile or web modernization effort. While these services are all built around Kubernetes, each one adds enough elements of its own that it's difficult to integrate applications across platforms. This creates problems for organizations that want to do more cross-cloud backups or scaling across environments.

To resolve this hybrid and multi-cloud challenge, follow these steps:

- Define an application model.

- Centralize the assembly of your Kubernetes ecosystem.

- Monitor trends in applications, infrastructure and the Kubernetes ecosystem.

Your development model might start with APIs that are somewhat specific to your environments, but the goal should be to create one approach that spans your hybrid and multi-cloud framework. That will simplify all the other problems by tailoring your processes to an application's needs and specifications.

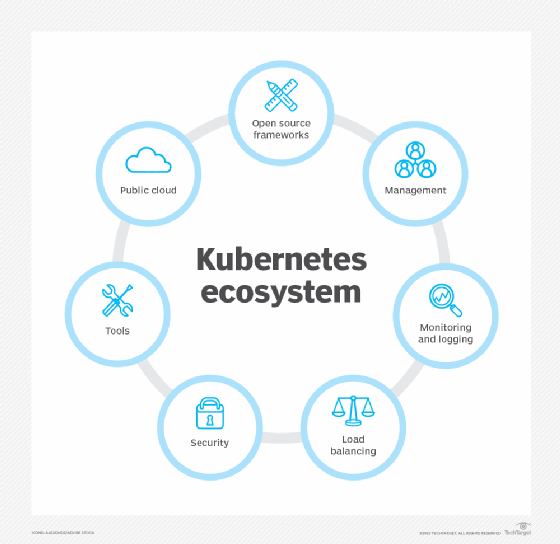

From there, centralize your Kubernetes toolkit to deploy applications across all your domains. This toolkit should include the complete set of Kubernetes and Kubernetes-related tools you plan to use. Craft a single strategy that covers as much of the Kubernetes ecosystem as your needs require.
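One lightweight way to keep a centralized toolkit honest is to compare what each domain actually runs against a single baseline. The sketch below assumes you can already collect a tool-to-version mapping per domain. The tool names, version strings and the `find_drift` helper are placeholders, not real product data.

```python
from typing import Dict

Toolkit = Dict[str, str]  # tool name -> version string


def find_drift(baseline: Toolkit, domains: Dict[str, Toolkit]) -> Dict[str, Dict[str, str]]:
    """Report, per domain, every baseline tool that is missing or at another version."""
    drift: Dict[str, Dict[str, str]] = {}
    for domain, installed in domains.items():
        issues: Dict[str, str] = {}
        for tool, wanted in baseline.items():
            actual = installed.get(tool)
            if actual is None:
                issues[tool] = "missing"
            elif actual != wanted:
                issues[tool] = f"has {actual}, expected {wanted}"
        if issues:
            drift[domain] = issues
    return drift


# Placeholder baseline and inventories; real data would come from your own tooling.
baseline = {"kubernetes": "v2", "service-mesh": "v1", "monitoring": "v3"}
domains = {
    "data-center": {"kubernetes": "v2", "service-mesh": "v1", "monitoring": "v3"},
    "cloud-a": {"kubernetes": "v2", "monitoring": "v2"},
}

for domain, issues in find_drift(baseline, domains).items():
    print(domain, issues)
```

A report like this only flags inconsistencies. Deciding whether a given difference matters still depends on how your applications use each tool.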

As you consider tools and services, target on-premises vendors like Red Hat and VMware as well as cloud platforms such as Google Cloud, Microsoft Azure and AWS. Add in service discovery, service mesh, monitoring and management, and multi-domain Kubernetes support as needed -- capabilities increasingly included in managed Kubernetes services.

Even after you've completed these tasks, continue to keep tabs on the latest relevant trends. Be prepared to adjust your strategy if you find previous choices now look suboptimal. Don't fall too far behind the state of the art in this rapidly evolving field, or the time and cost associated with getting up to date may be more than you can afford.