Getty Images/iStockphoto

Selecting an AWS EC2 instance for machine learning workloads

Certain workloads require specific EC2 instances. For AI and machine learning workloads, teams need to find instances that balance app requirements, performance needs and costs.

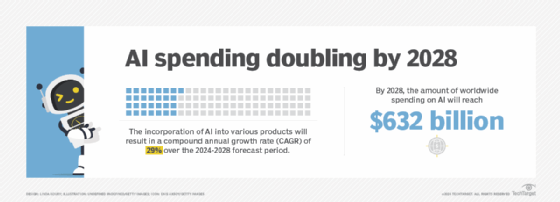

Enterprises across numerous industries continue to see the value of AI. Whether it is to automate tasks, optimize business processes, detect fraud or make more informed decisions based on forecasting, enterprises are looking for ways to profit off AI and machine learning technology. To best optimize the power of AI, organizations must use the right EC2 instances.

One of Amazon SageMaker's main features is to provide and manage compute capacity for the multiple stages of machine learning applications. Even though it offers a few serverless options, many scenarios require the use of EC2 instances managed by the SageMaker service.

When choosing an instance type, users must fully understand their workload and evaluate the task's compute and application requirements. For an ML or AI workload, users require instances that provide optimal performance for deep learning and computing. The accelerated computing instance family, which includes p5, g5, trn1 and inf2 instances, can provide teams with this functionality.

Let's discuss why instance types matter, the EC2 instance options for ML workloads and how teams can determine the best instance for their workloads.

Why does instance type matter?

The available EC2 instance types in SageMaker vary according to the task being performed, such as development, training and inference. Not all instance types are suitable for any type of task; for example, types available for training are not necessarily available for notebooks or processing. Also, there are tasks that could be more compute- or memory-intensive or require particular storage or network throughput.

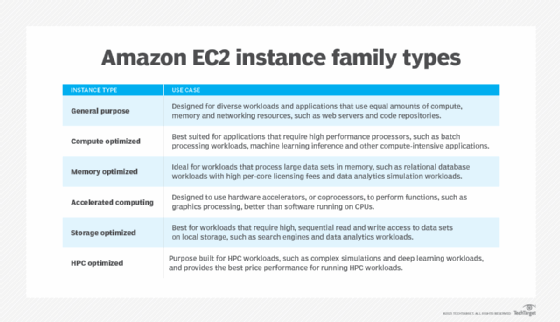

SageMaker provides several general-purpose EC2 instance families, as well as more optimized ones as shown in this chart. These instance families contain specialized instance types created for a variety of tasks.

Some instances offer a combination of optimizations -- for example, compute and network (c5n) or memory and storage (r5d).

The amount of processed data also has a significant impact on the type of instance that is optimal for a particular task. If data is stored inside EC2 instances, a storage-optimized instance type is a good fit. If large amounts of data will be retrieved from external storage, then network-optimized instances could be a good fit. Accelerated computing instance types are recommended for deep learning and large language models (LLMs).

EC2 instances for machine learning

SageMaker offers instances with accelerated computing instances, such as p5, g5, trn1 and inf2. These are optimal for multiple ML tasks. Let's take a closer look at the following instances.

P5

P5 instances are powered by Nvidia H100 GPUs and provide high performance for deep learning and computing. At this time, SageMaker only offers ml.p5.48xlarge, which, depending on the component type, can cost between $113 and $118 per hour. This price point makes it less accessible for many teams.

These instances are a good fit for extremely demanding workloads, such as generative AI apps, LLMs, graphics and video generation in development, training and inference.

G5

G5 instances are powered by Nvidia A10G Tensor Core GPUs and are also a good fit for heavy training and inference workloads. SageMaker offers more size options for G5 instances, delivering more flexibility to application owners regarding compute capacity and cost management compared to P5 instances. The sizes include the following:

- ml.g5.xlarge.

- ml.g5.2xlarge.

- ml.g5.4xlarge.

- ml.g5.8xlarge.

- ml.g5.12xlarge.

- ml.g5.48xlarge.

Trn1

Trn1 instances use the AWS Trainium chip, which was built explicitly for deep learning training tasks while keeping costs low. According to AWS, it offers up to 50% cost-to-train savings over similar EC2 instances. This makes it more accessible to business of all sizes. Also, for sustainability-conscious businesses, it is said to be 25% more energy-efficient for deep learning training compared to other accelerated computing EC2 instances.

Currently, two sizes are available: ml.trn1.2xlarge and ml.trn1.32xlarge.

Common use cases include natural language processing, computer vision and search, as well as recommendation and ranking.

Inf2

Inf2 instances use AWS Inferentia2 chips and are intended for deep learning inference tasks. Like trn1 instances, inf2 instances provide sustainability benefits and deliver up to 50% better performance over similar Amazon EC2 instances.

The available instance sizes are the following:

- ml.inf2.xlarge.

- ml.inf2.8xlarge.

- ml.inf2.24xlarge.

- ml.inf2.48xlarge.

They are commonly used for image and text generation and summarization, as well as speech recognition.

Which accelerated instance is right for you?

Given that the process of releasing ML applications consists of multiple steps, it requires having different types of compute capacity throughout the whole lifecycle. Capacity depends on the tasks being performed. Examples of tasks related to deploying ML models include the following:

- Development.

- Preprocessing and postprocessing.

- Evaluation.

- Training.

- Data preparation.

- Inference.

In most cases, each type of task has its own compute and application requirements and challenges. It is important to choose the appropriate EC2 instances, depending on the specific task being performed.

Examine performance needs and requirements

Before choosing a particular instance type and size, it is critical to identify the performance requirements for each task. There are multiple cases where optimized instances can have a 30% to 75% higher cost compared to more generic ones with similar capacity. There are business goals that could justify the additional cost, such as maximum latency or volume of data to be processed, trained or inferred.

There are cases where a generic instance type could meet the application requirements. But there are also situations where higher performance can result in lower cost. Execute multiple tests for each task to determine performance needs. Each test must be done with the expected data and transaction volume. Calculate cost using multiple instance types, and measure relevant metrics that impact UX and application performance.

Look at the metrics

CloudWatch offers relevant metrics in areas such as invocations, latency, errors, and CPU and memory utilization. Given that machine learning tasks can quickly reach several thousands of dollars, focus on optimal infrastructure costs for specific requirements. CloudWatch metrics are an essential tool to assess if a particular instance type is the right one for a particular workload's objectives.

Save money

Amazon SageMaker Savings Plans can reduce cost by committing users to an hourly spend for a period of one or three years. Depending on the instance type and the commitment period, savings can range from approximately 20% to even 64%. It's important to note that not all instance types for a particular component type, such as processing, inference, training and notebooks, are supported. For example, SageMaker doesn't support Reserved Instances.

Ernesto Marquez is owner and project director at Concurrency Labs, where he helps startups launch and grow their applications on AWS. He enjoys building serverless architectures, building data analytics solutions, implementing automation and helping customers cut their AWS costs.