
Prepare your data center for a hybrid cloud strategy

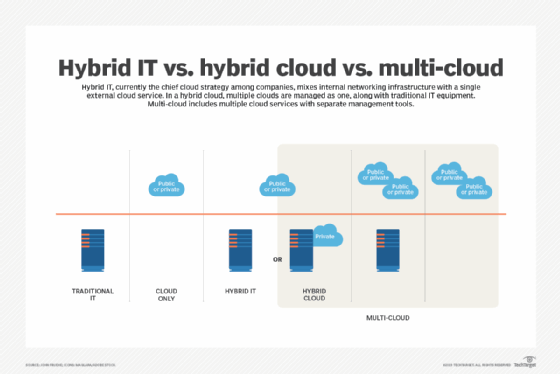

On-premises assets and public cloud resources won't work together without a clear hybrid cloud strategy. Learn how to prepare your on-premises architecture for hybrid cloud.

An IT team must weigh two important questions before it implements a hybrid cloud strategy. How should it reorganize on-premises assets, and how should it build an application architecture and deployment model?

IT teams can't have a successful hybrid cloud without addressing these two questions, and they'll need to do so in one coordinated strategy that involves several important decisions. Let's walk through some of those choices to help your team plan for its hybrid cloud environment.

Establish your API DMZ

A good hybrid cloud strategy assumes that the public cloud provides the application front end, including informational features designed to encourage or support use of a transactional application. The transactional application itself is most likely to run in the data center, which means the data center has to expose APIs that a cloud front end can reasonably connect to.

The first step is to define, secure and expose the proper APIs to link the cloud and data center. Think of this hybrid cloud boundary as a demilitarized zone (DMZ), a place that has its own rules, but also has to support both worlds and in some cases be a part of each one. These APIs are the border in the middle of the DMZ.

APIs also represent a functional handoff between the cloud and the data center. Cloud-architected and -deployed resources sit on one side of the APIs; on the other side of the DMZ are the core business applications that policy, cost and other factors keep on premises. The APIs expose features that the cloud can use without requiring any changes to those core applications. This way, development in the cloud and development in the data center remain partitioned.
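To make the handoff concrete, here is a minimal sketch, assuming a hypothetical on-premises function `core_place_order` that must not be modified. The DMZ API wraps it in a narrow facade that validates input at the border and returns only the fields the cloud front end needs. All names here (`core_place_order`, `DmzOrderApi`, `MAX_QUANTITY`) are illustrative, not taken from any product.

```python
from dataclasses import dataclass


# Hypothetical core business function: stays on premises and is never modified.
def core_place_order(customer_id: str, sku: str, quantity: int) -> dict:
    return {
        "order_id": f"{customer_id}-{sku}-{quantity}",
        "status": "accepted",
        "internal_ledger_ref": "L-0001",  # internal detail that should not leave the data center
    }


@dataclass(frozen=True)
class OrderRequest:
    customer_id: str
    sku: str
    quantity: int


class DmzOrderApi:
    """Cloud-facing facade: exposes one feature and enforces rules at the boundary."""

    MAX_QUANTITY = 100  # hypothetical policy limit enforced in the DMZ

    def place_order(self, req: OrderRequest) -> dict:
        if not req.customer_id or not req.sku:
            raise ValueError("customer_id and sku are required")
        if not 1 <= req.quantity <= self.MAX_QUANTITY:
            raise ValueError("quantity out of range")
        result = core_place_order(req.customer_id, req.sku, req.quantity)
        # Return only what the cloud front end needs; internal fields stay behind the border.
        return {"order_id": result["order_id"], "status": result["status"]}


api = DmzOrderApi()
print(api.place_order(OrderRequest("c42", "sku-7", 3)))
```

The cloud side can evolve freely as long as it respects this contract, and the core function never changes.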

You also decide exactly where to draw that line. Higher-level, abstracted APIs limit how much the cloud side can change. Alternatively, you could expose lower-tier APIs, perhaps all the way down to the database access and update layers. This shifts much of the customization work to the cloud, but it also creates security and compliance risks that you'll have to mitigate.
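The trade-off between exposure levels can be sketched as a simple policy table. This is a minimal illustration that assumes three hypothetical tiers and a hypothetical set of operations; the rule that anything below the business tier needs a compliance review is an assumption, not a standard.

```python
from enum import IntEnum


class ExposureTier(IntEnum):
    # Higher value = more abstract API; lower value = closer to the database layer.
    DATABASE = 1
    SERVICE = 2
    BUSINESS = 3


# Hypothetical operations the data center could expose, tagged with their tier.
OPERATIONS = {
    "place_order": ExposureTier.BUSINESS,
    "get_order_status": ExposureTier.BUSINESS,
    "reserve_inventory": ExposureTier.SERVICE,
    "update_inventory_row": ExposureTier.DATABASE,
    "run_sql_query": ExposureTier.DATABASE,
}


def exposed_operations(lowest_tier: ExposureTier) -> list[str]:
    """Operations exposed when the DMZ line is drawn at lowest_tier."""
    return sorted(op for op, tier in OPERATIONS.items() if tier >= lowest_tier)


def needs_compliance_review(lowest_tier: ExposureTier) -> bool:
    # Assumption: exposing anything below the business tier triggers extra review.
    return lowest_tier < ExposureTier.BUSINESS


for tier in ExposureTier:
    print(tier.name, exposed_operations(tier), needs_compliance_review(tier))
```

Drawing the line lower exposes more operations to the cloud, which is exactly where the extra security and compliance work comes from.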

Unlock scalability with hybrid cloud containers

Once you've established your API DMZ, you need to ensure your data center can accommodate the scalability and resiliency of the cloud.

Public clouds can quickly add instances as load increases and replace failed components, but it doesn't make sense to build a 10-lane superhighway that empties onto a two-lane dirt road, i.e., an unprepared data center. So, as you add scalability to your public cloud component, make sure the components exposed through your DMZ APIs can scale to match.
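A quick capacity calculation shows why the back end must scale with the front end. The sketch assumes, hypothetically, that each cloud front-end instance sends a steady number of requests per second to the DMZ APIs and that each data center component instance handles a fixed throughput. Real traffic is burstier, so treat the result as a lower bound.

```python
def backend_instances_needed(frontend_instances: int,
                             requests_per_frontend: int,
                             capacity_per_backend: int,
                             headroom_pct: int = 20) -> int:
    """Minimum back-end instances to absorb front-end load plus a safety headroom."""
    # Work in integers (scaled by 100) to avoid floating-point rounding surprises.
    load = frontend_instances * requests_per_frontend * (100 + headroom_pct)
    return -(-load // (capacity_per_backend * 100))  # integer ceiling division


# Illustrative numbers only: the cloud scales from 4 to 40 front-end instances.
for n in (4, 40):
    print(n, backend_instances_needed(n, requests_per_frontend=50, capacity_per_backend=200))
```

With these assumed figures, the data center side has to grow from 2 to 12 instances; if it can't, the DMZ APIs become the dirt road.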

At this point, you'll need to define a resource pool and create components that can handle your scaling demands, such as load balancers and some form of state control. You also need a scheduler that operates across the resource pool you've created. You can address all these requirements with a single core concept: container hosting.
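The three pieces named above can be illustrated together in a toy model: a resource pool of component instances, a round-robin load balancer, and state kept in a shared store rather than inside any instance, so any instance can serve any request and instances can be replaced freely. This is a minimal in-memory sketch under those assumptions; in practice the store would be a database or cache, and the container platform would provide the balancer and scheduler.

```python
import itertools


class SharedStateStore:
    """Stand-in for an external state store such as a database or cache."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=0):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


class Instance:
    """A stateless component instance; session state lives in the shared store."""

    def __init__(self, name, store):
        self.name, self.store = name, store

    def handle(self, session_id):
        count = self.store.get(session_id) + 1
        self.store.put(session_id, count)
        return self.name, count


class ResourcePool:
    """Toy scheduler (scale_to) plus round-robin load balancer (route)."""

    def __init__(self, store):
        self.store, self.instances = store, []
        self._rr = None

    def scale_to(self, n):
        # Replace the instance set; state survives because it lives in the store.
        self.instances = [Instance(f"inst-{i}", self.store) for i in range(n)]
        self._rr = itertools.cycle(self.instances)

    def route(self, session_id):
        return next(self._rr).handle(session_id)


pool = ResourcePool(SharedStateStore())
pool.scale_to(2)
print([pool.route("s1") for _ in range(3)])  # [('inst-0', 1), ('inst-1', 2), ('inst-0', 3)]
pool.scale_to(3)
print(pool.route("s1"))  # ('inst-0', 4)
```

Keeping state out of the instances is what lets the pool grow, shrink or replace failed members without losing sessions.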

Containers are an efficient form of virtualization because a server can host more containers than VMs. Containers are easy to deploy in the data center, even for legacy applications, and they suit a wide range of stateful and scalable application models. In the cloud, you can deploy your own container system on compute instances, or you can use a cloud provider's managed container service. Best of all, there are many ways to harmonize your cloud and data center environments through containers.

Kubernetes is the go-to container scheduling and orchestration tool, and it's equally suitable for private data centers and public clouds. A number of Kubernetes-based offerings, such as Google's Anthos, IBM's Kabanero, VMware's Topaz and Red Hat's OpenShift, can support hybrid orchestration and scheduling, even across the previously discussed API DMZ.
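Because Kubernetes uses the same declarative objects in the data center and in the cloud, one definition can describe a scalable DMZ component in either environment. The sketch below builds a standard `apps/v1` Deployment and an `autoscaling/v2` HorizontalPodAutoscaler as Python dictionaries and wraps them in a `List` object, which `kubectl apply -f` accepts as JSON. The component name, image and thresholds are hypothetical placeholders, and none of the platform-specific features of the offerings named above are shown.

```python
import json


def dmz_component_manifests(name: str, image: str, min_replicas: int,
                            max_replicas: int, cpu_target_pct: int = 70) -> dict:
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": min_replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        # CPU requests are required for CPU-utilization autoscaling.
                        "resources": {"requests": {"cpu": "250m", "memory": "256Mi"}},
                    }]
                },
            },
        },
    }
    hpa = {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": name},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": cpu_target_pct},
                },
            }],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, hpa]}


manifests = dmz_component_manifests("order-api", "registry.example.com/order-api:1.0", 2, 10)
print(json.dumps(manifests, indent=2))
```

Saving the output to a file and applying it to a cluster in either environment should produce the same autoscaled component, which is the harmonization the paragraph describes.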

Prepare your cross-environment orchestration

The next important consideration is cross-boundary orchestration and scheduling. You need to decide if your scalable DMZ components will be bound to the cloud, or whether you can schedule components across that boundary. If you choose the latter, then the data center and the cloud can back each other up or provide additional resources as needed.
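The difference between the two choices can be sketched as a placement function. It assumes, hypothetically, that each environment reports its free capacity in replica slots. In cloud-bound mode, every scalable DMZ component runs in the cloud; in cross-boundary mode, replicas fill the data center first and overflow into the cloud, and the cloud takes everything if the data center is unhealthy. The data-center-first preference is an illustrative assumption.

```python
def place_replicas(replicas: int, dc_free: int, cloud_free: int,
                   cross_boundary: bool, dc_healthy: bool = True) -> dict:
    """Return how many replicas to run in each environment."""
    if not cross_boundary:
        # Cloud-bound: scalable DMZ components never cross into the data center.
        if replicas > cloud_free:
            raise RuntimeError("insufficient cloud capacity")
        return {"datacenter": 0, "cloud": replicas}
    # Cross-boundary: prefer the data center, then burst or fail over to the cloud.
    in_dc = min(replicas, dc_free) if dc_healthy else 0
    in_cloud = replicas - in_dc
    if in_cloud > cloud_free:
        raise RuntimeError("insufficient combined capacity")
    return {"datacenter": in_dc, "cloud": in_cloud}


print(place_replicas(10, dc_free=6, cloud_free=20, cross_boundary=True))
# {'datacenter': 6, 'cloud': 4}   (bursting)
print(place_replicas(10, dc_free=6, cloud_free=20, cross_boundary=True, dc_healthy=False))
# {'datacenter': 0, 'cloud': 10}  (failover)
```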

Using Kubernetes in both the cloud and the data center provides the most robust and flexible option for cloud bursting and for using cloud and data center resources as backup should something fail. If data center and cross-boundary scalability is important to you, you'll want to organize and partition your servers to optimize for it.
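If you run a single Kubernetes cluster whose nodes span both environments (one possible topology; separate clusters per environment are another), partitioning can be expressed with node labels and scheduling preferences. The fragment below assumes a hypothetical node label `example.com/location` with the values `datacenter` and `cloud`. The `nodeAffinity` structure with `preferredDuringSchedulingIgnoredDuringExecution` is standard pod-spec syntax; because it is a soft preference, pods land on data center nodes when they fit and on other nodes when they don't.

```python
import json

LOCATION_LABEL = "example.com/location"  # hypothetical label your team applies to nodes


def prefer_datacenter_affinity(dc_weight: int = 100, cloud_weight: int = 10) -> dict:
    """Soft node affinity: favor data center nodes, then cloud nodes."""

    def term(weight: int, location: str) -> dict:
        # Kubernetes requires preferred-term weights in the range 1-100.
        return {
            "weight": weight,
            "preference": {"matchExpressions": [
                {"key": LOCATION_LABEL, "operator": "In", "values": [location]}
            ]},
        }

    return {"nodeAffinity": {"preferredDuringSchedulingIgnoredDuringExecution": [
        term(dc_weight, "datacenter"),
        term(cloud_weight, "cloud"),
    ]}}


# Goes under spec.template.spec.affinity in a Deployment.
print(json.dumps({"affinity": prefer_datacenter_affinity()}, indent=2))
```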

The servers that host your containers as part of this scalable resource pool should be located as close as possible to the data center's internet connections, where user traffic enters, to minimize latency and optimize performance. Standardize their configurations as much as possible, and make sure the application components themselves use only resources that are widely available in both your public and private environments. This improves the overall efficiency of your resource pool.
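The advice to rely only on widely available resources can be turned into a simple pre-deployment check: compare what a component requires against the capabilities offered in every environment of the pool. The capability names and inventories below are hypothetical examples, not a standard vocabulary.

```python
# Hypothetical capability inventories for each environment in the resource pool.
ENVIRONMENTS = {
    "datacenter": {"x86_64", "linux", "nfs-storage", "gpu"},
    "cloud": {"x86_64", "arm64", "linux", "block-storage", "nfs-storage"},
}


def portable_capabilities(envs: dict) -> set:
    """Capabilities available in every environment (set intersection)."""
    return set.intersection(*envs.values())


def non_portable_requirements(required: set, envs: dict) -> set:
    """Requirements that would pin a component to only part of the pool."""
    return required - portable_capabilities(envs)


print(sorted(non_portable_requirements({"x86_64", "linux", "gpu"}, ENVIRONMENTS)))  # ['gpu']
```

A non-empty result flags a component that can't move freely across the pool, which undermines bursting and failover.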

The deeper you extend your DMZ into the data center, the more important it will be to adopt a container strategy for deploying and scheduling components. Even applications that don't need to scale will benefit from this approach because it will harmonize operations practices and lower operational costs. It will also prepare your organization for a deeper commitment to the public cloud, as your hybrid cloud experience evolves.