IT teams face an uphill battle with serverless migrations

Moving serverless functions between cloud platforms comes with a range of obstacles. Study the following provider features before you start the process.

Serverless computing remains one of the most discussed, disruptive and controversial cloud technologies.

Aside from the technically inaccurate moniker -- there are servers involved, after all -- serverless generates so much debate because of its combination of benefits and drawbacks. Because of this, proponents and detractors have argued for years over whether the glass is half full or half empty. However, when it comes to serverless migrations, there are so many obstacles to overcome that some users may just see the glass as completely empty.

Pros and cons of serverless

Before we discuss the difficulties around migrations, let's review why serverless generates so much disagreement among IT pros. For starters, incorporating serverless into an infrastructure design has many benefits, including:

- Granular billing and lower costs compared with capacity-based, on-demand pricing

- Auto scalability

- Improved reliability

- Faster deployment time

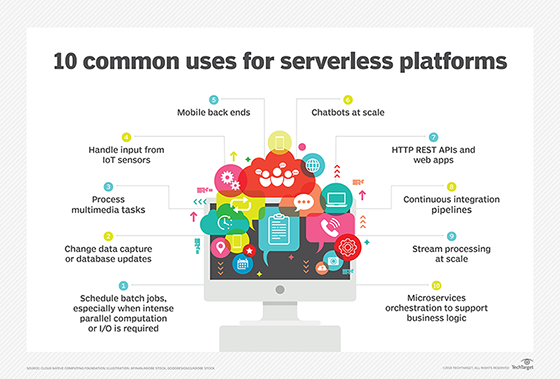

These features make serverless particularly useful for sporadic workloads that match the event-driven model used to trigger cloud-based services. There are widespread use cases, including multimedia processing, data capture and transformation, scheduled batch jobs, and stream and IoT sensor data processing.

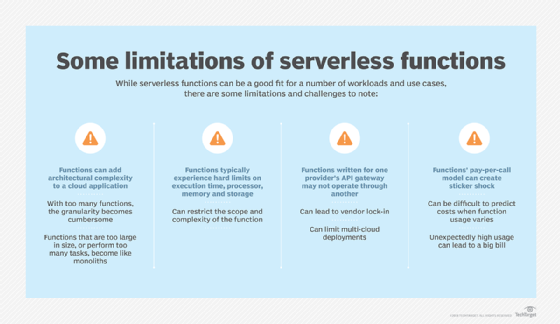

However, these benefits must be weighed against two major caveats of serverless implementations: vendor lock-in and the difficulty of porting applications from one cloud service to another. Other drawbacks of the most popular services -- namely, AWS Lambda, Azure Functions and Google Cloud Functions -- that can complicate serverless migrations include:

- Lack of interoperability, and no built-in way for implementations to communicate with each other in multi-cloud deployments

- Inconsistent startup performance, notably the latency of cold starts

- Security risks resulting from an increased attack surface, insecure dependencies and other vulnerabilities

Avoid these hiccups in serverless migrations

Since a serverless function is little more than an executable wrapper for code, moving the function itself is relatively easy. For example, functions written in Java or Python can move from AWS Lambda to Azure Functions, since Azure Functions supports most of the same languages.

However, serverless functions are never deployed in isolation; they are always part of a larger system, and that is where vendor lock-in risks arise. Such risks generally come from what the code does and how deeply it is tied to the event model, APIs and other services on a particular cloud platform.

Due to the nuances and idiosyncrasies of each cloud platform, there isn't a simple way of translating an application with serverless elements from one to another. Developers have asked for an automated wizard for serverless migrations, but don't expect one anytime soon.

The task of modifying a function for another cloud, say from AWS Lambda to Azure Functions, will vary widely depending on the language, complexity and cloud service dependencies. In general, functions written in scripting languages, like Python and JavaScript on Node.js, are the easiest to move and require minimal modifications. It is the dependencies that cause the most trouble in a serverless migration.
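One way to keep those modifications minimal is to confine provider-specific code to a thin entry point. The following is a minimal sketch, not a definitive implementation: `order_total` and `handle_body` are hypothetical names invented for illustration, the Lambda entry point assumes an API Gateway proxy event, and the Azure entry point assumes an HTTP trigger with the `azure-functions` package available at runtime.

```python
import json


def order_total(payload: dict) -> dict:
    """Provider-neutral business logic (hypothetical example)."""
    total = sum(item["price_cents"] * item["quantity"] for item in payload["items"])
    return {"total_cents": total}


def handle_body(raw_body) -> tuple:
    """Shared request handling: returns (HTTP status, JSON string)."""
    try:
        result = order_total(json.loads(raw_body or "{}"))
    except (KeyError, TypeError, ValueError) as exc:
        return 400, json.dumps({"error": str(exc)})
    return 200, json.dumps(result)


def lambda_handler(event, context):
    """AWS Lambda entry point, assuming an API Gateway proxy event."""
    status, body = handle_body(event.get("body"))
    return {"statusCode": status, "body": body}


def azure_main(req):
    """Azure Functions entry point, assuming an HTTP trigger."""
    # Imported lazily so the module still loads where the
    # azure-functions package is not installed.
    import azure.functions as func

    status, body = handle_body(req.get_body())
    return func.HttpResponse(body, status_code=status, mimetype="application/json")
```

With this layout, only the two short entry points differ between clouds; the business logic and its tests stay the same.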

And some dependencies can prove to be more of a challenge than others. For example, proprietary services that are deeply embedded into application functionality -- databases with unique capabilities such as Google Cloud Spanner or Azure Cosmos DB, AI tools and core infrastructure -- are likely harder to swap out than providers' APIs for logging and notification services.
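A notification service is one of the easier dependencies to swap because it can usually be reduced to a narrow interface. The sketch below assumes that approach: `Notifier`, `SnsNotifier`, `InMemoryNotifier` and `report_failure` are hypothetical names, and the Amazon SNS variant assumes the `boto3` package and a valid topic ARN.

```python
from typing import List, Protocol, Tuple


class Notifier(Protocol):
    """The narrow interface the function code depends on."""

    def send(self, subject: str, message: str) -> None: ...


class SnsNotifier:
    """Amazon SNS implementation; assumes boto3 is installed."""

    def __init__(self, topic_arn: str) -> None:
        import boto3  # imported lazily; only needed when running on AWS

        self._client = boto3.client("sns")
        self._topic_arn = topic_arn

    def send(self, subject: str, message: str) -> None:
        self._client.publish(TopicArn=self._topic_arn, Subject=subject, Message=message)


class InMemoryNotifier:
    """Stand-in for tests, or a placeholder while a new provider is wired up."""

    def __init__(self) -> None:
        self.sent: List[Tuple[str, str]] = []

    def send(self, subject: str, message: str) -> None:
        self.sent.append((subject, message))


def report_failure(notifier: Notifier, job_id: str) -> None:
    """Function code talks to the interface, never to a provider SDK."""
    notifier.send("Job failed", f"Job {job_id} did not complete")
```

Moving to another cloud then means writing one new class for that provider's notification service. A database with unique capabilities rarely fits behind an interface this small, which is why it is harder to replace.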

Because the following features often vary by provider, users should study them carefully before they manually migrate serverless code from one cloud to another:

- Deployment package format

- Handling of environment variables and metadata

- Source of event triggers

- Workflow design and orchestration tools

- Log data format and destination

- User authentication and role definition

- Pricing model
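Event triggers illustrate how much these details differ. The sketch below normalizes an "object created" storage event from two providers into one shape. It assumes the Amazon S3 notification format and the payload passed to a Google Cloud Storage background function; `ObjectCreated`, `from_s3_event` and `from_gcs_event` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import List
from urllib.parse import unquote_plus


@dataclass(frozen=True)
class ObjectCreated:
    """Provider-neutral view of a storage event."""

    bucket: str
    key: str


def from_s3_event(event: dict) -> List[ObjectCreated]:
    """Amazon S3 delivers a list of records with URL-encoded object keys."""
    return [
        ObjectCreated(
            bucket=record["s3"]["bucket"]["name"],
            key=unquote_plus(record["s3"]["object"]["key"]),
        )
        for record in event.get("Records", [])
    ]


def from_gcs_event(event: dict) -> List[ObjectCreated]:
    """A Cloud Storage background function receives one object per event."""
    return [ObjectCreated(bucket=event["bucket"], key=event["name"])]
```

Code downstream of these two functions can then process `ObjectCreated` values without knowing which cloud produced them.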

Open source serverless frameworks

The top three cloud vendors have little incentive to reduce the complexities around serverless migrations between their platforms, so some developers have turned to open source technologies to build serverless frameworks that can run on any public or private cloud platform. Popular container-based frameworks for serverless include Kubeless, OpenFaaS, Fission and Apache OpenWhisk. A research paper from Aalto University in Finland found OpenFaaS to be the most flexible and easily extendable of that quartet, while Kubeless was the most consistent across environments.

Knative, which has broad industry support, is an open source project that builds on Kubernetes container orchestration. It can manage the container back end for serverless functions and link together workloads under an event-driven approach. For migrations from AWS Lambda to Knative, TriggerMesh helps eliminate numerous roadblocks via its Knative Lambda Runtime, a set of Knative build templates that enable Lambda functions to run on Knative-enabled Kubernetes clusters.

Expect to see more tools in the future that reduce the overhead involved with serverless migrations. However, since services like Lambda and Google Cloud Functions essentially act as glue to connect databases, AI, analytics, logging and other components to form a composite application, moving between these platforms will probably never be completely automated or painless.