KOHb - Getty Images

Deploy ML on edge devices with SageMaker, IoT Greengrass

Amazon SageMaker, ONNX and AWS IoT Greengrass help deploy machine learning models to edge devices. Steps include component creation, recipe building and core device configuration.

Many applications need to deploy and execute machine learning inference tasks on devices as close as possible to end users, instead of remotely executing these tasks in the cloud. This requirement introduces the use case of running ML models on edge devices, such as smart cameras, robots, sensors, and industrial and mobile devices.

Amazon SageMaker has been a key tool to simplify the development, training, deployment and execution of ML tasks in the cloud. One of its features, SageMaker Edge Manager, helps manage and deploy ML functionality on edge devices, but AWS announced it is decommissioning the feature on April 26, 2024. AWS recommended enterprises use the Open Neural Network Exchange (ONNX) format in combination with AWS IoT Greengrass V2 as an Edge Manager replacement.

IoT Greengrass is an AWS IoT feature designed to manage fleets of IoT devices and the software deployed to them. This design makes it a great option for deploying ML functionality to remote IoT and edge devices.

ONNX is an open source tool that enables teams to execute ML inference tasks on a range of edge devices. It supports multiple hardware types, OSes and frameworks. ONNX models also integrate with many existing Amazon SageMaker features. As a result, ONNX and AWS IoT Greengrass are a good match for optimizing the ML deployment process on edge devices, including large-scale deployments.

How to use ONNX and AWS IoT Greengrass

Enterprises can follow three steps to use ONNX and AWS IoT Greengrass.

Step 1. Train an existing ML model and make it available in ONNX format

The first step involves the usual tasks related to building and training ML models, such as classification, regression and forecasting, depending on the required use case.

SageMaker delivers multiple tools that help with this process, such as JumpStart, Studio, Jupyter Notebook, Autopilot and explicit triggers of SageMaker training jobs. Enterprises can also consider SageMaker Neo as an option because it creates a performance-optimized ONNX ML executable based on the type of target edge device. Given the compute capacity constraints edge devices often present, SageMaker Neo can deliver UX value.

Teams should place generated output ONNX files in an S3 bucket, where IoT Greengrass can access them in subsequent steps.

Step 2. Create AWS IoT Greengrass components using the generated files in ONNX format

Once the model in ONNX format is ready, create an IoT Greengrass component. This is the software package that deploys to edge devices.

First, create an IoT Greengrass component recipe file. This file contains detailed component configurations, such as ONNX runtime, platform OS, scripts to execute at runtime and the S3 location where the ONNX artifacts are uploaded, among other parameters.

Once the recipe file is ready, teams can launch a component using the AWS SDK, CLI or the AWS IoT console.

As part of the process, provide specifications for the component recipe.

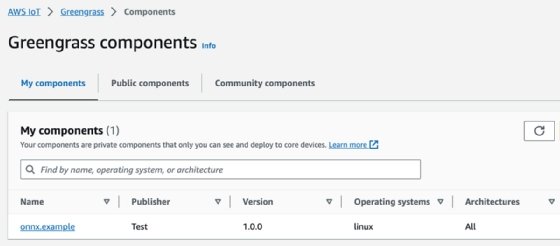

Once Greengrass creates the component, it is available in the AWS IoT Greengrass console.

Step 3. Deploy IoT Greengrass components to target edge devices

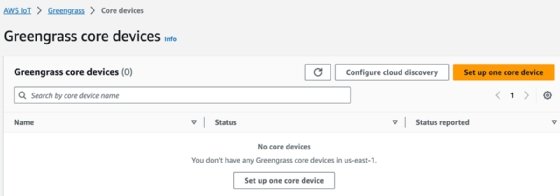

Before proceeding with a deployment, teams must configure the Greengrass core devices. Do this from the Greengrass core devices screen.

For the target device to be visible to Greengrass, it must have the AWS IoT Greengrass Core software installed and run the Greengrass client. Upon launch, the client software contains parameters such as the core device name. IoT Greengrass receives these published parameters, and the edge devices are visible and ready for deployment.

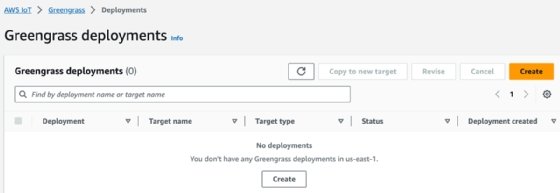

Once core devices are visible to Greengrass, the configured component is ready to be deployed to target devices. From the Greengrass deployments screen, create a new deployment by clicking the Create button.

Next, specify a target device or group for this deployment.

Once the deployment is complete, ML functionality executes on remote devices and completes any implemented inference tasks locally. It also publishes data to AWS IoT Core as needed.

Once this data arrives to AWS IoT, it can integrate with multiple AWS services, such as Lambda, CloudWatch Events, CloudWatch Logs, S3, Kinesis, DynamoDB, Simple Notification Service and Simple Queue Service. This integration enables application monitoring, as well as the additional implementation of complex and scalable functionality in the cloud, based on the data provided by edge devices.

When using Amazon SageMaker, application owners can use a range of features for the entire ML lifecycle. These features, in combination with AWS IoT Greengrass, provide a solid approach to manage devices and ML software deployments for IoT and edge strategies. The combination of these two services simplifies the lifecycle of ML-based applications that require executing logic at the edge at any scale.

Ernesto Marquez is owner and project director at Concurrency Labs, where he helps startups launch and grow their applications on AWS. He particularly enjoys building serverless architectures, automating everything and helping customers cut their AWS costs.