Choose the right workloads for serverless platforms in the cloud

Serverless might be the next big thing in cloud, but that doesn't mean it's a fit for all enterprise workloads. See if your requirements align with these common use cases.

Serverless technology is the ultimate form of compute on demand, but it's still new territory for most enterprises, which can prompt questions about when, exactly, to use it.

With serverless computing in the cloud, the provider -- such as AWS, Google, Microsoft or IBM -- manages the underlying infrastructure and dynamically allocates resources based on need. Customers pay for those resources as they use them and don't pay when those resources sit idle.

One of the standard use cases for serverless platforms -- most commonly delivered as functions as a service (FaaS) -- is DevOps, said Greg Schulz, analyst at StorageIO. But while software development and testing processes lend themselves well to serverless platforms, there are many other potential applications for the technology.

"Serverless has a life beyond DevOps, and that's where the real opportunities are," he said.

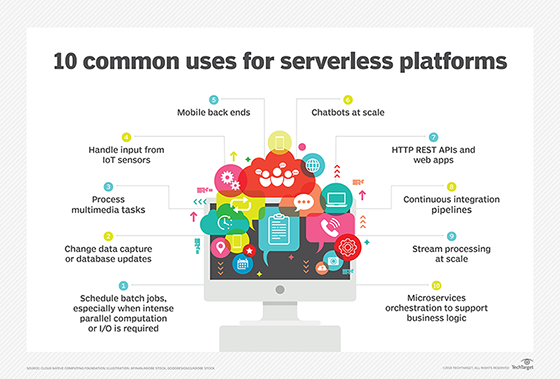

Common uses for serverless platforms

In general, serverless is a good fit for modular or simple applications that don't require a whole virtual or physical machine, but just a place to run code. For example, the customer service bots that greet users upon opening a website are often serverless apps; a user's visit to the site triggers the bot to offer its help.

Another common serverless use case is back-end processing for AWS, Azure and other cloud platforms, said Torsten Volk, analyst at Enterprise Management Associates. For example, an image upload to an Amazon S3 storage bucket could trigger a Lambda serverless function that uses the Amazon Rekognition service to extract metadata about what the image shows. Then, another function could execute to write this metadata into a database.
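As a rough illustration of the image example Volk describes, the first function might look something like the sketch below. It assumes the standard S3 event notification payload and the `detect_labels` call in boto3's Rekognition client; the function names (`extract_s3_objects`, `label_image`, `handler`) and the returned dictionary shape are hypothetical, chosen only for this example.

```python
import urllib.parse


def extract_s3_objects(event):
    """Yield (bucket, key) pairs from an S3 event notification payload."""
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 events (spaces become "+").
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        yield bucket, key


def label_image(rekognition, bucket, key, min_confidence=80.0):
    """Ask Rekognition what an image stored in S3 shows; return label names."""
    response = rekognition.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MaxLabels=10,
        MinConfidence=min_confidence,
    )
    return [label["Name"] for label in response["Labels"]]


def handler(event, context, rekognition=None):
    """Hypothetical Lambda entry point, triggered by an S3 upload."""
    if rekognition is None:
        # Imported lazily so the module can be exercised without AWS access.
        import boto3

        rekognition = boto3.client("rekognition")
    return [
        {"bucket": bucket, "key": key, "labels": label_image(rekognition, bucket, key)}
        for bucket, key in extract_s3_objects(event)
    ]
```

The second function in the example, the one that writes the metadata to a database, would take this output as its input. How the two are chained together is a design choice that this sketch leaves open.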

A further subset of this use case, according to IDC analyst Deepak Mohan, is batch processing alongside traditionally architected applications. This could enable health checks, telemetry collection or alerts.

"We see a lot of customers deploying such batch operations on serverless, alongside a VM-based workload," Mohan said.
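A health check of the kind Mohan mentions could be sketched as a small function that runs on a schedule next to the VM-based application. The version below uses only the Python standard library. The event shape (`{"urls": [...]}`), the function names and the result format are assumptions made for illustration, and the scheduling trigger itself is not shown.

```python
import urllib.request


def check_endpoint(url, timeout=5.0, opener=urllib.request.urlopen):
    """Return True if the URL answers with a 2xx or 3xx status."""
    try:
        with opener(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except OSError:
        # URLError, HTTPError and socket timeouts are all OSError subclasses.
        return False


def run_health_checks(urls, opener=urllib.request.urlopen):
    """Check each URL once and group the results."""
    result = {"healthy": [], "unhealthy": []}
    for url in urls:
        bucket = "healthy" if check_endpoint(url, opener=opener) else "unhealthy"
        result[bucket].append(url)
    return result


def handler(event, context):
    """Hypothetical entry point for a scheduled invocation."""
    return run_health_checks(event.get("urls", []))
```

Because the function holds no state between runs, it only consumes resources for the few seconds each check takes, which is the pattern that suits pay-per-use billing.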

Services or applications that require high scalability, such as data analytics, document indexing or training AI or machine learning models, are also a good match for serverless computing, Volk said.

Lastly, enterprises that want to get their feet wet with serverless technology could do so with microservices -- in particular, microservices that are mostly stateless and remain small, or modular, in nature.

"Before creating new microservices, instead of defaulting to containers, development teams should first evaluate the use of FaaS, as these functions are easier to manage and share compared to directly deploying to containers," Volk said.

Serverless platforms greatly simplify the effort and time it takes to launch a new service, including microservices-based web applications, Mohan agreed.

What's not right for serverless?

Of course, just because you can move a workload to a serverless environment doesn't mean you always should, Schulz said. There are several use cases and applications that wouldn't be a good fit. For instance, large, long-running workloads, such as databases, wouldn't be good candidates for serverless, mostly because of cost. A dedicated database server on its own VM would be a better bet.
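The cost argument can be made concrete with a back-of-the-envelope comparison. The sketch below assumes a common FaaS billing shape, a charge per request plus a charge per gigabyte-second of execution, set against a flat hourly VM rate. Every rate passed in is a placeholder that the reader must replace with a provider's published pricing, and the function names are hypothetical.

```python
HOURS_PER_MONTH = 730  # rough monthly average; an assumption for illustration


def faas_monthly_cost(invocations, avg_seconds, memory_gb,
                      price_per_gb_second, price_per_request):
    """Estimate a pay-per-use bill: compute time plus a per-request charge."""
    compute = invocations * avg_seconds * memory_gb * price_per_gb_second
    requests = invocations * price_per_request
    return compute + requests


def vm_monthly_cost(hourly_rate, hours=HOURS_PER_MONTH):
    """Estimate the bill for a VM that runs for the given number of hours."""
    return hourly_rate * hours


def cheaper_option(faas_cost, vm_cost):
    """Return "faas" if the function bill is lower, otherwise "vm"."""
    return "faas" if faas_cost < vm_cost else "vm"
```

For a workload that is busy around the clock, such as a database, `invocations * avg_seconds` approaches the number of seconds in the month, so the metered bill grows with every hour of use while the VM's flat rate stays the same. For a workload that sits idle most of the time, the comparison tends to tip the other way.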

Another use case unlikely to fit the serverless model is video rendering.

"This would probably be more effective in a larger, virtual machine setup where it has access to more resources," Schulz said.

As with any emerging technology, a lack of skills might be an issue as well. In addition to prior exposure to containers, helpful skills for serverless computing include familiarity with shell scripting, command-line work and scripting in general.

"[Serverless teams] should typically be teams with sufficient skill sets and capabilities, unconstrained by traditional approaches and inertia," Mohan said.