
5 Azure Functions logging best practices

Implement these Azure Functions logging best practices to improve logging strategies, optimize application performance and make applications more robust and reliable.

As with any software application, monitoring and diagnosing issues in Azure Functions can be complex. That's why logging is essential: it provides a reliable mechanism for maintaining application health and responsiveness.

Logging is a crucial part of any application's lifecycle, especially in the serverless world. Effective logging speeds up troubleshooting and provides deep insight into how applications behave. It also helps developers track the path and outcomes of function executions, identify errors and understand the performance characteristics of functions.

As you explore Azure Functions logging, take a deeper dive into the following five key areas:

- Structured logging. This strategy maintains a consistent logging format, which improves log readability and analysis.

- Log levels. These help developers categorize events by severity and control how much detail gets logged.

- Integration with Microsoft's Application Insights. Integrating with this monitoring tool provides advanced analytics and telemetry features.

- Centralized logging. This method streamlines log management and makes it easier to access log data from multiple sources.

- Managing sensitive information in logs. This strategy ensures that logging practices maintain data privacy and meet compliance standards.

Implement structured logging

Use structured logging to make Azure Functions logs easier to work with. This method formats and stores log events in an organized way so they can be easily searched and analyzed. Each log message is a structured data object, usually in JSON format, rather than a plain text string. This format makes log data more convenient to query and analyze, leading to more efficient and effective debugging.

Structured logging makes it easier to correlate events, identify patterns and detect anomalies. Developers can quickly search vast amounts of log data, filter logs by specific properties and analyze trends over time.

To implement structured logging in Azure Functions, use the ILogger interface. ILogger supports message templates with named placeholders, a form of semantic logging that adds structured data to log messages. For example, consider a traditional logging statement like the following:

```csharp
log.LogInformation("Function processed a request");
```

With structured logging, you could write the following instead:

```csharp
log.LogInformation("Function processed a request at {RequestTime} with {RequestData}", DateTime.UtcNow, requestData);
```

In this example, RequestTime and RequestData become named properties that can be easily queried and analyzed, rather than text embedded in a string. Note that ILogger binds placeholders to arguments by position, not by name, so the order of the arguments must match the order of the placeholders. Structured logging is powerful not only because it's easy to use but because it turns log data into a searchable source of information that can guide application development and optimization efforts.
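The sketch below shows what a structured call actually hands to a logging provider. It assumes a reference to the Microsoft.Extensions.Logging.Abstractions package, which defines the ILogger interface that Azure Functions uses; the PropertyCapturingLogger class, the RequestId placeholder and the sample values are hypothetical, and the in-memory logger simply stands in for a real sink such as Application Insights.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.Logging;

public static class StructuredLoggingDemo
{
    // Logs one structured message and returns the rendered text plus the
    // named properties a structured sink would receive.
    public static (string Message, Dictionary<string, object?> Properties) LogSample()
    {
        var logger = new PropertyCapturingLogger();
        var requestTime = new DateTime(2024, 1, 1, 12, 0, 0, DateTimeKind.Utc);

        // Placeholders are bound to the arguments by position, not by name.
        logger.LogInformation(
            "Function processed request {RequestId} at {RequestTime}",
            "abc-123", requestTime);

        return logger.Entries[0];
    }

    public static void Main()
    {
        var (message, properties) = LogSample();
        Console.WriteLine(message);
        foreach (var (key, value) in properties)
            Console.WriteLine($"  {key} = {value}");
    }
}

// Hypothetical in-memory logger used only to inspect structured output.
public sealed class PropertyCapturingLogger : ILogger
{
    public List<(string Message, Dictionary<string, object?> Properties)> Entries { get; } = new();

    public IDisposable? BeginScope<TState>(TState state) where TState : notnull => null;

    public bool IsEnabled(LogLevel logLevel) => true;

    public void Log<TState>(LogLevel logLevel, EventId eventId, TState state,
        Exception? exception, Func<TState, Exception?, string> formatter)
    {
        var properties = new Dictionary<string, object?>();
        // Message-template state enumerates as key/value pairs: one per
        // placeholder plus an "{OriginalFormat}" entry with the raw template.
        if (state is IEnumerable<KeyValuePair<string, object?>> pairs)
        {
            foreach (var pair in pairs)
                properties[pair.Key] = pair.Value;
        }
        Entries.Add((formatter(state, exception), properties));
    }
}
```

Running it prints the rendered message followed by RequestId, RequestTime and an {OriginalFormat} entry holding the raw template. A structured sink can index those named values separately, which is what makes filtering by a specific property possible.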

Use log levels appropriately

Log levels categorize logs by importance or severity, which makes it easier to analyze specific data and decide how much to log and retain. The ILogger interface supports the following six log levels, listed in order of increasing severity, plus None, which disables logging for a category:

- Trace. The most detailed level, which mainly serves debugging purposes.

- Debug. For internal system events that might not be observable from the outside.

- Information. Tracks the general flow of the application's execution process.

- Warning. Indicates the potential for future issues.

- Error. Signifies that the current operation has failed, but the function app as a whole can keep running.

- Critical. For severe errors that might prevent the process from continuing to execute.

By using these log levels appropriately, developers can improve the effectiveness of their logging strategy. Information, Warning and Error logs are the most common in a healthy production system. Because Debug and Trace logs are so verbose, they are typically enabled only during development or troubleshooting sessions.

The chosen log level affects both system performance and diagnostics. Logging at a high volume, especially at the Trace or Debug level, can generate large amounts of data, which can affect system performance and storage. Aim to log enough data for diagnostics without overloading the system or filling the log with irrelevant information. In Azure Functions, you can set the minimum level for each log category in the logging.logLevel section of host.json.
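As a sketch of how this trade-off looks in code, the example below filters entries with a minimum level and uses ILogger.IsEnabled to skip building an expensive Debug payload when Debug logging is off. It creates a LoggerFactory with the console provider (from the Microsoft.Extensions.Logging and Microsoft.Extensions.Logging.Console packages) as a stand-in for the Functions host, which would normally apply levels from host.json; the OrderProcessor category, ProcessOrder and BuildExpensiveDiagnostics are hypothetical.

```csharp
using System;
using Microsoft.Extensions.Logging;

public static class LogLevelDemo
{
    // Counts how often the costly diagnostic payload was actually built.
    public static int PayloadBuilds;

    // Hypothetical stand-in for costly work such as serializing a large object.
    private static string BuildExpensiveDiagnostics()
    {
        PayloadBuilds++;
        return new string('x', 80);
    }

    // Creates a factory that drops every entry below minimumLevel.
    // (In a deployed function app, host.json usually sets these levels instead.)
    public static ILoggerFactory CreateFactory(LogLevel minimumLevel) =>
        LoggerFactory.Create(builder => builder
            .SetMinimumLevel(minimumLevel)
            .AddConsole());

    public static void ProcessOrder(ILogger logger)
    {
        logger.LogInformation("Processing order {OrderId}", 42);

        // Only build the payload when Debug entries would actually be written.
        if (logger.IsEnabled(LogLevel.Debug))
        {
            logger.LogDebug("Order diagnostics: {Diagnostics}", BuildExpensiveDiagnostics());
        }
    }

    public static void Main()
    {
        // Production-style setting: Information and above.
        using (ILoggerFactory factory = CreateFactory(LogLevel.Information))
        {
            ProcessOrder(factory.CreateLogger("OrderProcessor"));
        }

        // Troubleshooting setting: Debug and above.
        using (ILoggerFactory factory = CreateFactory(LogLevel.Debug))
        {
            ProcessOrder(factory.CreateLogger("OrderProcessor"));
        }

        Console.WriteLine($"Payload built {PayloadBuilds} time(s)");
    }
}
```

With the minimum level at Information, the Debug branch never runs, so the payload is built only during the second, Debug-level run. The IsEnabled check matters most when the argument itself is costly to produce; for cheap arguments, the logger's own filtering is usually enough.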

To make it easier to filter logs and find the information you need, use descriptive tags and messages instead of relying solely on log levels. This approach makes it quicker to identify what kind of event a log message describes.
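One way to attach a descriptive tag with ILogger is an EventId, a numeric ID with an optional name that travels with each entry. The following sketch assumes the Microsoft.Extensions.Logging.Abstractions package; the PaymentEvents catalog, its IDs and names, and the EventRecordingLogger test logger are hypothetical conventions rather than anything Azure Functions defines.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.Logging;

// Hypothetical catalog of event IDs; keeping them in one place makes them
// easy to search for and to reuse across functions.
public static class PaymentEvents
{
    public static readonly EventId PaymentReceived = new(1000, "PaymentReceived");
    public static readonly EventId PaymentRetry = new(1001, "PaymentRetry");
    public static readonly EventId PaymentFailed = new(1002, "PaymentFailed");
}

// Minimal logger that records the level, event ID and text of each entry.
public sealed class EventRecordingLogger : ILogger
{
    public List<(LogLevel Level, EventId Event, string Message)> Entries { get; } = new();

    public IDisposable? BeginScope<TState>(TState state) where TState : notnull => null;

    public bool IsEnabled(LogLevel logLevel) => true;

    public void Log<TState>(LogLevel logLevel, EventId eventId, TState state,
        Exception? exception, Func<TState, Exception?, string> formatter) =>
        Entries.Add((logLevel, eventId, formatter(state, exception)));
}

public static class EventIdDemo
{
    public static EventRecordingLogger Run()
    {
        var logger = new EventRecordingLogger();
        // The event ID and a descriptive message identify the kind of event,
        // independent of its severity level.
        logger.LogInformation(PaymentEvents.PaymentReceived, "Payment {PaymentId} received", "p-1");
        logger.LogWarning(PaymentEvents.PaymentRetry, "Retrying payment {PaymentId}, attempt {Attempt}", "p-1", 2);
        return logger;
    }

    public static void Main()
    {
        foreach (var (level, evt, message) in Run().Entries)
            Console.WriteLine($"[{level}] {evt.Id}:{evt.Name} {message}");
    }
}
```

Whether the event ID appears as its own field depends on the logging provider, so it's worth pairing it with a descriptive message and named properties that remain searchable even where the ID isn't surfaced.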

Integrate with Application Insights

Application Insights is an easy-to-use monitoring tool that provides a comprehensive view of a live application's performance and usage. Its analytics features can identify performance issues and help developers understand user behavior, which supports effective diagnosis.

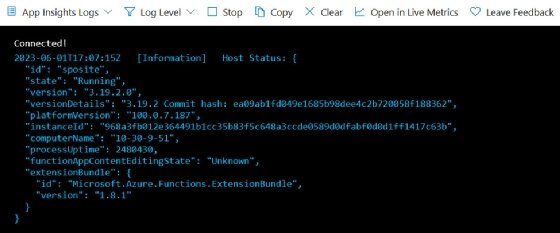

To integrate Azure Functions logging with Application Insights, add the Application Insights connection string to your function app settings as APPLICATIONINSIGHTS_CONNECTION_STRING. Azure Functions then automatically sends log data to Application Insights, subject to the configured log levels and sampling, where you can query and analyze it using the Kusto Query Language (KQL).

With Application Insights, you can gain deeper insights from your function logs. It captures log data and provides additional telemetry like request rates, response times, failure rates, dependencies and exceptions. You can also set up alerts, visualize log data in charts and correlate logs with other telemetry for comprehensive diagnostics.

Remember that the true power of logging lies not merely in recording events but in using those records to gain valuable insights.

Implement centralized logging

Centralized logging consolidates logs from various applications, functions and services into a single location. This practice removes the need to access logs in different places, which streamlines log analysis. Other benefits include the following:

- Simplifies the log management process.

- Makes correlating events across multiple functions or services more straightforward.

- Enables users to apply analytics across all log data.

Azure Monitor provides Log Analytics workspaces, where you can store and analyze log data from many sources. You can also use external services such as the Elastic Stack for more advanced analysis and visualization capabilities.
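Correlating events across functions generally relies on every entry carrying a shared identifier. With ILogger, one option is BeginScope, which attaches its key/value pairs to each entry written inside the scope when the logging provider supports scopes. The sketch below assumes the Microsoft.Extensions.Logging.Abstractions package and uses a hypothetical in-memory ScopeAwareLogger built on LoggerExternalScopeProvider to show the effect; the CorrelationId property name is an assumption, not a required convention.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.Logging;

// Hypothetical logger that merges active scope values into each entry,
// roughly what scope-aware providers do before shipping logs to a central store.
public sealed class ScopeAwareLogger : ILogger
{
    private readonly LoggerExternalScopeProvider _scopes = new();
    public List<Dictionary<string, object?>> Entries { get; } = new();

    public IDisposable? BeginScope<TState>(TState state) where TState : notnull => _scopes.Push(state);

    public bool IsEnabled(LogLevel logLevel) => true;

    public void Log<TState>(LogLevel logLevel, EventId eventId, TState state,
        Exception? exception, Func<TState, Exception?, string> formatter)
    {
        var properties = new Dictionary<string, object?> { ["Message"] = formatter(state, exception) };
        // Copy key/value pairs from every scope that is still active.
        _scopes.ForEachScope((scope, props) =>
        {
            if (scope is IEnumerable<KeyValuePair<string, object?>> pairs)
                foreach (var pair in pairs) props[pair.Key] = pair.Value;
        }, properties);
        Entries.Add(properties);
    }
}

public static class CorrelationDemo
{
    // In a real app the correlation ID would come from the triggering message
    // or an HTTP header; here it is passed in for illustration.
    public static ScopeAwareLogger Run(string correlationId)
    {
        var logger = new ScopeAwareLogger();
        using (logger.BeginScope(new Dictionary<string, object> { ["CorrelationId"] = correlationId }))
        {
            logger.LogInformation("Order received");
            logger.LogInformation("Order forwarded to shipping");
        }
        logger.LogInformation("Outside any scope");
        return logger;
    }

    public static void Main()
    {
        foreach (var entry in Run("corr-42").Entries)
            Console.WriteLine(string.Join(", ", entry));
    }
}
```

The first two entries carry CorrelationId and the third does not, because the scope has been disposed by then. Once the same identifier is present in logs from every function and service, a centralized store can group related entries with a single filter.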

Manage sensitive information in logs

Managing sensitive data in logs requires vigilance and sound practices. When logging, avoid inadvertently including sensitive information, such as personally identifiable information (PII), credit card numbers or passwords. If you need to log sensitive data for debugging purposes, consider obfuscation or anonymization techniques, such as masking, hashing or encryption.
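As a minimal sketch of obfuscation and hashing, the following helpers use only the .NET base class library. MaskCardNumber keeps the last four digits, and Pseudonymize replaces a value with a truncated HMAC-SHA256, a keyed hash, so entries for the same user can still be correlated without exposing the value. The class, method names and 8-byte truncation are illustrative choices, and the key is assumed to come from a secret store such as Azure Key Vault.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class LogRedaction
{
    // Replaces all but the last four digits with '*'; non-digits are dropped.
    public static string MaskCardNumber(string cardNumber)
    {
        var digits = new StringBuilder();
        foreach (char c in cardNumber)
            if (char.IsDigit(c)) digits.Append(c);

        if (digits.Length <= 4) return new string('*', digits.Length);
        return new string('*', digits.Length - 4) + digits.ToString(digits.Length - 4, 4);
    }

    // Keyed hash of an identifier such as an email address. The same input and
    // key always give the same token, so entries can still be grouped by user.
    public static string Pseudonymize(string value, byte[] key)
    {
        using var hmac = new HMACSHA256(key);
        byte[] hash = hmac.ComputeHash(Encoding.UTF8.GetBytes(value));
        // The first 8 bytes (16 hex characters) are enough to correlate entries.
        return Convert.ToHexString(hash, 0, 8);
    }

    public static void Main()
    {
        // Hypothetical key; load it from a secret store in real code.
        byte[] key = Encoding.UTF8.GetBytes("replace-with-secret-key");
        Console.WriteLine(MaskCardNumber("4111 1111 1111 1111"));
        Console.WriteLine(Pseudonymize("user@example.com", key));
    }
}
```

A keyed hash is used instead of a plain SHA-256 because common values such as email addresses can be recovered from an unkeyed hash by hashing likely candidates and comparing. In a function, you would log only the helper's output, for example log.LogInformation("Charged card {CardNumber}", LogRedaction.MaskCardNumber(cardNumber)). Passwords shouldn't be logged at all, even in hashed form.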

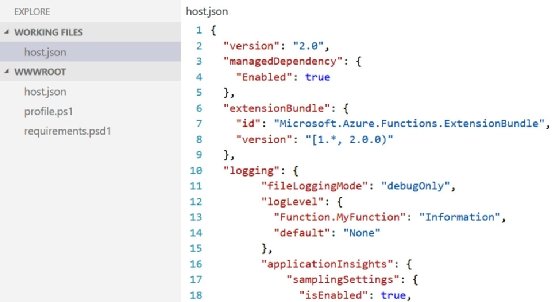

Azure Functions provides some features to help manage sensitive information in logs. For instance, users can configure host.json to prevent data logging in HTTP request and response bodies.

Always remember that protecting sensitive data in logs isn't just about compliance. It's about preserving user trust in applications.