How to build a cloud capacity management plan

Running workloads in the cloud gives an organization access to virtually unlimited resources. That's a good thing, but only if the IT team adopts good capacity planning practices.

One of cloud computing's biggest benefits is that it supports highly flexible and dynamic resource use. Cloud users consume as many or as few resources as they need and have the freedom to adjust consumption as their needs fluctuate.

But that doesn't mean cloud platforms automatically optimize resource allocation. For most types of cloud services, it is up to the user to determine how many resources cloud workloads will require at any given moment. (Exceptions include services like serverless computing, which scale automatically and don't require users to provision servers ahead of time.)

Cloud capacity management is critical to an effective IT strategy. It gives developers, IT teams and DevOps engineers the insights they need to ensure that their workloads have the required resources. At the same time, it lessens the risk that workloads will become overprovisioned in ways that waste money and add unnecessary management overhead.

Why cloud capacity planning and management are crucial

Cloud capacity planning is the practice of anticipating how many resources a cloud workload will require before deploying it. Cloud capacity management, in contrast, means tracking resource consumption and adjusting it as necessary once a workload is running.

Both of these practices are important.

Consider a cloud server that hosts several web applications. Proper capacity planning ensures that the server runs on an instance type with enough CPU, memory and storage to support the applications, but not so much that a significant portion goes unused.

Likewise, capacity planning can help determine how many servers to include in a cluster that shares responsibility for hosting an application. In this case, the IT team must include enough servers to handle the load placed on the application, plus enough spare capacity to keep the application available if some servers crash.
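A minimal sketch of that cluster sizing, assuming load can be expressed as peak requests per second and that each server's sustainable throughput has already been measured. The function name, its parameters and the numbers in the example are hypothetical; `spare_servers` is the number of server failures the cluster should tolerate (often called N+1 or N+k redundancy).

```python
import math

def cluster_size(peak_rps: float, rps_per_server: float, spare_servers: int = 1) -> int:
    """Servers needed to carry peak load, plus spares for failure tolerance (N+k)."""
    if peak_rps < 0 or rps_per_server <= 0 or spare_servers < 0:
        raise ValueError("invalid sizing inputs")
    # Servers required to carry the peak with every node healthy.
    active = math.ceil(peak_rps / rps_per_server)
    # Extra servers so the peak is still covered if `spare_servers` nodes fail.
    return active + spare_servers

print(cluster_size(peak_rps=4500, rps_per_server=1000, spare_servers=1))  # 6
```

With one spare, losing any single server still leaves five servers, enough to carry the 4,500-request peak.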

If the estimates made during capacity planning turn out to be inaccurate, cloud capacity management helps correct the issue. For instance, IT could migrate a cloud server instance that lacks enough CPU and memory to support its applications to a different instance type to improve performance. Or, if the server is overprovisioned, IT can choose a new instance type that costs less and provides fewer resources.
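One way to decide when such a correction is due is to compare observed utilization against thresholds. The sketch below assumes you have CPU utilization samples (in percent) for an instance; the function name and the 80% and 30% thresholds are illustrative assumptions, not guidance from any cloud provider.

```python
import statistics

def classify_provisioning(cpu_samples: list[float],
                          high: float = 80.0, low: float = 30.0) -> str:
    """Label an instance from CPU utilization samples (percent, 0-100).

    The 80% and 30% defaults are illustrative thresholds, not vendor guidance.
    """
    if len(cpu_samples) < 2:
        raise ValueError("need at least two samples")
    # 95th percentile: ignores brief spikes but still reflects sustained busy periods.
    p95 = statistics.quantiles(cpu_samples, n=100)[94]
    if p95 > high:
        return "underprovisioned"  # candidate for a larger instance type
    if p95 < low:
        return "overprovisioned"   # candidate for a smaller, cheaper instance type
    return "right-sized"
```

Using the 95th percentile rather than the average keeps a workload that is idle most of the day but saturated at peak from being mislabeled as overprovisioned: ninety samples at 5% and ten at 90% average only 13.5%, yet this function reports the instance as underprovisioned.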

This balancing act is the key to capacity management. An organization wants to avoid underprovisioning workloads in such a way that they can't perform adequately, while also avoiding overprovisioning them by allocating resources they don't need.

The benefits of an effective capacity management plan

Effective capacity planning and management are more than just ways to optimize performance and cost. They help to create value for the organization in a variety of ways:

- Provide insight into long-term IT planning. For example, capacity planning can help determine which workloads to move to the cloud. Workloads with fast-changing capacity requirements are ideal candidates for the cloud, where resource allocations can easily be scaled up and down.

- Determine which infrastructure and application architectures align with your needs. For instance, if you have a virtual server with routinely fluctuating capacity demands, you might find that serverless computing would be a better way to host that workload. Serverless functions enable users to allocate large amounts of resources for short periods in a more cost-effective and easy-to-manage way than is possible with virtual servers.

- Arrange the right people and tools. Knowing how many resources to allocate to workloads is only part of the job; you also need the organizational capacity to make those allocations. That means having staff on hand to perform the necessary provisioning, with the skills to work with the tools you use to manage resource allocation.

- Avoid disruptions to users. Poorly sized workloads can create problems for people who expect an application to be available when they need it. When workload capacity is well managed, you minimize the risk of applications or servers failing.
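The cost argument for moving a spiky workload from a virtual server to serverless functions can be checked with simple arithmetic. This sketch assumes a VM billed for every hour whether busy or idle, and functions billed per GB-second of execution plus a per-request fee, which is the general shape of serverless pricing. All prices are placeholders, not any provider's current rates.

```python
# Placeholder prices for illustration only; substitute your provider's published rates.
VM_HOURLY_PRICE = 0.10             # dollars per hour for an always-on VM
PRICE_PER_GB_SECOND = 0.00002      # dollars per GB-second of function execution
PRICE_PER_MILLION_REQUESTS = 0.25  # dollars per million function invocations
HOURS_PER_MONTH = 730              # approximate hours in a month

def monthly_vm_cost(hourly_price: float = VM_HOURLY_PRICE) -> float:
    """An always-on VM is billed for every hour, whether it is busy or idle."""
    return hourly_price * HOURS_PER_MONTH

def monthly_serverless_cost(invocations: int, avg_seconds: float, memory_gb: float) -> float:
    """Functions are billed only for execution time (GB-seconds) plus a per-request fee."""
    gb_seconds = invocations * avg_seconds * memory_gb
    compute = gb_seconds * PRICE_PER_GB_SECOND
    requests = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return compute + requests

# A bursty workload: 2 million short invocations per month.
vm = monthly_vm_cost()
fn = monthly_serverless_cost(invocations=2_000_000, avg_seconds=0.2, memory_gb=0.5)
print(f"VM: ${vm:.2f}/month, serverless: ${fn:.2f}/month")  # VM: $73.00/month, serverless: $4.50/month
```

Because the VM cost is fixed while the serverless cost grows with invocations and duration, the comparison can flip for workloads that stay busy around the clock; rerunning it with your own traffic and your provider's published prices shows where that break-even point lies.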

Note, too, that while capacity planning and management have been part of IT workflows for decades, their importance has grown since the emergence of cloud computing. This is because scalability is a crucial factor in an organization's decision to migrate to the cloud. To capitalize fully on that scalability, IT teams must manage resource utilization effectively and continuously. If they can't, they miss one of the chief advantages of cloud architecture: being able to scale workloads up and down on demand, such that they always have sufficient resources but don't cost more than they need to. Companies that can't do this might do better to stick with on-premises architectures.

Steps to plan and manage cloud capacity

The nature of cloud architectures and services varies widely, so there is no single or simple way to approach cloud capacity management. In general, however, an effective cloud management and capacity planning strategy will involve several key steps.

1. Assess baseline capacity requirements

First, determine how many cloud servers, application instances, databases and so on your team requires on average to maintain adequate performance. You'll need to know how much CPU, memory and storage each workload requires. Those are your baseline capacity requirements.

It's important to remember that you shouldn't use that baseline alone to decide how many resources to allocate, especially if demands placed on the workloads often fluctuate. You might need to allocate more resources than the baseline to accommodate periods of increased demand. Still, knowing your baseline provides a starting point for capacity planning.
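As a minimal sketch, assuming your monitoring tool can export per-interval usage samples, the baseline is the average of those samples. The `UsageSample` type and `baseline` function are hypothetical names, and storage could be added as a third field in the same way. The sketch reports the observed peak alongside the average because, for a fluctuating workload, the average alone understates what the workload needs.

```python
from dataclasses import dataclass
from statistics import fmean

@dataclass
class UsageSample:
    vcpus: float      # vCPUs in use during the sampling interval
    memory_gb: float  # memory in use during the sampling interval

def baseline(samples: list[UsageSample]) -> dict[str, float]:
    """Average usage (the baseline) alongside the observed peak, per resource."""
    if not samples:
        raise ValueError("no samples")
    return {
        "vcpus_avg": fmean(s.vcpus for s in samples),
        "vcpus_peak": max(s.vcpus for s in samples),
        "memory_gb_avg": fmean(s.memory_gb for s in samples),
        "memory_gb_peak": max(s.memory_gb for s in samples),
    }
```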

2. Assess scalability needs

Once you know the baseline requirements for each workload that you run in the cloud, examine the scalability they'll require. Do this by evaluating how much variation in workload demand occurs between different times of day, days of the week or seasons of the year. Some of your cloud workloads will have higher scalability requirements than others. For instance, a website with a globally dispersed user base probably won't see as much fluctuation over the course of a day as a website that caters to users in a specific geographic location, which will likely see most demand during that locale's daytime hours. Likewise, a website for a meal-delivery service will probably experience higher load during mealtimes than at other times of day.
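To quantify those daily swings, you can group historical demand by hour of day and compare the busiest hour with the average hour. A minimal sketch, assuming timestamped demand figures (such as request counts) whose timestamps are already in the time zone that matters for your users; the function names are hypothetical.

```python
from collections import defaultdict
from datetime import datetime
from statistics import fmean

def hourly_profile(samples: list[tuple[datetime, float]]) -> dict[int, float]:
    """Average demand for each hour of the day (0-23) across all days sampled."""
    by_hour: dict[int, list[float]] = defaultdict(list)
    for timestamp, demand in samples:
        by_hour[timestamp.hour].append(demand)
    return {hour: fmean(values) for hour, values in sorted(by_hour.items())}

def peak_to_average(profile: dict[int, float]) -> float:
    """Busiest hour divided by the average hour; 1.0 means perfectly flat demand."""
    if not profile:
        raise ValueError("empty profile")
    avg = fmean(profile.values())
    return max(profile.values()) / avg if avg > 0 else 0.0
```

A ratio near 1.0 suggests the flat profile of a globally used site, while a meal-delivery site would likely show a much higher ratio around lunch and dinner. Grouping by `timestamp.weekday()` or `timestamp.month` instead of `timestamp.hour` gives the weekly and seasonal views.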

3. Make initial resource allocations

For workloads that don't already run in the cloud, you'll need to set initial resource allocations before you start them. As a rule of thumb, plan to allocate 20% more resources to each workload than the baseline requirements dictate. This provides a healthy buffer in case demand unexpectedly jumps but doesn't unreasonably overprovision your environment.
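Applied to a single workload, the rule of thumb is a short calculation followed by picking the smallest instance size that covers the result. The sketch below uses a made-up instance catalog; real providers publish their own instance families and sizes, and the names and function signatures here are placeholders.

```python
# Hypothetical instance catalog, smallest first: (name, vCPUs, memory in GiB).
# Real providers publish their own instance families; these sizes are placeholders.
CATALOG = [
    ("small", 2, 4),
    ("medium", 4, 8),
    ("large", 8, 16),
    ("xlarge", 16, 32),
]

def initial_allocation(baseline_vcpus: float, baseline_mem_gib: float,
                       buffer: float = 0.20) -> tuple[float, float]:
    """Baseline requirements plus a headroom buffer (20% by default)."""
    return baseline_vcpus * (1 + buffer), baseline_mem_gib * (1 + buffer)

def pick_instance(vcpus: float, mem_gib: float) -> str:
    """Return the smallest catalog entry that satisfies both requirements."""
    for name, cpu, mem in CATALOG:
        if cpu >= vcpus and mem >= mem_gib:
            return name
    raise ValueError("no single instance is large enough; consider scaling out")

# A baseline of 3 vCPUs and 6 GiB becomes about 3.6 vCPUs and 7.2 GiB with the buffer.
print(pick_instance(*initial_allocation(3, 6)))  # medium
```

The buffer can push a workload across a size boundary: a baseline of exactly 4 vCPUs and 8 GiB fits `medium` on paper but needs `large` once the 20% headroom is added.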

4. Set up autoscaling policies

Most cloud providers allow you to create autoscaling policies. With these policies in place, the cloud platform automatically increases or decreases the resources assigned to your workloads based on thresholds you configure in the policies, such as CPU utilization or request counts. You can apply autoscaling policies to most types of cloud workloads, including virtual machine instances, databases, containers and serverless functions. However, certain niche categories of cloud workloads, such as IoT devices managed through cloud services, typically can't be managed using autoscaling.
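The core decision of a threshold-based policy fits in a few lines. This is a simplified sketch, not any provider's API: it assumes average CPU utilization as the scaling metric and steps one instance at a time within minimum and maximum bounds. Managed autoscalers add features it omits, such as cooldown periods and target-tracking policies. The function name and default thresholds are hypothetical.

```python
def desired_instances(current: int, avg_cpu: float,
                      scale_out_at: float = 70.0, scale_in_at: float = 30.0,
                      min_instances: int = 2, max_instances: int = 10) -> int:
    """Simple step policy: add or remove one instance when CPU crosses a threshold."""
    if avg_cpu > scale_out_at:
        target = current + 1   # demand is high: scale out
    elif avg_cpu < scale_in_at:
        target = current - 1   # demand is low: scale in
    else:
        target = current       # within the comfortable band: no change
    # Never go outside the bounds the policy allows.
    return max(min_instances, min(max_instances, target))
```

The minimum bound matters as much as the maximum: it keeps enough instances running for redundancy even when the policy would otherwise keep scaling in.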

5. Collect and analyze capacity data

Whether or not you configure autoscaling for your workloads, it's important to assess how well the allocations work and adjust accordingly. Consider these metrics and factors:

- How often do your autoscaling policies trigger? If they rarely scale out because your workloads never reach the configured thresholds, the workloads are likely overprovisioned. It might be time to reduce baseline allocations or reconfigure your thresholds.

- How do your actual cloud costs, as reflected in monthly bills, compare to your anticipated costs? Beating cost expectations is one sign that you are managing capacity well, but if cloud expenses are too high, you could probably do a better job at capacity management.

- How often do you experience disruptions or downtime related to capacity or resource allocation?

- How often does your team intervene manually to correct a capacity issue? Frequent interventions suggest you might need more aggressive autoscaling policies, or that the workload should migrate to a different type of architecture, such as serverless.

- Do the baseline workload requirements and the anticipated scalability needs that you identified for each workload remain consistent with its actual performance?
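The first four of those questions can be turned into a repeatable review. A minimal sketch, assuming you collect the listed figures for each review period (for example, monthly); the function name and the 10% cost tolerance are hypothetical choices to adjust to your own expectations.

```python
def review_capacity(scale_out_events: int, actual_cost: float, expected_cost: float,
                    capacity_incidents: int, manual_fixes: int,
                    cost_tolerance: float = 0.10) -> list[str]:
    """Turn one review period's capacity metrics into a list of findings.

    cost_tolerance is a hypothetical threshold: overruns above it are flagged.
    """
    if expected_cost <= 0:
        raise ValueError("expected_cost must be positive")
    findings = []
    # Autoscaling that never scales out suggests the baseline allocation is too generous.
    if scale_out_events == 0:
        findings.append("autoscaling never scaled out: workload may be overprovisioned")
    # Compare the bill with the forecast as a fraction of the forecast.
    overrun = (actual_cost - expected_cost) / expected_cost
    if overrun > cost_tolerance:
        findings.append(f"spend is {overrun:.0%} above forecast")
    if capacity_incidents > 0:
        findings.append(f"{capacity_incidents} capacity-related disruption(s)")
    # Repeated manual fixes point to policies or an architecture that don't fit the workload.
    if manual_fixes > 0:
        findings.append(f"{manual_fixes} manual fix(es): review autoscaling or architecture")
    return findings
```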

Key components of a cloud capacity management plan

Like most complex technical operations, an effective cloud capacity management plan requires the right people, processes and technology. Here's what each category entails:

- People. Capacity planning and management mainly involve technical skills, but they also require a measure of familiarity with finance and cost optimization, since avoiding financial waste is a key goal of capacity management. For that reason, businesses should formulate cloud capacity management plans that designate staff with the requisite skills to oversee the process.

- Process. Cloud capacity management requires the ability to predict cloud workload resource needs, monitor actual resource consumption and correct resource configurations as necessary.

- Technology. A number of tools, such as cost calculators and monitoring software, can assist with cloud capacity planning and management. These are detailed in the following section.

Cloud capacity management tools

Cloud capacity management is a complex, multifaceted process, and there is no single tool that will meet all your capacity planning needs. But a variety of tool types can assist in the process, including the following:

- Cost calculators. To manage the financial aspects of capacity planning, the cost-prediction tools that cloud vendors offer are useful. They can help evaluate the costs associated with different resource allocations or workload types.

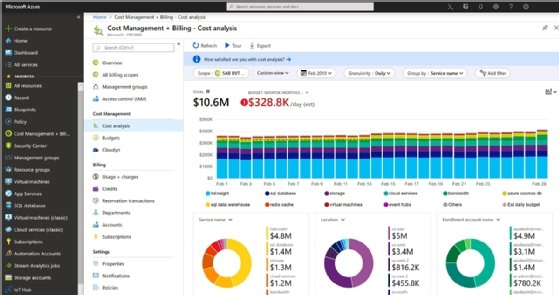

- Monitoring and log management. Data collected by monitoring and logging tools, such as Amazon CloudWatch, Azure Monitor and third-party monitoring platforms, can help you keep track of performance trends and alert you to changing capacity needs.

- Infrastructure as code. Infrastructure-as-code tools automate infrastructure setup and resource allocation, so it is much easier and faster to reconfigure allocations in response to capacity changes.

- Rightsizing and cost management. Cloud vendors offer tools that analyze resource usage to help predict capacity requirements and recommend better-fitting allocations. AWS offers Cost Explorer and AWS Compute Optimizer, and Microsoft Azure offers Microsoft Cost Management. Some third-party application performance management tools also offer rightsizing features.

- FinOps tools. A growing array of FinOps tools from vendors that specialize in cloud cost management can help to identify capacity management problems like overprovisioned cloud workloads.

- Cloud recommendation engines. The major public clouds also offer services, such as Azure Advisor and Google Cloud Recommender, that automatically assess workload configurations and recommend changes to help save money or improve performance. Their recommendations are usually more basic than those from dedicated FinOps tools, but they're easy to use because they are built into the public clouds.
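Infrastructure-as-code tools make reallocation faster because you declare the desired allocations in code and the tool works out which changes are needed to reach them. The sketch below imitates that planning step in a simplified, hypothetical form; declarative tools such as Terraform show a similar preview before applying changes, but this is not their implementation, and the resource names and sizes are placeholders.

```python
def plan(desired: dict[str, str], actual: dict[str, str]) -> list[str]:
    """List the changes needed to move from the running state to the declared state.

    Both dicts map a hypothetical resource name to an instance size.
    """
    changes = []
    for name, size in sorted(desired.items()):
        if name not in actual:
            changes.append(f"create {name} ({size})")
        elif actual[name] != size:
            changes.append(f"resize {name}: {actual[name]} -> {size}")
    # Anything running that is no longer declared gets removed.
    for name in sorted(actual.keys() - desired.keys()):
        changes.append(f"destroy {name}")
    return changes

desired = {"web": "medium", "db": "large"}   # declared in code
actual = {"web": "small", "batch": "small"}  # currently running
for change in plan(desired, actual):
    print(change)
```

Changing one value in the desired state, such as a server's size, produces a single resize step, which is why acting on capacity data is quicker with infrastructure as code than with manual reconfiguration.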

Capacity management is important in any IT environment, but it's especially critical if you want to get the most out of cloud environments. While there is no single, one-size-fits-all approach to cloud capacity planning, a mix of techniques and strategies will help ensure you assess capacity needs accurately, even for fast-changing workloads.

Future-proofing your cloud capacity management plan

The strategies above will help you manage cloud capacity on an everyday basis. You'll also need to plan for long-term capacity needs so that your IT infrastructure evolves appropriately over time to meet changing workload requirements.
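A simple starting point for long-term planning is to extend the trend in historical usage. This sketch fits a least-squares straight line to monthly usage figures and extrapolates it forward. The linear-growth assumption is a deliberate simplification, since real workloads may grow seasonally or at a compounding rate, and the function name is hypothetical.

```python
def project_linear(history: list[float], months_ahead: int) -> float:
    """Fit a least-squares line to monthly usage and extrapolate it forward."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two months of history")
    xs = range(n)                 # month index: 0, 1, ..., n-1
    x_mean = (n - 1) / 2
    y_mean = sum(history) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, history))
    slope = sxy / sxx             # growth per month
    intercept = y_mean - slope * x_mean
    # Evaluate the fitted line `months_ahead` months after the last observation.
    return intercept + slope * (n - 1 + months_ahead)
```

For example, usage of 100, 110, 120 and 130 units over four months projects to 250 units a year after the last month, a figure you could then weigh against the architectural options discussed below.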

Traditionally, long-term capacity management centered on purchasing and deploying new servers, storage media and other on-premises data center infrastructure. That largely doesn't apply in the cloud, where the service provider has already made those investments on a vast scale and offers far more infrastructure than most customers will ever need.

Instead, long-term capacity management for the cloud should focus on how to evolve your cloud architecture over time in response to changing capacity requirements. If today you use just one cloud, for example, assess your long-term workload expectations and consider whether a multi-cloud strategy might make sense for meeting future capacity requirements. Or you might decide that refactoring applications to run as microservices inside containers will improve long-term capacity efficiency, because each service can then be scaled independently and often consumes resources more efficiently than a monolithic app.

Chris Tozzi is a freelance writer, research adviser, and professor of IT and society who has previously worked as a journalist and Linux systems administrator.