5 machine learning skills you need in the cloud

Put your IT team in the best position to succeed with AI. Develop these machine learning skills and see how they translate to the major cloud platforms.

Machine learning and AI continue to reach further into IT services and complement applications developed by software engineers. IT teams need to sharpen their machine learning skills if they want to keep up.

Cloud computing services support an array of functionality needed to build and deploy AI and machine learning applications. In many ways, AI systems are managed much like other software that IT pros are familiar with in the cloud. But the ability to deploy an application does not necessarily mean someone can successfully deploy a machine learning model.

While the commonalities may partially smooth the transition, there are significant differences. Members of your IT teams need specific machine learning and AI knowledge, in addition to software engineering skills. Beyond the technological expertise, they also need to understand the cloud tools currently available to support their team's initiatives.

Explore the five machine learning skills IT pros need to successfully use AI in the cloud and get to know the products Amazon, Microsoft and Google offer to support them. There is some overlap in the skill sets, but don't expect one individual to do it all. Put your organization in the best position to utilize cloud-based machine learning by developing a team of people with these skills.

1. Data engineering

IT pros need to understand data engineering if they want to pursue any type of AI strategy in the cloud. Data engineering comprises a broad set of skills, including data wrangling and workflow development, as well as some knowledge of software architecture.

Each of these areas of IT expertise breaks down into specific tasks that IT pros should be able to accomplish. For example, data wrangling typically involves data source identification, data extraction, data quality assessments, data integration and pipeline development to carry out these operations in a production environment.
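To make the data quality assessment step concrete, here is a minimal sketch in Python. It uses only the standard library, and the sample data, field names and the `assess_quality` helper are hypothetical, invented for illustration. A production pipeline would read from a real source and likely apply many more checks.

```python
import csv
import io

# Hypothetical sample extract; in practice this would come from a
# database, an API or an object storage system.
RAW_CSV = """customer_id,age,country
1,34,US
2,,DE
3,29,
3,29,
4,41,FR
"""

def assess_quality(rows, fields):
    """Count empty values per field and exact duplicate rows."""
    missing = {field: 0 for field in fields}
    seen = set()
    duplicates = 0
    for row in rows:
        for field in fields:
            if not (row.get(field) or "").strip():
                missing[field] += 1
        key = tuple(row.get(field, "") for field in fields)
        if key in seen:
            duplicates += 1
        seen.add(key)
    return {"rows": len(rows), "missing": missing, "duplicates": duplicates}

rows = list(csv.DictReader(io.StringIO(RAW_CSV)))
report = assess_quality(rows, ["customer_id", "age", "country"])
print(report)
```

A report like this can gate the rest of the pipeline, for example by stopping a load when the share of missing or duplicate rows is higher than the team is willing to accept.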

Data engineers should be comfortable working with relational databases, NoSQL databases and object storage systems. Python is a popular programming language that can be used with batch and stream processing platforms, like Apache Beam, and distributed computing platforms, such as Apache Spark. Even if you are not an expert Python programmer, having some knowledge of the language will enable you to draw from a broad array of open source tools for data engineering and machine learning.

Data engineering is well supported in all the major clouds. AWS has a full range of services to support data engineering, such as AWS Glue, Amazon Managed Streaming for Apache Kafka (MSK) and various Amazon Kinesis services. AWS Glue is a data catalog and extract, transform and load (ETL) service that includes support for scheduled jobs. MSK is a useful building block for data engineering pipelines, while Kinesis services are especially useful for deploying scalable stream processing pipelines.

Google Cloud Platform offers Cloud Dataflow, a managed Apache Beam service that supports batch and stream processing. For ETL processes, Google Cloud Data Fusion provides a Hadoop-based data integration service. Microsoft Azure also provides several managed data tools, such as Azure Cosmos DB, Data Catalog and Data Lake Analytics, among others.

2. Model building

Machine learning is a well-developed discipline, and you can make a career out of studying and developing machine learning algorithms.

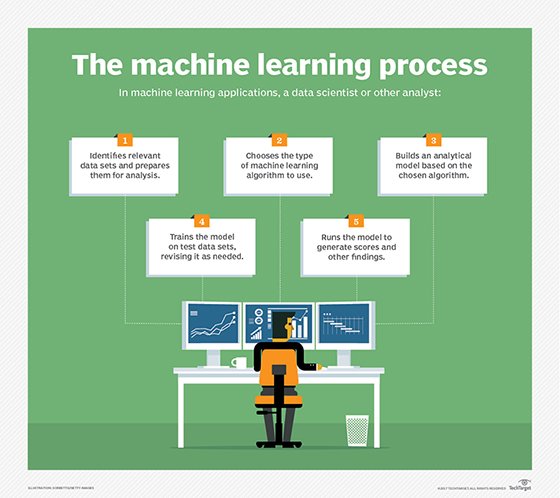

IT teams use the data delivered by engineers to build models and create software that can make recommendations, predict values and classify items. It is important to understand the basics of machine learning technologies, even though much of the model building process is automated in the cloud.

As a model builder, you need to understand the data and business objectives. It's your job to formulate the solution to the problem and understand how it will integrate with existing systems.

Some products on the market include Google's Cloud AutoML, which is a suite of services that help build custom models using structured data as well as images, video and natural language without requiring much understanding of machine learning. Azure offers ML.NET Model Builder in Visual Studio, which provides an interface to build, train and deploy models. Amazon SageMaker is another managed service for building and deploying machine learning models in the cloud.

These tools can choose algorithms, determine which features or attributes in your data are most informative and optimize models using a process known as hyperparameter tuning. These kinds of services have expanded the potential use of machine learning and AI strategies. Just as you do not have to be a mechanical engineer to drive a car, you do not need a graduate degree in machine learning to build effective models.
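To give a sense of what hyperparameter tuning means, the following sketch runs a simple grid search in plain Python. It is not how any of the cloud services above implement tuning. The tiny dataset, the one-variable ridge regression model and the candidate values are all assumptions chosen to keep the example short.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form slope for y ~ w * x with an L2 penalty lam (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def mean_squared_error(w, xs, ys):
    """Average squared difference between predictions w * x and targets."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical, slightly noisy training data and a held-out validation set.
train_x = [1.0, 2.0, 3.0, 4.0]
train_y = [2.3, 4.1, 6.4, 8.4]
val_x = [5.0, 6.0]
val_y = [10.0, 12.0]

# The hyperparameter being tuned is the regularization strength lam.
grid = [0.0, 0.1, 1.0, 10.0]

# Train one model per candidate and score each on the validation set.
results = {}
for lam in grid:
    w = fit_ridge_1d(train_x, train_y, lam)
    results[lam] = mean_squared_error(w, val_x, val_y)

# Keep the candidate with the lowest validation error.
best_lam = min(results, key=results.get)
print(best_lam, results[best_lam])
```

Managed services automate this loop over far larger search spaces, but the idea is the same: train with each candidate setting, score on held-out data and keep the best performer.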

3. Fairness and bias detection

Algorithms make decisions that directly and significantly impact individuals. For example, financial services use AI to make decisions about credit, which could be unintentionally biased against particular groups of people. This not only has the potential to harm individuals by denying them credit, but it also puts the financial institution at risk of violating regulations, like the Equal Credit Opportunity Act.

Checking for this kind of bias may seem like a menial task, but it is imperative for AI and machine learning models. Detecting bias in a model can require savvy statistical and machine learning skills, but, as with model building, some of the heavy lifting can be done by machines.

FairML is an open source tool for auditing predictive models that helps developers identify biases in their work. Experience with detecting bias in models can also help inform the data engineering and model building process. Google Cloud leads the market with fairness tools that include the What-If Tool, Fairness Indicators and Explainable AI services.
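As an illustration of the kind of statistical check involved, the sketch below compares approval rates across two groups and computes their ratio, sometimes called a disparate impact ratio. It does not reproduce the method of FairML or of any Google Cloud tool. The decisions, group names and the 0.8 review threshold are assumptions made for the example.

```python
def approval_rate(decisions):
    """Share of positive decisions (1 = approved, 0 = denied)."""
    return sum(decisions) / len(decisions)

def disparate_impact_ratio(decisions_by_group):
    """Ratio of the lowest group approval rate to the highest."""
    rates = {group: approval_rate(d) for group, d in decisions_by_group.items()}
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no approvals in any group; ratio is undefined")
    return min(rates.values()) / highest, rates

# Hypothetical credit decisions produced by a model for two groups.
decisions = {
    "group_a": [1, 1, 1, 0, 1, 1, 0, 1, 1, 1],
    "group_b": [1, 0, 0, 1, 0, 1, 0, 0, 1, 0],
}

ratio, rates = disparate_impact_ratio(decisions)

# Assumed threshold for this example: flag ratios below 0.8 for review.
flagged = ratio < 0.8
print(rates, ratio, flagged)
```

A low ratio does not prove that a model is unfair, but it is a signal that the data and the model deserve a closer look.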

4. Model performance evaluation

Part of the model building process is to evaluate how well a machine learning model performs. Classifiers, for example, are evaluated in terms of accuracy, precision and recall. Regression models, such as those that predict the price at which a house will sell, are evaluated by measuring their average error, such as the mean absolute difference between predicted and actual values.
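These metrics are straightforward to compute by hand, which helps when interpreting the numbers a cloud service reports. The following plain Python sketch uses small, made-up label and price lists. The function names are chosen for this example and are not taken from any particular library.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical classifier output.
labels = [1, 0, 1, 1, 0, 0, 1, 0]
predictions = [1, 0, 0, 1, 0, 1, 1, 0]
accuracy, precision, recall = classification_metrics(labels, predictions)

# Hypothetical house sale prices and a regression model's predictions.
sale_prices = [300000, 450000, 250000]
predicted_prices = [310000, 430000, 255000]
mae = mean_absolute_error(sale_prices, predicted_prices)

print(accuracy, precision, recall, mae)
```

Precision answers how many of the predicted positives were correct, while recall answers how many of the actual positives were found. Which one matters more depends on the cost of each kind of mistake.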

A model that performs well today may not perform as well in the future. The problem is not that the model is somehow broken, but that the model was trained on data that no longer reflects the world in which it is used. Even without sudden, major events, data drift can occur. It is important to evaluate models and continue to monitor them as long as they are in production.
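One simple way to watch for data drift is to compare a feature's recent values against the values seen at training time. The sketch below is only one of many possible checks and is not the method used by any specific cloud service. The feature values and the alert threshold of 3.0 are assumptions for illustration.

```python
import statistics

def mean_shift_score(baseline, current):
    """How far the current mean has moved from the baseline mean,
    measured in baseline standard deviations."""
    baseline_mean = statistics.mean(baseline)
    baseline_std = statistics.stdev(baseline)
    return abs(statistics.mean(current) - baseline_mean) / baseline_std

# Hypothetical values of one input feature at training time...
training_values = [50, 52, 48, 51, 49, 50, 53, 47]
# ...and the same feature observed recently in production.
production_values = [58, 60, 57, 61, 59, 62, 56, 59]

score = mean_shift_score(training_values, production_values)

# Assumed alert threshold for this example.
drifted = score > 3.0
print(score, drifted)
```

A check like this can run on a schedule for each input feature and trigger a review or retraining when a score crosses the threshold the team has agreed on.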

Services such as Amazon SageMaker, Azure Machine Learning Studio and Google Cloud AutoML include an array of model performance evaluation tools.

5. Domain knowledge

Domain knowledge is not specifically a machine learning skill, but it is one of the most important parts of a successful machine learning strategy.

Every industry has a body of knowledge that must be studied in some capacity, especially when building algorithmic decision-makers. Machine learning models are constrained to reflect the data used to train them. Humans with domain knowledge are essential to knowing where to apply AI and to assessing its effectiveness.