AI in cloud computing: Benefits and concerns

Businesses see public cloud as the primary vehicle for delivering generative AI's benefits in productivity, operational efficiency and workflow automation, but challenges remain.

A significant portion of large enterprises have adopted machine learning and artificial intelligence services from public cloud service providers. These companies are using the cloud providers' advanced AI technologies and pretrained machine learning models for proof-of-concept applications, decision-making analytics and data-driven task automation.

With the explosion of generative AI (GenAI), the business decision to use AI in cloud computing follows a familiar path. Gartner reported that approximately 70% of enterprises already use some form of public cloud services provided by the big three -- AWS, Microsoft Azure and Google Cloud -- although Alibaba Cloud and Huawei Cloud also own notable IaaS market share.

IaaS providers run numerous AI and machine learning (ML) workloads in multi-tenant cloud environments. Organizations implement AI in the public cloud to gain access to a complete stack of technologies and services, including computational power to process large amounts of data, as well as storage, data analytics, large language models (LLMs), AI algorithms, APIs and a host of tools maintained by the cloud service providers (CSPs). All these technologies are accessible via the internet. Companies subscribe to these services, often on a monthly pay-as-you-go basis, and use service-level agreements (SLAs) to define performance levels, security measures, acceptable downtime and disaster recovery plans, as well as compensation if something goes wrong.

Impact of AI in cloud computing

Early AI implementations in the cloud focused on access to computational resources and database storage. These projects often required data science expertise and the ability to bring your own resources. GenAI flipped the switch, gaining widespread traction when OpenAI launched ChatGPT, an early text-based chatbot technology, in November 2022.

GenAI's humanlike text generation requires LLMs that are continuously trained on large text-based data sets. As medium and large companies begin to adopt AI policies and test drive AI and ML capabilities in the public cloud, more organizations are investing in ways to create business value by taking advantage of GenAI's economies of scale.

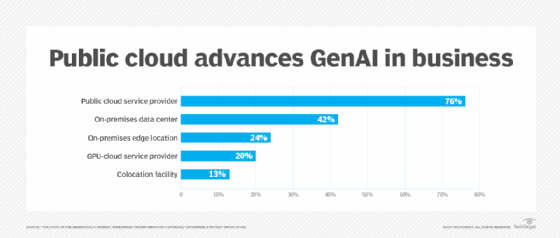

Three-fourths of organizations worldwide are running their GenAI workloads on public cloud providers, according to a September survey by TechTarget's Enterprise Strategy Group of IT and business decision-makers involved in their company's GenAI initiatives, which range from proof of concept to production. By contrast, 42% of respondents to the multiple-response survey are using on-premises data centers and 24% are using on-premises edge computing. "Most companies, the bigger they are, use a 'blend' because they have their own data centers," said Mark Beccue, principal analyst at Enterprise Strategy Group. "They have their own cloud. They kind of hedge their bets."

Key benefits of AI in cloud computing

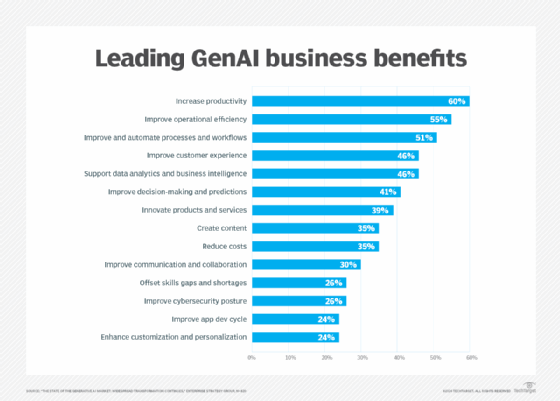

Cloud AI benefits are many and varied, including the ability to access services from any location, pay only for services as needed, experiment with projects cost-effectively, build LLMs on AI infrastructure and collaborate with experienced cloud AI teams.

AI infrastructure with advanced technologies

Public cloud providers offer a faster and sometimes cost-effective way to try out proof-of-concept projects using the latest AI services with access to prebuilt models and tools. For many enterprises, these resources and collaboration with highly trained AI cloud computing teams offer valuable partnerships not available on-premises. All three major cloud providers, Beccue noted, are at the top of the AI field and have been investing in AI for a long time.

Pay-as-you-go model

Updating on-premises infrastructure to provide the computing power (GPUs), storage capacity and network bandwidth needed to support the data pipeline and training of GenAI models is a major investment. Businesses can benefit from the cost savings of AI in cloud computing when they only pay for what they use. "Companies are picking public cloud right now because it is pay as you go," Beccue said. "When you are testing the waters, this is a great way to do that. You can spin things up pretty quickly."
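The pay-as-you-go trade-off comes down to simple arithmetic. The following Python sketch estimates how many hours of use it would take before renting cloud capacity costs as much as buying comparable hardware up front. The function names and all dollar figures are hypothetical placeholders, not quotes from any provider, and the sketch ignores power, staffing and depreciation.

```python
def pay_as_you_go_cost(hours_used: float, hourly_rate: float) -> float:
    """Total cloud spend when billed only for the hours actually used."""
    return hours_used * hourly_rate


def break_even_hours(upfront_cost: float, hourly_rate: float) -> float:
    """Hours of cloud use at which rental spend equals the up-front purchase."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return upfront_cost / hourly_rate


# Hypothetical figures for illustration only.
HOURLY_RATE = 4.0        # assumed cost of one rented GPU instance per hour
UPFRONT_COST = 20_000.0  # assumed purchase price of comparable on-premises hardware

pilot_cost = pay_as_you_go_cost(hours_used=200, hourly_rate=HOURLY_RATE)
threshold = break_even_hours(UPFRONT_COST, HOURLY_RATE)
print(f"200-hour pilot: ${pilot_cost:,.2f}; break-even after {threshold:,.0f} hours")
```

Under these assumed numbers, a short proof of concept costs a small fraction of the hardware purchase, which is the "testing the waters" scenario Beccue describes. Sustained, heavy use pushes the comparison the other way.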

Scalability and performance

Cloud computing offers a way to test, build and scale LLMs on AI infrastructure. Project managers can adjust their servers and databases to meet demand by scaling the systems up or down. This flexibility can help businesses handle peak usage, high volumes of data and unexpected events.
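Scaling up or down can be pictured as a simple proportional rule: grow or shrink the number of instances so that average utilization moves back toward a target. The Python sketch below is an illustration of that idea under stated assumptions, not any provider's autoscaling API. The function name, the utilization percentages and the instance limits are all hypothetical.

```python
import math


def desired_instances(current: int, observed_pct: int, target_pct: int,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Return an instance count that moves utilization toward the target.

    observed_pct and target_pct are average utilization as whole percentages.
    The result is clamped to the [min_instances, max_instances] range.
    """
    if current < 1 or target_pct <= 0:
        raise ValueError("current must be >= 1 and target_pct must be positive")
    proposed = math.ceil(current * observed_pct / target_pct)
    return max(min_instances, min(max_instances, proposed))


# Hypothetical scenarios: a demand peak, a quiet period and a capped spike.
peak = desired_instances(current=4, observed_pct=90, target_pct=60)
quiet = desired_instances(current=4, observed_pct=30, target_pct=60)
capped = desired_instances(current=8, observed_pct=120, target_pct=60)
print(peak, quiet, capped)
```

The upper limit matters as much as the rule itself: without a cap, an unexpected spike in demand becomes an unexpected spike in the bill.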

Accessibility via the public internet

Companies that have adopted distributed and remote work environments can access their AI services and technologies from anywhere if their teams have internet connectivity.

Challenges of AI in cloud computing and actions to take

Public cloud providers offer a multi-tenancy model with shared resources. A major concern for many companies is where the data for the AI technologies resides. Heavily regulated industries can face strict data privacy, security and compliance requirements.

Data privacy

Companies must comply with data privacy regulations (HIPAA and GDPR) and privacy standards (ISO 31700, ISO 29100, ISO 27701, FIPS 140-3 and NIST Privacy Framework) or risk penalties. AI projects increase the risks because massive amounts of real data dictate the behavior of ML models. Model developers need to ensure that data is treated with fairness and transparency, said Rob van der Veer, senior principal expert at software assurance platform provider Software Improvement Group, co-editor of the EU's AI Act security standard and advisor to ISO/IEC and the Open Worldwide Application Security Project. The data privacy requirements in the EU's GDPR are not specific to AI. Meanwhile, California, one of the U.S. states with data privacy regulations (CCPA and CPRA), is taking the lead in passing GenAI-related bills. Data residency and geolocation are also concerns with cloud AI, especially in Brazil, Singapore and the EU. Companies can set boundaries around data location in their SLAs.

Security and compliance

Public cloud providers offer security and compliance frameworks that can aid anomaly detection in real time. Many companies adopt cloud data storage running on CSPs but keep their sensitive data on-premises to meet information security and compliance requirements. Cloud data storage and analytics platform maker Snowflake, which processes proprietary and sensitive data of many Forbes Global 2000 companies on AWS, Azure and Google Cloud, was breached when, according to the company, a user logged in and failed to use multifactor authentication. Companies that use public cloud AI services should examine the CSP's monitoring and logging tools, employ data encryption at rest and in transit, require strict identity and access management controls, and perform regular audits for compliance.
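A recurring audit of those controls can start as a plain checklist. The Python sketch below flags which controls are switched off or missing in a settings record. The control names and the settings dictionary are hypothetical; they stand in for whatever a given CSP's configuration export actually contains.

```python
# Hypothetical control names mapped to a plain-language description.
REQUIRED_CONTROLS = {
    "mfa_enforced": "multifactor authentication is required for all users",
    "encryption_at_rest": "stored data is encrypted",
    "encryption_in_transit": "data is encrypted while moving over the network",
    "audit_logging": "access and configuration changes are logged",
}


def missing_controls(settings: dict) -> list:
    """Return the required controls that are absent or disabled in settings."""
    return [name for name in REQUIRED_CONTROLS if not settings.get(name, False)]


# Hypothetical account settings: MFA is off and logging was never configured.
account_settings = {
    "mfa_enforced": False,
    "encryption_at_rest": True,
    "encryption_in_transit": True,
}

gaps = missing_controls(account_settings)
for name in gaps:
    print(f"Gap: {REQUIRED_CONTROLS[name]}")
```

Treating an absent setting the same as a disabled one is a deliberate choice here: a control that was never configured should surface in the audit, not pass silently.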

Cost controls

For many companies, the cost of cloud AI services is difficult to gauge. Shadow AI, the unsanctioned use of AI tools that, like shadow IT, adds spending outside central oversight, is another concern. The FinOps Open Cost and Usage Specification (FOCUS 1.0), released in June, aims to normalize cloud billing for IaaS by using a common taxonomy and metrics for cost and usage data sets. AWS, Google, Microsoft and Oracle contributed to the open source project, which is hosted on GitHub. FOCUS can be extended to SaaS.
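The idea behind a common billing taxonomy can be shown in a few lines. The Python sketch below maps rows from two differently shaped billing exports onto one shared set of columns and then totals cost per service. The provider names, raw field names and amounts are hypothetical. The output columns ProviderName, ServiceName and BilledCost are modeled on FOCUS naming and should be checked against the specification before use.

```python
from collections import defaultdict

# Hypothetical raw field names per provider; real billing exports differ.
FIELD_MAPS = {
    "provider_a": {"service": "svc", "cost": "amount"},
    "provider_b": {"service": "service_label", "cost": "charge"},
}


def normalize(provider: str, record: dict) -> dict:
    """Map one provider-specific billing row onto a shared column set."""
    fields = FIELD_MAPS[provider]
    return {
        "ProviderName": provider,
        "ServiceName": record[fields["service"]],
        "BilledCost": float(record[fields["cost"]]),
    }


def total_by_service(rows: list) -> dict:
    """Sum billed cost per (provider, service) pair across normalized rows."""
    totals = defaultdict(float)
    for row in rows:
        totals[(row["ProviderName"], row["ServiceName"])] += row["BilledCost"]
    return dict(totals)


# Hypothetical billing rows for illustration only.
raw = [
    ("provider_a", {"svc": "ml-training", "amount": "10.00"}),
    ("provider_a", {"svc": "ml-training", "amount": "2.50"}),
    ("provider_b", {"service_label": "ai-inference", "charge": "7.25"}),
]
normalized = [normalize(provider, record) for provider, record in raw]
totals = total_by_service(normalized)
print(totals)
```

Once every row shares the same columns, cost reports no longer need provider-specific logic, which is the practical payoff of a shared specification.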

Integration and vendor lock-in

Public cloud providers might favor integration with their own services instead of third-party applications, which could lead to vendor lock-in. Data integration remains a major challenge for AI deployments. AI models require massive amounts of structured and unstructured data often coming from fragmented systems whose protocols and APIs might need updating to facilitate data exchange. Many organizations rely on legacy IT systems that may not be compatible with modern AI technologies and standards.

Talent gap

Finding personnel with cloud expertise is challenging enough -- not to mention data scientists, AI and ML engineers, or the nearly impossible-to-find prompt engineer, a role that all too often gets added to the duties of another member of the AI team. Google Cloud offers a prompt-grounding tool designed to address prompting tasks. Project managers need to ensure that AI and software teams follow best practices. Data engineers might not know about standard software development practices, such as versioning, unit testing and keeping documentation up to date -- even when experimenting with AI. Software engineering teams tasked with AI model alignment -- ensuring the AI system matches the designer's goals and is ethically sound -- might lack AI expertise.

Regulatory and legal issues

Commercial data sets that augment the data pipeline used to train and fine-tune the LLMs needed for AI can help companies get started. But some data sets may offer limited information, which can lead to bias or diversity issues. Improper handling of private, confidential and copyrighted data used to train AI models can result in compliance violations and lawsuits.

Examples of AI in cloud computing

Cloud AI business applications, such as the following, permeate numerous industries, including retail, customer service, financial services, product development, manufacturing and IT automation:

- Dynamic workloads. Companies with seasonal business fluctuations may benefit from AI in cloud computing because public cloud data centers enable rapid scalability of services. Financial software provider Intuit is developing GenAI to increase personalization and performance across its product lines, including TurboTax, QuickBooks and Credit Karma.

- Expansion into new verticals. The integration of deep learning and ML models has helped food delivery company DoorDash, which runs primarily on AWS, use personalization, catalog development, product knowledge graph building and search optimization to expand beyond restaurants into new verticals, such as groceries, convenience and retail. When training these systems, humans need to remain in the loop. "Essentially, you can speed up what humans can do with AI," said Sudeep Das, head of ML/AI and new business verticals at DoorDash, who discussed his company's integration of LLMs during The AI Conference in September 2024.

- AI agents. AI-powered chatbots use ML and natural language processing to interact with customers and perform routine tasks that require limited functionality. DoorDash worked with the AWS Generative AI Innovation Center to develop a contact center powered by AI agents with voice and chat capabilities to support delivery personnel known as "Dashers." In other applications, AI agents use task-specific AI models to help with image and video generation, translation, IT automation and code completion.

- Global applications. Companies that need to run their AI capabilities in multiple geographical regions could benefit from public cloud AI services. Financial services company Block, owner of Square, which enables SMBs to accept card payments, offers its payment processing platform in multiple countries. The company runs on AWS and Google Cloud and uses GenAI for its customer support options.

- Startups and cloud-first organizations. While large enterprises represent a significant percentage of AI public cloud users, new companies can also take advantage of public cloud AI technologies and, in some cases, lead innovation. Microsoft's Startups Founders Hub for the Azure ecosystem offers free access to AI models, such as OpenAI's GPT-4 and Meta's Llama 2; one-on-one meetings with experts; numerous software tools; and up to $150,000 in free Azure credits, according to Microsoft.

Many proof-of-concept AI projects fail to make it to the production application phase, partly because no one has clearly identified the business objectives.

Can AI in cloud computing help your organization?

"What business problem is the organization trying to solve?" Beccue offered. A cost-benefit analysis could determine if AI cloud is the answer. "The business case will give you the answer of where you want to go," he explained. Companies should also look at their core capabilities and determine if they should build, buy or partner with other companies.

"There has been a proliferation of companies that all claim that they have generative AI capabilities to offer to organizations," said Eric Buesing, partner at McKinsey & Company. Business leaders can be overwhelmed by the evangelism. "I think the hyperscalers are the most advantaged," he reasoned, pointing to Microsoft, AWS, Google and Salesforce, among others. "These organizations are investing a tremendous amount. They already have the contact center technology infrastructure, in many cases, in many organizations. They are betting heavily that they will unlock use cases not only for agents, but for the end customer."

Future of AI in cloud computing

As more organizations invest in hybrid and multi-cloud architectures, a host of cloud AI startups offer GPU infrastructure, storage and related services. IBM Cloud and Oracle Cloud Infrastructure are also in the mix for public and hybrid clouds, especially for companies that want industry-specific AI tools or systems integration. "Public cloud," Beccue said, "is set up well as a key piece of AI going forward."

Kathleen Richards is a freelance journalist and industry veteran. She's a former features editor for TechTarget's Information Security magazine.