Ensuring predictive model accuracy in the age of COVID-19

Data scientists are used to concept drift, but the constantly changing data due to COVID-19 can be hard to address. Experts discuss how to maintain model accuracy in the pandemic.

A growing number of organizations in every industry have been relying on predictive models to improve operational efficiency, customer relationships and financial performance. However, the sudden and dramatic effects of the COVID-19 pandemic degraded predictive model accuracy everywhere.

Gradual changes in data -- such as changes in shopping patterns -- can cause concept drift, which means the predictive model accuracy progressively degrades. Michael Berthold, CEO and co-founder of KNIME, an open source analytics platform provider, said concept drift can be difficult to detect because it may appear to be a random effect. However, because the COVID-19 impact was so sudden, concept jump occurred instead.
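To make the distinction concrete, here is a minimal sketch of how a monitoring job might tell gradual drift from a sudden jump by watching a model's prediction errors over time. It is an illustration only, not a method described by Berthold or KNIME. The function names, the window size and both thresholds (`drift_ratio`, `jump_ratio`) are assumptions chosen for the example and would need tuning on real data.

```python
from statistics import mean


def block_errors(errors, window):
    """Mean absolute error over consecutive, non-overlapping blocks."""
    return [
        mean(abs(e) for e in errors[i:i + window])
        for i in range(0, len(errors) - window + 1, window)
    ]


def classify_shift(errors, window=5, drift_ratio=1.5, jump_ratio=3.0):
    """Label an error history as 'stable', 'drift' or 'jump'.

    Hypothetical rule of thumb (an assumption, not a standard):
    - 'jump'  if the error rises between two adjacent blocks by at
      least (jump_ratio - 1) times the baseline error
    - 'drift' if the latest block is at least drift_ratio times the
      baseline but no single step was that abrupt
    - 'stable' otherwise
    The first block is treated as the baseline.
    """
    blocks = block_errors(errors, window)
    if len(blocks) < 2:
        raise ValueError("need at least two full windows of errors")

    baseline = blocks[0] or 1e-9  # avoid a zero baseline
    biggest_step = max(b - a for a, b in zip(blocks, blocks[1:]))

    if biggest_step >= (jump_ratio - 1) * baseline:
        return "jump"
    if blocks[-1] >= drift_ratio * baseline:
        return "drift"
    return "stable"
```

A check like this only reports that errors have changed. As Berthold notes later in the article, working out why still takes context knowledge from a person.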

How COVID-19 impacted predictive model accuracy

Several pandemics have occurred in recent history, such as the H1N1 influenza pandemic in 2009, but their impacts were mild compared to COVID-19. The current health crisis has disrupted lives and businesses around the world.

"In November or December [2019], we might have been trying to decide whether to run a special at our hotel on the weekend; now we're worrying about whether you can even get anybody into a hotel," said Fallaw Sowell, associate professor of economics at Carnegie Mellon University's Tepper School of Business. "Most likely the models we were looking at and were important are no longer really meaningful because we're thinking about other criteria."

And it's not that organizations have different objectives than they did a few months ago: They're going to be evaluated on different criteria, which will require new models, Sowell said.

One of the challenges is a lack of relevant data. While the Spanish flu's impact is more similar to COVID-19 than recent pandemics, there is little data available about the 1918 pandemic. While past financial crises may help inform current economic models, the 2001 dot-com crash and 2008 financial crisis stemmed from entirely different circumstances.

"Even though this economic crisis is different from the previous one, there are certain [lessons] we can take forward," said Anand Rao, global artificial intelligence lead at PwC. "We all know about the U curve, the J curve, the V curve and the W curve -- all of those different sort of economic scenarios people started working. Even though you don't know which curve you're on, you can look at 'How do I get prepared for the different types.'"

Why maintaining predictive model accuracy remains difficult

The rapidly changing nature of COVID-19 means that data scientists cannot simply retrain models using 2020 data.

"If I now train [using] the past year of customer behavior data, my model is going to be totally messy because it has some normal data, some total lockdown data and some getting out of lockdown data," Berthold said. "You get sort of the worst of all three models."

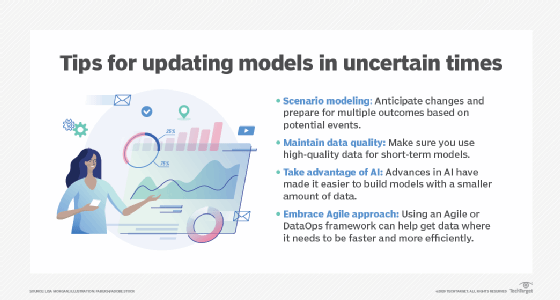

Rao said recent interest in scenario modeling has spiked because organizations want to be prepared for multiple outcomes. For example, a consumer packaged goods (CPG) company wanted to examine demand given different COVID-19 scenarios, which PwC accomplished using only 20 data points. Rao said he would have dismissed the idea of such a project out of hand just a few months ago.

"COVID-19 accelerated different ways of approaching AI in a shorter time frame with a [smaller] amount of data," Rao said. "I'm not saying that the AI has got everything right, but it allows you to explore various options, maybe more nimbly than what people are used to for sure."

Other creative problem-solving is occurring out of necessity. Sowell said the Centers for Disease Control and Prevention (CDC) faces a data quality problem: Different locations in the country are recording and reporting COVID-19 deaths differently. One way around this particular problem is to calculate the number of excess deaths. Using five years of data (2015 to 2019), the CDC can compare the average number of deaths per month by location to the actual number of 2020 deaths.

"They're now going to a better source of data and a little different model to address the question," Sowell said. "They're building off the idea [that] it's a break. If we can look at it the right way, we can get some meaningful information about total excess deaths."
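The excess-deaths comparison comes down to simple arithmetic, sketched below. This is an illustration of the idea as the article describes it, not the CDC's actual methodology, which may involve adjustments not covered here. The function name, the record layout and the sample numbers are all assumptions. The counts are made up for the example and are not real mortality data.

```python
from collections import defaultdict
from statistics import mean


def excess_deaths(history, current):
    """Observed deaths minus the historical monthly average.

    history: iterable of (location, year, month, deaths) records
             for the baseline years, e.g. 2015 to 2019
    current: dict mapping (location, month) -> deaths observed in
             the year of interest
    Returns a dict mapping (location, month) -> excess deaths.
    Keys with no baseline history are skipped.
    """
    baseline = defaultdict(list)
    for location, _year, month, deaths in history:
        baseline[(location, month)].append(deaths)

    return {
        key: observed - mean(baseline[key])
        for key, observed in current.items()
        if key in baseline
    }


# Made-up counts for one hypothetical location, April of each year.
history = [
    ("Region A", 2015, 4, 100),
    ("Region A", 2016, 4, 110),
    ("Region A", 2017, 4, 120),
    ("Region A", 2018, 4, 130),
    ("Region A", 2019, 4, 140),
]
current = {("Region A", 4): 180}

result = excess_deaths(history, current)
```

Because the calculation counts all deaths rather than deaths attributed to a specific cause, it does not depend on how each location records COVID-19 on its reports, which is the inconsistency Sowell describes.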

What might future hindsight suggest?

Will data scientists wish they had solved today's problems differently? If so, what might they suggest? Berthold thinks organizations will prioritize human supervision of automated predictive systems.

"From a data science perspective, you cannot detect an event like [COVID-19] purely based on data," Berthold said. "You'll find that out in a few minutes or a few hours -- depending on what you're looking for -- that something has changed massively, but you're not going to find that without context knowledge. I think people are realizing that running predictive models in a totally unbounded and unmonitored way is dangerous."

Vikas Khorana, co-founder and CTO at Ntooitive, a digital marketing and technology company, expects extreme testing will become the norm and that the outputs will be applied to processes, including business continuity and disaster recovery.

Rao thinks data scientists will embrace their own form of Agile.

"That's something people are doing now, but we should have been doing it before the pandemic," Rao said. "Everyone has said it takes six or eight weeks before you can build a model, but people are slowly dying. You need something quick and dirty to go with and then go and refine it. Having a methodology around some of those things is still evolving, and that's something that we should probably figure out how best to do."