Data science vs. machine learning vs. AI: How they work together

Data science, machine learning and AI are central to analytics and other enterprise uses. Here's what each involves and how combining them benefits organizations.

Today's organizations are awash in data. Just a decade ago, a gigabyte of data still seemed like a large quantity. Nowadays, however, some large organizations are managing upward of a zettabyte. To get a sense of how much data that is, if your typical laptop or desktop computer has a 1 TB hard drive inside it, a zettabyte is equal to one billion of those hard drives.

How can organizations even hope to get any business value from so much data? They need to be able to analyze it and identify needles of valuable knowledge in an almost infinite haystack. That's where the combination of data science, machine learning and AI has become remarkably useful -- but you don't need anywhere near a zettabyte of data for those three things to be relevant.

Once relegated to esoteric corners of academia and research or the wonky side of IT and data management, they've collectively emerged as crucial technology topics for organizations of all types and sizes in various industries. However, there's often still confusion about data science vs. machine learning vs. AI and what each involves. Understanding the nature and purpose of these transformative concepts will point the way toward how to best apply them to meet pressing business needs.

Let's look at each one, plus the differences between them and how they can be used together.

What is data science?

While data has been central to computing since its inception, a separate field dealing specifically with data analytics didn't emerge until many decades later. Rather than the technical aspects of data management, data science focuses on statistical approaches, scientific methods and advanced analytics techniques that treat data as a discrete resource, regardless of how it's stored or manipulated.

At its core, data science aims to extract useful insights from data given the specific requirements of business executives and other prospective users of those insights. What are customers interested in purchasing? How is the business doing with a particular product or in a geographic region? Is the COVID-19 pandemic straining or growing resources? These are questions that can be answered using the mathematics, statistics and data analytics that are part of the data science process.

Traditionally, organizations have depended on business intelligence systems to derive insights from their growing pools of data. However, BI systems depend partly on humans to spot trends in spreadsheets, dashboards, charts or graphs. They're also challenged by at least four of the Vs of big data: volume, velocity, variety and veracity. As organizations store data in increasing quantities and collect it at increasing speed from a wide variety of data sources, in different formats and with different data quality levels, the conventional data warehousing and business analytics approaches that BI is built on fall short.

By comparison, the experiences of leading-edge companies, such as Amazon, Google, Netflix and Spotify, show how applying the fundamental aspects of data science can help uncover deeper insights that provide significant competitive advantages over business rivals. They and other organizations -- banking and insurance companies, retailers, manufacturers and many more -- use data science to spot patterns in data sets, identify potentially anomalous transactions, uncover missed opportunities with customers and create predictive models of future behavior and events.

Likewise, healthcare providers rely on data science to help diagnose medical conditions and improve patient care, while government agencies use it for things such as providing early notification of potentially life-threatening situations and ensuring the safety and security of critical systems and infrastructure.

Data science work is done primarily by data scientists. While there's no universal consensus on their job description, this is the minimum set of skills that effective data scientists must have:

- a firm grasp on statistics and probability;

- knowledge of various algorithmic approaches to analyzing data;

- the ability to use various tools, technologies and techniques to plumb large data sets for the desired analytics results; and

- data visualization capabilities to provide visibility into the derived insights.

As part of data science teams, data scientists often work with data engineers to facilitate the collection and wrangling of data from multiple source systems, as well as business analysts who understand evolving business needs, data analysts who understand the characteristics of changing data sets and developers who can help put the analytical models generated by data science applications into production.

Increasingly, those models are being called on to do more than just provide a snapshot of insights into the current state of data. Data scientists can train algorithms to learn patterns, correlations and other characteristics about sample data and then analyze full data sets that they haven't seen before. In this way, data science has contributed to the growth of artificial intelligence and, in particular, the use of machine learning to support the goals of AI.

What is machine learning?

One of the hallmarks of intelligence is the ability to learn from experience. If machines can identify patterns in data, they can then use those patterns to generate insights or predictions on new data that they're run against. This is the fundamental idea behind machine learning.

Machine learning relies on algorithms that can encode learning from examples of good data into models. The models can be used for a wide range of applications, such as classifying data into categories ("Is this image a cat?"), predicting a value for some data given previously identified patterns ("What is the probability that this transaction is fraudulent?"), and identifying groups in a data set ("What other products can I recommend to those who have bought this product?").

The core concepts of machine learning are embodied in the ideas of classification, regression and clustering. A wide range of machine learning algorithms have been created to perform those tasks across disparate data sets. The available algorithms include decision trees, support vector machines, K-means clustering, K-nearest neighbors, Naïve Bayes classifiers, random forests, Gaussian mixture models, linear regression, logistic regression, principal component analysis and many others. Data scientists typically build and run the algorithms; some data science teams now also include machine learning engineers, who help code and deploy the resulting models.

The machine learning process involves different types of learning, with varying levels of guidance by data scientists and analysts. The primary alternatives are:

- supervised learning, which starts with human-labeled training data that helps instruct algorithms on what to learn;

- unsupervised learning, a method in which an algorithm is left to discover information on its own using unlabeled training data; or

- reinforcement learning, which lets algorithms learn through trial and error with initial instructions and ongoing oversight from data scientists.

Of late, no algorithmic approach has generated as much excitement and promise as the use of artificial neural networks. Like the biological systems they're inspired by, neural networks comprise neurons that can take input data, apply weights and bias adjustments to the inputs and then feed the resulting outputs to additional neurons. Through a complex series of interconnections and interactions among these neurons, the neural network can learn over time how to adjust the weights and biases in a way that provides the desired results.

What started out in the 1950s simply as a single layer of neurons in the perceptron algorithm has evolved into a much more complicated approach -- known as deep learning -- that uses multiple layers to produce nuanced and sophisticated results. These multilayered neural nets have shown a remarkable ability to learn from large data sets and enable uses such as facial recognition, multilingual conversational systems, autonomous vehicles and advanced predictive analytics.

With a significant push from data-drenched companies like Google, Netflix, Amazon, Microsoft and IBM, what once seemed like a research hypothetical rapidly became the here-and-now possible, really taking hold in the early 2000s. The availability of big data, capabilities of data science and power of machine learning not only provide answers to today's organizational challenges but also may help crack the longstanding challenge of making AI a full reality.

What is artificial intelligence?

AI is an idea older than computing itself: Is it possible to create machines that have the cognitive ability of humans? The idea has long inspired academicians, researchers and science fiction writers, and it emerged as a practical pursuit in the middle of the 20th century. In 1950, computing pioneer and well-known code-cracker Alan Turing came up with a fundamental test of machine intelligence, which became known as the Turing Test. The term artificial intelligence was coined in the proposal for a seminal AI conference that took place at Dartmouth in 1956.

AI still remains a dream, at least in the form that many envisioned decades ago. The concept of a machine with the full range of cognitive and intellectual capabilities that people have is known as artificial general intelligence (AGI), or, alternatively, general AI. No one has yet built such a system, and the development of AGI may be decades away, if it's feasible at all.

However, we have been able to tackle narrow AI tasks. Cognilytica, my research firm, has defined seven patterns of AI that focus on specific needs for perception, prediction or planning. For example, they include training machines to:

- accurately recognize images, objects and other elements in unstructured data;

- have meaningful conversational interactions with people;

- use derived insights to run predictive analytics applications;

- spot patterns and anomalies in large data sets;

- create detailed profiles of individuals for hyper-personalization uses;

- drive autonomous systems with minimal or no human involvement; and

- solve scenario simulations and other challenging goal-driven problems.

Each of these narrow use cases provides significant capabilities and value today, despite not addressing the overarching goals of AGI. The development of machine learning has directly led to the advancement of these narrow AI applications. And because data science has made machine learning practical, it too has helped make them a reality.

Differences between data science, machine learning and AI

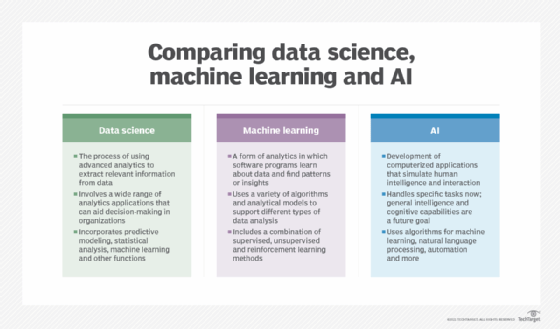

While data science, machine learning and AI have affinities and support each other in analytics applications and other use cases, their concepts, goals and methods differ in significant ways. To further differentiate between them, consider these lists of some of their key attributes.

Data science:

- focuses on extracting information needles from data haystacks to aid in decision-making and planning;

- is applicable to a wide range of business issues and problems through descriptive, predictive and prescriptive analytics applications;

- deals with data at a small scale up through very large data sets; and

- uses statistics, mathematics, data wrangling, big data analytics, machine learning and various other methods to answer analytics questions.

Machine learning:

- focuses on providing a means for algorithms and systems to learn from experience with data and use that experience to improve over time;

- learns by examining data sets rather than explicit programming, which makes use of data science methods, techniques and tools a key asset;

- can be done through supervised, unsupervised or reinforcement learning approaches; and

- supports artificial intelligence uses, especially narrow AI applications that handle specific tasks.

Artificial intelligence:

- focuses on giving machines cognitive and intellectual capabilities similar to those of humans;

- encompasses a collection of intelligence concepts, including elements of perception, planning and prediction;

- is capable of augmenting or replacing humans in specific tasks and workflows; and

- currently doesn't address key aspects of human intelligence, such as commonsense understanding, applying knowledge from one context to another, adapting to change and displaying sentience and awareness.

How data science, machine learning and AI can be combined

The business value of data science on its own is significant. Combining it with machine learning adds even more potential to generate valuable insights from ever-growing pools of data. Used together, data science and machine learning also drive a variety of narrow AI applications and might eventually solve the challenge of general AI.

Here are some specific examples of how organizations are combining data science, machine learning and AI to great effect:

- predictive analytics applications that forecast customer behavior, business trends and events based on analysis of constantly changing data sets;

- conversational AI systems that can engage in highly interactive communications with customers, users, patients and other individuals;

- anomaly detection systems that underpin adaptive cybersecurity and fraud detection processes to help organizations respond to continually evolving threats; and

- hyper-personalization systems that enable targeted advertising, product recommendations, financial guidance and medical care, plus other personalized offerings to customers.

While data science, machine learning and AI are separate concepts that individually offer powerful capabilities, using them together is transforming the way we manage organizations and business operations -- and how we live, work and interact with the world around us.