noisy data

What is noisy data?

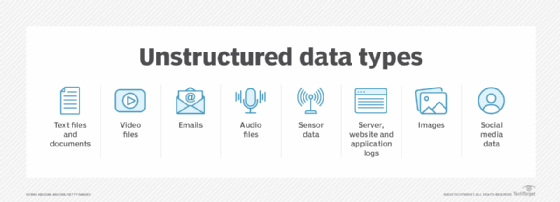

Noisy data is a data set that contains extra meaningless data. Almost all data sets will contain a certain amount of unwanted noise. Noisy data can be filtered and processed into a higher quality data set. The term has also been used as a synonym for corrupt data or data that cannot be understood and interpreted correctly by machines, such as unstructured data.

To illustrate the effect of noisy data, imagine trying to listen to a conversation in a crowded room. The human brain is excellent at filtering out other conversations so that you can focus on one, but if the room is too loud it becomes difficult or impossible to follow the conversation you are listening to and you lose the message you are trying to hear. In the same way, the more extra information is added to a data set, the harder it becomes to find the pattern you are looking for in the data.

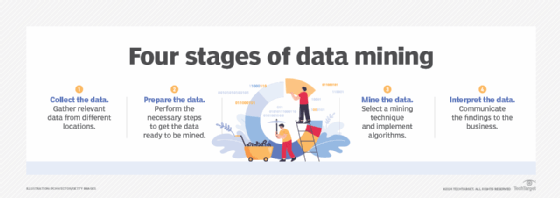

Noisy data unnecessarily increases the amount of storage space required and can adversely affect the results of any data mining analysis. Statistical analysis can use information gleaned from historical data to weed out noisy data and facilitate data mining.

Machine learning algorithms are particularly adept at sorting through noisy data to find underlying patterns. These algorithms can be misled though if the data is of low quality or has misleading components. This can lead to a garbage in, garbage out situation.

Noisy data can be caused by hardware failures, programming errors, and gibberish input from speech or optical character recognition programs. Spelling errors, industry abbreviations and slang can also impede machine reading. Natural fluctuations in sensors and measurement can add extra noise to readings. Gathering too broad of a data set can also make it hard to analyze.

Types of noisy data

Since the fields of data science and statistical analysis are very broad, there isn't an established classification for noise in data. Nevertheless, it can be broadly gathered into a few categories that can help us to understand the causes and types of noise.

To help illustrate, imagine a study of school-age children's growth rates that uses a data set with the heights of children in various school grades.

Random noise is extra information that has no correlation to the underlying data that is somehow introduced into the measurements or data set. It may also be called white noise. Almost any measurement will have a certain amount of random noise added to it, especially if it involves real-world measurements.

In this imagined study, many things can add random noise to measuring someone's height: how accurate the ruler is, how they round off the measurement, the person's posture or even how thick their socks are.

Misclassified data is information that is incorrectly labeled or sorted in a data set. This can be caused by human error or as a fault during data importing.

Many things can happen to misclassify measurement data. Someone might incorrectly use inches instead of centimeters, or accidentally write in the weight where the height should be written. The data may also be damaged during import -- perhaps a spreadsheet has an extra cell inserted, causing all the data of one column to be offset by one.

Uncontrolled variables are extra factors that affect the data but are not accounted for. They can make the data look random when it is not or introduce patterns that aren't there.

Many factors can affect a child's height and growth including nutrition, family history and even socioeconomic factors. If these aren't accounted for, the data may be difficult to interpret.

Superfluous data is extra information that is completely unrelated to the information being examined. There may be so much extra information that what you are looking for is completely hidden.

The study might add in the height data from the last hundred years or military recruitment height data. If all this was added to the same data set but not properly identified, it would be difficult to untangle and find the modern data the researchers are looking for.

How to clean noisy data

There are many methods to remove noise and produce the cleanest possible data. The exact methods and implementations will depend on the data being worked on and the end goals.

Filtering is removing unwanted data. This can be as simple as removing certain categories or types of data from the analysis. Analysts may also filter out outliers, such as unusually high or low readings or ones very far from the mean data set.

Data binning is where the data is sorted into groups or categories to remove some of the random variance between entries.

Linear regression is a mathematical method to determine the correlation between the data and other variables. It can help determine how closely related the data is to the output.

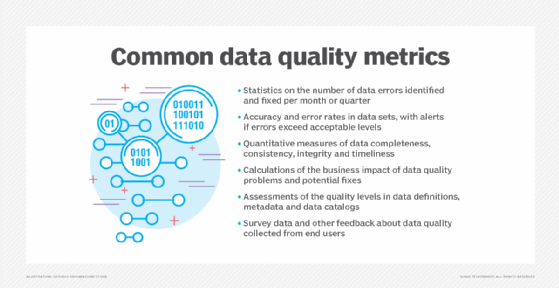

Read how organizations can use unstructured data to their benefit. Explore nine data quality issues that can sideline AI projects and see why good data quality for machine learning is an analytics must.