data sampling

What is data sampling?

Data sampling is a statistical analysis technique used to select, manipulate and analyze a representative subset of data points to identify patterns and trends in the larger data set being examined. It enables data scientists, predictive modelers and other data analysts to work with a smaller, more manageable subset of data, rather than trying to analyze the entire data population. With a representative sample, they can build and run analytical models more quickly, while still producing accurate findings.

Why is data sampling important?

Data sampling is a widely used statistical approach that can be applied to a range of use cases, such as analyzing market trends, web traffic or political polls. For example, researchers who use data sampling don't need to speak with every individual in the U.S. to discover the most common method of commuting to work. Instead, they can choose a representative subset of data -- such as 1,000 or10,000 participants -- in the hopes that this number will be sufficient to produce accurate results.

Data sampling enables data scientists and researchers to extrapolate knowledge about the broader population from a smaller subset of data. By using a representative data sample, they can make predictions about the larger population with a certain level of confidence, without having to collect and analyze data from each member of the population.

After the data sample has been identified and collected, data scientists can use it to perform their intended analytics. For example, they might use the sample to perform predictive analytics for a retail business. From this data, they could identify patterns in customer behavior, conduct predictive modeling to create more effective sales strategies or uncover other useful information and patterns.

Types of data sampling methods

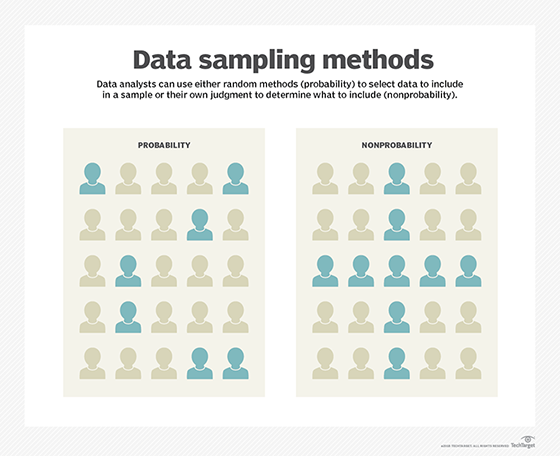

There are many different methods for drawing samples from data. The best choice depends on the data set and situation. Sampling methods are generally grouped into two broad categories: probability sampling and non-probability sampling.

Probability data sampling

Probability sampling uses random numbers that correspond to points in the data set. This approach avoids correlations between the data points chosen for the sample. It also ensures that every element within the population has an equal chance of being selected. The following methods are those most commonly used for probability sampling:

- Simple random sampling. Software is used to randomly select subjects from the whole population.

- Stratified sampling. Analysts create subsets within the population based on a common factor and then collect random samples from each subgroup.

- Cluster sampling. Analysts divide the population into subsets (clusters), based on a defined factor. Then, they analyze a random sampling of clusters.

- Multistage sampling. This approach is a more complicated form of cluster sampling. It divides the larger population into multiple clusters and then breaks those clusters into second-stage clusters, based on a secondary factor. The secondary clusters are then sampled and analyzed. This staging could continue as multiple subsets are identified, clustered and analyzed.

- Systematic sampling. A sample is created by setting an interval at which to extract data from the larger population. For example, an analyst might select every 10th row in a spreadsheet of 2,000 items to create a sample size of 200 rows to analyze.

Non-probability data sampling

In non-probability sampling, selection of the data sample is based on the analyst's best judgment in the given situation. Because data selection is subjective, the sample might not be as representative of the population as probability sampling, but it can be more expedient than probability sampling. The following methods are commonly used for non-probability data sampling:

- Convenience sampling. Data is collected from an easily accessible and available group.

- Consecutive sampling. Data is collected from every subject that meets the criteria until the predetermined sample size is met.

- Purposive or judgmental sampling. The researcher selects the sample data based on predefined criteria.

- Quota sampling. The researcher ensures equal representation within the sample for all subgroups in the data set or population.

Once generated, a sample can be used for predictive analytics. For example, a retail business might use data sampling to uncover patterns in customer behavior and predictive modeling to create more effective sales strategies.

Advantages of data sampling

Data sampling is an effective strategy for analyzing data when working with large data populations. Through the use of representative samples, analysts can realize a number of important benefits:

- Time savings. Sampling can be useful with data sets that are too large to efficiently analyze in full, such as those used in big data analytics or generated by large, comprehensive surveys. Identifying and analyzing a representative sample is more efficient and less time-consuming than working with the entire population.

- Cost savings. Data sampling is often more cost-effective than collecting and processing data from the entire population because it requires less time and fewer resources.

- Accuracy. Correct sampling techniques can produce reliable findings. Researchers can accurately interpret information about the total population by selecting a representative sample.

- Flexibility. Data sampling provides researchers with the flexibility to choose from a variety of sampling methods and sample sizes to best address their research questions, yet work within the limitations of their resources.

- Bias elimination. Sampling can help to eliminate bias in data analysis. For example, a well-designed sample can limit the influence of outliers, errors and other kinds of bias that could skew the results of the analysis.

An important consideration in data sampling is the sample's size. In some cases, a small sample is enough to reveal the most important information about the full population. In many cases, however, a larger sample increases the likelihood of accurately representing the population, even though the increased size makes it more difficult to manipulate and process the data.

Challenges of data sampling

For sampling to be effective, the selected subset of data must be representational of the larger population. However, analysists face several important challenges when trying to ensure that the sample is indeed representational:

- Risk of bias. One of the main challenges with data sampling is the possibility of introducing bias into the sample. If biases are introduced when collecting the sample, the results could be incorrect or misleading.

- Determining the sample size. With data sampling, determining an appropriate sample size can sometimes be difficult. If the sample size is too small, the data is easier to work with, but the results might not be accurate since the sample is not fully representative of the population.

- Sampling error. A data sample might be subject to a sampling error, which is a discrepancy between the sample and the population. An error might be the result of chance, bias or other factors. Such errors can affect the accuracy of the data analytics.

- Sampling method. The choice of a sampling method often depends on the research question and population being studied. However, selecting the appropriate sampling technique can be difficult, as different techniques are better suited for different research questions and populations.

Data sampling process

The process of data sampling typically involves the following steps:

- Define the population. The population is the entire set of data from which the sample is drawn. To ensure that the sample is properly representative, the target population must be precisely defined, including all essential traits and criteria.

- Select a sampling technique. Analysts should choose the best sampling method for the research question and the population's characteristics. Multiple methods are available for drawing samples from data, such as simple random sampling, cluster sampling, stratified sampling and systematic sampling.

- Determine the sample size. Analysists should determine the optimum sample size required to produce accurate and reliable results. This decision can be influenced by factors such as money, time constraints or the need for greater precision. The sample size should be large enough to be representative of the population, but not so large that it becomes impractical to work with.

- Collect the data. The data is collected for the sample using the chosen sampling approach, such as interviews, surveys or observations. This might entail random selection or other stated criteria, depending on the research question. For example, in random sampling, data points are selected at random from the population.

- Analyze the sample data. After the data sample has been collected, it is processed and analyzed. From these results, analysts can draw conclusions about the data, which are then generalized or applied to the entire population.

Common data sampling errors

A sampling error is a difference between the sampled value and the true population value. Sampling errors can occur during data collection if the sample is not representative of the population or is biased in some way.

Because a sample is merely an approximation of the population from which it is collected, even randomized samples might contain errors such as the following:

- Sampling error. Sampling bias arises when the sample is not representative of the population. This can occur when the sampling method is incorrect or when there is a systemic inaccuracy in the sampling process. Errors may develop as a result of a large variance in a specific metric across a specified date range. Alternatively, errors could occur due to a generally low volume of a given measure in relation to entire population. For instance, if a website has a very low transaction count in comparison to overall visits, sampling could result in substantial disparities.

- Selection error. Selection bias arises when the sample is chosen in a way that favors a specific group or trait. For example, if a health study targets only people who are willing to participate, the sample might not be representative of the overall community.

- Non-response error. This bias happens when the people chosen for the sample do not participate in the survey or study. If this occurs, certain groups could be underrepresented, affecting the accuracy of the results.

Predictive analytics is being used by many organizations to forecast occurrences and improve the accuracy of data-driven choices. Examine the four popular simulation approaches used in data analytics.