When and how to use a FIFO queue in SQS

FIFO queues in Amazon SQS support Exactly-Once Processing and keep tasks in order. But they can create bottlenecks if used with the wrong workloads.

Software developers routinely use queues to manage and process tasks in an orderly fashion. And while first-in, first-out queues are a popular way to process items in sequential order, they suffer from scalability issues.

A first-in, first-out (FIFO) queue is ideal for tasks such as copying database updates or performing transactional updates. But because of scalability issues, IT teams should avoid a FIFO queue unless an application absolutely needs it. For example, if an application pushes items A, B and then C to a FIFO queue, item A needs to finish before item B or item C can process. With standard SQS queues, all three items can process at once.

A FIFO queue on Amazon Simple Queue Service (SQS) supports Exactly-Once Processing, while standard queues support only At-Least-Once processing. Standard SQS queues operate with eventual consistency, meaning more than one process can receive the same message. Therefore, individual applications need to assume any message could be received multiple times and never assume that this is the first -- or only -- time the message has been received.

When a message is received multiple times, applications can fail or end up with error conditions, such as charging an account twice. In some cases, IT teams can use an external locking mechanism, such as DynamoDB with conditional writes. For example, if the team sends an SQS message to charge a customer's account, it can include a transaction ID along with the message and then write that ID to a DynamoDB table on the condition that the transaction ID does not already exist (an Expected Value of Does not exist, or an attribute_not_exists condition expression). If the transaction ID has processed already, this write fails with a ConditionalCheckFailedException, and the application can handle that error and skip the operation.
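The conditional write described above might look roughly like the following sketch. It assumes a boto3 DynamoDB client is passed in and that the table uses a string partition key named `transaction_id`; the function name, table name and key name are illustrative assumptions, not part of any particular application.

```python
def claim_transaction(dynamodb, table_name, transaction_id):
    """Return True if this call is the first to record transaction_id.

    `dynamodb` is expected to behave like boto3.client("dynamodb").
    The key name "transaction_id" is an assumption for this sketch.
    """
    try:
        dynamodb.put_item(
            TableName=table_name,
            Item={"transaction_id": {"S": transaction_id}},
            # The write succeeds only if no item with this key exists yet.
            ConditionExpression="attribute_not_exists(transaction_id)",
        )
        return True
    except Exception as err:
        # boto3 client errors carry the service error code on err.response.
        code = getattr(err, "response", {}).get("Error", {}).get("Code")
        if code == "ConditionalCheckFailedException":
            return False  # already recorded: skip the charge
        raise  # any other failure is a real error
```

A worker would call `claim_transaction(boto3.client("dynamodb"), "transactions", tx_id)` before charging the account and perform the charge only when it returns `True`.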

When and how to use a FIFO queue

Often, having transactions process in sequential order is more important than having them complete quickly. A FIFO queue works for transactional database updates, purchases or any other synchronous tasks. It's also ideal when charging a user's account, which may not require a specific order of processing, but must not have duplicate events or extra charges.

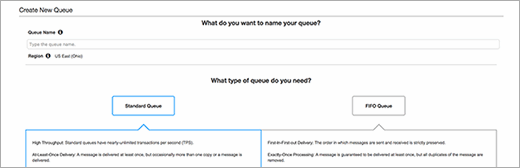

Creating a new SQS queue in the AWS Management Console requires developers to choose between a standard queue and FIFO queue -- they can't convert existing SQS queues to FIFO queues. FIFO queues must include .fifo at the end of the name, as shown in Figure 1.
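The same choice applies when creating the queue through the API rather than the console. The following is a minimal sketch, assuming a boto3 SQS client is passed in; the helper names are hypothetical, and enabling content-based deduplication is an illustrative choice rather than a requirement.

```python
def fifo_queue_params(base_name, content_based_dedup=True):
    """Build create_queue arguments for a FIFO queue (hypothetical helper)."""
    # FIFO queue names must end with the .fifo suffix.
    name = base_name if base_name.endswith(".fifo") else base_name + ".fifo"
    return {
        "QueueName": name,
        "Attributes": {
            # SQS attribute values are passed as strings.
            "FifoQueue": "true",
            "ContentBasedDeduplication": "true" if content_based_dedup else "false",
        },
    }


def create_fifo_queue(sqs, base_name):
    """Create the queue and return its URL; `sqs` is a boto3 SQS client."""
    response = sqs.create_queue(**fifo_queue_params(base_name))
    return response["QueueUrl"]
```

For example, `create_fifo_queue(boto3.client("sqs"), "purchases")` would request a queue named `purchases.fifo`.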

At the time of writing, FIFO SQS queues aren't available in all regions; one that lacks them is us-east-1, which was the first -- and default -- region for many developers. When creating an SQS queue, if the FIFO option doesn't show up, switch to a different region.

FIFO queues operate as a single pipe, but they support message groups, set through the MessageGroupId parameter in the SQS API, so that multiple messages can process at once. Essentially, each group is a separate queue of messages that process in sequence. Use the parameter to specify a unique ID for each group of messages that must process in order.

For example, to reduce a user's account balance and return content, set the user ID as the MessageGroupId to ensure each action is performed in sequence for that user. This way, if the user makes several purchases, actions are delivered in order. If the account balance falls below zero, you can pause the queue until the user adds funds and then continue processing requests after the account balance is replenished.
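As a rough illustration of the per-user grouping described above, the sketch below sends a purchase message with the user ID as the MessageGroupId. It assumes a boto3 SQS client is passed in; the function name, the message fields and the use of a purchase ID as the MessageDeduplicationId are assumptions made for the example.

```python
import json


def send_purchase(sqs, queue_url, user_id, purchase_id, amount_cents):
    """Queue one purchase so it is ordered with the same user's other purchases."""
    body = json.dumps(
        {"user_id": user_id, "purchase_id": purchase_id, "amount_cents": amount_cents}
    )
    return sqs.send_message(
        QueueUrl=queue_url,
        MessageBody=body,
        # All messages for one user share a group and are delivered in order.
        MessageGroupId=str(user_id),
        # Resending the same purchase ID within the deduplication window is ignored.
        MessageDeduplicationId=str(purchase_id),
    )
```

Messages for different users land in different groups, so one user's backlog does not hold up another's.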

Redrive policies with a FIFO queue

Just like standard queues, FIFO queues can include redrive policies, which enable developers to set it so the system only processes a message a certain number of times before it gives up. Because FIFO queues provide Exactly-Once delivery, the receive count is exact, and the redrive policy takes effect after the configured number of attempts rather than an estimated number. Standard SQS queues can deliver the same message multiple times, so a redrive policy can only estimate how many times a message will be retried before going to the Dead Letter Queue.

Messages can be hidden for a period of time and retried, but a consumer must delete a message from the queue before the next one in its group is delivered. A redrive policy prevents backlog in a group by automatically moving messages that cannot process into another queue for inspection or reprocessing.
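The receive, process and delete cycle described above could be sketched as follows. It assumes a boto3 SQS client and a caller-supplied `handler` function; the function name, the return values and the timeout defaults are illustrative assumptions.

```python
def process_next(sqs, queue_url, handler, visibility_timeout=30):
    """Receive one message, run handler on its body, delete it on success.

    Returns None if the queue was empty, True if the message was handled
    and deleted, False if the handler failed and the message was left alone.
    """
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=1,
        WaitTimeSeconds=10,  # long polling
        VisibilityTimeout=visibility_timeout,  # hidden from others while we work
    )
    messages = response.get("Messages", [])
    if not messages:
        return None
    message = messages[0]
    try:
        handler(message["Body"])
    except Exception:
        # Not deleted: the message reappears after the visibility timeout
        # and counts as another receive toward the redrive policy.
        return False
    sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
    return True
```

Leaving a failed message undeleted is what lets the redrive policy count the attempt; until the message is deleted or moved, later messages in the same group stay blocked.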

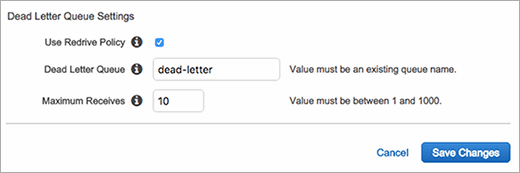

To configure the redrive policy after a queue has been set up, first create a second queue to use as the Dead Letter Queue. For a FIFO queue, the Dead Letter Queue must also be a FIFO queue. Then, in the console, under Queue Actions, choose Configure Queue.

Enable the redrive policy and then enter the name of the Dead Letter Queue. Configure the maximum number of times a message should be tried before being moved to the Dead Letter Queue.
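The same configuration can be applied through the API, where the Dead Letter Queue is identified by its ARN rather than its name. The sketch below assumes a boto3 SQS client is passed in; the helper names and the default of five receives are assumptions for illustration.

```python
import json


def build_redrive_attributes(dead_letter_queue_arn, max_receive_count):
    """Build the queue attributes that enable a redrive policy."""
    if max_receive_count < 1:
        raise ValueError("max_receive_count must be at least 1")
    policy = {
        "deadLetterTargetArn": dead_letter_queue_arn,
        "maxReceiveCount": str(max_receive_count),
    }
    # SQS expects the policy as a JSON string inside the attribute map.
    return {"RedrivePolicy": json.dumps(policy)}


def attach_dead_letter_queue(sqs, source_queue_url, dead_letter_queue_url,
                             max_receive_count=5):
    """Point source_queue_url at the given Dead Letter Queue; returns its ARN."""
    arn = sqs.get_queue_attributes(
        QueueUrl=dead_letter_queue_url, AttributeNames=["QueueArn"]
    )["Attributes"]["QueueArn"]
    sqs.set_queue_attributes(
        QueueUrl=source_queue_url,
        Attributes=build_redrive_attributes(arn, max_receive_count),
    )
    return arn
```

With this in place, a message that has been received `max_receive_count` times without being deleted is moved to the Dead Letter Queue.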

If worker processes fail to handle a message within the maximum number of receives, the message automatically moves into the new Dead Letter Queue. Another worker process should pick up that message and attempt a different type of action, such as alerting a developer to the error or retrying several hours later using a different method.

It's especially important to use Dead Letter Queues with FIFO queues because messages could otherwise block future events from processing. Sometimes this can be a good thing, but in many cases, it has negative consequences.

FIFO queues are a nice addition to the SQS lineup, but they're not ideal for all projects. They introduce a single point of failure and potential bottlenecks. Developers can give every message its own unique MessageGroupId to avoid the requirement to process tasks in exact order, but a FIFO queue still has a 300-messages-per-second processing limit. Overall, though, FIFO queues are a better option than having to manually check the order and status of a standard queue each time a new message arrives.