
DynamoDB Streams keep database tables in sync

Developers who want to deploy localized apps in different regions should know about database synchronization challenges. DynamoDB Streams and Lambda can ensure tables are updated.

Amazon DynamoDB is a nonrelational, schemaless database that AWS manages automatically and that can scale to very large workloads. It is an easy-to-use key-value store, and developers can provision it to handle an application's expected throughput. The service is fast and returns most queries in under 10 milliseconds, but only when it is accessed from within the same region. A developer who wants to deploy localized versions of an application in different regions must find a way to synchronize frequently accessed DynamoDB tables across regions. AWS Lambda and DynamoDB Streams can help with this process.

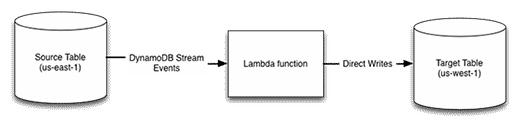

DynamoDB Streams allow developers to trigger events when changes are made to a DynamoDB table, including any form of write operation. Each stream event can trigger a Lambda function, which runs shortly after the write. Because each event describes a change to a single table, it's relatively simple to replay that change on another table in a separate region.
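To make the trigger more concrete, the sketch below shows roughly what a stream event looks like when it reaches a Lambda function, along with a small helper that pulls out the fields a sync function typically needs. The table name, account ID and attribute values are made up for illustration, and `summarizeRecords` is a hypothetical helper rather than part of any AWS library.

```javascript
// Illustrative stream ARN; the account ID, table name and timestamp are made up.
const streamArn =
  'arn:aws:dynamodb:us-east-1:123456789012:table/Stories/stream/2016-01-01T00:00:00.000';

// Illustrative event in the shape Lambda receives from DynamoDB Streams.
const sampleEvent = {
  Records: [
    {
      eventName: 'INSERT', // one of INSERT, MODIFY or REMOVE
      eventSourceARN: streamArn,
      dynamodb: {
        Keys: { id: { S: 'story-1' } },
        NewImage: { id: { S: 'story-1' }, title: { S: 'Hello' } },
        SequenceNumber: '111',
      },
    },
    {
      eventName: 'REMOVE', // deletions carry keys but no NewImage
      eventSourceARN: streamArn,
      dynamodb: { Keys: { id: { S: 'story-2' } }, SequenceNumber: '222' },
    },
  ],
};

// Hypothetical helper: reduce each record to the action, table name and keys.
function summarizeRecords(event) {
  return (event.Records || []).map(function (record) {
    return {
      action: record.eventName,
      table: record.eventSourceARN.split('/')[1],
      keys: record.dynamodb.Keys,
    };
  });
}

console.log(JSON.stringify(summarizeRecords(sampleEvent), null, 2));
```

Note that the item data sits under the `dynamodb` property of each record, and that the new image is only included when the stream is configured to capture it.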

Setting up a one-way mirror

A one-way mirror is a copy of a data set that improves availability and redundancy. When setting up a localized application, developers mirror one database table to another to help keep the app functioning at a high level. To set up a one-way mirror from one DynamoDB table to another, developers need to create a Lambda function with access to write to a DynamoDB table in another region.

Once there is a DynamoDB table in the target region, the developer creates a simple Lambda function that copies events from the source table's DynamoDB Stream to the target DynamoDB table. This creates a one-way mirror: it writes from the Source table to the Target table, but not vice versa. Because of this, developers can think of the Target table as a read-only copy. Writes can technically be performed on the Target table, but they will not be copied back to the Source table.

First, developers need to create a new AWS Identity and Access Management (IAM) role for the custom Lambda function. Attach the AWSLambdaBasicExecutionRole policy to the role to give it basic Lambda access to CloudWatch Logs. Next, add read access for Source tables and write access for Target tables. The simplest, though broadest, option is to give the function full access to all DynamoDB tables, which allows it to copy to and from tables as needed.
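If full access to every table is broader than desired, a narrower policy might look roughly like the sketch below. It is written as a small JavaScript helper so the ARNs can be filled in; `buildSyncPolicy` and the example ARNs are hypothetical, and the action list is an assumption about the minimum a copy function needs (reading the stream, then putting and deleting items on the Target table).

```javascript
// Hypothetical helper: build an IAM policy document scoped to one Source
// stream and one Target table. The ARNs passed in are placeholders.
function buildSyncPolicy(sourceStreamArn, targetTableArn) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        // Read change records from the Source table's stream
        Effect: 'Allow',
        Action: [
          'dynamodb:DescribeStream',
          'dynamodb:GetRecords',
          'dynamodb:GetShardIterator',
        ],
        Resource: sourceStreamArn,
      },
      {
        // Listing streams is not tied to a single stream
        Effect: 'Allow',
        Action: ['dynamodb:ListStreams'],
        Resource: '*',
      },
      {
        // Write copied changes to the Target table
        Effect: 'Allow',
        Action: ['dynamodb:PutItem', 'dynamodb:DeleteItem'],
        Resource: targetTableArn,
      },
    ],
  };
}

// Example with made-up ARNs
const policy = buildSyncPolicy(
  'arn:aws:dynamodb:us-east-1:123456789012:table/Stories/stream/2016-01-01T00:00:00.000',
  'arn:aws:dynamodb:eu-west-1:123456789012:table/Stories'
);
console.log(JSON.stringify(policy, null, 2));
```

The printed JSON can be pasted into the IAM console as an inline policy on the role, alongside AWSLambdaBasicExecutionRole.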

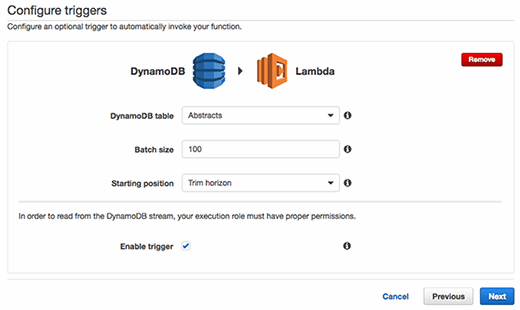

When creating the Lambda function, skip the blueprint setup, but add a DynamoDB trigger and configure it for the Source table, choosing the Trim Horizon starting position. With this setting, the function starts with the oldest record still in the stream and receives events in the order in which they occurred.
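The same trigger can also be created programmatically instead of through the console. The sketch below only builds the parameter object; it assumes the AWS SDK for JavaScript (v2), where such an object would be passed to the Lambda client's `createEventSourceMapping` call. `buildTriggerParams`, the function name and the batch size are illustrative choices, not values from this article.

```javascript
// Hypothetical helper: parameters for attaching a DynamoDB Stream to a
// Lambda function as an event source.
function buildTriggerParams(streamArn, functionName) {
  return {
    EventSourceArn: streamArn,
    FunctionName: functionName,
    // TRIM_HORIZON starts at the oldest record still in the stream;
    // LATEST would start with records written after the trigger is created.
    StartingPosition: 'TRIM_HORIZON',
    BatchSize: 100, // arbitrary example value
  };
}

const triggerParams = buildTriggerParams(
  'arn:aws:dynamodb:us-east-1:123456789012:table/Stories/stream/2016-01-01T00:00:00.000',
  'sync-stories' // made-up function name
);
console.log(triggerParams);

// With the SDK installed, this would be used roughly as:
//   new AWS.Lambda({ region: 'us-east-1' })
//     .createEventSourceMapping(triggerParams, callback);
```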

On the next page, use an existing IAM role, and choose the role set up in the first step. The following code may be used to set up a simple copy operation, replacing INSERT_REGION with the region of the Target table.

    var AWS = require('aws-sdk');

    // Client for the region that holds the Target table
    var dynamodb = new AWS.DynamoDB({ region: 'INSERT_REGION' });

    /**
     * Handle INSERT and MODIFY event types
     */
    function put(record, tableName){
      dynamodb.putItem({
        TableName: tableName,
        // NewImage is only present if the stream view type includes new images
        Item: record.dynamodb.NewImage,
      }, function putRecordCallback(err){
        if(err){
          console.error('ERROR Saving record', err);
        }
      });
    }

    /**
     * Handle a REMOVE event type
     */
    function remove(record, tableName){
      dynamodb.deleteItem({
        TableName: tableName,
        Key: record.dynamodb.Keys,
      }, function deleteRecordCallback(err){
        if(err){
          console.error('ERROR Deleting record', err);
        }
      });
    }

    exports.handler = function handler(event, context, callback){
      if(event && event.Records){
        event.Records.forEach(function(record){
          // Parse the table name out of the event source ARN
          // (the Target table is assumed to share the Source table's name)
          var tableName = record.eventSourceARN.split('/')[1];
          if(record.eventName === 'MODIFY' || record.eventName === 'INSERT'){
            put(record, tableName);
          } else if(record.eventName === 'REMOVE'){
            // DynamoDB Streams reports deletions as REMOVE events
            remove(record, tableName);
          } else {
            console.error('Ignoring Unknown Event Type:', record.eventName);
          }
        });
        callback(null, 'Processing Events');
      } else {
        console.error('ERROR', JSON.stringify(event));
        callback('Malformed event');
      }
    };

Setting up a two-way mirror

One-way mirroring only works for tables whose write operations are constrained to a single region. This works well for a table such as Stories, but not for a table such as Sessions or other write-heavy data that is local to users. Anything that the web application itself writes frequently, rather than only a back-end component, may benefit from two-way mirroring.

Two-way mirroring is prone to race conditions. For example, if an account is edited in two different regions at roughly the same time, which write should win? For that reason, only use two-way mirroring if a particular item will be actively edited in just one region at a time. Otherwise, if strong consistency is a requirement, it's best to use one-way mirroring.

In two-way mirroring, each Target table also has a stream event set up to mirror change events back to the Source table. But it's important to note that only events from outside of the original sync function can be sent. So, in this case, each Item must have a version number; only events that increment the version number are saved between tables. Developers can use something such as the following to prevent an endless loop between the sync functions:

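    dynamodb.getItem({
      TableName: tableName,
      Key: record.dynamodb.Keys,
    }, function checkVersionNumber(err, resp){
      if(err){
        console.error('ERROR Reading record', err);
        return;
      }
      var incoming = record.dynamodb.NewImage;
      // Only copy the change if it carries a newer version than the stored item
      if(!resp.Item ||
        parseInt(incoming.version.N, 10) > parseInt(resp.Item.version.N, 10)){
        // Do processing
      }
    });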
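The read-then-write check above leaves a small window in which another write can land between the read and the write. One possible alternative, sketched here as an assumption rather than as the article's method, is to let DynamoDB enforce the version check itself with a condition expression on the write. `buildVersionedPut` is a hypothetical helper, and it assumes each item carries a numeric `version` attribute as described above.

```javascript
// Hypothetical helper: build putItem parameters that only succeed when the
// item does not exist yet or the incoming version is newer than the stored one.
function buildVersionedPut(tableName, newImage) {
  return {
    TableName: tableName,
    Item: newImage,
    ConditionExpression: 'attribute_not_exists(#v) OR #v < :incoming',
    // Alias the attribute name so it cannot clash with an expression keyword
    ExpressionAttributeNames: { '#v': 'version' },
    ExpressionAttributeValues: { ':incoming': { N: newImage.version.N } },
  };
}

// Example with a made-up item
const versionedPut = buildVersionedPut('Sessions', {
  id: { S: 'session-1' },
  version: { N: '7' },
});
console.log(JSON.stringify(versionedPut, null, 2));

// Passed to dynamodb.putItem, a write that does not raise the version fails
// with a ConditionalCheckFailedException, which the sync function can treat
// as "already mirrored" and ignore.
```

Because a mirrored write arrives with the same version number it left with, the condition fails on the return trip and the loop stops there.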

Additionally, every save operation within the application must increment the item's version number, using the ADD action in a DynamoDB update expression.
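As a rough illustration of such a save, the sketch below builds parameters for an update that changes one attribute and bumps the version in the same request. `buildTitleUpdate` and the `title` attribute are hypothetical; only the `version` attribute comes from the approach described above.

```javascript
// Hypothetical helper: parameters for an update that sets a new title and
// atomically increments the item's version number by one.
function buildTitleUpdate(tableName, key, newTitle) {
  return {
    TableName: tableName,
    Key: key,
    // ADD increments a number attribute in place; if the attribute does not
    // exist yet, DynamoDB treats it as 0 before adding.
    UpdateExpression: 'SET #title = :title ADD #v :one',
    ExpressionAttributeNames: { '#title': 'title', '#v': 'version' },
    ExpressionAttributeValues: {
      ':title': { S: newTitle },
      ':one': { N: '1' },
    },
    ReturnValues: 'UPDATED_NEW',
  };
}

const titleUpdate = buildTitleUpdate('Stories', { id: { S: 'story-1' } }, 'New title');
console.log(JSON.stringify(titleUpdate, null, 2));

// With a DynamoDB client, this would be used as:
//   dynamodb.updateItem(titleUpdate, callback);
```

Incrementing on the server side avoids a separate read of the current version before each save.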

Use Route 53 Latency or Geolocation routing

To route users to their closest endpoint, developers should set up Amazon Route 53. This service allows developers to direct end users to the application stack nearest to them. This can be done with Route 53's latency-based routing, which sends each user to the region that responds fastest, or with its geolocation-based routing, which redirects traffic to certain endpoints based on the end user's physical location.
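A latency-based setup needs one record per region, all sharing the same name. The sketch below builds the request for two regions in the shape expected by the Route 53 `changeResourceRecordSets` call in the AWS SDK for JavaScript (v2). The helper names, domain, hosted zone ID and endpoint host names are all placeholders.

```javascript
// Hypothetical helper: one latency-routed CNAME record for a given region.
function buildLatencyRecord(domainName, region, endpointDns) {
  return {
    Action: 'UPSERT',
    ResourceRecordSet: {
      Name: domainName,
      Type: 'CNAME',
      TTL: 60,
      // SetIdentifier distinguishes records that share a name and type
      SetIdentifier: 'app-' + region,
      // Setting Region is what makes this a latency-based record
      Region: region,
      ResourceRecords: [{ Value: endpointDns }],
    },
  };
}

// Hypothetical helper: wrap the changes in a request for one hosted zone.
function buildChangeRequest(hostedZoneId, changes) {
  return { HostedZoneId: hostedZoneId, ChangeBatch: { Changes: changes } };
}

const routingRequest = buildChangeRequest('ZONE_ID_PLACEHOLDER', [
  buildLatencyRecord('app.example.com', 'us-east-1', 'us.app.example.com'),
  buildLatencyRecord('app.example.com', 'eu-west-1', 'eu.app.example.com'),
]);
console.log(JSON.stringify(routingRequest, null, 2));

// With the SDK installed, this would be used roughly as:
//   new AWS.Route53().changeResourceRecordSets(routingRequest, callback);
```

For geolocation routing, the `Region` property would be replaced by a `GeoLocation` property, for example `{ ContinentCode: 'EU' }`.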

Further improvements

The functions shown here do not properly handle DynamoDB errors. Developers can handle these errors with Promises, which the Node.js 4.3 runtime supports; at the time of writing, that is the latest Node.js version available on AWS Lambda.

If multiple events occur on the same item within a short period of time, a race condition may occur in which a later event is applied before an earlier one finishes processing. Developers can use the SequenceNumber available in each record to handle this issue: only apply an update to the Target table if its SequenceNumber is greater than the last one processed for that item. The last-processed value can be stored on the item itself or in a separate table. With two-way mirroring, it must be stored in a separate table to prevent a loop between updates in one table and the other.
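Both improvements can be combined in a small sketch. It is not a drop-in replacement for the functions above: `processRecords` and `isNewerSequence` are hypothetical helpers, `client` stands for anything shaped like an AWS SDK v2 DynamoDB client (methods that return an object with `.promise()`), and the last-seen sequence numbers are kept in a plain in-memory object purely for illustration. The comparison assumes sequence numbers are digit strings without leading zeros.

```javascript
// Sequence numbers can be too long for a JavaScript number, so compare them
// as strings: a longer string is larger, equal lengths compare by character.
function isNewerSequence(incoming, lastSeen) {
  if (!lastSeen) return true;
  if (incoming.length !== lastSeen.length) {
    return incoming.length > lastSeen.length;
  }
  return incoming > lastSeen;
}

// Apply records one at a time, skipping any that are older than the last
// sequence number already processed for the same item.
function processRecords(records, client, tableName, lastSeen) {
  return records.reduce(function (chain, record) {
    return chain.then(function () {
      const itemKey = JSON.stringify(record.dynamodb.Keys);
      const sequence = record.dynamodb.SequenceNumber;
      if (!isNewerSequence(sequence, lastSeen[itemKey])) {
        return undefined; // stale event, nothing to do
      }
      const request = record.eventName === 'REMOVE'
        ? client.deleteItem({ TableName: tableName, Key: record.dynamodb.Keys })
        : client.putItem({ TableName: tableName, Item: record.dynamodb.NewImage });
      // A rejected promise stops the chain and surfaces the DynamoDB error
      return request.promise().then(function () {
        lastSeen[itemKey] = sequence;
      });
    });
  }, Promise.resolve());
}
```

Inside a handler, the returned promise could be finished with `.then(function(){ callback(null, 'Processed'); }).catch(callback)`, so that a failed write is reported to Lambda instead of only being logged.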