Analyze Amazon S3 storage classes, from Standard to Glacier

Select the proper S3 class to improve your storage strategy. Learn the differences in cost and fit, such as which tiers offer frequent access and which are better for archiving.

Enterprises rely on cloud infrastructures for data backup, archiving and disaster preparedness. For instance, because AWS operates infrastructure and storage services that are highly distributed, flexible and secure, many organizations have become interested in AWS as an alternative to off-site tape storage for long-term data archival.

Like many Amazon services, S3 object storage comes in several variants with different performance characteristics and price points, including a new Glacier Deep Archive service that is the closest analog to off-site tape storage.

When AWS users consider this array of storage options, it can be difficult to choose the best tool for the job. Our goal in this piece is to define the various AWS object storage services and focus on fits for low-cost, long-term archival. We'll look at their capabilities and limitations, the type of data and applications for which each is suited and the tradeoffs of using AWS for long-term storage versus traditional alternatives.

AWS object storage portfolio

AWS offers six object storage classes in its S3 portfolio. Before users choose one, they need to consider these six data storage parameters:

- durability and redundancy, which depend on whether data is stored in one or multiple AWS regions and availability zones (AZs);

- availability and uptime;

- performance, as defined by latency to the first byte of retrieved data;

- duration or holding time;

- access frequency; and

- capacity.

S3 service costs are determined by how these parameters are defined across the various tiers. For example, more redundancy, lower access latency or higher uptime can translate to a higher price.

Below are the six Amazon S3 storage classes, listed in descending order of cost and access frequency, along with their notable characteristics:

- Standard: Standard S3 is a general-purpose object storage platform designed for application data that must be instantly and constantly available.

- Intelligent-Tiering: Many applications have large data sets with a range of access patterns. These patterns depend on factors such as the data type, seasonal changes and internal business needs. Intelligent-Tiering automatically identifies and moves infrequently accessed data -- data that has not been accessed for 30 days -- to lower-cost infrastructure. When an object in the infrequent tier is accessed, it is automatically moved back to the higher-performance tier and the 30-day clock restarts.

- Standard Infrequent Access (IA): Some data is seldom accessed but requires fast performance when users need it. The Standard-IA tier targets this scenario and delivers performance similar to Standard S3 but with lower availability.

- One Zone-IA: Unlike Standard-IA, this tier stores data in a single AZ instead of automatically spreading it across at least three AZs. However, both IA tiers provide the same millisecond latency to data as Standard S3.

- Glacier: Although it uses object storage, Glacier is a different animal than the other S3 versions in that it is squarely designed for data archiving. AWS has never revealed Glacier's underlying technology. Whether Glacier uses low-performance hard disk drives, tape, optical or something else, its performance and availability parameters are similar to enterprise tape libraries. However, unlike a tape library, Glacier users can specify a maximum time for data retrieval that can range from a few minutes to hours.

- Glacier Deep Archive: Deep Archive is designed for long-term archival -- think years -- of data that is rarely accessed and can tolerate slow retrieval, with data available within 12 hours.
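To make the Glacier retrieval tradeoff concrete, here is a minimal Python sketch of a restore request. The `Expedited`, `Standard` and `Bulk` tier names and the `restore_object` call come from the S3 API as exposed by boto3. The helper names, the bucket and key arguments and the seven-day window are assumptions made for illustration. The network call is defined but never run, so the sketch works without boto3 or AWS credentials.

```python
# Retrieval tiers S3 accepts for archived objects; faster tiers cost more.
# Note: Expedited is not offered for Deep Archive objects.
RETRIEVAL_TIERS = ("Expedited", "Standard", "Bulk")


def build_restore_request(days_available: int, tier: str = "Standard") -> dict:
    """Build the RestoreRequest payload for a temporary restore (hypothetical helper)."""
    if tier not in RETRIEVAL_TIERS:
        raise ValueError(f"unknown retrieval tier: {tier!r}")
    if days_available < 1:
        raise ValueError("days_available must be at least 1")
    return {
        "Days": days_available,  # how long the restored copy stays readable
        "GlacierJobParameters": {"Tier": tier},
    }


def restore_archived_object(bucket: str, key: str, request: dict) -> None:
    """Submit the restore; needs boto3 and AWS credentials, so it is not called here."""
    import boto3  # imported lazily so the rest of the sketch runs without it

    boto3.client("s3").restore_object(Bucket=bucket, Key=key, RestoreRequest=request)


# Cheapest, slowest option: a Bulk restore kept available for seven days.
request = build_restore_request(days_available=7, tier="Bulk")
print(request)
```

Choosing `Bulk` over `Expedited` is the price-versus-restoration-time tradeoff in practice: the request is identical apart from the tier name.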

Performance and price across the S3 storage classes

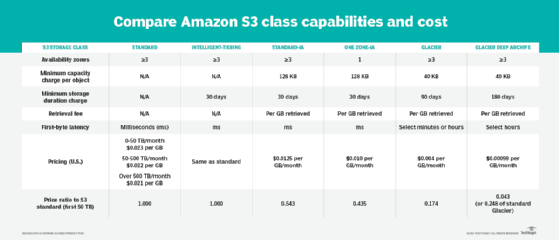

The following table from the AWS documentation summarizes the performance, reliability, availability and pricing of each Amazon S3 storage class.

Each of these object storage classes is designed for the same durability of 99.999999999% -- 11 nines -- and supports lifecycle transitions. This level of durability is particularly notable because it translates to an expected loss of one object every 100 years when storing a billion objects, or one object every 10,000 years out of 10 million objects. Availability varies more: Standard, Glacier and Glacier Deep Archive are designed for 99.99% availability, Intelligent-Tiering and Standard-IA for 99.9%, and One Zone-IA for 99.5%.
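The durability figures follow from simple arithmetic, which this short Python sketch reproduces. It assumes the 11-nines figure is an annual, per-object probability and that losses are independent. The function name is ours, not AWS's.

```python
from fractions import Fraction

# 11 nines of annual durability, kept as an exact fraction to avoid float rounding.
ANNUAL_DURABILITY = Fraction(99_999_999_999, 100_000_000_000)
ANNUAL_LOSS_RATE = 1 - ANNUAL_DURABILITY  # 1 in 100 billion objects per year


def years_per_lost_object(object_count: int) -> Fraction:
    """Average number of years between single-object losses for a given object count."""
    expected_losses_per_year = object_count * ANNUAL_LOSS_RATE
    return 1 / expected_losses_per_year


print(years_per_lost_object(10_000_000))     # prints 10000
print(years_per_lost_object(1_000_000_000))  # prints 100
```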

Usage tips

Most AWS users rely on multiple S3 tiers. Organizations that want to balance usage between the various Amazon S3 storage classes can employ lifecycle policies that automatically move data to lower-cost tiers based on factors like object age, last access time, storage bucket, object type, tag or prefix.
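As an illustration, a lifecycle policy that ages objects through progressively cheaper tiers might look like the Python sketch below. The dictionary follows the structure that boto3's `put_bucket_lifecycle_configuration` accepts. The rule ID, the `logs/` prefix and the 30-, 90- and 365-day thresholds are assumptions chosen for the example, not recommendations. The function that talks to AWS is defined but not called, so the sketch runs without boto3 or credentials.

```python
def build_lifecycle_configuration(prefix: str) -> dict:
    """Build a one-rule lifecycle policy keyed on object age and key prefix."""
    return {
        "Rules": [
            {
                "ID": "archive-aging-objects",  # hypothetical rule name
                "Filter": {"Prefix": prefix},   # rule applies only to keys under this prefix
                "Status": "Enabled",
                "Transitions": [
                    # Days are counted from object creation.
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    {"Days": 90, "StorageClass": "GLACIER"},
                    {"Days": 365, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }


def apply_lifecycle(bucket: str, configuration: dict) -> None:
    """Attach the policy to a bucket; needs boto3 and AWS credentials, so not called here."""
    import boto3  # imported lazily so the rest of the sketch runs without it

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=configuration
    )


configuration = build_lifecycle_configuration("logs/")
for transition in configuration["Rules"][0]["Transitions"]:
    print(f'after {transition["Days"]} days -> {transition["StorageClass"]}')
```

Once a policy like this is attached, S3 performs the transitions on its own; no application code has to move the objects.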

One Zone-IA, Glacier and Glacier Deep Archive are the most appropriate Amazon S3 storage classes for long-term archival. The Glacier tiers are the best for information that must be retained for years due to tax laws and regulatory guidelines. Glacier Deep Archive is about 95% cheaper than Standard S3, so the cost savings for large repositories can be significant.
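A rough calculation shows where a figure like 95% comes from. The per-gigabyte prices in this Python sketch are assumptions based on published list prices for one U.S. region, and they will drift over time and vary by region. The 100 TB archive size is arbitrary. The comparison covers storage charges only and ignores request, retrieval and minimum-storage-duration fees, which matter for archival tiers.

```python
# Assumed list prices in USD per GB-month; check current AWS pricing before relying on them.
STANDARD_USD_PER_GB_MONTH = 0.023
DEEP_ARCHIVE_USD_PER_GB_MONTH = 0.00099


def monthly_storage_cost(size_gb: float, usd_per_gb_month: float) -> float:
    """Storage-only monthly cost; excludes request and retrieval charges."""
    return size_gb * usd_per_gb_month


def percent_savings(baseline_cost: float, alternative_cost: float) -> float:
    """How much cheaper the alternative is, as a percentage of the baseline."""
    return (baseline_cost - alternative_cost) / baseline_cost * 100


archive_gb = 100 * 1024  # a hypothetical 100 TB archive
standard = monthly_storage_cost(archive_gb, STANDARD_USD_PER_GB_MONTH)
deep_archive = monthly_storage_cost(archive_gb, DEEP_ARCHIVE_USD_PER_GB_MONTH)
savings = percent_savings(standard, deep_archive)

print(f"Standard:     ${standard:,.2f} per month")
print(f"Deep Archive: ${deep_archive:,.2f} per month")
print(f"Savings:      {savings:.1f}%")
```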

S3 object storage vs. on-premises storage or off-site tape archival

The decision to use Glacier instead of an off-site tape storage service is multifaceted. Here are the advantages and drawbacks of each approach.

Tape archive

- Exploits backup technology and software investments that many companies have already deployed.

- Doesn't rely on network connections to restore data in the event of a disaster.

- Can be less expensive for larger archives.

- Requires added administrative overhead and a support contract.

AWS -- Amazon Glacier and Glacier Deep Archive

- No Capex required.

- Faster time to the first byte of restored data.

- Enables users to trade off price and restoration time.

- Depends on a private AWS circuit -- Direct Connect, co-location service cross-connect -- for best performance.

- Can be more expensive for huge archives, particularly for companies that already have a current-generation tape library.