What is AWS Glue?

AWS Glue is a cloud-based and serverless data integration service that helps users to prepare data for analysis through automated extract, transform and load (ETL) processes. This managed service offers a simple and cost-effective way for categorizing and managing big data in the enterprise and using it for various applications, like machine learning (ML), application development and analytics.

AWS Glue from Amazon Web Services (AWS) simplifies the discovery, preparation, movement, integration and formatting of information from disparate data sources, both on premises and on the AWS cloud. It also makes it easy to manage and organize data in the centralized AWS Glue Data Catalog, where it can be analyzed and the results of the analyses used to inform business decisions.

Multiple data integration capabilities are available with AWS Glue, including the following:

- Data discovery.

- Modern ETL.

- Data cleansing.

- Data transformation.

- Centralized cataloging.

- Search and query of cataloged data using other Amazon services, like Amazon Athena, Amazon EMR and Amazon Redshift Spectrum.

The service also includes DataOps tools for authoring jobs, running jobs and implementing business workflows. These capabilities and tools support both technical and nontechnical users -- from developers to business users. AWS Glue's integration interfaces and tools also support different kinds of workloads, including ETL and streaming, enabling organizations to maximize the value and usability of their data.

How AWS Glue works

AWS Glue orchestrates ETL jobs and extracts data from many cloud services offered by AWS. The service generates appropriate output streams, depending on the application, and incorporates them into data lakes and data warehouses. It uses application programming interfaces (APIs) to transform the extracted data set for integration and to help users monitor jobs.

Users must define jobs in AWS Glue to enable the ETL process on data from the source to the target. They must also determine which source data populates the target (destination) and where the target data resides in order for AWS Glue to generate the data transformation code. These sources and targets can be any of the following AWS services:

- Amazon Simple Storage Service (Amazon S3).

- Amazon Redshift.

- Amazon DynamoDB.

- Amazon Relational Database Service (Amazon RDS).

- Amazon DocumentDB.

In addition, AWS Glue supports Java Database Connectivity (JDBC)-accessible databases, MongoDB, other marketplace connectors and Apache Spark plugins as data sources and destinations.

Users can utilize triggers to put ETL jobs on a schedule or pick specific events that trigger a job. Once triggered, AWS Glue extracts the data, transforms it based on scripts that are either generated automatically by AWS Glue or provided by the user in the AWS Glue console or API, and transforms the data from the data source to the target. The scripts contain the programming logic that is required to perform the data transformation. The transformation happens based on the metadata table definitions in AWS Glue Data Catalog, which are populated based on the user-defined crawler for data store sources, and the triggers defined to initiate jobs.

The service can automatically find an enterprise's structured or unstructured data when it is stored within data lakes in S3, data warehouses in Amazon Redshift and other databases that are part of Amazon RDS. Glue also supports MySQL, Oracle, Microsoft SQL Server and PostgreSQL databases that run on Amazon Elastic Compute Cloud instances in Amazon Virtual Private Cloud.

The service then profiles data in the data catalog, which is a metadata repository for all data assets that contain details such as table definition, location and other attributes. A team can also use Glue Data Catalog as an alternative to Apache Hive Metastore for Amazon EMR applications.

To pull metadata into Glue Data Catalog, the service uses Glue crawlers, which scan raw data stores and extract schema and other attributes. An IT professional can customize crawlers as needed.

Features of AWS Glue

The core features of AWS Glue are the following:

- Automatic data discovery. AWS Glue enables users to automatically obtain schema-related information and store it in the data catalog using crawlers. This information can then be used to manage ETL jobs.

- Job scheduler. AWS Glue jobs can be set and called on a flexible schedule with its job scheduler, either by event-based triggers, on demand or on a specific schedule, regardless of the complexity of the ETL pipeline. Several jobs can be started in parallel, and users can specify dependencies between jobs.

- Development endpoints. AWS Glue provides development endpoints to develop and test ETL scripts for various jobs. Developers can use these to debug Glue, as well as create custom readers, writers and transformations, which can then be imported into custom libraries.

- AWS Glue Studio. This no-code graphical interface makes it easy to define and monitor the ETL process and jobs, compose various data transformation workflows and inspect the data results at each step of a job. It automatically generates the code needed for AWS Glue jobs and includes a drag-and-drop editor to easily build ETL jobs.

- AWS Glue DataBrew. This visual data preparation tool makes it easy to clean and normalize data and even experiment with it from any data lake, data warehouse or database. DataBrew also includes 250-plus prebuilt transformations to automate data preparation, standardization and correction.

- Automatic code generation. AWS Glue automatically generates the code required to extract data from a source, transform it as required and load it into the target. The code scripts are generated in either Scala or PySpark.

- Integrated data catalog. The catalog acts a centralized store of data that can be used to discover, search and query data in any AWS data set without having to move the data. It is a singular metadata store of data from a disparate source in the AWS pipeline. An AWS account has one catalog.

- AWS Glue Flex. This is a flexible execution job class that reduces the cost of nonurgent data integration workloads and non-time-sensitive jobs with varying start and completion times.

- Transformation of streaming data in transit. AWS Glue cleans and transforms data in transit, ensuring that it is available for quick analysis in the user's target data store.

- Built-in ML and job notebooks. Any user can clean, prepare and deduplicate data for analysis with AWS Glue's ML-based FindMatches feature. They can also use serverless job notebooks with one-click setup to easily author jobs; develop and deploy scripts; perform ad hoc queries; and explore, analyze and visualize data.

- Interactive sessions. Data engineers can interactively explore and prepare data using their preferred integrated development environment or job notebook for many types of data preparation and analytics applications.

Benefits and drawbacks of AWS Glue

AWS Glue offers benefits for users looking to discover, prepare, move and integrate data for analytics use cases. For example, it offers a range of data integration capabilities. In addition, it supports many types of workloads, including ETL, ELT, batch, centralized cataloging and streaming, without requiring inflexible lock-in. Depending on the workload, users can select from one of many serverless and scalable data processing and integration engines, such as AWS Glue for Ray, AWS Glue for Python Shell or AWS Glue for Apache Spark.

These capabilities are available in a single serverless service. Consequently, users don't have to worry about managing the underlying infrastructure and can focus on discovering, preparing and integrating data for their specific applications.

AWS Glue can scale on demand at the petabyte level and supports different data types and schemas. It also offers pay-as-you-go hourly billing for any data size, meaning users only pay for the time the ETL job takes to run. This enables users to set up appropriate ETL jobs to maximize data value, while controlling costs.

Another benefit of AWS Glue is seamless integration with AWS analytics services and Amazon S3 data lakes. Its integration interfaces make it easy to integrate data across the organization's infrastructure and use it for different analytics applications and workloads.

Finally, AWS Glue includes a built-in Data Quality feature that helps maintain data quality across data lakes and pipelines by generating useful and actionable metrics. It also enables users to automatically create, manage and monitor data quality rules. If quality deteriorates, AWS Glue raises alerts that notify users and enable them to take appropriate action.

The following are additional benefits of AWS Glue:

- Fault tolerance. Failed jobs in Glue are retrievable, and logs in Glue can be debugged.

- Filtering. Glue filters for bad data.

- Support. Glue supports several non-JDBC data sources.

The drawbacks of AWS Glue include the following:

- Limited compatibility. While AWS Glue does work with a variety of commonly used data sources, it only works with services running on AWS. Organizations may need a third-party ETL service if sources are not AWS-based.

- No incremental data sync. All data is staged on S3 first, so Glue is not the best option for real-time ETL jobs.

- Learning curve. Teams using Glue should have a strong understanding of Apache Spark.

- Relational database queries. Glue has limited support for queries of traditional relational databases, only Structured Query Language (SQL) queries.

AWS Glue use cases

The primary data processing functions performed by AWS Glue are the following:

- Data extraction. AWS Glue extracts data in a variety of formats.

- Data transformation. AWS Glue reformats data for storage.

- Data integration. AWS Glue integrates data into enterprise data lakes and warehouses.

These capabilities are useful for organizations that manage big data and want to avoid data lake pollution -- hoarding more data than an organization can use. AWS Glue is also useful for organizations that want to visually compose data transformation workflows and run ETL jobs of any complexity on a serverless Apache Spark-based ETL engine.

Some other common use cases for Glue are the following:

- Obtain a unified view of data sources.

- Store and query table metadata in AWS Glue Data Catalog using SQL and Amazon Athena.

- Define and manage fine-grained data access policies with AWS Lake Formation.

- Access data sources for big data processing with Amazon EMR.

- Build, train and deploy ML models with Amazon SageMaker.

- Apply DevOps best practices with Git integration.

Some more specific common use case examples for Glue are as follows:

- Glue can integrate with a Snowflake data warehouse to help manage the data integration process.

- An AWS data lake can integrate with Glue.

- AWS Glue can integrate with Athena to create schemas.

- ETL code can be used for Glue on GitHub as well.

ETL engine

After data is cataloged, it is searchable and ready for ETL jobs. AWS Glue includes an ETL script recommendation system to create Python and Spark (PySpark) code, as well as an ETL library to execute jobs. A developer can write ETL code via the Glue custom library or write PySpark code via the AWS Glue console script editor.

A developer can import custom PySpark code or libraries. In addition, developers could upload code for existing ETL jobs to an S3 bucket and then create a new Glue job to process the code. AWS also provides sample code for Glue in a GitHub repository.

Scheduling, orchestrating ETL jobs

AWS Glue jobs can execute on a schedule. A developer can schedule ETL jobs at a minimum of five-minute intervals. AWS Glue cannot handle streaming data.

If a dev team prefers to orchestrate its workloads, the service enables scheduled, on-demand and job completion triggers. A scheduled trigger executes jobs at specified intervals, while an on-demand trigger executes when prompted by the user. With a job completion trigger, single or multiple jobs can execute when jobs finish. These jobs can trigger at the same time or sequentially, and they can also trigger from an outside service, such as AWS Lambda.

AWS Glue pricing

AWS charges users an hourly rate, billed by the second for data discovery crawlers, ETL jobs and provisioning development endpoints to interactively develop ETL code. The exception to this billing scheme is DataBrew jobs, which are billed per minute, and DataBrew interactive sessions, which are billed per session.

AWS also charges users a monthly fee to store and access metadata in AWS Glue Data Catalog. That said, the first million objects stored in the catalog, along with the first million accesses, are free. Usage of AWS Glue Schema Registry is also offered free by AWS.

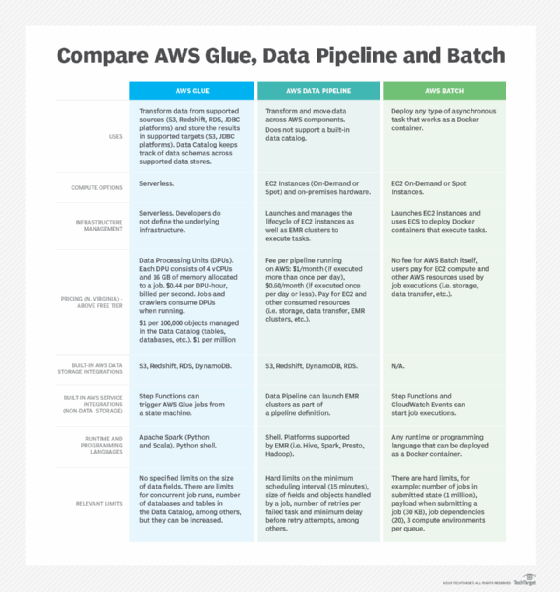

AWS Glue and Azure Data Factory have key differences, despite being similar ETL services. Compare AWS Glue vs. Azure Data Factor to see which best suits your organization's data integration and ETL requirements. Also, check out how different companies use AWS serverless tools and technologies to process and analyze data as part of their IT strategies.