The 4 rules of a microservices defense-in-depth strategy

Learn the four must-follow rules when introducing defense-in-depth to a distributed microservices architecture, especially when services traverse numerous networks and apps.

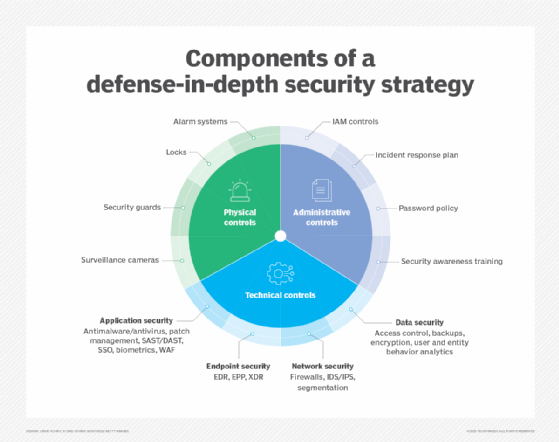

Defense-in-depth is a popular security strategy that places the applications and data (or groups of applications and data) that inhabit an architecture into designated security layers. Attackers might still breach one part of a system, but the idea is that these multiple, varied security layers will keep that breach from spreading to the entire system. However, the defense-in-depth strategy presents a unique challenge when it comes to microservices, simply because the proliferation of independent services involved creates a massive attack surface.

Thankfully, architects can use a combination of encryption, access management, traffic analysis, monitoring and logging tools designed to address this additional complexity. For instance, microservices-centric API gateways can isolate services from outside access, manage credentials and regulate user permissions.

But while they are certainly helpful, it's not enough to simply install and run these tools. To properly apply a defense-in-depth strategy to a microservices architecture, there are four fundamental rules every software team must abide by. Let's review those four rules here.

Rule 1. Implement full protection

You can't simply protect your individual microservices: You must also secure the platforms they run on, the components they connect to and the information they access. Little good is done when the services are secured, but the underlying containers, network, service mesh and management systems are left vulnerable.

Pay close attention to database resources and services that cross application boundaries. Generally, it's advisable to limit microservices interactions so that they only communicate with the applications authorized to access them. All shared resources should retain their own security mechanisms, perhaps at the network connection level, to limit risks. At any rate, wherever possible, avoid leaving services or other application components universally accessible.
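One way to picture this rule is a deny-by-default allowlist that records which callers may reach each shared resource. The sketch below is only an illustration: the service names and the `ALLOWED_CALLERS` table are hypothetical, and a real system would more likely enforce such a policy in a service mesh or at the network layer than in application code.

```python
# Hypothetical policy: each target lists the only callers allowed to reach it.
# A real deployment would load this from configuration, not hard-code it.
ALLOWED_CALLERS = {
    "orders-db": {"orders"},
    "payments": {"orders", "billing"},
}


def is_call_allowed(caller: str, target: str) -> bool:
    """Return True only if `caller` is explicitly authorized to reach `target`.

    Targets with no policy entry are denied, so nothing is universally
    accessible by default.
    """
    return caller in ALLOWED_CALLERS.get(target, set())
```

Under this hypothetical policy, `is_call_allowed("orders", "orders-db")` is true, while a call from `billing` to `orders-db`, or any call to a target that has no entry, is refused.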

Rule 2. Never ignore edge security

Defense-in-depth doesn't equate to simply relying on deeper layers of security to catch breaches. It's critical to ensure that user interaction and network-edge security are also strong, particularly when remote users are involved. Teams must absolutely monitor and investigate any suspected breach attempts to prevent gradual erosion of barriers through repeated attacks.
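To make the point about repeated attacks concrete, here is a minimal sketch of a sliding-window counter that flags a source once its failed attempts pile up. The class name, the threshold and the window length are all assumptions chosen for illustration; production teams would typically rely on their gateway or monitoring tooling for this.

```python
from collections import defaultdict, deque


class FailedAttemptMonitor:
    """Flag sources whose failed attempts reach a threshold within a time window."""

    def __init__(self, threshold: int = 5, window_seconds: float = 60.0):
        self.threshold = threshold
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # source -> timestamps of failures

    def record_failure(self, source: str, timestamp: float) -> bool:
        """Record one failed attempt; return True if `source` needs investigating."""
        attempts = self._failures[source]
        attempts.append(timestamp)
        # Drop attempts that have aged out of the sliding window.
        while attempts and timestamp - attempts[0] > self.window_seconds:
            attempts.popleft()
        return len(attempts) >= self.threshold
```

Each call to `record_failure` takes the source identifier and a timestamp in seconds, so the caller decides which clock to use and the logic stays easy to test.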

Most edge-security strategies employ API brokers to isolate internal services, manage user credentials and maintain role-based access control. These role-based access rights reduce the burden of controlling access on a per-user basis, a practice that all too often results in failures and exposed vulnerabilities. Just remember to avoid getting too granular with role definitions and assignments, because overly fine-grained roles undermine the simplified access control this role-based approach was meant to provide.
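A small sketch can show why roles simplify access control: permissions attach to a handful of roles, and users only receive roles. The role names, permission strings and users below are hypothetical, and real API brokers expose their own configuration formats for this.

```python
# Hypothetical roles: a few coarse roles, each mapped to a set of permissions.
ROLE_PERMISSIONS = {
    "viewer": {"orders:read"},
    "support": {"orders:read", "orders:refund"},
    "admin": {"orders:read", "orders:refund", "users:manage"},
}

# Hypothetical assignments: users are given roles, never individual permissions.
USER_ROLES = {
    "alice": {"admin"},
    "bob": {"viewer"},
}


def has_permission(user: str, permission: str) -> bool:
    """Return True if any role assigned to `user` grants `permission`."""
    roles = USER_ROLES.get(user, set())
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Changing what a support agent may do means editing one role entry rather than every user record. That benefit fades if roles multiply until each user effectively has a private one.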

Rule 3. Follow your workflows to determine protection points

In microservices applications, messages flow along predictable paths. This makes it easy to see logical points for security focus, such as where messaging paths intersect. Developers should concentrate on providing additional security in areas with considerable concentrations of workflow traffic. However, this doesn't mean they can ignore low-volume data paths, which still need baseline protection.

Network-level security mechanisms provide the easiest way to address the security of microservices workflows, mainly by encrypting messages between microservices. However, be careful not to encrypt entire packets. Otherwise, intermediary handlers will have to decrypt the header to route traffic, holding up the entire workflow.
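The idea of leaving routing information readable while protecting the message body can be sketched as a simple envelope. Everything here is an assumption made for illustration: the field names, the `seal`, `next_hop` and `open_sealed` helpers, and the `encrypt` and `decrypt` callables, which stand for whatever vetted authenticated-encryption routine a team already uses. The sketch does not implement a cipher itself.

```python
import json
from typing import Callable


def seal(route_to: str, payload: dict, encrypt: Callable[[bytes], bytes]) -> dict:
    """Build a message with a readable routing header and an encrypted body."""
    body = json.dumps(payload).encode("utf-8")
    return {
        "header": {"route_to": route_to},  # cleartext: only what routing needs
        "body": encrypt(body).hex(),  # opaque to intermediaries
    }


def next_hop(message: dict) -> str:
    """What an intermediary does: read the header and leave the body alone."""
    return message["header"]["route_to"]


def open_sealed(message: dict, decrypt: Callable[[bytes], bytes]) -> dict:
    """What the destination service does: decrypt the body, then parse it."""
    plaintext = decrypt(bytes.fromhex(message["body"]))
    return json.loads(plaintext.decode("utf-8"))
```

Because `next_hop` never calls `decrypt`, an intermediary handler can forward the message without holding any key. Only the destination service needs the ability to open the body.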

Rule 4. Consider zero-trust security

The final rule is to seriously consider implementing a zero-trust security model for all microservices-based applications. Most IP networks are inherently permissive when it comes to allowing connectivity, and allowing universal access is obviously problematic. Security measures like firewalls can protect assets over IP networks by limiting this universal connectivity. But if the firewall overlooks something, the result is immediately an unsafe environment.

Zero-trust mechanisms implement a default mode where no connectivity is allowed whatsoever until credentials are fully validated. Sure, you'll get the occasional complaint from users who make a mistake with their credentials and are denied access. However, it's better to get a fixable complaint from a user than to be the victim of an unannounced attack on critical application systems.
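The deny-until-validated default can be sketched in a few lines. This is a simplified illustration under stated assumptions: the shared `SECRET_KEY`, the `sign` helper and the use of an HMAC tag as the credential are all hypothetical stand-ins. Real zero-trust deployments usually rely on mutual TLS or signed tokens issued by an identity provider.

```python
import hashlib
import hmac

# Hypothetical shared secret for illustration only; a real deployment would
# fetch keys from a secrets manager and rotate them.
SECRET_KEY = b"example-only-secret"


def sign(service_id: str) -> str:
    """Issue a credential: an HMAC-SHA256 tag over the service identity."""
    return hmac.new(SECRET_KEY, service_id.encode("utf-8"), hashlib.sha256).hexdigest()


def allow_connection(service_id: str, credential) -> bool:
    """Deny by default; allow only when the presented credential validates."""
    if not service_id or not isinstance(credential, str):
        return False
    expected = sign(service_id).encode("utf-8")
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, credential.encode("utf-8"))
```

A missing, malformed or mismatched credential takes the same path as no credential at all, which is the behavior the zero-trust default calls for.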