Distributed tracing vs. logging: Uses and how they differ

Distributed tracing and logging help IT teams identify performance issues in systems in different ways. But they also complement each other to pinpoint problems.

When something goes wrong with an application or website, software developers troubleshooting the problem often turn first to two techniques: distributed tracing and logging. Both can be used alongside application performance monitoring (APM) and included with APM practices in broader observability initiatives run by development and IT operations teams. But distributed tracing and logging serve different purposes and work in different ways.

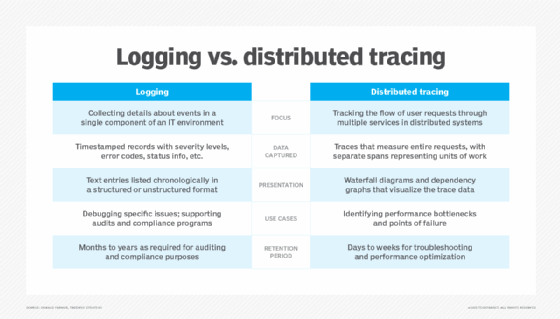

Logging is the default way to record what applications, systems and other components of an IT environment are doing. As the term indicates, it creates a straightforward log file that contains basic details about all actions and events within, say, an application. Distributed tracing is more complicated: It records information about individual user requests, transactions and application messages as they pass between multiple microservices or systems, so performance issues that arise can be traced through the entire process.

Let's look more closely at the two processes and the key differences between them, as well as how they can complement each other.

What is logging?

Logging works simply. When an event occurs in an IT system, such as an error, a user login or various other actions, the details are written to a log file. Log entries are timestamped, and severity levels are sometimes automatically applied to indicate the nature of entries and to help developers filter them for performance monitoring and troubleshooting. The most common levels are DEBUG, INFO, WARN, ERROR and FATAL.
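As a minimal sketch of timestamped entries and severity filtering, the following uses Python's standard `logging` module. The logger name `checkout` and the messages are made up for illustration. Note that Python names the two highest-severity levels WARNING and CRITICAL rather than WARN and FATAL.

```python
import io
import logging

# Capture output in memory so the example is self-contained.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
# asctime supplies the timestamp; levelname supplies the severity level.
handler.setFormatter(
    logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
)

logger = logging.getLogger("checkout")  # hypothetical service name
logger.setLevel(logging.WARNING)        # drop entries below WARNING
logger.addHandler(handler)
logger.propagate = False                # keep entries out of the root logger

logger.debug("cart contents loaded")     # filtered out
logger.info("user logged in")            # filtered out
logger.warning("payment gateway slow")   # kept
logger.error("payment declined")         # kept
logger.critical("database unreachable")  # kept; CRITICAL is Python's FATAL

lines = stream.getvalue().splitlines()
print("\n".join(lines))
```

Only the three entries at WARNING or above reach the output, which is the same filtering a developer would apply when scanning a noisy log for problems.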

In addition, entries often include things such as error codes and status information. Logs are crucial for diagnosing issues within a single application or service because teams can see exactly what went wrong just before and after an error.

The logging process has been much the same since the advent of digital computing. Nearly every part of a system architecture creates logs. The following are three common log categories:

- Application logs. These typically include information such as application startup/shutdown messages, processed transactions and errors or exceptions encountered. Custom events relevant to an application's business logic can also be logged.

- System logs. They're generated by the operating system or other system-level software, such as device drivers and system services. System logs record low-level events: system boot messages, hardware or network errors, user logins and logouts, resource usage alerts and more.

- Security logs. They record events related to IT security and user access. That includes user authentication details, such as successful logins, failed login attempts, authorization checks, password changes, account lockouts and firewall denials. Security logs often feed into security information and event management systems for threat detection and forensic analysis.

Other notable types include database logs, which track queries, changes and other actions in databases; audit logs, for tracking access to sensitive data and other activities in IT systems to support auditing and compliance initiatives; and network logs, which record network traffic and API requests. Together, all these logs provide a comprehensive picture of activities in an IT environment.

Logging features and issues to be aware of

Log files can be structured or unstructured. Structured logs are stored in data formats such as JSON and XML, and the entries have a consistent structure -- hence the term. They're easier to search, query and parse than unstructured text files, particularly if teams are looking to use automated logging tools to help with those tasks. On the other hand, unstructured logs can be easier for people to read at a glance.
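To illustrate why structured entries are easier to query, here is a small sketch that writes each log record as one JSON object per line and then filters on a field. `JsonFormatter` and the `order_id` field are hypothetical names chosen for this example, not part of any particular logging product.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        entry = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # order_id is a hypothetical field passed via `extra=`.
            "order_id": getattr(record, "order_id", None),
        }
        return json.dumps(entry)


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("orders")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.info("order placed", extra={"order_id": 1001})
logger.error("payment declined", extra={"order_id": 1002})

# Because every entry has the same fields, filtering is a simple key lookup.
entries = [json.loads(line) for line in stream.getvalue().splitlines()]
errors = [e for e in entries if e["level"] == "ERROR"]
print(errors[0]["message"], errors[0]["order_id"])
```

The same query against an unstructured text file would need pattern matching that breaks whenever the message wording changes.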

When a software bug or performance issue is reported, logs give immediate clues for debugging. For example, they might include error stack traces, which show the sequence of software routines that led up to an error; the values of any parameters or variables in the system at the time; and possibly user IDs.

This level of detail makes logs critical for managing compliance with both external regulations and internal policies. Organizations might need to keep security logs for a year or more to track compliance with data privacy laws. As a result, they can become massive. But other types of logs can also grow to be huge, given the amount of data being recorded.

For this reason, it's important to develop a unified policy across the enterprise, not only about the instrumentation used to generate logs but also about how long logs are stored. Periodically, it's necessary to close or rename a current log file and start a new one, so the old file doesn't keep growing indefinitely. Once logs are "rotated" like this, older files can be compressed, archived or deleted, helping ensure that disk space is managed efficiently and that logs remain well organized for easier analysis.
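Log rotation can be sketched with the `RotatingFileHandler` class from Python's standard library. The file name `app.log` and the tiny 200-byte size limit are assumptions chosen so that rotation happens quickly in a demonstration; real limits would be far larger and set by the organization's retention policy.

```python
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler


def write_rotating_logs(directory, count=50):
    """Write `count` entries and return the log files left on disk."""
    path = os.path.join(directory, "app.log")  # hypothetical file name
    # Roll over before the file exceeds about 200 bytes; keep two older files.
    handler = RotatingFileHandler(path, maxBytes=200, backupCount=2)
    logger = logging.getLogger("rotation_demo")
    logger.setLevel(logging.INFO)
    logger.addHandler(handler)
    logger.propagate = False
    try:
        for i in range(count):
            logger.info("entry %03d: order processed", i)
    finally:
        logger.removeHandler(handler)
        handler.close()
    return sorted(os.listdir(directory))


with tempfile.TemporaryDirectory() as tmp:
    files = write_rotating_logs(tmp)

print(files)
```

With `backupCount=2`, only the current file and the two most recent rotated files survive; anything older is deleted automatically. Compression and archiving would be handled separately.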

What is distributed tracing?

If developers need to diagnose seemingly random slowdowns affecting certain user requests in an application, using logs alone can be a painful process because logs present error messages and other entries only as a timestamped sequence. A developer might be able to work out that some of these entries are related to the same transaction, but there's no automatic map of how a request hops between different computing services or systems.

That's where distributed tracing becomes valuable: It ties together everything involved in processing requests across multiple services so developers and system administrators can see which service is slowing things down or failing.

Distributed tracing lets IT teams follow individual requests made in an application across all the services or processes they hit. In a microservices environment, a single user request might bounce through numerous application components. The request's entire path is traced as it flows from Service A to Service B, then to Service C, and so on.

The following are distributed tracing's key elements:

- Spans. As requests flow through a system, each service's portion of the processing work -- such as handling an incoming API call or querying a database -- is encapsulated in a separate span. For instance, if Service A calls Service B and then Service B queries a database, you would end up with three spans: one for A's work, one for B's work and another for the database call.

- Traces. A trace combines all these related spans to provide a comprehensive view of how a request moves between different services. Each span within a trace is tagged with the same trace ID, typically a 128-bit identifier such as a Universally Unique Identifier (UUID), and this trace context is passed along as the request proceeds on its journey. The data captured as part of a trace makes it easier to troubleshoot performance or reliability issues in a distributed system and identify their root cause.
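The relationship between spans and traces can be sketched as a small data structure. This is an illustrative model only, not the API of any tracing library; `Span`, `new_span` and the span names are hypothetical, and a random UUID stands in for the trace ID.

```python
import secrets
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    trace_id: str             # shared by every span in the trace
    span_id: str              # unique to this unit of work
    parent_id: Optional[str]  # None marks the first span in the trace
    name: str


def new_span(name, parent=None):
    """Create a span, inheriting the trace ID from its parent if it has one."""
    if parent is None:
        trace_id = uuid.uuid4().hex  # 128 bits, rendered as 32 hex characters
        parent_id = None
    else:
        trace_id = parent.trace_id   # this is the trace context being passed on
        parent_id = parent.span_id
    return Span(trace_id, secrets.token_hex(8), parent_id, name)


# Service A calls Service B, which queries a database: three spans, one trace.
span_a = new_span("service-a: handle request")
span_b = new_span("service-b: handle call", parent=span_a)
span_db = new_span("database: query", parent=span_b)

trace = [span_a, span_b, span_db]
for span in trace:
    print(span.trace_id, span.span_id, span.parent_id, span.name)
```

In a real system, the trace ID and parent span ID would travel between services in request headers rather than as in-process objects.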

Tracing features and issues to be aware of

Spans record both a start time and an end time for the work they track. By measuring a span's duration, developers can see how long that part of the process took -- i.e., its latency. The timing information is crucial for spotting where a system might be slowing down or encountering an error.

Each span can also contain tags or annotations with extra details relevant to the unit of work being done, such as the API endpoint that's called or a set of query parameters. This metadata helps pinpoint why the processing task represented by a span took longer than usual or failed.

Spans follow a hierarchical parent-child structure. The root span is the top-level parent, and sometimes the only one, representing the entire user request. The child spans for individual processing actions are nested within the root span as part of a trace. In addition, some child spans might have their own child spans if the jobs they handle involve multiple steps.
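As a rough sketch of how start times, end times and parent-child links combine, the following computes each span's duration and prints the hierarchy as an indented tree. The span names and millisecond timings are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Span:
    span_id: str
    parent_id: Optional[str]  # None marks the root span
    name: str
    start_ms: int
    end_ms: int

    @property
    def duration_ms(self):
        # Latency of this unit of work: end time minus start time.
        return self.end_ms - self.start_ms


def render_tree(spans):
    """Return one indented line per span, children nested under parents."""
    children = defaultdict(list)
    for span in spans:
        children[span.parent_id].append(span)

    lines = []

    def walk(parent_id, depth):
        for span in sorted(children[parent_id], key=lambda s: s.start_ms):
            lines.append(f"{'  ' * depth}{span.name} ({span.duration_ms} ms)")
            walk(span.span_id, depth + 1)

    walk(None, 0)
    return lines


# Hypothetical timings for one request.
spans = [
    Span("a", None, "Service A", 0, 120),
    Span("b", "a", "Service B", 10, 100),
    Span("db", "b", "Database query", 30, 90),
]
tree = render_tree(spans)
print("\n".join(tree))
# Service A (120 ms)
#   Service B (90 ms)
#     Database query (60 ms)
```

Reading down the tree shows that the database query accounts for half of the request's total time, which is the kind of observation a trace viewer makes visible at a glance.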

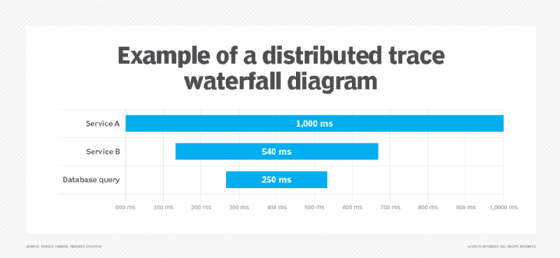

These parent-child relationships can generate a treelike or waterfall diagram that shows the entire flow measured by a trace. Indeed, distributed tracing tools typically display spans in a timeline or dependency graph. Each span appears as a bar, labeled with its duration. Viewing all the spans together, end to end, reveals where most of the processing time is spent, which services are called in parallel and where any bottlenecks occur.

The simple example in the accompanying diagram illustrates a trace for the scenario mentioned previously: Service A calls Service B, which initiates a database query that enables Service A -- represented as the root span -- to complete the user request.

Like logging, distributed tracing can generate huge amounts of data if every detail is captured and stored. For applications and web services with a high volume of requests or messages, some development and IT operations teams will set up a sampling approach that records traces on perhaps 5% to 10% of traffic.

Sampling reduces system overhead but still enables developers, admins and website managers to see performance patterns. If a team detects too many anomalies or suspects bigger performance issues over time, it can dynamically increase the sampling rate. However, traces should always be captured for specific types of critical transactions, such as online checkouts and other payments.
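One way to implement such a policy is to derive the sampling decision from the trace ID itself, with an override for critical routes. The sketch below is an assumption about how a team might do this, not a description of any specific tool; `should_sample`, `ALWAYS_TRACE` and the route names are hypothetical.

```python
import random

ALWAYS_TRACE = {"/checkout", "/payment"}  # hypothetical critical routes


def should_sample(trace_id, route, rate):
    """Decide whether to record a trace.

    The decision derives from the trace ID, so every service that sees the
    same ID reaches the same answer without coordination.
    """
    if route in ALWAYS_TRACE:
        return True
    bucket = int(trace_id, 16) % 10_000
    return bucket < rate * 10_000


# Generate reproducible 128-bit trace IDs for the demonstration.
rng = random.Random(0)
trace_ids = [f"{rng.getrandbits(128):032x}" for _ in range(10_000)]

kept = sum(should_sample(t, "/search", 0.10) for t in trace_ids)
print(f"sampled {kept / len(trace_ids):.1%} of /search traffic")
print(should_sample(trace_ids[0], "/checkout", 0.0))  # critical route: True
```

Raising `rate` at runtime, for example from 0.10 to 0.50 during an incident, increases coverage without any change to the services that propagate the trace ID.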

Distributed tracing or logging: Which should you use?

Distributed tracing is often the quickest way to locate performance bottlenecks in multiservice workflows. Logs alone don't readily reveal that chain of events, even if the information is available in them. In addition to helping developers with bug fixes or performance optimization, another valuable use for distributed tracing is to help the IT operations team handle alerts or escalations about the overall health of a system, including servers and networks.

As described previously, logs are typically kept long-term for compliance and auditing. Distributed trace data isn't always stored indefinitely -- it's used mainly for day-to-day or short-term troubleshooting.

In practice, though, developers and administrators don't choose one over the other; the two processes are complementary. Logs answer the question, "What happened in this specific component at a given moment?" The primary question answered by distributed tracing is, "How does an entire user request move through the system?"

If CPU or memory utilization spikes in a system, an IT operations team might want to review both traces and logs. An unusual spike, or an application that's failing more than normal, triggers deeper investigation than simply monitoring current state and performance using dashboards built into system management consoles and APM tools. The next steps might be distributed tracing to find the bottleneck or point of failure and then examining a log for the final layer of detail to help diagnose why the problem is occurring.

A best practice is to embed a trace ID into every log entry so developers and admins can correlate specific traces to a log file. Then, if they notice a potentially problematic portion of a distributed trace, they can jump to the log and easily see what's going on in more detail.
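A minimal sketch of this correlation, using only Python's standard library, stores the active trace ID in a context variable and copies it onto every log record through a logging filter. `TraceIdFilter`, `handle_request` and the trace ID value are made up for illustration; tracing libraries typically offer their own integrations for the same purpose.

```python
import contextvars
import io
import logging

# Holds the trace ID of the request currently being handled.
current_trace_id = contextvars.ContextVar("current_trace_id", default="-")


class TraceIdFilter(logging.Filter):
    """Copy the active trace ID onto every log record."""

    def filter(self, record):
        record.trace_id = current_trace_id.get()
        return True


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(TraceIdFilter())
handler.setFormatter(
    logging.Formatter("%(levelname)s trace_id=%(trace_id)s %(message)s")
)

logger = logging.getLogger("payments")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False


def handle_request(trace_id):
    """Log while a request is active; the trace ID is attached automatically."""
    token = current_trace_id.set(trace_id)
    try:
        logger.info("charging card")
        logger.error("card declined")
    finally:
        current_trace_id.reset(token)


handle_request("4bf92f3577b34da6a3ce929d0e0e4736")  # made-up trace ID
log_lines = stream.getvalue().splitlines()
print("\n".join(log_lines))
```

Searching the logs for `trace_id=4bf92f3577b34da6a3ce929d0e0e4736` then returns every entry written while that request was being processed, regardless of which function emitted it.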

Two pillars of an observability strategy

Both distributed tracing and logging are often combined with overall performance metrics so that application developers, admins and other IT practitioners can monitor changes over time as part of observability practices.

Observability enables teams to explore the patterns of issues in systems and diagnose problems they didn't anticipate when setting up their original error-handling processes. This exploratory approach is particularly valuable in complex distributed systems where the interactions between components often produce unpredictable behaviors that traditional monitoring tools can't adequately capture or explain.

A good observability strategy uses both logging and distributed tracing to help monitor, debug and optimize performance from multiple angles. Logs, traces and the metrics collected through APM and other monitoring processes are often described as the three pillars of observability. Each method answers different questions about application and system behavior to better inform observability efforts.

Donald Farmer is a data strategist with 30-plus years of experience, including as a product team leader at Microsoft and Qlik. He advises global clients on data, analytics, AI and innovation strategy, with expertise spanning from tech giants to startups.