3 critical stops on the back-end developer roadmap

When it comes to acquiring the skills needed to be a proficient back-end developer, there are no shortcuts. Some of the topics to know will be obvious; others might not be.

Those seeking a career in back-end development and enterprise architecture will find occupational roadmaps contain a somewhat predictable list of required skills. These skills typically revolve around a proficiency in one or more high-profile programming languages, an understanding of both relational and NoSQL database operations, the ability to work with major back-end development frameworks and experience with container orchestration.

While knowledge of relational databases and RESTful APIs is essential, back-end developers shouldn't overlook other key development concepts.

A good roadmap will include certain overlooked skills that are just as important as Node.js runtimes and RESTful API builds.

To help new back-end developers get a step ahead on their journey, let's review three essential topics: messaging, cloud-based services and the modern design patterns that make microservices and cloud-native deployments scalable and productive.

Message-based systems

New developers often see topics, queues and messaging as advanced areas. As a result, there is a lack of familiarity with this important back-end concept, along with a reluctance to incorporate messaging into an enterprise architecture.

Back-end developers need a strong understanding of how to incorporate message-based, publish-subscribe systems into their architectures. The benefits of these architectures include the following:

- Greater performance and a higher quality of service.

- Enhanced reliability and resiliency.

- Elastic scalability.

- Delayed processing.

- Component decoupling.

In a traditional, synchronous system, the client makes a request to the server and waits for a response. In architectures built on blocking I/O, each request typically ties up a process or thread on the server until the response is sent. This limits the number of concurrent requests to the maximum number of processes or threads the server can create.

With traditional architectures, the server handles requests in the order it receives them. This can result in situations where simple actions stall and fail because the server is bogged down with complex queries that arrived earlier. By introducing topics, queues and message handling into an enterprise architecture, back-end developers can enable asynchronous interactions.

With a message-based system, developers place requests in a topic or queue. Subscribers, which might be SOA-based components or lightweight microservices, will read messages off the queue and reliably handle incoming requests when resources are available. This makes architectures more resilient, as they can spread out peak workloads over an extended period.
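The flow described above can be sketched with Python's standard library. This is a minimal, in-process illustration only: `run_workers` and `subscriber` are hypothetical names, threads stand in for separate microservices, and a production system would use a dedicated message broker rather than `queue.Queue`.

```python
import queue
import threading


def run_workers(requests, worker_count=2):
    """Publish requests to a queue and let subscriber threads drain it."""
    work_queue = queue.Queue()
    results = []
    results_lock = threading.Lock()

    def subscriber():
        # Each subscriber pulls the next message whenever it is free.
        while True:
            message = work_queue.get()
            if message is None:  # sentinel value: no more work
                work_queue.task_done()
                break
            with results_lock:
                results.append(f"handled:{message}")
            work_queue.task_done()

    workers = [threading.Thread(target=subscriber) for _ in range(worker_count)]
    for worker in workers:
        worker.start()

    # The publisher only enqueues; it does not wait on any single request.
    for request in requests:
        work_queue.put(request)
    for _ in workers:
        work_queue.put(None)

    work_queue.join()
    for worker in workers:
        worker.join()
    return results
```

Because the subscribers pull work at their own pace, a burst of requests lengthens the queue instead of overwhelming the handlers. The order of the returned results is not guaranteed.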

Queues can also categorize messages they receive. A publish-subscribe system can call on a server with more power to handle complex requests, while other machines handle the rest.
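One way to picture this kind of categorization is a small topic-based broker. The sketch below is an assumption-laden toy: `TopicBroker` and the topic names are hypothetical, and delivery is synchronous and in-memory, whereas a real broker would deliver messages asynchronously across machines.

```python
from collections import defaultdict


class TopicBroker:
    """Route each published message to the handlers subscribed to its topic."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        # Look up handlers without creating an entry for unknown topics.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)  # number of subscribers that received the message


# Hypothetical setup: one list stands in for a high-powered server,
# the other for ordinary machines.
broker = TopicBroker()
complex_work, simple_work = [], []
broker.subscribe("reports.complex", complex_work.append)
broker.subscribe("orders.simple", simple_work.append)

broker.publish("reports.complex", "year-end report")
broker.publish("orders.simple", "order 17")
```

After these calls, the complex request has reached only the handler registered for complex work, and the simple order has reached only the other one.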

In modern environments, back-end developers will create subscribers as lightweight microservices that can be easily containerized and managed through an orchestration tool such as Kubernetes. As such, message-based systems are easily integrated into modern, cloud-native environments.

Back-end developers should introduce messaging and queues into an enterprise system whenever the following applies:

- A client makes poll-based requests to a server to be informed of state changes.

- Delayed processing of a request will not impact the customer's quality of service.

- Real-time calculations of streaming data do not have to be 100% accurate.

- Multiple subsystems could be used to handle messages delivered to a queue.

- Dynamic targeting of back-end subsystems could improve the quality of service.

A traditional back-end architecture involves an application server that will interact with a relational database or a NoSQL system. Back-end developers are typically well-versed and comfortable with these types of system designs.

The problem with the inclusion of topics and queues is that they require back-end developers or system architects to introduce a new component into the enterprise architecture. Systems that include delayed processing, publish-subscribe systems and asynchronous communication are not typically part of an initial system design. As a result, back-end developers who want to use these types of systems must introduce a new server-side technology into the mix.

A reluctance to change and an excessive aversion to risk can often be a barrier to the inclusion of messaging systems in modern enterprise architectures.

Cloud computing

Back-end developers trained and experienced with on-premises data centers sometimes overlook the benefits cloud computing can deliver. To be fully qualified to work in a modern enterprise, back-end developers must know how to create and deploy lambda functions and how to provision and deploy managed services in the cloud.

Serverless computing allows programmers to develop business logic as lambda functions and deploy code directly into the cloud without the need to provision underlying servers or manage services at runtime.

The cloud vendor hosts lambda functions on reliable, fault-tolerant infrastructure that can scale up or down to handle invocations as they happen. Without any infrastructure to manage, lambda functions and the serverless computing architecture that supports them can greatly simplify the deployment stack and make the continuous delivery of code to the cloud easier.
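To make this concrete, here is a minimal sketch of a function written in the style of an AWS Lambda Python handler, which receives an `event` and a `context` argument. The shape of the event (a `name` key) and the response fields are assumptions chosen for illustration; real events depend on whichever service triggers the function.

```python
import json


def handler(event, context):
    """Business logic only: no server, port or process management here."""
    # Assumed event shape: a dict that may carry a "name" key.
    name = (event or {}).get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}"}),
    }
```

The function can be exercised locally by calling `handler({"name": "Ada"}, None)`. In a deployed setting, the platform would invoke it and supply both arguments.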

Not only does serverless computing reduce the runtime management overhead, it can also be a cheaper deployment model. The pay-per-invocation serverless computing model has the capacity to reduce an organization's cloud spending, which is always an important nonfunctional aspect of enterprise deployments.

Back-end developers must be aware of the array of managed services the cloud makes available.

In the past, organizations would think about cloud computing as a reliable location for data storage and remotely hosted VMs. Today, with managed services, a cloud vendor handles the complexities of installation, provisioning and runtime management.

For example, in the past, to deploy container-based microservices into the cloud, the client would need to provision multiple VMs, such as EC2 instances in AWS, and install software to support the master Kubernetes node, master node replicas, multiple worker nodes and networking between master and worker nodes.

Runtime management, software updates, logs, upgrades and audits would be the client's responsibility. With a managed Kubernetes service, such as Amazon Elastic Kubernetes Service (EKS), these complexities are hidden from the client.

With a managed Kubernetes service, microservices can be deployed directly into the cloud -- without the need to configure the environment. Logging, auditing and change tracking are provided by the cloud vendor.

A complete roadmap for back-end developers must include an ability to build and deploy serverless applications, along with an understanding of the types of fully managed services cloud vendors make available to their clients.

Cloud-native design patterns

Singletons, factories, bridges and flyweights are widely known by developers as design patterns. Unfortunately, these classic patterns are so familiar that the newer patterns that have emerged from the continuous delivery of cloud-native software hosted in orchestrated containers don't always get recognized.

Every back-end developer must know the standard Gang of Four design patterns and their categories: creational, behavioral and structural. They must also be familiar with modern, cloud-native design patterns, such as the API gateway, the circuit breaker and the log aggregator.

The API gateway is now commonplace in cloud-native deployments. It provides a single interface for clients that might need to access multiple microservices. Development, integration and testing are easier when API makers deliver their clients a single, uniform interface to use.

Additionally, an API gateway can translate the data exchange format used by microservices into a format that is consumable by clients that use a nonstandard format, such as IoT devices.
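Both gateway responsibilities, routing and format translation, can be sketched in a few lines. Everything here is hypothetical: the two stand-in services, the `ROUTES` table and the `compact` key=value format are invented for illustration, and real gateways are usually configured products rather than hand-written functions.

```python
import json


def orders_service(path):
    return {"service": "orders", "path": path}


def inventory_service(path):
    return {"service": "inventory", "path": path}


# One public entry point in front of several internal services.
ROUTES = {"/orders": orders_service, "/inventory": inventory_service}


def to_compact(payload):
    """Flatten a dict into 'key=value;key=value' for a constrained client."""
    return ";".join(f"{key}={value}" for key, value in sorted(payload.items()))


def gateway(path, client_format="json"):
    """Route the request by path prefix, then translate the response."""
    for prefix, service in ROUTES.items():
        if path.startswith(prefix):
            payload = service(path)
            if client_format == "compact":
                return 200, to_compact(payload)
            return 200, json.dumps(payload)
    return 404, json.dumps({"error": "no route"})
```

A browser client might call `gateway("/orders/42")` and receive JSON, while a constrained device could call `gateway("/inventory/7", "compact")` and receive the flattened form from the same entry point.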

A cloud-native request-response cycle might include multiple downstream calls before a roundtrip to a back-end resource is complete. However, if one of those microservices at the end of an invocation chain fails, then the failed microservices pipeline has wasted a great deal of processing power.

To stop a flood of microservices calls that will inevitably lead to failure, a common cloud-native design pattern is to include a circuit breaker in the invocation flow. A circuit breaker will recognize when calls to a microservice have either failed or taken an unreasonable length of time to be fulfilled.

When an error trips the circuit breaker, the client gets an immediate error response, and the circuit breaker will stop any downstream calls. This saves the client time and allows the rest of the system to continue to function while the failed call is worked out. It also spares the back-end system from consuming unnecessary resources.

When a predetermined amount of time transpires, the circuit breaker will send requests downstream again. If those requests are returned successfully, the circuit breaker resets and clients proceed as normal.
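The trip, fail-fast and reset behavior described above can be sketched as a small class. This is a simplified illustration under stated assumptions: `CircuitBreaker`, `CircuitOpenError`, the threshold and the timeout are hypothetical names and values, only raised exceptions count as failures (a slow call would need to surface as a timeout exception), and the sketch is not thread-safe.

```python
import time


class CircuitOpenError(Exception):
    """Raised immediately while the breaker is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock  # injectable so the timeout can be tested
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                # Open: fail fast without touching the downstream service.
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: let one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # trip, or re-trip after a failed trial
            raise
        # Success: reset the breaker so clients proceed as normal.
        self._failures = 0
        self._opened_at = None
        return result
```

A caller would wrap each downstream invocation, for example `breaker.call(fetch_inventory, item_id)`, where `fetch_inventory` is whatever function performs the remote call.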

Administrators can deploy stateless, cloud-native applications to any computer node participating in a distributed cluster. However, by default, container-based applications log all events to their node's local hard drive, not to a shared folder or central repository.

As a result, every cloud-native deployment needs a mechanism to push log files from each worker node to a central data store. The logs are then managed within a log aggregator.

The aggregator will not only store the logs for auditing and troubleshooting purposes, it will also standardize the logs in a format that will make it possible to trace user sessions and distributed transactions that touched multiple nodes in the cluster.
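The standardizing and tracing step might look like the following sketch. The record fields (`ts`, `trace_id`, `msg`) and the `aggregate` function are assumptions made for illustration. Real aggregators ingest log files or streams shipped from each node rather than in-memory dictionaries.

```python
from collections import defaultdict


def aggregate(node_logs):
    """Merge per-node log records and group them by trace ID in time order."""
    by_trace = defaultdict(list)
    for node, records in node_logs.items():
        for record in records:
            # Standardize each record into one common shape.
            normalized = {
                "node": node,
                "ts": float(record["ts"]),
                "trace_id": record["trace_id"],
                "message": record["msg"].strip(),
            }
            by_trace[normalized["trace_id"]].append(normalized)
    for events in by_trace.values():
        events.sort(key=lambda event: event["ts"])
    return dict(by_trace)


# Hypothetical logs from two worker nodes that handled the same transaction.
logs = {
    "node-a": [{"ts": "2.0", "trace_id": "t1", "msg": " charge card "}],
    "node-b": [
        {"ts": 1.0, "trace_id": "t1", "msg": "create order"},
        {"ts": 1.5, "trace_id": "t2", "msg": "ping"},
    ],
}
traces = aggregate(logs)
```

Here `traces["t1"]` holds the two events of one distributed transaction in chronological order, even though they were logged on different nodes.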

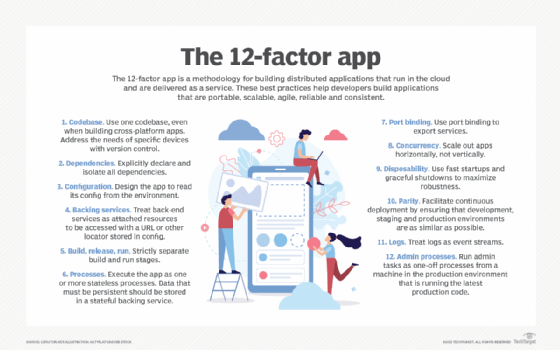

Along with knowledge of important microservices design patterns, a cloud-native developer must also be familiar with the tenets of the 12-factor app, which provides guidance on how to configure several things, including the following:

- Dependencies upon external libraries.

- Codebases for multiple microservices.

- Log management.

- Port binding.

- Backing services.

- Stateless applications that scale out easily.
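A few of these tenets can be illustrated with a short configuration sketch. The 12-factor app recommends reading configuration from the environment, binding to a port supplied at runtime, treating backing services as attached resources and writing logs to standard output. The variable names `PORT` and `DATABASE_URL` and the default values below are assumptions for illustration.

```python
import os


def load_settings(env=None):
    """Read runtime settings from the environment rather than from code."""
    env = os.environ if env is None else env
    return {
        # Port binding: the platform decides the port at deploy time.
        "port": int(env.get("PORT", "8080")),
        # Backing service: swapped by changing a URL, not the codebase.
        "database_url": env.get("DATABASE_URL", "sqlite:///local.db"),
        # Logs: written to stdout and collected by the platform.
        "log_target": "stdout",
    }
```

Because nothing about a specific host is stored in the application itself, the same build can run unchanged on any node in a cluster.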

While there is no official standard on how to develop and deploy a cloud-native application, back-end developers who stick to the tenets of the 12-factor app should encounter fewer problems with development, deployment and runtime management of microservices-based systems.