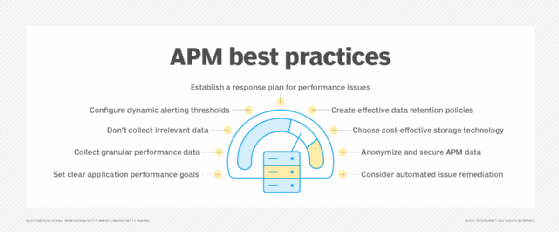

APM best practices: 9 strategies to adopt

Teams responsible for APM must develop a clear strategy to identify performance risks, reduce APM costs and secure sensitive data collected as part of their initiatives.

Virtually any organization that deploys and manages applications can benefit from application performance monitoring (APM) tools and practices. APM plays a key role in optimizing software responsiveness and reliability by systematizing the collection and analysis of data related to application performance and helping IT teams identify software problems and opportunities for improvement. By extension, it also helps ensure a positive end-user experience, protect an organization's brand and maximize revenue streams.

Yet, deploying APM software or establishing basic processes for collecting and interpreting application performance data doesn't guarantee optimal performance outcomes. Instead, teams responsible for APM must chart a deliberate strategy that helps them get the most value out of their APM initiatives and tools.

To that end, this article outlines nine APM best practices for organizations. These actions help to optimize various aspects of APM, such as improving a team's ability to identify performance risks, minimizing the costs of APM processes and securing sensitive collected data.

Application performance monitoring best practices for IT teams

The following practices help address some of the challenges many organizations face when managing application performance. Examples include the need to track and analyze the performance of complex, distributed applications hosted in cloud-native environments and the pressure to stretch IT budgets by optimizing the cost of APM data collection and storage.


Although not every best practice described here necessarily applies to every business, they're all strategies the typical organization can use to get the most out of APM programs.

1. Set clear application performance goals

Ask any IT team about their main goals for application performance monitoring, and they'll likely say they want applications to perform well. But what does that mean? Does it imply maintaining a specific level of availability? Achieving a particular latency target? Minimizing application errors?

The answers will vary from one organization to another -- and possibly from one application to another. But whatever the application performance goals happen to be, it should be a priority for teams to define them clearly by establishing the minimum levels of performance they want their applications to achieve.

Defining clear APM goals is especially important because virtually no application will perform perfectly. It's critical to identify which performance issues merit intervention and which ones software engineers can ignore because they fall within the range of acceptable performance.
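One way to make goals explicit is to write them down as data that monitoring code can check against. The sketch below is a minimal illustration, assuming three kinds of goals (availability, 95th-percentile latency and error rate); the `PerformanceGoal` schema, the checkout service and every threshold value are hypothetical examples, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerformanceGoal:
    """Minimum acceptable performance for one application (hypothetical schema)."""
    min_availability_pct: float  # e.g. 99.9 means 99.9% of checks must succeed
    max_p95_latency_ms: float    # ceiling for 95th-percentile response time
    max_error_rate_pct: float    # largest acceptable share of failed requests


def evaluate(goal: PerformanceGoal, availability_pct: float,
             p95_latency_ms: float, error_rate_pct: float) -> list[str]:
    """Return the goals that were missed; an empty list means performance is acceptable."""
    misses = []
    if availability_pct < goal.min_availability_pct:
        misses.append("availability")
    if p95_latency_ms > goal.max_p95_latency_ms:
        misses.append("latency")
    if error_rate_pct > goal.max_error_rate_pct:
        misses.append("errors")
    return misses


# Illustrative goals for a hypothetical checkout service.
checkout_goal = PerformanceGoal(min_availability_pct=99.9,
                                max_p95_latency_ms=300.0,
                                max_error_rate_pct=0.5)

print(evaluate(checkout_goal, 99.95, 280.0, 0.2))  # []
print(evaluate(checkout_goal, 99.95, 410.0, 0.2))  # ['latency']
```

Anything that returns an empty list falls within the acceptable range and can be ignored; only misses merit intervention.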

2. Collect granular performance data

Modern, cloud-native environments involve large numbers of discrete components. For example, a Kubernetes cluster includes various control plane components, as well as nodes, pods and containers.

To assess performance and home in on problems as effectively as possible, it's a best practice to collect data that's as granular as possible. For instance, if you're managing the performance of applications deployed on Kubernetes, tracking just the overall performance of each one based on metrics such as latency and error rates likely isn't enough. Instead, you should collect this data granularly for each container within the application pods.

You should also correlate this container-level data with other data sources, such as performance metrics for each node that hosts application instances. Only by combining these sources can you pinpoint the cause of a performance issue -- for example, by determining which container within a pod is causing poor performance or whether the exhaustion of CPU or memory resources on a node is the root cause of the performance trouble.
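The correlation step can be sketched as a join between per-container samples and per-node samples. This is a simplified illustration, assuming you already export p95 latency per container and CPU and memory utilization per node; the sample classes, the `diagnose` helper and both limits are hypothetical, and a real root-cause analysis would weigh far more signals.

```python
from dataclasses import dataclass


@dataclass
class ContainerSample:
    pod: str
    container: str
    node: str              # node the pod is scheduled on
    p95_latency_ms: float


@dataclass
class NodeSample:
    node: str
    cpu_pct: float
    memory_pct: float


def diagnose(containers: list[ContainerSample], nodes: list[NodeSample],
             latency_limit_ms: float = 300.0,
             resource_limit_pct: float = 90.0) -> list[tuple[str, str, str]]:
    """For each slow container, guess whether its node or the container itself is to blame."""
    node_by_name = {n.node: n for n in nodes}
    findings = []
    for c in containers:
        if c.p95_latency_ms <= latency_limit_ms:
            continue  # within the acceptable range; nothing to investigate
        host = node_by_name.get(c.node)
        starved = host is not None and (
            host.cpu_pct >= resource_limit_pct or host.memory_pct >= resource_limit_pct)
        cause = f"node {c.node} resource exhaustion" if starved else f"container {c.container}"
        findings.append((c.pod, c.container, cause))
    return findings


containers = [
    ContainerSample("web-7f9", "app", "node-a", 120.0),
    ContainerSample("web-7f9", "sidecar", "node-a", 450.0),
    ContainerSample("web-2c1", "app", "node-b", 520.0),
]
nodes = [NodeSample("node-a", 40.0, 55.0), NodeSample("node-b", 95.0, 70.0)]

for finding in diagnose(containers, nodes):
    print(finding)
# ('web-7f9', 'sidecar', 'container sidecar')
# ('web-2c1', 'app', 'node node-b resource exhaustion')
```

An application-level average would blur both problems together; only the per-container view, joined to node metrics, separates a misbehaving sidecar from a starved node.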

3. Don't collect irrelevant data

While collecting more data and more granular data is generally a good idea in the context of application performance monitoring, some data just doesn't tell you anything. When you collect irrelevant data, you add needless complexity to your APM processes. You might also bloat APM costs, since the more data you ingest into APM tools, the more you typically pay to use the tools.

For this reason, define clearly which data sources you don't need to collect. One example is redundant data, such as application error events recorded both in individual application logs and system logs for the servers that host the applications.

Circumstances might also arise where you don't need to manage the performance of a given application -- one deployed in a dev/test environment, for example -- and, thus, you can ignore the data it generates.
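Both cases can be handled by filtering events before they reach the APM tool, so you don't pay to ingest them. The sketch below is one possible approach, assuming events arrive as dictionaries with `env`, `host`, `timestamp` and `message` fields (a hypothetical format). It treats events with the same host, timestamp and message as duplicates of one occurrence, which is a simplifying assumption; real deduplication rules depend on how your logs are structured.

```python
def filter_events(events: list[dict],
                  ignored_envs: frozenset = frozenset({"dev", "test"})) -> list[dict]:
    """Drop events from unmonitored environments and duplicate copies of the same error."""
    seen = set()
    kept = []
    for event in events:
        if event["env"] in ignored_envs:
            continue  # performance of this environment isn't being managed
        # The same error may be written to both the application log and the
        # host's system log; keep only the first copy.
        key = (event["host"], event["timestamp"], event["message"])
        if key in seen:
            continue
        seen.add(key)
        kept.append(event)
    return kept


events = [
    {"env": "prod", "host": "web-1", "timestamp": "2024-05-01T12:00:00Z",
     "message": "db timeout", "source": "app"},
    {"env": "prod", "host": "web-1", "timestamp": "2024-05-01T12:00:00Z",
     "message": "db timeout", "source": "syslog"},
    {"env": "test", "host": "ci-3", "timestamp": "2024-05-01T12:00:05Z",
     "message": "db timeout", "source": "app"},
]
print(len(filter_events(events)))  # 1
```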

4. Configure dynamic alerting thresholds

Modern applications often experience large variations in the number of user requests they process, and their performance can change substantially based on the number of requests they're handling at a given time. This makes it challenging to establish a static baseline for what represents "normal" application behavior or performance levels. By extension, it's hard to configure alerts based on simple, preset thresholds. Therefore, it's a best practice to set up dynamic alerting rules. In other words, the conditions that trigger an alert should vary based on the load or traffic an application handles.

For example, rather than generating an alert every time an application's CPU utilization levels surpass 70%, consider configuring alerting rules such that this level of CPU consumption only triggers an alert if the application load is below normal levels. In that case, 70% CPU utilization would be a concern because it can't be explained by high numbers of requests and would likely instead reflect a problem like buggy code inside the application. But when the application is simply under a heavy load, 70% CPU utilization for a short period of time isn't unexpected. It might make more sense to fire an alert only when CPU consumption becomes very high -- like 85% or 90% -- during high-traffic periods.
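The rule described above can be expressed as a threshold that depends on current load. This is a minimal sketch, assuming "normal" load is approximated by the median request rate over a recent window; the function names, the window and the 70% and 90% values (taken from the example in the text) are illustrative, and a production rule would usually also require the condition to persist for several minutes before firing.

```python
from statistics import median


def cpu_alert_threshold(current_rps: float, recent_rps: list[float],
                        low_load_pct: float = 70.0,
                        high_load_pct: float = 90.0) -> float:
    """Return the CPU utilization (%) that should trigger an alert at the current load."""
    baseline = median(recent_rps)  # rough stand-in for "normal" traffic
    if current_rps < baseline:
        # High CPU that traffic can't explain may point to a bug: alert early.
        return low_load_pct
    # Under heavy load, moderately high CPU is expected: alert only when it gets very high.
    return high_load_pct


def should_alert(cpu_pct: float, current_rps: float, recent_rps: list[float]) -> bool:
    return cpu_pct >= cpu_alert_threshold(current_rps, recent_rps)


history = [200, 220, 210, 190, 205]   # requests per second; median is 205
print(should_alert(72, 120, history))  # True: 72% CPU at below-normal load
print(should_alert(72, 480, history))  # False: 72% CPU is expected at high load
print(should_alert(93, 480, history))  # True: very high CPU even under high load
```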

5. Establish a response plan for performance issues

Generating effective alerts using APM tools is only half the battle. To translate those alerts into meaningful application performance improvements, teams must be prepared to react when the tools notify them of an issue.

Of course, it's impossible to anticipate every application performance degradation scenario or create a response playbook for every possible problem. IT professionals can, however, develop response plans for common performance problems, such as server or network failures. They can also decide ahead of time who will do what to respond to an incident. That's much more efficient than waiting until they're in the midst of an application performance crisis to delegate roles and responsibilities.
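Part of such a plan can be kept as structured data, so the owner and first steps for a known issue type are settled before an incident starts. The sketch below assumes a simple in-code mapping; the issue types, team names and steps are hypothetical placeholders, and many teams would keep this information in a runbook or incident management tool instead.

```python
# Hypothetical response plans for common problems, agreed on ahead of time.
RESPONSE_PLANS = {
    "server_failure": {
        "owner": "infrastructure on-call",
        "steps": ["Confirm the host is unreachable",
                  "Fail over to a standby server",
                  "Open a hardware ticket"],
    },
    "network_failure": {
        "owner": "network team",
        "steps": ["Check load balancer and switch health",
                  "Reroute traffic if a link is down"],
    },
}

# Problems nobody anticipated still get a named owner.
DEFAULT_PLAN = {
    "owner": "application on-call",
    "steps": ["Triage the alert and escalate as needed"],
}


def plan_for(issue_type: str) -> dict:
    """Look up the agreed response plan, falling back to the default owner."""
    return RESPONSE_PLANS.get(issue_type, DEFAULT_PLAN)


print(plan_for("server_failure")["owner"])  # infrastructure on-call
print(plan_for("memory_leak")["owner"])     # application on-call
```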

6. Create effective data retention policies

Although the primary goal of APM processes is to analyze application performance data in real time or near real time, teams might want to retain data after analysis in case they need to research what caused an outage or identify long-term performance trends. Retaining data for purposes like these makes sense. However, keeping data for longer than needed can be a waste of money because you have to pay for the infrastructure that stores the data.

Therefore, establish clear rules for how long your organization will retain APM data. Don't simply retain everything without a preset deadline for when it will be deleted; otherwise, you could waste money storing data that's far too old to serve any usable purpose.

7. Choose cost-effective storage technology

To a similar end, selecting a cost-effective storage infrastructure can minimize the expenditures associated with hosting APM data during both the analytics process and post-analytics data retention period.

A key goal when choosing storage infrastructure should be to balance cost with performance. For instance, during the analytics process, it typically makes sense to pay for storage devices that support high I/O rates, such as solid-state drives, since they'll enable faster analytics results. To retain data over the longer term, you can save money by using low-cost archival cloud storage services, where you'll pay pennies or less per gigabyte per month. The data will take more time to access, but that's typically fine if you no longer need to run real-time analytics.
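The trade-off can be captured as a tiering rule based on data age. This sketch assumes two tiers (fast "hot" storage for active analytics and cheap "archive" storage for retention) plus deletion once retention ends; the 14-day hot window is a hypothetical value. Cloud object stores commonly offer lifecycle rules that apply this kind of transition automatically, which is usually preferable to custom code.

```python
from datetime import timedelta

# Hypothetical boundary: how long analysts need fast access to fresh data.
HOT_WINDOW = timedelta(days=14)


def storage_tier(age: timedelta, retention: timedelta) -> str:
    """Pick where APM data of a given age should live."""
    if age > retention:
        return "delete"   # past its retention deadline
    if age <= HOT_WINDOW:
        return "hot"      # high-I/O storage such as SSDs, for active analytics
    return "archive"      # slower, low-cost archival storage


one_year = timedelta(days=365)
print(storage_tier(timedelta(days=3), one_year))    # hot
print(storage_tier(timedelta(days=60), one_year))   # archive
print(storage_tier(timedelta(days=400), one_year))  # delete
```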

8. Anonymize and secure APM data

Although the data collected for application performance monitoring typically isn't highly sensitive in nature, it can sometimes contain information with security or compliance implications. Application log files that record user requests might contain personally identifiable information associated with individual users, for example. Likewise, the technical data in logs could help threat actors understand how systems work and find weaknesses they can exploit if the data falls into their hands.

For these reasons, anonymizing data collected during the APM process is a best practice. Organizations should also secure the data properly by configuring access controls that restrict who can view or modify it and encrypting data where appropriate. Doing so minimizes the risk of unauthorized access to sensitive information.
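One common approach is to scrub log lines before they are stored. The sketch below pseudonymizes email addresses with a keyed hash (HMAC-SHA256), so requests from the same user can still be correlated without revealing who the user is, and redacts IPv4 addresses outright. It assumes emails and IPv4 addresses are the only identifiers present. The regular expressions are deliberately simple, and keyed hashing is pseudonymization rather than full anonymization: anyone holding the key can test guesses. The hard-coded key is for illustration only and should come from a secret store.

```python
import hashlib
import hmac
import re

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a stable token that can't be reversed without the key."""
    digest = hmac.new(key, value.encode(), hashlib.sha256).hexdigest()
    return "user_" + digest[:12]


def scrub_log_line(line: str, key: bytes) -> str:
    line = EMAIL_RE.sub(lambda m: pseudonymize(m.group(0), key), line)
    return IPV4_RE.sub("[REDACTED_IP]", line)


key = b"example-key-load-from-a-secret-store"  # illustration only
line = "GET /cart user=alice@example.com ip=203.0.113.7 status=500"
print(scrub_log_line(line, key))
```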

9. Consider automated issue remediation

Increasingly, APM tools offer the ability to detect and remediate issues automatically. They can't solve complex problems on their own, but they can perform basic automated actions such as restarting a crashed application or moving applications to a different server if the one originally hosting them is running low on available resources.

Automated remediations reduce the strain on IT teams and minimize the risk of an outage or performance degradation that could affect users. However, automated remediation also presents some risk because there's a chance APM tools will make the wrong decisions and take actions that don't actually solve a problem -- or even make it worse.

For these reasons, organizations should consider using automated remediation capabilities in their APM tools to help accelerate incident response. However, they should also decide which types of issues are too important to entrust to automation software alone. For those issues, they can disable auto-remediation features entirely or configure them to keep a "human in the loop" by requiring a technician to review and sign off on automated actions before the tools perform them.
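Such a policy can be written down as three buckets: issues the tool may fix on its own, issues that need sign-off and everything else, which stays manual. The sketch below assumes that structure; the issue types and actions are hypothetical examples, and how a real APM tool expresses these settings varies by product.

```python
# Hypothetical policy: routine fixes run automatically; high-stakes ones wait for a human.
AUTO_APPROVED = {
    "crashed_process": "restart application",
    "node_low_resources": "move application to another server",
}
NEEDS_APPROVAL = {"database_failover", "data_corruption"}


def remediation_decision(issue_type: str) -> str:
    """Decide how an automated remediation for this issue type should be handled."""
    if issue_type in AUTO_APPROVED:
        return f"auto: {AUTO_APPROVED[issue_type]}"
    if issue_type in NEEDS_APPROVAL:
        return "pending: awaiting technician sign-off"  # human in the loop
    return "manual: auto-remediation disabled"          # default to no automation


print(remediation_decision("crashed_process"))    # auto: restart application
print(remediation_decision("database_failover"))  # pending: awaiting technician sign-off
```

Defaulting unknown issue types to manual handling keeps the risk of a wrong automated action limited to cases the team has explicitly approved.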

Chris Tozzi is a freelance writer, research adviser, and professor of IT and society. He has previously worked as a journalist and Linux systems administrator.