5 core components of microservices architecture

Before you build a microservices application, take a closer look at the components of the architecture and their capabilities.

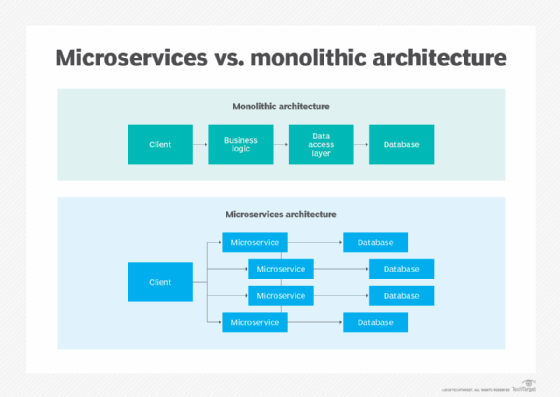

A microservices architecture -- as the name implies -- is a complex coalition of code, databases, application functions and programming logic spread across servers and platforms. Certain fundamental components of a microservices architecture bring all these entities together cohesively across a distributed system.

In this article, we review five key components of microservices architecture that developers and application architects need to understand if they plan to take the distributed service route. Start with microservices themselves, then learn about container-based deployment, the service mesh as an additional communication layer, app management via service discovery and API gateways.

1. Microservices

Microservices make up the foundation of a microservices architecture. The term describes the practice of breaking an application into generally small, self-contained services, which can be written in different languages and communicate over lightweight protocols. With independent microservices, software teams can work in iterative development cycles and create and upgrade features flexibly.

Teams need to decide the proper size for microservices, keeping in mind that splitting an application into too many fine-grained services creates high operational and management overhead. Developers should thoroughly decouple services to minimize dependencies between them and promote service autonomy, and they should use lightweight communication mechanisms, such as REST over HTTP.
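To make "self-contained services that communicate over lightweight protocols" concrete, here is a minimal sketch of a single service that exposes a JSON API over HTTP, using only Python's standard library. The "orders" service, its routes and its in-memory data are hypothetical; a real service would own a proper datastore and be packaged for deployment, for example in a container.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical data owned only by this service (no database shared with other services).
ORDERS = {"1": {"id": "1", "item": "book", "quantity": 2}}


class OrderHandler(BaseHTTPRequestHandler):
    """Serves a small JSON API for a hypothetical "orders" microservice."""

    def _send_json(self, status: int, body: dict) -> None:
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def do_GET(self) -> None:
        # Health endpoint that orchestrators or load balancers could poll.
        if self.path == "/health":
            self._send_json(200, {"status": "ok"})
            return
        prefix = "/orders/"
        if self.path.startswith(prefix):
            order = ORDERS.get(self.path[len(prefix):])
            if order is not None:
                self._send_json(200, order)
                return
        self._send_json(404, {"error": "not found"})

    def log_message(self, format, *args) -> None:
        pass  # silence per-request logging to keep the example quiet


def make_server(port: int = 8080) -> ThreadingHTTPServer:
    # Port 0 asks the OS for any free port.
    return ThreadingHTTPServer(("127.0.0.1", port), OrderHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

Because the service speaks only HTTP and JSON, any client or other service can call it, for example with `curl http://127.0.0.1:8080/orders/1`, regardless of the language that client is written in.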

2. Containers

Containers are units of software that package a service together with its dependencies, so the same unit runs consistently through development, test and production. Containers are not necessary for microservices deployment, nor are microservices needed to use containers. However, compared with other deployment techniques, such as VMs, containers can shorten deployment time and make more efficient use of resources in a microservices architecture.

The major difference between containers and VMs is that containers on the same host share the OS kernel and can share middleware components, whereas each VM includes an entire OS for its own use. Because each small service no longer needs its own OS, organizations can run a larger collection of microservices on a single server.

Another advantage of containers is that they can be deployed on demand without negatively affecting application performance. Developers can also replace, move and replicate them with fairly minimal effort. The independence and consistency of containers make it possible to scale individual pieces of a microservices architecture according to their workloads, rather than scaling the whole application. They also make it easier to redeploy microservices after a failure.
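Scaling individual services rather than the whole application can be sketched as a per-service replica calculation. This example borrows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas × observed metric / target metric); the service names, utilization figures, target and replica bounds are hypothetical, and a real orchestrator adds tolerances and stabilization windows that this sketch omits.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class ServiceLoad:
    name: str
    replicas: int            # containers currently running for this service
    cpu_utilization: float   # observed average, e.g. 0.9 means 90%
    target: float = 0.5      # hypothetical target utilization


def desired_replicas(svc: ServiceLoad, min_r: int = 1, max_r: int = 20) -> int:
    """Proportional rule ceil(replicas * observed / target), clamped to bounds."""
    raw = math.ceil(svc.replicas * svc.cpu_utilization / svc.target)
    return max(min_r, min(max_r, raw))


def plan(services: list[ServiceLoad]) -> dict[str, int]:
    # Each service is sized from its own metric: busy ones grow, idle ones shrink.
    return {s.name: desired_replicas(s) for s in services}


if __name__ == "__main__":
    fleet = [
        ServiceLoad("checkout", replicas=4, cpu_utilization=0.9),
        ServiceLoad("catalog", replicas=4, cpu_utilization=0.2),
    ]
    print(plan(fleet))  # {'checkout': 8, 'catalog': 2}
```

Only the busy "checkout" service gains containers, while "catalog" releases capacity; a monolith would have had to scale both together.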

Docker, which started as an open-source platform for container management, is one of the most recognizable providers in the container space. Docker's success also fostered a large tooling ecosystem around containers, including popular container orchestrators such as Kubernetes.

3. Service mesh

In a microservices architecture, the service mesh creates a dynamic messaging layer to facilitate communication between services. It abstracts the communication layer, which means developers don't have to build inter-process communication into the application code when they create the application.

Service mesh tooling typically uses a sidecar pattern, which places a proxy container beside each container that holds either a single microservice instance or a collection of services. The sidecar routes traffic to and from its container and communicates with other sidecar proxies to maintain service connections.
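The core idea of the sidecar, keeping communication concerns out of the service code, can be illustrated in-process. In this minimal, hypothetical sketch the service contains only business logic, and a separate proxy object in front of it adds retries and request logging. Real meshes such as Istio and Linkerd run the proxy as a separate container in the network path and also handle proxy-to-proxy traffic, which this sketch does not model; the retry count and error type are assumptions.

```python
from __future__ import annotations

from typing import Callable


class TransientError(Exception):
    """Hypothetical error standing in for a dropped connection or timeout."""


class SidecarProxy:
    """Sits in front of one service and adds retries and request logging,
    so the service code itself stays free of networking concerns."""

    def __init__(self, service: Callable[[str], str], max_attempts: int = 3) -> None:
        self.service = service
        self.max_attempts = max_attempts
        self.log: list[str] = []

    def handle(self, request: str) -> str:
        for attempt in range(1, self.max_attempts + 1):
            try:
                response = self.service(request)
                self.log.append(f"{request}: ok on attempt {attempt}")
                return response
            except TransientError:
                self.log.append(f"{request}: failed attempt {attempt}")
        raise TransientError(f"{request}: gave up after {self.max_attempts} attempts")


def make_flaky_service(failures: int) -> Callable[[str], str]:
    """Business logic only: fails `failures` times, then answers normally."""
    state = {"remaining": failures}

    def service(request: str) -> str:
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise TransientError("connection reset")
        return f"handled {request}"

    return service


if __name__ == "__main__":
    proxy = SidecarProxy(make_flaky_service(failures=2))
    print(proxy.handle("GET /inventory"))  # handled GET /inventory
    print(proxy.log)
```

The service function never mentions retries; swapping the retry policy means changing the proxy, not the service, which mirrors why meshes configure such behavior outside application code.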

Two of today's most popular service mesh options are Istio, a project that Google launched alongside IBM and Lyft, and Linkerd, a project under the Cloud Native Computing Foundation. Both Istio and Linkerd are tied to Kubernetes, though they feature notable differences in areas such as support for non-container environments and traffic control capabilities.

4. Service discovery

Whether it's due to changing workloads, updates or failure mitigation, the number of microservice instances active in a deployment fluctuates. It can be difficult to keep track of large numbers of services that reside in distributed network locations throughout the application architecture.

Service discovery helps services locate one another as instances change across a deployment, and helps distribute load among the available instances accordingly. The service discovery component is made up of three parts:

- A service provider, which offers service instances over a network;

- A service registry, which acts as a database that stores the location of available service instances; and

- A service consumer, which retrieves the location of a service instance from the registry, and then communicates with that instance.

Service discovery typically follows one of two major patterns:

- In a client-side discovery pattern, the service consumer searches the service registry to locate a service provider, selects an appropriate and available service instance using a load-balancing algorithm, and then makes a request.

- In a server-side discovery pattern, the client sends its request to a router, which searches the service registry and, once it finds an applicable service instance, forwards the request to it.
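Both patterns can be sketched against the same registry. In this minimal, hypothetical example the registry is an in-memory mapping from service names to instance addresses, a round-robin balancer stands in for the load-balancing algorithm, the client-side function picks an instance itself, and the server-side router performs the same lookup on the client's behalf. The service name and addresses are invented for illustration.

```python
from __future__ import annotations

from collections import defaultdict


class ServiceRegistry:
    """Database of available instance locations, keyed by service name."""

    def __init__(self) -> None:
        self._instances: dict[str, list[str]] = defaultdict(list)

    def register(self, service: str, address: str) -> None:
        # Called by (or on behalf of) a service provider when an instance starts.
        if address not in self._instances[service]:
            self._instances[service].append(address)

    def deregister(self, service: str, address: str) -> None:
        # Called when an instance stops or fails.
        if address in self._instances[service]:
            self._instances[service].remove(address)

    def lookup(self, service: str) -> list[str]:
        return list(self._instances.get(service, []))


class RoundRobinBalancer:
    """Chooses among the registered instances of a service in turn."""

    def __init__(self, registry: ServiceRegistry) -> None:
        self.registry = registry
        self._next: dict[str, int] = defaultdict(int)

    def pick(self, service: str) -> str:
        instances = self.registry.lookup(service)
        if not instances:
            raise LookupError(f"no available instances of {service!r}")
        index = self._next[service] % len(instances)
        self._next[service] += 1
        return instances[index]


def client_side_request(balancer: RoundRobinBalancer, service: str, path: str) -> str:
    # Client-side discovery: the consumer queries the registry and picks the instance.
    return f"GET http://{balancer.pick(service)}{path}"


class Router:
    """Server-side discovery: clients call the router, which queries the registry."""

    def __init__(self, registry: ServiceRegistry) -> None:
        self._balancer = RoundRobinBalancer(registry)

    def forward(self, service: str, path: str) -> str:
        return f"GET http://{self._balancer.pick(service)}{path}"


if __name__ == "__main__":
    registry = ServiceRegistry()
    registry.register("payments", "10.0.0.5:8080")
    registry.register("payments", "10.0.0.6:8080")

    balancer = RoundRobinBalancer(registry)
    print(client_side_request(balancer, "payments", "/charge"))  # GET http://10.0.0.5:8080/charge
    print(client_side_request(balancer, "payments", "/charge"))  # GET http://10.0.0.6:8080/charge

    router = Router(registry)
    print(router.forward("payments", "/refund"))  # GET http://10.0.0.5:8080/refund
```

The selection logic is identical in both cases; what differs is where it runs. Client-side discovery puts it in every consumer, while server-side discovery centralizes it in the router at the cost of an extra network hop.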

Data in the service registry should always be current so that services can find the instances they depend on at runtime. Because a registry outage hinders every service that relies on it, enterprises typically run the registry on a replicated, distributed coordination service, such as Apache ZooKeeper, to avoid a single point of failure.
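One common way to keep registry data current is to have each instance send periodic heartbeats and to treat entries whose heartbeat has expired as gone. The sketch below assumes a hypothetical 30-second time to live and accepts explicit timestamps so its behavior is easy to follow. It runs in a single process, so it illustrates freshness only; it does not address the availability concern that replicated stores such as ZooKeeper handle.

```python
from __future__ import annotations

import time


class HeartbeatRegistry:
    """Registry whose entries expire unless the instance keeps renewing them."""

    def __init__(self, ttl_seconds: float = 30.0) -> None:
        self.ttl = ttl_seconds
        # (service, address) -> time of the most recent heartbeat
        self._last_seen: dict[tuple[str, str], float] = {}

    def heartbeat(self, service: str, address: str, now: float | None = None) -> None:
        # Registers a new instance or renews an existing one.
        self._last_seen[(service, address)] = time.monotonic() if now is None else now

    def lookup(self, service: str, now: float | None = None) -> list[str]:
        now = time.monotonic() if now is None else now
        # Drop entries that missed their heartbeat window, then return live ones.
        expired = [key for key, seen in self._last_seen.items() if now - seen > self.ttl]
        for key in expired:
            del self._last_seen[key]
        return sorted(addr for (svc, addr) in self._last_seen if svc == service)


if __name__ == "__main__":
    registry = HeartbeatRegistry(ttl_seconds=30)
    registry.heartbeat("search", "10.0.1.1:9000", now=0)
    registry.heartbeat("search", "10.0.1.2:9000", now=0)
    registry.heartbeat("search", "10.0.1.1:9000", now=25)  # only one instance renews
    print(registry.lookup("search", now=40))  # ['10.0.1.1:9000']
```

An instance that crashes simply stops sending heartbeats, so it disappears from lookups without anyone having to deregister it explicitly.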

5. API gateway

Another important component of a microservices architecture is an API gateway. API gateways are vital for communication in a distributed architecture, as they can create the main layer of abstraction between microservices and external clients. The API gateway handles much of the communication and administrative work that typically occurs within a monolithic application, which allows the microservices to remain lightweight. Gateways can also authenticate, cache and manage requests, monitor messaging and perform load balancing as necessary.
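The gateway's role as the single entry point in front of the microservices can be sketched in a few lines. In this hypothetical example the gateway checks an API key, serves repeated GET requests from a cache, and routes by the longest matching path prefix to backend services represented as plain Python functions. The route table, key and service names are invented; a production gateway would make network calls, expire cache entries and use real credentials.

```python
from __future__ import annotations

from typing import Callable

Backend = Callable[[str], str]


class ApiGateway:
    """Single entry point that authenticates, caches and routes client requests."""

    def __init__(self, routes: dict[str, Backend], api_keys: set[str]) -> None:
        self.routes = routes        # path prefix -> backend microservice
        self.api_keys = api_keys    # hypothetical static credentials
        self.cache: dict[str, str] = {}
        self.backend_calls = 0      # counts requests that reached a backend

    def handle(self, method: str, path: str, api_key: str) -> tuple[int, str]:
        # Authentication happens once here instead of in every microservice.
        if api_key not in self.api_keys:
            return 401, "unauthorized"
        if method == "GET" and path in self.cache:
            return 200, self.cache[path]
        # Longest prefix wins, so "/orders/archive" could override "/orders".
        for prefix in sorted(self.routes, key=len, reverse=True):
            if path.startswith(prefix):
                self.backend_calls += 1
                body = self.routes[prefix](path)
                if method == "GET":
                    self.cache[path] = body
                return 200, body
        return 404, "no route"


if __name__ == "__main__":
    gateway = ApiGateway(
        routes={
            "/users": lambda path: f"user-service handled {path}",
            "/orders": lambda path: f"order-service handled {path}",
        },
        api_keys={"demo-key"},
    )
    print(gateway.handle("GET", "/orders/42", "demo-key"))  # (200, 'order-service handled /orders/42')
    print(gateway.handle("GET", "/orders/42", "demo-key"))  # same response, served from the cache
    print(gateway.backend_calls)  # 1
    print(gateway.handle("GET", "/users/7", "wrong-key"))  # (401, 'unauthorized')
```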

Additionally, an API gateway can speed up communication between microservices and clients by handling protocol translation, which frees both the client and the service from the task of translating requests written in unfamiliar formats. Most API gateways also provide built-in security features, which means they can manage authentication and authorization for microservices, as well as track incoming and outgoing requests to identify possible intrusions.
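Protocol translation can be as simple as reshaping a payload on its way through the gateway. The sketch below assumes, hypothetically, a legacy client that sends XML while the backend microservice accepts only JSON; the gateway converts one into the other so neither side has to understand the other's format. The element names are invented, and the conversion handles only flat documents.

```python
import json
import xml.etree.ElementTree as ET


def xml_to_json(xml_body: str) -> str:
    """Translate a flat XML request, such as <order><item>book</item></order>,
    into the JSON object a backend service expects."""
    root = ET.fromstring(xml_body)
    # Each child element becomes one key; nested structures are not supported.
    payload = {child.tag: (child.text or "").strip() for child in root}
    return json.dumps({root.tag: payload})


if __name__ == "__main__":
    legacy_request = "<order><item>book</item><quantity>2</quantity></order>"
    print(xml_to_json(legacy_request))
    # {"order": {"item": "book", "quantity": "2"}}
```

All values arrive as strings because XML text carries no type information; a real translation layer would apply a schema. For untrusted input, the Python documentation recommends a hardened parser such as defusedxml over the standard `xml.etree` module.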

There is a wide array of API gateway options on the market, both from proprietary cloud platform providers, such as Amazon and Microsoft, and from open source providers, such as Kong and Tyk.