
How and why businesses should develop a ChatGPT policy

ChatGPT-like tools have enterprise potential, but also pose risks such as data leaks and costly errors. Establish guardrails to prevent inappropriate use while maximizing benefits.

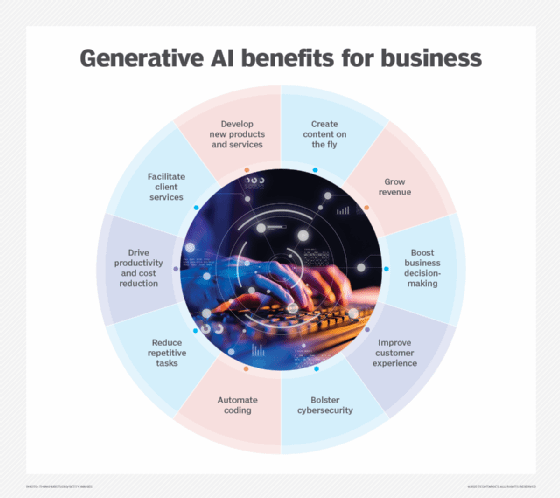

OpenAI's ChatGPT and other large language models show tremendous promise in automating or augmenting enterprise workflows. They can summarize complex documents, discover hidden insights and translate content for different audiences.

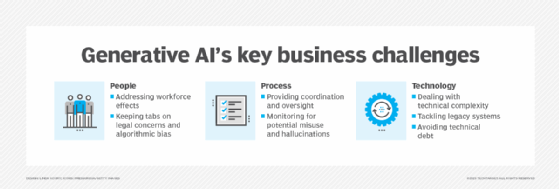

However, ChatGPT also comes with many new risks, such as generating grammatically correct but factually inaccurate prose -- a phenomenon often referred to as hallucination. One law firm was recently chastised and fined for submitting a legal brief citing nonexistent court cases.

Many ChatGPT-like services also collect users' queries as part of the model training process. Samsung recently banned employees from using ChatGPT after discovering technicians were sharing proprietary information with the service.

Given these risks, enterprises must move sooner rather than later in developing acceptable use policies that outline responsible ChatGPT use while improving productivity and customer experience. It's also important to clarify how policies can affect internal use and externally facing services.

Clarify the terminology

While ChatGPT has brought attention to the power of generative AI, the LLMs underlying the service are also being woven into many enterprise apps. Many of these apps include the term GPT in their names; it stands for generative pre-trained transformer, the type of model behind ChatGPT. Microsoft and GitHub describe these tools as copilots, while the legal industry is starting to call them co-counsel. Regardless of the terms, all these tools must be included in your policy.

Barry Janay, owner and president at the Law Office of Barry E. Janay, P.C., said his firm has fully embraced ChatGPT, Casetext CoCounsel and GitHub Copilot. But he does not like these terms, which gloss over the need for oversight.

"I don't fully like the copilot or co-counsel monikers, because while they do create incredible efficiencies of 100 times or even 1,000 times the productivity, they still need to be supervised," Janay said. "I feel positive about where this is heading, although I fully recognize there are serious potential pitfalls."

Set clear guardrails

"Companies should set clear guidelines regarding what employees can and cannot do to establish guardrails for responsible experimentation with AI," said Steve Mills, managing director, partner and chief AI ethics officer at Boston Consulting Group (BCG).

Clear guidelines give employees a defined lane to operate in, empowering them to experiment with generative AI for activities such as brainstorming marketing copy or conducting basic research to identify relevant content for further analysis. Mills said BCG's research has found that companies with clear guidelines innovate faster, as employees can move quickly but understand when to pause for a deeper discussion.

Once guidelines are established, leaders should communicate them broadly to the entire company. This helps solidify employees' understanding of how to use the technology in secure, compliant and responsible ways.

Mills also advises that companies take special precautions when dealing with sensitive or proprietary information, whether their own or that of their clients. "Leadership should explicitly communicate what information should or should not be provided to the AI model," he said.
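One way to back up that guidance is a lightweight check that screens prompts before they leave the company. The following is a minimal sketch, not a complete data loss prevention control: the pattern list and the function names `find_sensitive` and `is_safe_to_send` are hypothetical, and a real policy would define its own categories of restricted information.

```python
import re

# Hypothetical patterns for illustration only; a real policy would
# maintain its own list of what must not be sent to a public AI service.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "confidentiality marker": re.compile(
        r"\b(confidential|proprietary|internal only)\b", re.IGNORECASE
    ),
}


def find_sensitive(prompt: str) -> list:
    """Return the label of every pattern that matches the prompt."""
    return [
        label
        for label, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(prompt)
    ]


def is_safe_to_send(prompt: str) -> bool:
    """A prompt passes only if no sensitive pattern matches it."""
    return not find_sensitive(prompt)
```

A check like this catches only obvious cases, so it complements rather than replaces explicit communication from leadership about what employees should and should not share.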

Depending on their specific needs, organizations might build their own models or buy services from vendors. When evaluating vendor options, clarify how training data and input prompts feed back into the models. Creating a shared understanding of what to look for in new tools can help employees at all levels of the company align around acceptable use.

Establish responsible AI programs

A cornerstone of any effective ChatGPT policy is committing to the responsible use of AI at the executive level. "Success, in many cases, largely depends on whether CEOs understand the importance of these initiatives," Mills said.

BCG has found that organizations where the CEO is deeply engaged report significantly more business benefits. "For AI to truly deliver business value in ways that do not allow risks to become crises, dedicated resources and stewardship are necessary ingredients," Mills said.

Invest the time and resources to understand new risks, which can differ for each company. Once executives have considered and planned for what can go wrong, that awareness can help guide the design and deployment of AI policies across the company.

How Instantprint developed an AI policy

Dan Robinson, head of marketing and e-commerce at U.K.-based online print shop Instantprint, has overseen the development of the company's AI policy. He recommends fostering an environment of trust and providing teams with the rules and regulations they need to use these tools effectively and safely.

Instantprint organizes its AI code of conduct into specific dos and don'ts for each tool. "Making policies a shared effort means that we're more likely to have rules that will work for our team, developed by our team, with the exception of legal and ethical frameworks as a standard," Robinson said.

Transparency is fundamental to this approach. Robinson has found that those using the tools daily have the greatest insights. Openly sharing this information facilitates better understanding, promotes accountability and enables the organization to address concerns faster.

Instantprint started by inventorying and analyzing all the AI tools in use at the organization. The company then conducted an internal survey to explore employees' perspectives on AI and its implications for their jobs. The survey quizzed more than 1,000 office workers to uncover concerns, spending patterns and examples of AI adoption in their workplace.

The organization also created a working document with date stamps to develop an evolving policy while keeping everyone up to date with the latest version. "AI policy writing is continuous while we are still learning," Robinson said.
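A date-stamped, per-tool code of conduct like the one described above could be kept as structured data so that the latest version is always easy to identify. This is a rough sketch under stated assumptions: the class names, fields, tool names, rules and dates are all hypothetical and are not taken from Instantprint's actual policy.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ToolPolicy:
    """Dos and don'ts for a single AI tool (hypothetical structure)."""
    tool: str
    dos: list = field(default_factory=list)
    donts: list = field(default_factory=list)


@dataclass
class PolicyVersion:
    """One date-stamped snapshot of the evolving policy."""
    stamped: date
    tools: list


def latest(versions):
    """Return the policy version with the most recent date stamp."""
    return max(versions, key=lambda version: version.stamped)


# Illustrative data only; the tool, rules and dates are made up.
history = [
    PolicyVersion(
        stamped=date(2023, 6, 1),
        tools=[ToolPolicy("ChatGPT", dos=["Draft first-pass copy"])],
    ),
    PolicyVersion(
        stamped=date(2023, 9, 1),
        tools=[
            ToolPolicy(
                "ChatGPT",
                dos=["Draft first-pass copy"],
                donts=["Paste customer data into prompts"],
            )
        ],
    ),
]

current = latest(history)
```

Keeping earlier versions in the history, rather than overwriting them, preserves a record of how the rules changed as the team learned more.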

Best practices for creating a ChatGPT policy

When crafting a ChatGPT policy for your organization, keep the following starting points in mind.

| Do ... | Don't ... |
| --- | --- |
| Make responsible AI use an executive-level priority. | Wait to develop an AI use policy. |
| Clarify the different words and branding used in ChatGPT-like services. | Share sensitive data with public AI services. |
| Provide guidance and let employees shape the policy. | Automate processes using AI without human oversight. |
| Survey existing use of AI tools and assess each tool's risks. | Claim that AI-generated content was created by humans. |
| Develop a process for ensuring the quality of AI outputs. | Trust AI outputs without verifying their sources, citations and claims. |
| Develop a process for reporting any new problems. | Trust AI models without auditing them for bias. |