Attributes of open vs. closed AI explained

What's the difference between open vs. closed AI, and why are these approaches sparking heated debate? Here's a look at their respective benefits and limitations.

The practice of open AI entails openly sharing AI models, the provenance of training data and the underlying code. Closed AI obscures or protects one or more of these things.

There are many practical reasons enterprises might adopt one approach over the other. Closed AI tends to be faster and is available through various cloud services. Open AI, while often slower, enables greater scrutiny of the underlying code, models and data, which often improves explainability and security. In addition, openness about data sources might protect enterprises against intellectual property and copyright infringement claims as the legal landscape evolves.

While both approaches have merits, the choice between open and closed AI has become a topic of fierce debate, with the principal players tending to come down hard on one side or the other. Regulators concerned about giving political adversaries an advantage, for example, tend to oppose open AI, as do vendors striving to maintain a competitive moat. For researchers seeking to build on the latest innovations in the field and make further discoveries, however, open AI is essential. Some companies take a hybrid approach, offering parts of their AI technology as open while keeping other parts proprietary.

Role of open AI in generative AI

The development of generative AI illustrates how complicated decisions about open vs. closed AI can become. The generative AI applications that have taken the world by storm grew out of Google's open approach to developing its transformer models, the deep learning architecture that revolutionized natural language processing.

In turn, OpenAI, the research lab and for-profit company, built on Google's transformer research to create its popular ChatGPT service, which it offers as a closed service. Google subsequently decided to dial back its open AI efforts to reap a greater return on its advances.

Meta has gone in the opposite direction, sharing its Large Language Model Meta AI (Llama) model under a quasi-open license. Meanwhile, U.S. regulators concerned about a possible Chinese advantage in AI have called for hearings on the use of open AI, and security researchers worry that hackers and spammers will use open AI innovations to harm society. AI researchers, by contrast, are running open models directly on phones as they build on the latest generative AI innovations.

Future role of open vs. closed AI in innovation, transformation

How will the push and pull between open AI and closed systems pan out? Most experts expect AI's bleeding edge will continue to be driven by open AI principles.

"It's unlikely that we will see some novel innovation of AI completely on its own in a closed scenario," said Brian Steele, vice president of product management at Gryphon.ai.

However, how widespread the practice of open AI will be going forward remains uncertain. Few AI vendors are willing to publicly disclose the provenance of their training data, how enterprise customers' data is used with their models, and whether that data is retained in line with customers' wishes, said David DeSanto, chief product officer at GitLab.

"Many enterprises do not want their IP to be used to train or improve models or be used for anything but providing output from the model," DeSanto said. In addition, he noted that security professionals are concerned that AI services could suffer data breaches that expose their sensitive intellectual property.

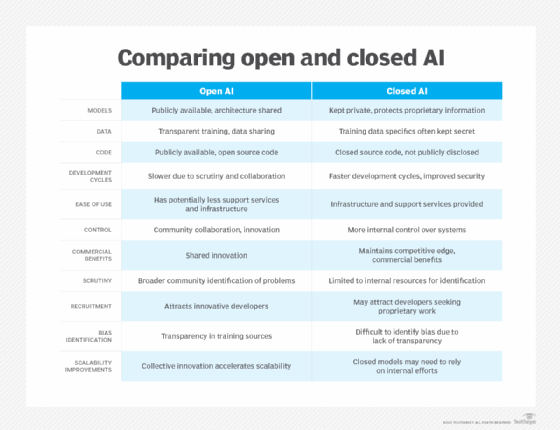

Aspects of closed vs. open AI

In traditional software development, the distinction between open source and closed source centers on licensing restrictions around code reuse. With AI, the dividing line between open and closed is more of a spectrum than a binary division, explained Srinivas Atreya, chief data scientist at Cigniti Technologies, an IT services and consulting firm.

"Many organizations adopt a mixed approach, sharing some elements openly, while keeping others closed," Atreya said. For example, a company might open source its model code but keep its training data and the specifics of its model architecture private. He believes that researchers will increasingly share AI breakthroughs using coordinated disclosure or staged releases in which models and related resources are divulged in a controlled manner that considers safety and societal implications.

Atreya finds it helpful to consider the following three key aspects when thinking about the differences between closed and open AI:

- Models. Closed AI models are kept private by the organizations that develop them. These organizations typically keep details about their models' structure, training process and proprietary information under wraps to protect their investment and maintain a competitive edge. In contrast, open AI models are made publicly available. This means their architecture, parameters and, often, the trained models themselves are shared openly, enabling other researchers and developers to use, learn from and build upon them.

- Data. AI models are trained on vast amounts of data. With closed AI, the specifics of this training data, such as where it came from, how it was collected and cleaned, and what it consists of, are often kept secret for privacy, legal or competitive reasons. With open AI, there is typically more transparency about the data used for training. Others can examine and scrutinize open data, enabling them to verify its quality, biases and appropriateness for the model's intended uses.

- Code. Code relates to the software used to train and run the AI models. In a closed AI approach, the code is kept private. In an open AI approach, the code is made publicly available, often as open source software. This enables others to inspect the code to understand how the AI was trained and operates and potentially modify and improve upon it.

Benefits of closed AI

A closed AI approach has the following benefits, according to AI experts interviewed for this article:

- Faster development cycles. Some closed AI offerings have rapid release cycles that improve the models' security and performance, according to Tiago Cardoso, group product manager at Hyland.

- Ease of use. Closed AI vendors often provide infrastructure and support services to speed adoption by enterprise apps that connect to their models.

- Potential for license flexibility. Closed AI systems avoid the legal issues surrounding open source licenses and their restrictions on reuse, according to Gryphon.ai's Steele.

- Commercial benefits. Closed models let enterprises maintain an edge in commercializing their innovations, said Nick Amabile, CEO and chief consulting officer at DAS42, a digital transformation consultancy. They also give commercial software vendors an incentive to keep developing new applications and capabilities rapidly.

- Increased control. The process of sharing internal libraries can slow innovation. "When you control your users and all the various systems that rely on your code, it's much easier to advance those systems," said Jonathan Watson, CTO at Clio.

What is closed AI, in brief

Closed AI conceals the AI models, training data and underlying codebase. It protects the research and development of AI technologies and can be better at specific tasks than its open source counterparts, but it forgoes the benefits of public oversight.

Benefits of open AI

Open AI benefits the industry in the following ways, according to the AI experts interviewed for this article:

- Increased scrutiny. Open AI enables a larger community to identify and mitigate problems. "External knowledge is often greater than internal knowledge, and a community can help advance your mission, help to find and fix issues, and create influence in the tech ecosystem," Watson said.

- Better recruitment. Openly sharing AI innovations can attract prominent developers who want to work at the cutting edge. "In open sourced models, there's a clear demonstration of what your team is about and what it can do, and people are drawn to that," Watson said.

- Greater understanding. Open models provide information that can be essential to understanding what to expect from the model, according to Cardoso. The model architecture is fully known, and because all the weights are provided, the model can be cloned. This enables organizations with the necessary expertise to adapt, optimize or apply the model in innovative ways.

- Identification of bias. Open models disclose the training sources. This helps researchers understand the source of bias and participate in improving the training data.

- Faster scalability improvements. Open models can benefit from collective innovation to accelerate scalability improvements. Open source communities also tend to provide fast optimizations on cost and scalability and even more so if they are backed by large organizations, as is the case for Meta's Llama, Cardoso noted.

What is open AI, in brief

Open AI approaches reveal the full technical details of AI models and their training data and share the code with others for scrutiny, providing a launchpad for more innovation.

Framing discussions with stakeholders

Every enterprise will adopt its own approach to the openness of the AI capabilities it consumes and to delivering new services and products. Discussions with enterprise and community stakeholders should weigh the tradeoffs among ethics, performance, explainability and intellectual property protection.

Cardoso said vendors of closed models tend to invest more resources in security and AI alignment efforts, and he finds that closed models tend to perform better. But the community factor in open AI can often fill these gaps within specific scopes. One example is the rise of fine-tuned small-model versions, which enable cost-efficient, domain-specific models that can perform better within those domains.

Steele said open AI makes sense for companies that want to benefit from the default behaviors of AI applications and that face no data privacy or usage risks. Closed AI, however, makes more sense when companies want to extend AI or create proprietary data sets.

DeSanto recommended organizations start by facilitating conversations between their technical AI teams, legal teams and AI service providers.

"Establishing a baseline within an organization and developing shared language can go a long way when deciding where to focus and minimize risk with AI," he said.

From there, organizations can begin setting appropriate guardrails and policies for AI implementation. These can include policies on how employees may use AI, as well as guardrails such as data sanitization, in-product disclosures and moderation capabilities.
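As a rough illustration of the data sanitization guardrail, the following minimal Python sketch masks e-mail addresses and key-like tokens in a prompt before it is sent to an external AI service. The function name `sanitize_prompt` and both regular expressions are hypothetical assumptions made for this example, not part of any vendor's API; a production guardrail would need much broader, vetted detection rules.

```python
import re

# Hypothetical pattern for e-mail addresses (illustrative, not exhaustive).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Hypothetical secret format: "sk-" followed by 20+ alphanumerics (assumption only).
API_KEY_RE = re.compile(r"\bsk-[A-Za-z0-9]{20,}\b")


def sanitize_prompt(text: str) -> str:
    """Replace e-mail addresses and key-like tokens with placeholders
    before the text leaves the organization for an external AI service."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = API_KEY_RE.sub("[SECRET]", text)
    return text


if __name__ == "__main__":
    print(sanitize_prompt("Contact jane.doe@example.com, key sk-abcdefghijklmnopqrstu"))
    # prints: Contact [EMAIL], key [SECRET]
```

Running sanitization on the enterprise side, before any data reaches a model, applies whether the model is open or closed, which makes it a useful baseline control while the policy discussion is still under way.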

Editor's note: This article was updated in July 2024 to improve the reader experience.

George Lawton is a journalist based in London. Over the last 30 years, he has written more than 3,000 stories about computers, communications, knowledge management, business, health and other areas that interest him.