What is LangChain and how to use it: A guide

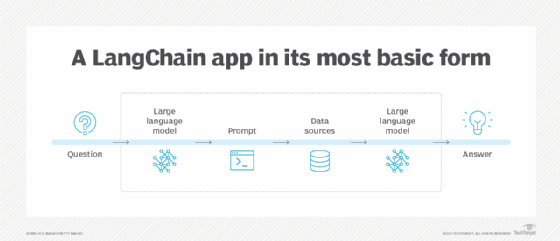

LangChain is an open source framework that lets software developers working with artificial intelligence (AI) and machine learning combine large language models (LLMs) with external components to build LLM-powered applications.

LangChain aims to link powerful LLMs, such as OpenAI's GPT-3.5 and GPT-4, to an array of external data sources so that developers can build natural language processing (NLP) applications that draw on both. It's also used to create interfaces that produce humanlike responses and answer questions.

Developers, software engineers and data scientists with experience in Python, JavaScript or TypeScript programming languages can use LangChain packages offered in those languages.

LangChain was released in 2022 as an open source project by co-founders Harrison Chase and Ankush Gola.

Why is LangChain important?

LangChain is a framework that simplifies the process of creating generative AI application interfaces. Developers working on these interfaces use various tools to create advanced NLP apps; LangChain streamlines this process. For example, LLM applications often need to draw on large volumes of data, so LangChain provides tools to load, organize and retrieve that data so it can be accessed with ease.

In addition, GPT (generative pre-trained transformer) models are trained on data available up to a specific point in time, known as their knowledge cutoff date. Model providers periodically release updates that extend the cutoff date. LangChain, however, can connect AI models directly to data sources so they can draw on more recent information when answering a query.

What are the benefits of LangChain?

LangChain offers various benefits, including the following:

- Open source and community benefits. As an open source framework, LangChain thrives on community contributions and collaboration, and is readily accessible on platforms such as GitHub. Developers can drive innovation by accessing a wealth of resources, tutorials, documentation and support from fellow LangChain users.

- Modular design. LangChain's modular architecture enables developers to mix, match and customize components for specific needs.

- Simplified development. LangChain offers a standardized interface that enables developers to easily switch between different LLMs, streamline workflows and reduce integration complexity. For example, they can switch between LLMs from providers such as OpenAI or Hugging Face with minimal code changes.

- Repurposed LLMs. LangChain lets organizations repurpose LLMs for domain-specific applications without retraining, enabling development teams to enhance model responses with proprietary information, such as by summarizing internal documents. The retrieval-augmented generation (RAG) workflow can further improve response accuracy and reduce hallucinations by adding relevant information to the prompt.

- Interactive applications. LangChain enables interactive applications through real-time communication with language models. For example, its modular components can be used to create interactive applications such as chatbots and AI assistants that engage users in real time.
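A minimal, library-free Python sketch can illustrate why a standardized interface makes switching models cheap. The `ChatModel` protocol, the two stub model classes and the `answer` function are hypothetical stand-ins, not LangChain classes, and no real LLM is called. In LangChain itself, model integrations share a common `invoke` method that plays a similar role.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical shared interface: every model exposes the same method."""

    def invoke(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical stand-in for one provider's model (no network calls)."""

    def invoke(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UppercaseModel:
    """Hypothetical stand-in for a second provider's model."""

    def invoke(self, prompt: str) -> str:
        return prompt.upper()


def answer(model: ChatModel, question: str) -> str:
    # The application logic depends only on the shared interface,
    # so swapping providers does not require changing this function.
    return model.invoke(question)


print(answer(EchoModel(), "What is LangChain?"))       # echo: What is LangChain?
print(answer(UppercaseModel(), "What is LangChain?"))  # WHAT IS LANGCHAIN?
```

Because `answer` relies only on the shared method, switching providers comes down to passing a different model object.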

What are the features of LangChain?

LangChain is made up of the following modules, which together provide the components needed to build an effective NLP app:

- Model interaction. Also called Model I/O, this module enables LangChain to interact with a wide range of language models and perform tasks such as managing inputs to the model and extracting information from its outputs.

- Prompt templates. LangChain includes prompt template modules that enable developers to create structured prompts for LLMs. These templates can incorporate examples and specify output formats, facilitating smoother interactions and more accurate responses from the models.

- Data connection and retrieval. This module lets developers transform the data that LLMs access, store it in databases and retrieve it from those databases through queries.

- Chains. Building more complex apps with LangChain might require other components or even more than one LLM. This module links an LLM with other components or with other LLMs, so the output of one step becomes the input of the next. The result is referred to as an LLM chain.

- Agents. The agent module lets LLMs decide the best steps or actions to take to solve problems. It orchestrates a series of complex commands to LLMs and other tools to get them to respond to specific requests.

- Memory. The memory module helps an LLM application remember the context of its interactions with users. Depending on the use case, both short-term and long-term memory can be added to an application.

- Retrieval modules. LangChain supports the development of RAG systems with tools for transforming, storing and retrieving information to enhance language model responses. This enables developers to produce semantic representations of text as embeddings and store them in local or cloud-based vector databases.
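The core idea behind the Chains module is that each component's output feeds the next. A plain-Python sketch can show this. The `Step` class, the stub model and the one-word "parser" are hypothetical and only imitate the shape of LangChain's approach. LangChain's own expression language composes real components, such as a prompt, a chat model and an output parser, with the `|` operator in a similar spirit.

```python
from typing import Any, Callable


class Step:
    """Hypothetical pipeline step that wraps a function and supports `|` chaining."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # Compose: run this step first, then feed its output into `other`.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)


# Step 1: fill a prompt template from a dict of variables.
prompt = Step(lambda variables: "Summarize in one word: {text}".format(**variables))

# Step 2: a hypothetical stub "model" that returns the prompt's last word (no real LLM).
fake_llm = Step(lambda text: text.split()[-1])

# Step 3: an output parser that normalizes the model's raw text.
parser = Step(lambda raw: raw.strip(".").lower())

chain = prompt | fake_llm | parser
print(chain.invoke({"text": "LangChain links components together."}))  # together
```

With this design, any step can be swapped out independently, such as replacing the stub with a real model call, without touching the rest of the chain.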

What are LangChain integrations?

LangChain typically builds applications using integrations with LLM providers and with external sources where data is found and stored. For example, LangChain can build chatbots or question-answering systems by integrating an LLM with data sources or stores, including relational or graph databases, text files, knowledge bases or unstructured data. This enables an app to take user-input text, process it and retrieve the most relevant answers from any of these sources. In this sense, integrations let developers pair current LLMs with their own data to build effective apps.

Other potential integrations include cloud storage platforms, such as Amazon Web Services, Google Cloud and Microsoft Azure, as well as vector databases. A vector database can store large volumes of high-dimensional data -- such as videos, images and long-form text -- as mathematical representations that make it easier for an application to query and search for those data elements.
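The following self-contained sketch shows how a vector store query works and how its results can feed a RAG-style prompt. It makes simplifying assumptions. The `embed` function is a toy word-count "embedding" standing in for a real embedding model, and `InMemoryVectorStore` is a hypothetical class, not LangChain's vector store API. LangChain's vector store integrations do expose a comparable similarity-search operation.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding' (word counts); a real app would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Hypothetical minimal vector store that keeps (vector, text) pairs in a list."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def search(self, query: str, k: int = 1) -> list[str]:
        # Rank stored texts by similarity to the query and return the top k.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


store = InMemoryVectorStore()
store.add("zebras have black and white stripes")
store.add("python is a programming language")

# RAG-style use: put the retrieved text into the prompt sent to the LLM.
question = "what stripes do zebras have"
context = "\n".join(store.search(question, k=1))
rag_prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
print(rag_prompt)
```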

How to create prompts in LangChain

Prompts serve as inputs to the LLM that instruct it to return a response, which is often an answer to a query. This response is also referred to as an output. A prompt must be carefully designed to increase the likelihood of a well-written and accurate response from a language model. That's why prompt engineering has emerged as a discipline that has received more attention in recent years.

In LangChain, prompts can be generated easily using a prompt template, which serves as instructions for the underlying LLM. Prompt templates can vary in specificity. They can pose simple questions to a language model, or they can provide a set of explicit instructions with enough detail and examples to elicit a high-quality response.
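The gap between a simple template and a detailed one with examples (often called a few-shot prompt) can be sketched with plain Python string formatting. The names `SIMPLE_TEMPLATE`, `EXAMPLES` and `build_prompt` are hypothetical and are not LangChain's API. LangChain provides a `FewShotPromptTemplate` class for this pattern.

```python
# Hypothetical templates built with str.format (not LangChain's API).
SIMPLE_TEMPLATE = "What is the antonym of {word}?"

EXAMPLES = [
    {"word": "happy", "antonym": "sad"},
    {"word": "tall", "antonym": "short"},
]
EXAMPLE_TEMPLATE = "Word: {word}\nAntonym: {antonym}"


def build_prompt(word: str) -> str:
    # Explicit instruction first, then worked examples, then the new input.
    instruction = "Give the antonym of each word. Answer with a single word."
    shots = "\n\n".join(EXAMPLE_TEMPLATE.format(**ex) for ex in EXAMPLES)
    return f"{instruction}\n\n{shots}\n\nWord: {word}\nAntonym:"


print(SIMPLE_TEMPLATE.format(word="fast"))
print(build_prompt("fast"))
```

The detailed version costs more tokens. In exchange, it shows the model both the task and the expected answer format.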

In Python, LangChain provides a premade `PromptTemplate` class that turns a template string containing placeholders into a finished prompt. Using it involves the following steps:

- Install Python. A recent version of Python must be installed. From a terminal (not the Python interactive shell), enter the following command to install just the bare minimum requirements of LangChain for the sake of this example:

```shell
pip install langchain
```

- Add integrations. LangChain typically requires at least one integration, and OpenAI is a prime example. To use OpenAI's LLM application programming interfaces (APIs), a developer must create an account on the OpenAI website and retrieve an API access key. Then install the LangChain OpenAI integration package, which also installs OpenAI's Python package, and supply the key for access to the APIs:

```shell
pip install langchain-openai
```

```python
from langchain_openai import OpenAI

# Pass the key directly, or omit it and set the OPENAI_API_KEY environment variable.
llm = OpenAI(openai_api_key="...")
```

- Import the prompt template. Once these basic steps are complete, LangChain's prompt template class must be imported and a template created from a string. The following code snippet does this:

```python
from langchain_core.prompts import PromptTemplate

prompt_template = PromptTemplate.from_template(
    "Tell me an {adjective} fact about {content}."
)

prompt_template.format(adjective="interesting", content="zebras")
# Returns: "Tell me an interesting fact about zebras."
```

In this scenario, the template fills in its two input variables -- the adjective and the content -- to produce the finished prompt string. That string can then be passed to the language model, for example by calling `llm.invoke()` with it, and the model would be expected to return an interesting fact about zebras as its output.

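For simple templates like the one above, the placeholders in the string define the template's input variables. LangChain's `from_template` infers them in a similar way and exposes them as `prompt_template.input_variables`. The following standard-library sketch approximates that behavior for simple f-string-style templates. The helper name `template_variables` is hypothetical and is not part of LangChain.

```python
from string import Formatter


def template_variables(template: str) -> list[str]:
    """Hypothetical helper: return the sorted, de-duplicated {placeholder} names."""
    # Formatter.parse yields (literal_text, field_name, format_spec, conversion);
    # field_name is None for trailing literal text.
    names = {field for _, field, _, _ in Formatter().parse(template) if field}
    return sorted(names)


template = "Tell me an {adjective} fact about {content}."
print(template_variables(template))  # ['adjective', 'content']
print(template.format(adjective="interesting", content="zebras"))
```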
How to develop applications in LangChain

LangChain is built to develop apps powered by language model functionality. There are different ways to do this, but the process typically entails the following key steps:

- Set up the environment. The first step is to install LangChain with all its necessary dependencies. In addition, developers should ensure they have access to an LLM API, such as OpenAI's, and obtain an API key for integration.

- Define the application. The developer must define a specific use case for the application. This also means determining its scope, including requirements such as any needed integrations, components and LLMs.

- Build functionality. Developers use prompts, together with LangChain components such as chains, agents and retrieval modules, to build the functionality or logic of the intended app.

- Customize functionality. LangChain lets developers modify their code to create customized functionality that meets the needs of the use case and shapes the application's behavior.

- Fine-tune LLMs. It's important to choose the appropriate LLM for the job and, where necessary, to fine-tune it to meet the needs of the use case.

- Cleanse data. Data cleansing techniques help ensure clean and accurate data sets. Security measures should also be implemented to protect sensitive data.

- Test. Regularly testing LangChain apps ensures they continue to run smoothly.
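Tests are easier to write when the model call can be replaced with something deterministic. The sketch below swaps the real LLM for a stub that returns canned replies. This lets the app's prompt construction and output handling be checked without network access or API costs. `StubModel` and `answer_question` are hypothetical names used only for illustration. LangChain also includes fake LLM classes intended for this kind of testing.

```python
class StubModel:
    """Hypothetical stand-in for an LLM: returns canned replies in order."""

    def __init__(self, replies: list[str]) -> None:
        self.replies = list(replies)
        self.prompts: list[str] = []

    def invoke(self, prompt: str) -> str:
        self.prompts.append(prompt)  # record what the app sent to the "model"
        return self.replies.pop(0)


def answer_question(model, question: str) -> str:
    """Hypothetical app logic: build a prompt, call the model, clean the reply."""
    prompt = f"Answer briefly: {question}"
    return model.invoke(prompt).strip()


def test_answer_question() -> None:
    # A pytest-style test: checks both the cleaned output and the exact prompt sent.
    model = StubModel(["  Paris  "])
    assert answer_question(model, "Capital of France?") == "Paris"
    assert model.prompts == ["Answer briefly: Capital of France?"]


if __name__ == "__main__":
    test_answer_question()
    print("ok")
```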

LangChain vs. LangSmith

LangChain and LangSmith play specific roles in the landscape of LLM applications. The LangChain framework helps developers create LLM-powered applications by offering tools for building intricate workflows and integrating different components.

The LangSmith platform is focused on monitoring, testing, debugging and evaluating these applications in production to ensure they operate reliably and efficiently.

In essence, LangChain aids in the creation of applications, while LangSmith enhances their operational management and quality assurance.
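Monitoring of this kind rests on recording a trace of each step an application runs. The following in-memory sketch captures what went in, what came out and how long each call took. It is a hypothetical illustration of the idea, not LangSmith's API. A platform such as LangSmith stores and visualizes traces like these rather than keeping them in a Python list.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TraceRecord:
    """One recorded call: its inputs, its output and how long it took."""
    name: str
    inputs: str
    output: str
    seconds: float


@dataclass
class Tracer:
    """Hypothetical in-memory tracer (not LangSmith's API)."""
    records: list[TraceRecord] = field(default_factory=list)

    def wrap(self, name: str, func: Callable[[str], str]) -> Callable[[str], str]:
        # Return a version of `func` that records every call it handles.
        def traced(text: str) -> str:
            start = time.perf_counter()
            result = func(text)
            elapsed = time.perf_counter() - start
            self.records.append(TraceRecord(name, text, result, elapsed))
            return result

        return traced


tracer = Tracer()
summarize = tracer.wrap("summarize", lambda text: text[:20])  # hypothetical stub model call
summarize("LangSmith records each step of an LLM application.")
for record in tracer.records:
    print(record.name, repr(record.output), f"{record.seconds:.6f}s")
```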

LangChain vs. LangGraph

LangGraph is another part of the LangChain ecosystem: a framework for building LLM applications. LangChain offers a standard interface for building simple applications and workflows using linear chains and retrieval flows.

In contrast, LangGraph builds on LangChain by enabling cyclical graphs, which facilitate the development of complex, stateful and multi-actor applications. This feature enables more control over interactions, including conditional paths and opportunities for human intervention, making LangGraph suitable for advanced agent-based software development.
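A plain-Python sketch can show how a cyclical graph differs from a linear chain. Nodes are functions that update a shared state dictionary, and a conditional edge decides whether to loop back or finish. All names here are hypothetical, and the "agent" nodes are stubs rather than LLM calls. LangGraph's real API, built around a `StateGraph` with nodes and conditional edges, differs in detail.

```python
from typing import Callable

State = dict  # shared state passed between nodes


def draft(state: State) -> State:
    # Hypothetical stub "agent" node: produces a new draft (no real LLM call).
    state["attempts"] += 1
    state["draft"] = f"draft v{state['attempts']}"
    return state


def review(state: State) -> State:
    # Hypothetical stub check: approve the draft on the third attempt.
    state["approved"] = state["attempts"] >= 3
    return state


def route_after_review(state: State) -> str:
    # Conditional edge: loop back to "draft" until approved, then finish.
    # A human-review step could also be inserted at this decision point.
    return "end" if state["approved"] else "draft"


NODES: dict[str, Callable[[State], State]] = {"draft": draft, "review": review}
EDGES: dict[str, Callable[[State], str]] = {
    "draft": lambda state: "review",
    "review": route_after_review,
}


def run_graph(state: State, start: str = "draft", max_steps: int = 20) -> State:
    node = start
    for _ in range(max_steps):  # guard against cycles that never terminate
        state = NODES[node](state)
        node = EDGES[node](state)
        if node == "end":
            return state
    raise RuntimeError("graph did not finish within max_steps")


result = run_graph({"attempts": 0, "draft": "", "approved": False})
print(result)  # {'attempts': 3, 'draft': 'draft v3', 'approved': True}
```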

Examples and use cases for LangChain

The LLM-based applications LangChain is capable of building can be applied to multiple advanced use cases within various industries and vertical markets, such as the following:

- Customer service chatbots. LangChain enables advanced chat applications to handle complex questions and user transactions. These applications can understand and maintain a user's context throughout a conversation, much as ChatGPT does. AI is widely used to enhance customer experience and service.

- Coding assistants. It's possible to build coding assistants with the help of LangChain. Using LangChain and OpenAI's API, developers can create a tool to assist those in the tech sector with enhancing their coding skills and improving productivity.

- Healthcare. AI has entered healthcare in several ways. LLM-centric LangChain applications can assist doctors with diagnoses. They can also automate rote, repetitive administrative tasks, such as scheduling patient appointments, enabling healthcare workers to focus on more important work.

- Marketing and e-commerce. Businesses use e-commerce platforms with LLM functionality to better engage customers and expand their customer base. An application that can understand consumer purchasing patterns and product descriptions can generate product recommendations and compelling descriptions for potential customers.

- Summarization tasks. LangChain can help condense large volumes of text into concise summaries. This is particularly useful in fields such as journalism, research and content creation, where vital information must be accessed quickly.

- Data augmentation. LangChain can enhance data by generating new data that resembles the existing data. This includes creating variations of the data by paraphrasing or slightly altering its context. Data augmentation is useful for training machine learning models and developing new data sets.

- Media adaptation. LangChain can help the entertainment industry by supporting the translation work behind dubbing and subtitling, making movies and TV shows accessible to a broader audience. This improves the viewer experience and expands the content's international reach.
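For summarization tasks, a common pattern for text longer than a model's context window is map-reduce. The text is split into overlapping chunks, each chunk is summarized, and the partial summaries are then combined. LangChain provides text splitters and summarization chains for this. The sketch below is a library-free illustration with hypothetical names. The `summarize` and `combine` arguments stand in for LLM calls, and the default chunk sizes are arbitrary.

```python
from typing import Callable


def split_words(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into chunks of `chunk_size` words that overlap by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("need 0 <= overlap < chunk_size")
    words = text.split()
    chunks: list[str] = []
    start = 0
    while start < len(words):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
        start += chunk_size - overlap
    return chunks


def map_reduce_summary(
    text: str,
    summarize: Callable[[str], str],
    combine: Callable[[list[str]], str],
    chunk_size: int = 200,
    overlap: int = 20,
) -> str:
    # Map: summarize each chunk independently (these calls could run in parallel).
    partials = [summarize(chunk) for chunk in split_words(text, chunk_size, overlap)]
    # Reduce: merge the partial summaries into one final result.
    return combine(partials)


# Hypothetical stubs in place of LLM calls: keep each chunk's first word, then join.
article = "one two three four five six seven eight nine ten"
print(map_reduce_summary(article, lambda chunk: chunk.split()[0], "; ".join, chunk_size=4, overlap=1))
# one; four; seven
```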
