4 virtual reality ethics issues that need to be addressed

Technology has outpaced societal guardrails throughout history. Virtual reality is no exception: It introduces ethical and legal issues that companies need to consider.

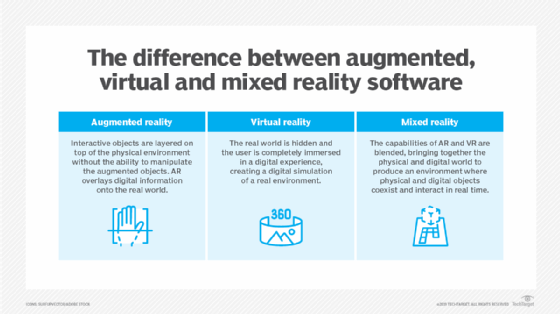

The growth of virtual, augmented and mixed realities -- collectively referred to as extended reality (XR) -- has created a divide. On one side are users and providers; on the other are legal experts and ethicists, who must envision the uses and potential misuses of these new technologies. Areas of immediate concern include the lack of legal precedent, security and data privacy threats, cognitive hacking and the dangers of overreliance on technology.

While VR, AR and MR are different, they raise similar legal and ethical challenges for the companies using them. Many of these challenges stem from the difficulty of determining where the physical domain ends and the virtual one begins.

Here are four main areas companies need to consider.

1. Laws and regulations that struggle to keep up with emerging technologies

Historically, the law has struggled to keep pace with technology, and VR is no exception. Central to the law is the need to prove damage and liability. In VR and other XR technologies, legal issues are complicated by the absence of physical boundaries, questions of data ownership, human behavior and privacy. Determining jurisdiction becomes challenging when an interaction crosses state and federal jurisdictional lines.

Defining a crime consistently is another challenge, much as it is for physical crimes, where laws vary by state and country. How victim damages are defined and determined can also vary. That variability is a problem for lawmakers, because enforceable laws must be precise.

Consider the police investigation in the U.K. into a minor's claim that her avatar was virtually gang raped while she was playing a game in the metaverse. Liability is complicated by the fact that both the victim and the alleged perpetrators were represented by avatars, which raises questions about the nature of the damages the victim suffered and how they translate into the physical world.

Cases like this highlight some of the thornier issues related to behavior in VR, AR and MR environments. For example, do participants in these XR environments view the virtual world as a safe place to act out behaviors they would not consider in the physical world? As we have seen on the internet, virtual environments provide a layer of anonymity that sometimes emboldens users to say and do things they would not do in the physical world. Does acting out illegal behavior in the virtual world desensitize the actor? If so, how does this carry over into the physical world, and will future defendants mount an XR defense?

Assigning responsibility for physical harm is often difficult, too. For example, who is responsible for accidents that occur because an immersed user is unaware of their physical surroundings? When such an accident happens in a user's home, the answer might seem straightforward: Attribute it to the user's physical environment or to faulty equipment. But what if the physical environment is a workplace or is controlled by a third party? Will a worker injured in XR be able to claim workers' compensation? What if the harm to the worker is emotional but has physical or performance effects?

2. New and challenging security issues

XR presents a host of security challenges. XR environments are an attractive target because of the value of the data they can capture. Breaking into VR headsets or other XR equipment gives an attacker not only access to a wealth of data that can be misused, duplicated or falsified, but also the ability to manipulate the environment to make the user physically uncomfortable, for example by inducing dizziness. Researchers at Louisiana State University, hired to test a popular app that lets people watch movies together in a virtual living room, found they could hack into the cameras and headsets of other people in the virtual room, raising privacy concerns. In other tests done for the company that owned the app, researchers were able to disorient users by deleting the virtual boundaries that mark the edge of their physical play area, causing them to walk into a wall.

Because XR peripherals are also entry points into the application, a hacker in cases like the one above gains not only that user's privileges but also a foothold for reaching other users of the application. A compromised application can, in turn, provide a pathway to the OS. Once in the OS, an attacker can plant spyware to access the user's personally identifiable information.

Countermeasures, meanwhile, are largely limited to patching vulnerabilities. Attacks are slow to be detected, and signature-based detection has well-documented limitations. Even alongside security products such as intrusion detection systems, security information and event management platforms and other reporting tools, the countermeasures check not for hacking attempts but for cheating -- which is not always an indication of hacking.

3. Cognitive hacking and other privacy issues

Perhaps the most serious concern related to the use of VR and other XR technologies centers on privacy. Data privacy is problematic, but more insidious is the exposure of individual mental models: By tracking eye movements and other involuntary responses, XR software can gain insight into a targeted individual's unconscious thought processes. This matters because unconscious thought is spontaneous and can reveal a person's inner ideas, which often override conscious efforts to make a decision. Capturing an individual's mental model lets whoever holds it understand that person's decision-making process and, in turn, gives the software owner the ability to make highly accurate predictions.

If we thought AI-enabled marketing software that recommends our next purchases was invasive, the capture of individual mental models is significantly worse. Not only can the software "own" the brain, but the data collected by AI-driven software can be added to the training data for other machine learning efforts. Thus, a user's mental models can ultimately become public training data. This concern is serious enough in gaming, but it is sharper still at work, where employees don't want their employers to know what they're thinking at all times.

The cognitive hacking aspect of XR is particularly troubling for mental well-being. People who struggle with mental health issues face an additional challenge in a world where software predictions can feel like mental invasions. Mental health treatment is challenging enough without adding immersion in a virtual world where the boundaries between reality and fantasy are, by design, blurred. In their paper "The Ethics of Realism in Virtual and Augmented Reality," Slater et al. postulated that frequent and long-term use of XR could lead users to prioritize the virtual world over the physical world. Although Slater and Sanchez-Vives found in a 2016 work that typical users can distinguish between physical and virtual reality, prioritizing one over the other is still problematic and can have unanticipated outcomes.

4. Placing too much trust in the technology

Another underexplored psychological dimension of VR and other XR technologies is how we respond to software. Issues range from a user's propensity to over-trust the software to traumatic events experienced in the virtual world that follow the user into the physical world.

The first problem, over-trusting technology, is reinforced regularly in daily life. Who hasn't been led astray by the GPS in their vehicle and then tried to use the same software to get out of the situation? Or consider the indiscriminate use of ChatGPT, even by users who know that AI models hallucinate. In many situations, people place their trust in machines and never verify that it is warranted.

XR carries similar risks, but they are worse, because users whose senses are occupied by the virtual environment are even more dependent on software feedback. Moreover, when software has a deep understanding of a user's mental model, the user may feel they have good reason to trust it without verification. Trusting software unquestioningly is a dangerous practice, because software is imperfect and prone to errors.

Will this time be different?

Throughout the modern age, technology has outpaced societal guardrails such as laws and ethics. Hitting pause is unrealistic, since imagination and curiosity -- intrinsic human traits that give rise to new tools -- overwhelm our fear of the new or unknown. If history is any guide, society will rush in blindly and deal with the fallout incrementally, and only after significant loss. Perhaps this time, the risks of VR, AR and other XR technologies will temper our curiosity, and we will adopt these powerful tools with open eyes and open minds.

Char Sample, DSc., is a leading expert in the field of quantitative cultural threat intelligence with over 30 years of professional experience in industry and academia.