Architecting beyond microservices and monoliths

A recent architectural change tied to Amazon's Prime Video service could help enterprise application teams understand their own microservices vs. monolithic architecture choices.

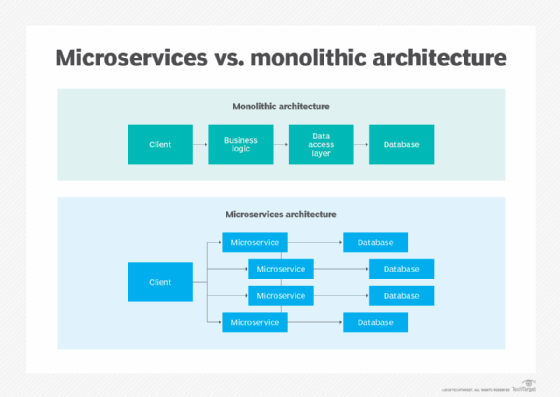

It's long been considered canon that monolithic applications are easy to get started with but hard to maintain. As the number of teams grows, changes in one place seem to generate problems in another, and broken builds halt integration of new features until the build problem is fixed. Common practice is to transform the monolithic application into a microservices architecture as the application grows, which allows many teams to make changes and deploy independently.

Rearchitecting from a monolithic to microservices architecture, however, is not necessarily an all-or-nothing proposition. There are many decisions and combinations between those two poles. A recent tweak behind the scenes for Amazon's Prime Video streaming service illustrates this.

Amazon Prime Video's monolith vs. microservices choice

Amazon's team encountered problems scaling an in-house monitoring tool built from distributed components, so they rebuilt it as a monolith -- or did they?

Consider the scale involved. Amazon Prime is one of the world's leading video services, with over 220 million subscribers, streaming video at 0.38-6.4 GB of bandwidth per hour. If a typical user streams one hour per day, about 9 million streams are running at any moment (220 million ÷ 24). At a mid-range rate of roughly 3.4 GB per hour, that comes to around 30 petabytes of data per hour.
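The 30-petabyte figure follows if viewing is spread evenly across the day. Below is a back-of-the-envelope sketch using the article's numbers. Taking the midpoint of the bitrate range is a simplifying assumption, not a figure from Amazon.

```python
# Back-of-the-envelope estimate of aggregate streaming bandwidth.
# Inputs come from the article; using the midpoint bitrate is an assumption.
SUBSCRIBERS = 220_000_000
HOURS_PER_DAY_PER_USER = 1
GB_PER_HOUR_LOW, GB_PER_HOUR_HIGH = 0.38, 6.4


def petabytes_per_hour(subscribers, hours_per_day, gb_per_hour):
    # Viewing spread evenly over 24 hours gives the average number of concurrent streams.
    concurrent_streams = subscribers * hours_per_day / 24
    # 1 PB = 1,000,000 GB (decimal units).
    return concurrent_streams * gb_per_hour / 1_000_000


mid_rate = (GB_PER_HOUR_LOW + GB_PER_HOUR_HIGH) / 2
for label, rate in [("low", GB_PER_HOUR_LOW), ("mid", mid_rate), ("high", GB_PER_HOUR_HIGH)]:
    pb = petabytes_per_hour(SUBSCRIBERS, HOURS_PER_DAY_PER_USER, rate)
    print(f"{label}: {pb:.1f} PB/hour")
```

With these inputs it prints roughly 3.5, 31.1 and 58.7 PB per hour for the low, midpoint and high rates, so "around 30 petabytes" corresponds to the midpoint.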

Also, in a microservices application, any request might route through a dozen subsystems. If a single component fails to handle load, that component can drag down the overall system's performance or even seize it up. On a social media platform this might break individual functionality such as messaging, alerts, or an image or video prompt. For a video streaming service such as Amazon Prime Video, that single function is everything.
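Two simple rules for a serial request path make the claim that one overloaded component can drag down the whole chain concrete. First, throughput is capped by the slowest stage. Second, if failures are independent, end-to-end availability is the product of each stage's availability. The following is a minimal sketch. The service names, capacities and availability figures are hypothetical.

```python
# Serial request path; names and numbers are hypothetical.
from math import prod


def chain_throughput(capacities):
    """Requests/second a serial chain can sustain: limited by its slowest stage."""
    return min(capacities.values())


def bottleneck(capacities):
    """The stage that sets that limit."""
    return min(capacities, key=capacities.get)


def chain_availability(availabilities):
    """Probability a request passes every stage, assuming independent failures."""
    return prod(availabilities)


capacities = {"gateway": 50_000, "auth": 40_000, "catalog": 30_000, "playback": 8_000}
print(chain_throughput(capacities), bottleneck(capacities))  # 8000 playback
print(f"{chain_availability([0.999] * 12):.3f}")             # 0.988
```

Even with a dozen stages each at 99.9% availability, the chain as a whole succeeds only about 98.8% of the time.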

According to Amazon's technical staff, the quality of service (QoS) monitoring software built for Prime Video was failing at only about 5% of its expected load. Meanwhile, costs were exploding. In their words, the software "performed multiple state transitions for every second of the stream, so we quickly reached account limits." Rather than bringing down the video service, the infrastructure kept respawning, which drove costs up.
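A toy model shows why doing orchestration work "for every second of the stream" can hit a hard ceiling long before full load. Every parameter here is a hypothetical value, chosen so that the limit lands at 5% of expected load to echo the article. None of these are Amazon's actual figures, and real service quotas and prices vary.

```python
# Toy model of per-second orchestration; every number is hypothetical.
EXPECTED_STREAMS = 10_000        # streams the monitor should eventually handle
TRANSITIONS_PER_STREAM_SEC = 4   # state transitions per second of each stream
ACCOUNT_LIMIT = 2_000            # account-level cap, transitions per second
PRICE_PER_TRANSITION = 0.000025  # USD; illustrative only


def transition_rate(streams, per_stream_sec=TRANSITIONS_PER_STREAM_SEC):
    """Total state transitions per second across all monitored streams."""
    return streams * per_stream_sec


def load_fraction_at_limit(expected=EXPECTED_STREAMS, limit=ACCOUNT_LIMIT):
    """Fraction of the expected load at which the account limit is reached."""
    return limit / transition_rate(expected)


def hourly_cost(streams, price=PRICE_PER_TRANSITION):
    """Per-transition pricing makes cost grow linearly with streams."""
    return transition_rate(streams) * 3600 * price


print(load_fraction_at_limit())  # 0.05
print(f"{hourly_cost(EXPECTED_STREAMS):,.0f} USD/hour at full load")
```

In this model, doubling the transitions per stream-second halves the load the design can reach and doubles the bill. That is why work done per second of every stream scales poorly under per-transition limits and pricing.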

In response, the Amazon Prime Video team returned the monitoring service to a monolithic approach. The company said this not only improved the tool's scalability but also cut its costs by 90%.

Some industry watchers have questioned Amazon's explanation of this new approach. They suggest that it isn't a monolith after all, and that something more complex is going on.

The service-level monolith

A monolith is a single compiled program. Any change in the program requires a recompile. The code exists in a single repository with shared code libraries. With collective code ownership, many people can touch the same modules in short periods of time. Even if you resolve merge conflicts, changes in the same module can introduce unintended consequences. Meanwhile, everything-at-once deployment, meaning updating all the software to the newest version at the same time, becomes untenable as you grow.

That isn't what happened with the rebuilt Amazon Prime Video monitoring service, and it isn't what happens in general. Larger systems often fall somewhere in the middle. Even the simplest modern web application has many different components, from the front end (HTML, JavaScript, CSS) to APIs to the database. Most web applications no longer follow the single-executable model, so teams can compile and deploy only the subsystem that changed rather than the entire application.
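To deploy only the changed subsystem, a team first has to map each change to the subsystem it belongs to. The following is a minimal sketch. It assumes each subsystem lives under its own directory prefix, and the layout and names are hypothetical. A real build system would also account for shared libraries that several subsystems depend on.

```python
# Map changed files to the subsystems that need rebuilding.
# Directory prefixes and subsystem names are hypothetical.
SUBSYSTEMS = {
    "frontend": "web/",
    "api": "services/api/",
    "db-migrations": "db/",
}


def affected_subsystems(changed_files, subsystems=SUBSYSTEMS):
    """Sorted names of subsystems whose directory contains at least one changed file."""
    hits = set()
    for path in changed_files:
        for name, prefix in subsystems.items():
            if path.startswith(prefix):
                hits.add(name)
    return sorted(hits)


changed = ["services/api/handlers.py", "services/api/models.py", "README.md"]
print(affected_subsystems(changed))  # ['api']
```

Files outside every known prefix, such as the README here, trigger no deployment. Whether that is the right policy is a choice for each team.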

The Amazon Prime Video example is a single subsystem that targets QoS, which is different from every other streaming component (and those are different from the rest of Amazon). Fundamentally, the old QoS service serialized the data, performed calculations through web services and deserialized the data back into storage. The new system, apparently, simply does the calculations in memory and saves the results.
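A small example can show the difference between the two designs. This is an illustration under stated assumptions, not Amazon's code. The frame records and the brightness check are hypothetical, and the "remote" hop is simulated in-process with JSON. Both versions compute the same answer. The distributed style simply pays for serialization and deserialization on every hop, and in a real deployment it would also pay for a network round trip.

```python
# Serialized hand-offs between stages vs. in-memory calculation.
# Frame data and the defect rule are hypothetical stand-ins.
import json


def detect_defect(frame):
    # Hypothetical QoS check: flag frames whose brightness is out of range.
    return frame["brightness"] < 0.05 or frame["brightness"] > 0.95


def distributed_style(frames):
    results = []
    for frame in frames:
        payload = json.dumps(frame)          # simulate sending the frame to another service
        received = json.loads(payload)       # the "remote" service deserializes it
        reply = json.dumps({"id": received["id"], "defect": detect_defect(received)})
        results.append(json.loads(reply))    # caller deserializes the response
    return results


def in_memory_style(frames):
    # Same calculation with no serialization boundary between steps.
    return [{"id": f["id"], "defect": detect_defect(f)} for f in frames]


frames = [{"id": i, "brightness": b} for i, b in enumerate([0.5, 0.01, 0.99, 0.4])]
assert distributed_style(frames) == in_memory_style(frames)
```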

If the new QoS service is a monolith, it is only so at the service level. That raises a different question: how to size the monolith to the right level.

If this concept fits a need for your enterprise apps team, consider the following options:

Repository. It might be possible to store code in a single repository, with discipline, proper loose coupling and separation of concerns. At web scale, that might mean fewer repositories, not one.

Cohesion. Combined microservices should serve a single cohesive business process. Another option is to put related microservices on the same virtual hardware, such as a single running Docker container. Combining services in the same container, especially ones that call each other, can reduce bandwidth and messaging overhead.

Feature vs. component teams. The monolith's aforementioned unintended-consequences problem happens when two teams change the same code. Component teams resolve this problem, because only one team works on a component at a time. However, component teams must coordinate with each other to deliver features that span components, and that coordination creates its own problems. Feature teams, which work across components, will want more separation between components, or looser coupling, which will increase costs.
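The cohesion advice suggests combining services that talk to each other heavily. One possible heuristic is to group services whose call volume between them exceeds a threshold, using a union-find structure. This heuristic is an assumption of ours, not a method the article or Amazon describes. Service names, call counts and the threshold are hypothetical.

```python
# Group "chatty" services into candidate deployment units with union-find.
# Service names, call counts and the threshold are hypothetical.


def group_services(services, calls, threshold):
    parent = {s: s for s in services}

    def find(s):
        # Follow parent links to the group's representative, halving the path as we go.
        while parent[s] != s:
            parent[s] = parent[parent[s]]
            s = parent[s]
        return s

    # Merge the groups of any two services that call each other often enough.
    for (a, b), count in calls.items():
        if count >= threshold:
            parent[find(a)] = find(b)

    groups = {}
    for s in services:
        groups.setdefault(find(s), []).append(s)
    return sorted(sorted(g) for g in groups.values())


services = ["ingest", "decode", "analyze", "billing", "invoices"]
calls = {("ingest", "decode"): 9_000, ("decode", "analyze"): 8_500,
         ("analyze", "billing"): 12, ("billing", "invoices"): 3_000}
print(group_services(services, calls, threshold=1_000))
# [['analyze', 'decode', 'ingest'], ['billing', 'invoices']]
```

Treat a grouping like this as a starting point only. Whether the grouped services serve one cohesive business process, and which team owns them, matters as much as call volume.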

Whether you want to reassemble microservices as a series of monoliths or decompose a monolith into a series of microservices, there's one more thing to consider: the design constellation.

Design constellations and dependencies

The classic way to test a service is to isolate it and mock out all immediate dependencies. At best, these mocks can use the dependencies' own tests as a contract for what they should return. Beyond that, the only option is to recreate the entire production environment, which is expensive and slow.
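Using "the tests of the dependencies as a contract" can mean keeping the expected request/response pairs in one place and running the same check against both the real dependency and the mock. The following is a minimal sketch with a hypothetical pricing service. Dedicated contract-testing tools add versioning and publishing, which this skips.

```python
# A mock that shares a contract with the real dependency.
# The pricing service, its contract and all names are hypothetical.

# The contract: example requests and the responses the service must give.
PRICE_CONTRACT = [
    ({"sku": "A1"}, {"sku": "A1", "price_cents": 499}),
    ({"sku": "B2"}, {"sku": "B2", "price_cents": 1299}),
]


class RealPriceService:
    """Stand-in for the real dependency; in practice this would call the network."""
    _prices = {"A1": 499, "B2": 1299}

    def quote(self, request):
        return {"sku": request["sku"], "price_cents": self._prices[request["sku"]]}


class MockPriceService:
    """Mock built directly from the contract, so its answers match the contract."""

    def __init__(self, contract):
        self._answers = {req["sku"]: resp for req, resp in contract}

    def quote(self, request):
        return self._answers[request["sku"]]


def satisfies_contract(service, contract):
    # The same check runs against the real service and the mock.
    return all(service.quote(req) == resp for req, resp in contract)


def checkout_total(price_service, skus):
    # Code under test: depends only on the quote() interface.
    return sum(price_service.quote({"sku": s})["price_cents"] for s in skus)


mock = MockPriceService(PRICE_CONTRACT)
assert satisfies_contract(RealPriceService(), PRICE_CONTRACT)
assert satisfies_contract(mock, PRICE_CONTRACT)
assert checkout_total(mock, ["A1", "B2"]) == 1798
```

If the real service changes and fails the shared contract check, the mock is known to be stale before any downstream test silently passes against it.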

However, if the dependencies are clear and the system uses a standard data seed, there is another option. Software can create a subset of just the dependencies of that service, perhaps in a Kubernetes or other cluster. Populate them with data, and developers can perform end-to-end testing that is highly reliable.
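Creating "a subset of just the dependencies of that service" is a graph traversal. The following is a minimal sketch. It assumes the dependency map is available as a Python dict, and the service names are hypothetical. The sketch collects a service's transitive dependencies and orders them so that each one can be started and seeded with data before the services that rely on it. Provisioning the cluster itself (Kubernetes or otherwise) is out of scope here.

```python
# Transitive dependency subset plus a start/seed order.
# Requires Python 3.9+ for graphlib; the dependency map is hypothetical.
from graphlib import TopologicalSorter

DEPENDS_ON = {
    "qos-monitor": ["stream-metadata", "alerts"],
    "alerts": ["notifications"],
    "stream-metadata": ["catalog-db"],
    "notifications": [],
    "catalog-db": [],
    "billing": ["catalog-db"],  # not needed to test qos-monitor
}


def dependency_subset(service, graph=DEPENDS_ON):
    """The service plus everything it transitively depends on."""
    needed, stack = set(), [service]
    while stack:
        current = stack.pop()
        if current not in needed:
            needed.add(current)
            stack.extend(graph.get(current, []))
    return needed


def start_order(service, graph=DEPENDS_ON):
    """Dependencies first, so each can be seeded before its dependents start."""
    subset = dependency_subset(service, graph)
    # TopologicalSorter takes node -> predecessors and yields predecessors first.
    sorter = TopologicalSorter({s: graph.get(s, []) for s in subset})
    return list(sorter.static_order())


print(sorted(dependency_subset("qos-monitor")))
print(start_order("qos-monitor"))
```

Services that the target does not depend on, such as `billing` here, are never started, which keeps the on-demand environment small.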

All that requires a dependency tree, and the ability to create on-demand test environments, likely in a cluster. Combining related services into a service-level monolith might make that a surprisingly easy task.
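Combining related services can shrink the graph that an on-demand environment has to stand up. The sketch below counts what must be started for one service, first per service and then after three services are collapsed into a single unit called "qos". The graph and the grouping are hypothetical. Fewer units does not necessarily mean less work inside each unit; it means fewer things to provision and wire together.

```python
# Collapse service-level dependencies into deployable units.
# The dependency graph and the service-to-unit mapping are hypothetical.
DEPENDS_ON = {
    "qos-monitor": ["stream-metadata", "alerts"],
    "stream-metadata": ["catalog-db"],
    "alerts": ["notifications"],
    "notifications": [],
    "catalog-db": [],
}
UNIT_OF = {
    "qos-monitor": "qos", "stream-metadata": "qos", "alerts": "qos",
    "notifications": "notifications", "catalog-db": "catalog-db",
}


def reachable(start, graph):
    """Start node plus everything it transitively depends on."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, ()))
    return seen


def unit_graph(graph, unit_of):
    """Dependencies between deployable units; calls inside one unit drop out."""
    units = {}
    for service, deps in graph.items():
        unit = unit_of[service]
        units.setdefault(unit, set()).update(
            unit_of[d] for d in deps if unit_of[d] != unit
        )
    return units


services_to_start = reachable("qos-monitor", DEPENDS_ON)
units_to_start = reachable(UNIT_OF["qos-monitor"], unit_graph(DEPENDS_ON, UNIT_OF))
print(len(services_to_start), sorted(units_to_start))
# 5 ['catalog-db', 'notifications', 'qos']
```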

Plan your architecture for future growth

Amazon CTO Werner Vogels once summed up evolution in software architecture: "With every order of magnitude of growth, you should revisit your architecture and determine whether it can still support the next order-level of growth."

Put differently, what got you here won't get you there. Calculate and graph system performance and rising costs as the system grows. If either curve steepens too quickly, consider an architectural change before a problem emerges. When you do, remember that in a monolith vs. microservices evaluation, the right choice might be somewhere between the two extremes.
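The advice to graph performance and costs can be turned into a simple check. The following is a minimal sketch that assumes monthly load and cost figures are available. The data, the 5% tolerance and the tenfold review trigger (modeled loosely on Vogels' order-of-magnitude remark) are all hypothetical choices. The check flags periods where cost grew noticeably faster than load.

```python
# Flag periods where cost grows faster than load; all data is hypothetical.
monthly_load = [100, 120, 140, 170, 200]   # e.g. thousands of streams monitored
monthly_cost = [10, 12, 14.5, 21, 30]      # e.g. thousands of dollars


def growth_rates(series):
    """Period-over-period relative growth; 0.10 means +10%."""
    return [(b - a) / a for a, b in zip(series, series[1:])]


def periods_to_review(load, cost, tolerance=0.05):
    """Periods where cost growth beat load growth by more than `tolerance`.
    Period i is the change from entry i-1 to entry i."""
    flagged = []
    for i, (load_g, cost_g) in enumerate(zip(growth_rates(load), growth_rates(cost)), start=1):
        if cost_g - load_g > tolerance:
            flagged.append(i)
    return flagged


def due_for_review(load_at_last_review, current_load):
    """Order-of-magnitude trigger: revisit the architecture once load has grown tenfold."""
    return current_load >= 10 * load_at_last_review


print(periods_to_review(monthly_load, monthly_cost))  # [3, 4]
print(due_for_review(100, monthly_load[-1]))          # False
```

In this made-up data, load has only doubled, so the order-of-magnitude trigger has not fired. However, cost has been outpacing load for two straight periods, which is the kind of slope change the advice above says to act on early.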