What is data privacy?

Data privacy, also called information privacy, is an aspect of data protection that addresses the proper storage, access, retention, immutability and security of sensitive data.

Data privacy is typically associated with the proper handling of personal data or personally identifiable information (PII), such as names, addresses, Social Security numbers and credit card numbers. However, the idea also extends to other valuable or confidential data, including financial data, intellectual property and personal health information. Vertical industry guidelines often govern data privacy and data protection initiatives. Regulatory requirements of various governing bodies and jurisdictions serve similar purposes.

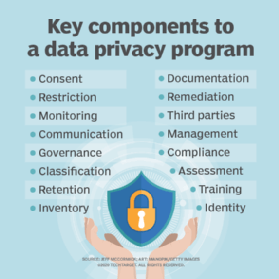

Data privacy isn't a single concept or approach. Instead, it's a discipline involving rules, practices, guidelines and tools to help organizations establish and maintain required levels of privacy compliance. Data privacy is generally composed of the following six elements:

- Legal frameworks. Prevailing legislation enacted and applied to data issues, such as data privacy laws.

- Policies. Established business rules and policies to protect employee and user data privacy.

- Practices. Best practices put in place to guide IT infrastructure, data privacy and protection.

- Third-party associations. Any third-party organizations, such as cloud service providers, that interact with data.

- Data governance. Standards and practices used to store, secure, retain and access data.

- Global requirements. Any differences or variations of data privacy and compliance requirements among legal jurisdictions around the world, such as the U.S. and European Union (EU).

Data privacy is a subset of data protection, which also includes traditional data protection approaches, such as data backup and disaster recovery, and data security. The goal of data protection is to ensure the continued privacy and security of sensitive business data while maintaining the availability, consistency and immutability of that data.

Why is data privacy important?

The importance of data privacy is directly related to the business value of data. The evolving data-based economy is driving businesses of all sizes to collect and store more data from more sources than ever before. Data is used for a range of business reasons, including the following:

- Serving customers. Data helps identify customers, understand their needs, and provide the goods and services they want.

- Understanding operations. Data from networks and devices helps a business understand and learn more about its infrastructure, facilities and human behaviors.

- Insights. Data from databases and other data sources provides insights that can improve the business.

- Training. Data can be used to train machine learning and AI systems.

Data privacy is a discipline intended to keep data safe against improper access, theft or loss. It's vital to keep data confidential and secure by exercising sound data management and preventing unauthorized access that might result in data loss, alteration or theft.

For individuals, the exposure of personal data might lead to improper account charges, privacy intrusion or identity theft. For businesses, unauthorized access to sensitive data can expose intellectual property, trade secrets and confidential communications. It can also adversely affect the outcome of data analytics.

Data privacy lapses, also referred to as data breaches, can have serious effects on all parties involved. Individuals affected by a data breach might find improper financial and credit activity in their name, compromised social media accounts, misused personal healthcare information, and other issues.

A business might face regulatory fines, lawsuits and irreparable damage to its brand and reputation. With the integrity of its data compromised, a business might lose faith in its data and need a response plan to convince customers it's trustworthy.

What are the laws of data privacy?

Regulatory legislation drives data privacy practices because government entities recognize the potential negative effects of data breaches on citizens and the greater economy. Numerous laws require and enforce data privacy functions and capabilities.

In the U.S., data privacy laws and regulations have been enacted in response to the needs of particular industries or sectors of the population. Examples include the following:

- Children's Online Privacy Protection Act. COPPA gives parents control over what information websites can collect from their children.

- Health Insurance Portability and Accountability Act. HIPAA ensures patient confidentiality for healthcare data.

- Electronic Communications Privacy Act. ECPA extends government restrictions on wiretaps to include transmission of electronic data.

- Video Privacy Protection Act. VPPA prevents the wrongful disclosure of an individual's PII stemming from their rental or purchase of audiovisual material.

- Gramm-Leach-Bliley Act. GLBA mandates how financial institutions must deal with an individual's private information.

- Fair Credit Reporting Act. FCRA regulates the collection and use of credit information.

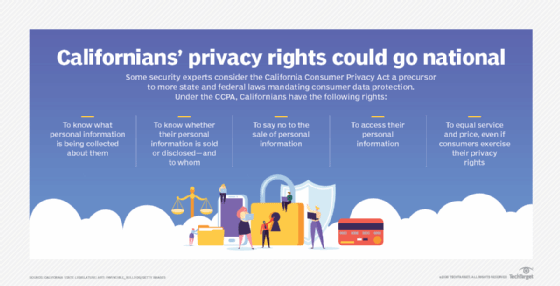

Some U.S. data protection laws are enacted at the federal level. States also enact data privacy laws. Examples of state-level data privacy laws include the California Consumer Privacy Act, California Privacy Rights Act, Virginia's Consumer Data Protection Act, Colorado Privacy Act, New York SHIELD Act, Utah Consumer Privacy Act, and Connecticut Data Privacy Act.

The EU has the General Data Protection Regulation (GDPR), which governs the collection, use, transmission and security of data on residents of its 27 member countries. GDPR regulates areas such as an individual's ability to consent to provide data, how organizations must notify data subjects of breaches and an individual's rights over the use of their data.

Data privacy vs. data security

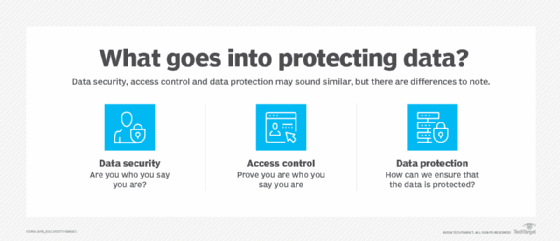

Data privacy and data security are closely related ideas, but they aren't interchangeable.

- Data privacy focuses on issues related to collecting, storing and retaining data as well as data transfers within applicable regulations and laws, such as GDPR and HIPAA.

- Data security is the protection of data against unauthorized access, loss or corruption throughout the data lifecycle. Data security can involve processes and practices, along with a variety of tools such as encryption, hashing and tokenization to guard data at rest and in motion.

Data privacy depends on data security: privacy can't exist without security, though security alone doesn't guarantee privacy.
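Two of the security tools named above, hashing and tokenization, can be sketched with Python's standard library. This is a minimal illustration, not a production design: `pseudonymize` and `TokenVault` are hypothetical names, the vault lives in memory only, and the key is generated on the spot, whereas a real system would keep keys in a secrets manager and the vault in a secured service.

```python
import hashlib
import hmac
import secrets


def pseudonymize(value: str, key: bytes) -> str:
    """Return a keyed SHA-256 digest (HMAC) of a sensitive value.

    The same value and key always give the same digest, so records can
    still be matched, but the digest does not reveal the original value.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


class TokenVault:
    """Hypothetical in-memory token vault, for illustration only."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so one value never maps to two tokens.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(8)  # random, unrelated to the value
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only callers with access to the vault can recover the original.
        return self._token_to_value[token]


key = secrets.token_bytes(32)  # assumption: stored in a secrets manager in practice
vault = TokenVault()
card_token = vault.tokenize("4111 1111 1111 1111")
ssn_digest = pseudonymize("078-05-1120", key)
```

The difference between the two is reversibility: a token can be mapped back to the original value by whoever controls the vault, while a keyed hash is one-way and is useful mainly for matching records without exposing the underlying value.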

Data privacy vs. data governance

Data governance is a broader concept encompassing both data privacy and security. It also includes additional concerns, such as data quality and management throughout the entire data lifecycle. Organizations handling data should have comprehensive data governance procedures in place, with data privacy being one key consideration within them.

Data privacy policies delve into specifics, outlining approaches and tools for accessing, using and transmitting private data. Organizations use data privacy policies to prove to external parties, such as regulatory bodies and stakeholders, that their data handling follows local, state and federal laws. A lot of regulatory compliance related to data is specific to data privacy. Data governance is an essential part of ensuring data privacy.

What are the benefits of data privacy compliance?

Proper data privacy compliance can yield four major benefits for a business:

- Lower storage costs. Storing all data forever can be costly and risky. Companies that make rational decisions about what data to collect and store, known as data minimization, and hold data for the minimum retention time reduce costs for primary and backup data storage.

- Better data use. Data is time sensitive. A business making better data collection and retention decisions can benefit from timely and better-quality data, which results in more accurate and relevant analytical results.

- Better business reputation and brand. The reputation of a business can be as important as its product or service. A business that successfully adopts and adheres to data privacy practices can demonstrate care for customer data and data privacy, leading to a better reputation and a stronger brand. Conversely, a business that experiences a major data breach can suffer irreparable damage to its reputation and brand.

- Regulatory compliance. Proper data privacy compliance can protect a business from the litigation and fines that come with data privacy breaches.
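The data minimization and retention ideas in the first benefit above can be sketched in a few lines. The field names, the one-year retention period and the sample records below are all assumptions made for illustration; actual retention periods come from company policy and applicable law.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the only fields this business task needs.
ALLOWED_FIELDS = {"customer_id", "order_total", "created_at"}
# Assumption: a one-year retention period, chosen only for the example.
RETENTION = timedelta(days=365)


def minimize(record: dict) -> dict:
    """Keep only the fields the task requires (data minimization)."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def within_retention(record: dict, now: datetime) -> bool:
    """True if the record is still inside the retention window."""
    return now - record["created_at"] <= RETENTION


def apply_policy(records: list, now: datetime) -> list:
    """Drop expired records, then strip unneeded fields from the rest."""
    return [minimize(r) for r in records if within_retention(r, now)]


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    {"customer_id": 1, "order_total": 40.0, "ssn": "078-05-1120",
     "created_at": datetime(2024, 6, 1, tzinfo=timezone.utc)},
    {"customer_id": 2, "order_total": 15.5, "ssn": "219-09-9999",
     "created_at": datetime(2022, 3, 1, tzinfo=timezone.utc)},
]
kept = apply_policy(records, now)  # one record survives, without its "ssn" field
```

Applying the same filter at collection time, rather than after storage, means the unneeded fields never reach primary or backup storage in the first place.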

What are the challenges of data privacy?

Data privacy isn't easy or automatic, and many businesses struggle to meet requirements and counter threats in an ever-changing regulatory and security landscape. Some of the biggest data privacy challenges include the following:

- Privacy is an afterthought. Many businesses deal with data privacy long after implementing a business model and IT infrastructure, leaving business and technology leaders scrambling to understand and address complex requirements. Data privacy should be treated as a fundamental business goal, with policies, training, tools and IT infrastructure designed to meet privacy needs from the ground up.

- Poor data visibility. The old axiom "you can't manage what you can't see" applies to data privacy. Organizations need a clear understanding of what data is present, what its level of sensitivity is and where it's located. Only then can a business make decisions about security and data privacy.

- Too much data. A business can be responsible for managing petabytes of data comprising various files, databases and stores located across storage devices and cloud repositories. It's easy to lose track of data, letting sensitive content elude security, privacy and retention guidance. A business must have the right tools and policies to manage enormous and growing data volumes.

- More isn't always better. Businesses are starting to understand that data must have context and value. Retaining all data forever is expensive and presents storage, protection, attack and legal discovery risks. Modern businesses must set balanced data retention policies about the amount of data collected, its value to the business and reasonable retention needs.

- Too many devices. Modern businesses must embrace remote access, wireless, bring your own device, internet of things, smart devices and other technologies. With all these moving pieces, it becomes harder to manage those devices while controlling data storage and access. Data privacy in this complex environment demands careful infrastructure management, strong access controls, comprehensive monitoring and well-considered data governance policies.

- Too many regulations. Any given business might be subject to data privacy regulations at various levels, including federal, state, provincial, municipal and industry. An enterprise that does business in multiple states, provinces or countries is likely subject to multiple prevailing controls. New requirements appear regularly, and they can change over time. This presents a vast, complex and fluid regulatory landscape.
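The data visibility challenge above usually starts with finding where sensitive values sit. The following is a rough sketch of a scanner that flags and redacts two kinds of PII in free text. The patterns are deliberately simplified assumptions; real data discovery tools use far more rules, validation and context than two regular expressions.

```python
import re

# Assumption: simplified patterns for illustration only.
PII_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def find_pii(text: str) -> dict:
    """Return each PII type found in the text with its matches."""
    found = {}
    for label, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            found[label] = matches
    return found


def redact(text: str) -> str:
    """Replace every match with a placeholder naming the PII type."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label.upper()}]", text)
    return text


sample = "Contact jane.doe@example.com about SSN 078-05-1120."
report = find_pii(sample)
clean = redact(sample)  # "Contact [EMAIL] about SSN [SSN]."
```

A report like this, run across files and database exports, gives a first inventory of what sensitive data is present and where, which is the input that retention and access decisions depend on.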

Important technologies for data privacy

Various technologies exist to assist organizations in their data privacy efforts. These include the following:

- Data governance platforms. Software tools are available to help organizations ensure data privacy and compliance. These platforms treat data as an asset and help business users manage it to comply with organizational privacy policies and broader regulatory requirements.

- Encryption. Private data can be scrambled to appear nonsensical to unauthorized observers. Only authorized users have access to encryption keys to decipher encrypted data.

- Multifactor authentication. MFA safeguards private data by requiring two or more authentication factors, ensuring only authorized personnel have access.

- Identity and access management. IAM tools, such as authentication and access controls, ensure only authorized personnel can access and modify private data.
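To make the MFA entry above concrete, here is a minimal sketch of one common second factor, a time-based one-time password as specified in RFC 6238, built on the HMAC-based scheme from RFC 4226. The `verify_login` helper and its `password_ok` flag are hypothetical stand-ins for a real login flow, and the secret is the published RFC test value, not something to use in production.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick the offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_login(password_ok: bool, secret: bytes, submitted: str, at=None) -> bool:
    """Hypothetical check: grant access only if both factors pass."""
    expected = totp(secret, at)
    # compare_digest avoids leaking information through comparison timing.
    return password_ok and hmac.compare_digest(expected, submitted)


secret = b"12345678901234567890"  # RFC test secret; never reuse in production
code_at_59 = totp(secret, at=59)  # "287082", matching the RFC test vectors
```

Because the code depends on both a shared secret and the current time window, a stolen password alone is not enough to pass `verify_login`.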

Tips to protect data privacy

There are countless guidelines and tips that can apply to data privacy. For individuals, data privacy can be reinforced with safeguards and actions such as the following:

- Select strong passwords and change them frequently.

- Use MFA or biometric identification for important accounts.

- Don't click links or buttons in unexpected or suspicious emails.

- Avoid providing PII that's unnecessary or not required.

- Use antimalware tools and keep those tools updated.

- Use only trusted apps and websites.

For businesses, privacy principles and guidelines are more extensive and complex. But they can include the following tactics:

- Collect as little data as possible to accomplish a business task.

- Require strong authentication, such as user passwords combined with MFA, or app credentials for application programming interfaces.

- Understand data sources, uses and storage locations.

- Use access monitoring and logging to track data access.

- Use encryption and other cybersecurity technologies to protect data at rest and in motion.

- Back up data and test restoration.

- Ensure any third-party storage providers, such as cloud storage providers, share data privacy requirements and techniques.

- Regularly educate employees, partners and customers about data privacy guidelines.
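The access monitoring and logging tactic in the list above can be sketched with Python's standard `logging` module. The role map, the `access_record` function and the user and record names are hypothetical; a real deployment would take permissions from an identity and access management service and ship log entries to a secured, tamper-resistant store rather than an in-memory buffer.

```python
import logging
from io import StringIO

# Assumption: a hypothetical role-to-permission map for the example.
ROLE_PERMISSIONS = {
    "support": {"read"},
    "admin": {"read", "write"},
}

log_stream = StringIO()  # in-memory sink, used here only to keep the example small
audit_log = logging.getLogger("data_access_audit")
audit_log.setLevel(logging.INFO)
audit_log.propagate = False
handler = logging.StreamHandler(log_stream)
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
audit_log.addHandler(handler)


def access_record(user: str, role: str, action: str, record_id: str) -> bool:
    """Allow or deny the action, and log the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    details = (user, role, action, record_id)
    if allowed:
        audit_log.info("ALLOW user=%s role=%s action=%s record=%s", *details)
    else:
        audit_log.warning("DENY user=%s role=%s action=%s record=%s", *details)
    return allowed


access_record("ana", "support", "read", "cust-42")
access_record("ana", "support", "write", "cust-42")
entries = log_stream.getvalue().splitlines()
```

Logging denied attempts as well as granted ones matters here: repeated denials against sensitive records are often the earliest visible sign of misuse.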

A business must also contend with privacy legislation and regulatory issues related to data storage and retention. All data privacy guidance should include a thorough understanding of regulatory requirements.

The future of data privacy

The amount of data generated globally has increased exponentially in recent years in large part because of the proliferation of internet-connected devices. This has led businesses to intensify their focus on data privacy and security. Business leaders realize that more data means a higher potential for cyberattacks and data breaches, which lead to legal or financial ramifications and assessments. As a result, business models will do more to incorporate data privacy protections going forward.

Data privacy will likely become a higher priority for most organizations because of multiple factors. New legislation is emerging that requires businesses and other organizations to adhere to data privacy principles, with particular emphasis on mitigating the risks associated with artificial intelligence. For example, the EU AI Act, which the European Parliament approved in March 2024, entered into force in August 2024. It includes guidelines and regulations for ensuring responsible use of AI.

Data privacy is one of the most challenging areas of IT security that businesses must contend with.