Pramote Lertnitivanit/istock via

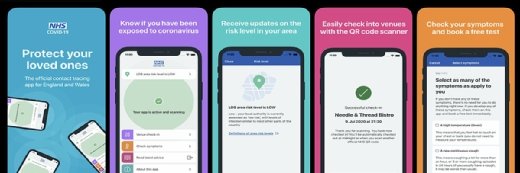

COVID-19 Contact Tracing Apps Spotlight Privacy, Security Rights

As tech giants like Microsoft, Google, and Apple move to craft the APIs behind COVID-19 contact tracing apps, privacy advocates rush to ensure the protection of privacy and cybersecurity rights.

Contact tracing app initiatives have emerged in the wake of the COVID-19 pandemic, as a modern enhancement to traditional methods for tracking the spread of the virus, finding new infections, and supporting the reopening of the economy.

Several tech giants and public health authorities across the globe have quickly signed on to build the application programming interfaces (APIs) and apps necessary to support the scale of the project. In the US, some states have implemented their own versions, while Microsoft has partnered with the University of Washington on a new app designed to help public health agencies.

But the rare partnership between Google and Apple has generated the most interest, given the companies’ past privacy concerns and the planned use of Bluetooth Low Energy technology to inform individuals when they’ve been exposed to someone who has COVID-19.

The American Civil Liberties Union, a group of 200 scientists, and the Electronic Frontier Foundation have all released reports outlining potential privacy and cybersecurity risks developers should consider when both building the API and drafting privacy policies.

For the ACLU, the concern lies with potential overreach, discrimination, and whether participation will be truly voluntary. The groups are also concerned that developers have not created an exit strategy for sunsetting the data generated during the pandemic once it has ended.

Google and Apple have responded to those concerns with a transparent list outlining their practices, as well as their plans to disable the service at the end of the pandemic.
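One hypothetical way to build an exit strategy into an app is sketched below in Python. It assumes an on-device log that stores only a random identifier, a coarse 10-minute time bucket, and a signal reading (no name or location), deletes entries older than an assumed 14-day window, and offers a single shutdown call that wipes everything and stops collection. `ContactLog` and its methods are illustrative names, not part of Google and Apple's API.

```python
from dataclasses import dataclass

RETENTION_SECONDS = 14 * 24 * 60 * 60  # assumed 14-day rolling retention window
INTERVAL_SECONDS = 10 * 60             # keep time only as a 10-minute bucket


@dataclass(frozen=True)
class Observation:
    # Only what exposure matching needs: no name, phone number, or location.
    identifier: bytes    # random, rotating identifier heard over Bluetooth
    interval: int        # coarse time bucket rather than an exact timestamp
    attenuation_db: int  # signal loss, a rough proxy for distance


class ContactLog:
    """Hypothetical on-device log with a retention window and a kill switch."""

    def __init__(self) -> None:
        self._observations: list[Observation] = []
        self._sunset = False

    def record(self, identifier: bytes, attenuation_db: int, now: float) -> None:
        if self._sunset:
            return  # after shutdown, nothing new is collected
        bucket = int(now // INTERVAL_SECONDS)
        self._observations.append(Observation(identifier, bucket, attenuation_db))

    def purge_expired(self, now: float) -> int:
        """Drop observations older than the retention window; return the count."""
        cutoff = int((now - RETENTION_SECONDS) // INTERVAL_SECONDS)
        kept = [o for o in self._observations if o.interval >= cutoff]
        removed = len(self._observations) - len(kept)
        self._observations = kept
        return removed

    def sunset(self) -> None:
        """End-of-pandemic exit: delete everything and stop collecting."""
        self._observations.clear()
        self._sunset = True

    def __len__(self) -> int:
        return len(self._observations)


# Usage with made-up timestamps.
DAY = 24 * 60 * 60
start = 1_590_000_000.0
log = ContactLog()
log.record(b"\x01" * 16, 60, now=start)
log.record(b"\x02" * 16, 48, now=start + 10 * DAY)
print(log.purge_expired(now=start + 15 * DAY), len(log))  # 1 1
log.sunset()
print(len(log))  # 0
```

Routine purging limits how much history exists at any moment, while the separate `sunset()` call is the kind of explicit off-switch the ACLU's exit-strategy concern points to.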

Despite those assurances, a study by the Washington Post and the University of Maryland found that three in five Americans would be either unwilling or unable to use the contact tracing system. The trouble is that the success of these apps rests on user participation.

That raises the question: Can these privacy and security risks be overcome to assure individuals that their data will be used only in the fight against the spread of COVID-19?

As Google and Apple released their API on May 20, with privacy concerns still prominent, HealthITSecurity.com spoke with a range of privacy and security leaders to dig deeper into these concerns and into the functions developers must consider to restore individuals’ trust in these technologies.

“The COVID-19 pandemic is presenting novel privacy and security challenges as public health authorities navigate the prospect of widespread contact tracing initiatives,” said Sherrese Smith, vice-chair of Paul Hastings’ Data Privacy and Cybersecurity practice. “Privacy laws and regulations continue to change, as do consumer expectations on the use of data by both public and private entities.”

“How the COVID-19 response evolves will have significant impacts on how data protection laws are crafted and implemented in the future,” she continued. “This is particularly true in the United States, which has yet to adopt a national privacy protection law and could see significant legal changes in the next few years.”

The Crux of Privacy Concerns

Contact tracing apps are integral to tracking and stopping the spread of COVID-19, and people willing to turn over their personal information to “do their part” expect to give up some degree of personal privacy in doing so, explained attorney Jena Valdetero, a partner at Bryan Cave Leighton Paisner.


However, it is crucial that people understand what they’re giving up in exchange for participation and its benefits.

For Valdetero, the risks arise when apps make it possible to identify particular users, either because the app relies on GPS to track people’s movements or because users live in sparsely populated areas where it is “difficult to truly anonymize the data.”

Many of the proposed apps will rely on geo-location tracking, which raises another issue: whether individuals understand that they’re giving the app an “almost uncomfortable degree of insight into their daily activities.”

“While there is a clear public health need and individual benefit to people to participate in contact tracing, most people are uneasy about the idea that the government, or a hacker, may be able to tell exactly where they go and when,” Valdetero said.

“The prevailing view seems to be that Bluetooth technology will address the concern that the apps will be able to identify the users individually, which is true, and is particularly important if the information is going to be shared with the government,” she added.

Even so, anyone the user comes in contact with may be able to infer who reported a positive result from their own interactions, and Valdetero is unsure how app developers could address that risk.

Smith shared these concerns, noting that even Bluetooth-based proximity tracing could reveal a person’s infection status. People with a limited number of physical contacts could easily use their own device’s contact log to identify the family and friends who self-reported a positive infection status through a contact tracing app.

To Smith, the risks also include over-inclusive results and increased surveillance.

“Many advocates are also concerned about the risk of false-positives and knock-on effects for individuals that have been notified of involuntary exposure,” she said. “Bluetooth was not designed for contact-tracing, so it is a somewhat blunt tool for identifying potential COVID-19 exposure.”

“Proximity is only one factor in virus exposure and may be less significant than other factors, such as wearing a face mask,” she added. “Relying solely on proximity risks creating a database that overestimates potential exposure events. If a ‘clean’ proximity record becomes necessary for some individuals to go back to work or get life insurance, over-inclusion can result in real-world negative consequences.”
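Smith’s point about proximity can be made concrete with a toy rule. The Python sketch below assumes a hypothetical detector that sees only two numbers per encounter, Bluetooth signal attenuation and duration, and flags anything close enough for long enough. The thresholds and encounters are invented for illustration and are not taken from any real app.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
CLOSE_ATTENUATION_DB = 55  # signal loss at or below this counts as "close"
MIN_MINUTES = 15           # minimum time in range to count as an exposure


@dataclass
class Encounter:
    context: str          # what actually happened; the app never sees this
    attenuation_db: int   # Bluetooth signal loss; lower roughly means nearer
    minutes: int          # how long the two phones stayed in range


def flagged(e: Encounter) -> bool:
    """Proximity-only rule: close enough for long enough, nothing else."""
    return e.attenuation_db <= CLOSE_ATTENUATION_DB and e.minutes >= MIN_MINUTES


encounters = [
    Encounter("unmasked conversation across a table", 50, 30),
    Encounter("both riders masked, side by side on a bus", 50, 30),
    Encounter("neighbor on the other side of a thin apartment wall", 52, 240),
    Encounter("brief pass in a hallway", 45, 2),
]

for e in encounters:
    print("FLAG" if flagged(e) else "ok  ", e.context)
```

Three of the four encounters are flagged, including the masked bus ride and the neighbor behind a wall, because nothing the rule can observe distinguishes them from an unmasked conversation. That blind spot is the over-inclusion Smith describes.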

Further, data needs to be collected at scale for these contact tracing apps to be effective, Smith stated. Many therefore worry, perhaps rightly, that systems designed to contain COVID-19 will end up serving as the basis for more widespread digital surveillance in the future.

Heather Federman, vice president of privacy and policy at data privacy firm BigID, took it a step further, explaining that concerns around data retention and secondary use are valid. After 9/11, surveillance under FISA swept up a wide range of data through consumer-facing applications, and that data was later found to be used for other purposes. The collection was also meant to sunset after a specific period of time, but provisions remain in place that allow it to continue.


Right now, Congress is considering competing legislation designed to address some of these issues and ensure collection ends after the pandemic, but Federman mused: “How do we know that it’s actually going to happen?”

“It goes to a greater point that a lot of advocates have made that these apps could open a door to more surveillance,” Federman said. “Right now, apps are being developed specifically around COVID-19, but who’s to say they wouldn’t be used to determine who’s vaccinated or for performing a general health check.”

“We have to be sure that these apps will not be used by governments for increased unwarranted public surveillance,” said Kelvin Coleman, executive director of the National Cybersecurity Alliance (NCSA). “Although it’s still in its early days, and we haven’t seen anything conclusive here, it’s something people should be thinking about moving into the post-COVID-19 world.”

Coleman added that these apps are “also a double-edged sword” when it comes to privacy. The apps will be instrumental in notifying people of potential exposures, but they will also expose more private information about those reentering society. The concerns raised by advocates are valid, he said, as mass data collection and centralized storage make data breaches more likely.

The apps could also widen the range of threat vectors, from phishing scams to ransomware, he explained.

“There are warnings about the risks that have already had some impact and have helped Google and Apple limit and constrain their approach,” said Tom Pendergast, MediaPRO’s chief learning officer. “But the risks are just huge in terms of collecting really sensitive personal information.”

“Who has access to it? And how do we put limitations on what can be done with it? And how do we sunset the data? And if we start to identify certain people as infected, the data will be more susceptible to ending up in the wrong hands or to government overreach. Those individuals could be stripped of basic, even human, rights,” he added. “I don’t think privacy risks get any more fundamental than that.”

If the information ends up in the wrong hands or is used in an inappropriate way, people could be stripped of their rights, stressed Pendergast.

Smith added: “Balancing urgent public health needs and the slow erosion of privacy rights over the long term is an important public policy issue that is being prompted by these unprecedented times.”

The Potential for Overreach

One of the biggest concerns the ACLU brought to light is the potential for overreach. And while Congress is working to craft privacy legislation for COVID-19 contact tracing, Federman explained that it’s highly unlikely legislators will reach a compromise before these apps are released.

The lack of a comprehensive privacy law has only fueled concern around the use of these apps, explained Coleman. Another concern is exactly what information can be accessed, and by which organizations.


Those surveillance concerns stem from instances in the UK and India, where, Coleman said, citizens have experienced unprecedented watchdog scrutiny. While the US has broader freedoms, without a federal privacy law “transparency could be up to developers’ discretion. Whether the pendulum swings in favor of the people’s privacy remains to be seen.”

“The problem is that it’s hard to control overreach,” Federman said. “It comes down to accepting that you can’t always control for everything, but we do our best to try and control the system and ensure the initial setup has all of these privacy controls in place, especially around secondary data use.”

“But it must also come with an internal audit and risk assessment into how the tech is using data, and whether they’re using it on a personal level,” she continued. “Some aggregate data could be useful… but if they’re using data for any additional purpose beyond contact tracing, stakeholders must have users’ rights in place through an ethical review.”

The key will be a mix of external transparency and contractual controls in the agreements between developers and third-party apps. Valdetero noted that third-party apps could be tempted to use the data collected by contact tracing apps for purposes not contemplated at the time the information was collected.

As a result, developers must draft and provide users with clear and comprehensive privacy policies that outline the precise data they’ll collect, how it’s used, and with whom it will be shared, Valdetero said.

The policy will need to clearly state that the data will be used only for contact tracing and addressing the pandemic, and not for other purposes, she explained. And if the intended use of the data changes during that time, for example if researchers learn the data could help with COVID-19 in another way, developers must first get opt-in permission from users.

“Every disclosure of personal information comes with some latent risk that it will be used in the future for purposes not disclosed at collection. Location data that could potentially be linked to individual users can be quite valuable,” Smith said. “This is why it is critical for developers to minimize data collections and build in privacy by design wherever possible.”

“With privacy, an ounce of prevention is better than a pound of cure,” she added. “So before signing up for these apps, users should take the time to understand the data that is collected, applicable privacy policies, and the app provider’s reputation. Privacy protection is a competitive advantage these days and enforcement of privacy laws is widespread, so companies take significant risks when they use data for novel purposes.”

Inherent Cybersecurity Risks

Several proposed contact tracing apps will rely heavily on Bluetooth technology to track individuals and potential exposures. Smith stressed that any contact tracing system will come with its own inherent security risks, but Bluetooth-based platforms in particular are vulnerable to correlation attacks.

In these exploits, hackers leverage external data, such as photos, video, or facial recognition templates, to re-identify people in anonymized data.

“For example, it is conceivable that a bad actor could install a video camera outside of a health clinic and later pair the video with data from the contact tracing app to link anonymized keys to individual faces,” Smith said.

“While certainly possible at the local level, it does seem somewhat unlikely that such correlation attacks would be scalable by bad actors,” she added. “It would likely require the physical installation of video cameras and Bluetooth beacons on a large scale and may not prove attractive to hackers.”
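The clinic scenario Smith describes boils down to joining two timestamped logs. The Python sketch below uses invented data: a Bluetooth sniffer’s record of anonymous identifiers, a camera’s record of faces, and a set of identifiers the attacker has somehow learned belong to people who reported positive (an assumption; in decentralized designs, such identifiers can be derived from the keys that positive users publish). The 10-second matching window is likewise an assumption, and `link_positives` is a hypothetical helper.

```python
from datetime import datetime, timedelta

# Hypothetical log from a Bluetooth receiver outside a clinic: (time, identifier).
ble_sightings = [
    (datetime(2020, 5, 20, 9, 0, 5), "rpi-7f3a"),
    (datetime(2020, 5, 20, 9, 12, 40), "rpi-c921"),
    (datetime(2020, 5, 20, 9, 30, 10), "rpi-04be"),
]
# Hypothetical log from a camera at the same spot: (time, face).
camera_sightings = [
    (datetime(2020, 5, 20, 9, 0, 9), "face-A"),
    (datetime(2020, 5, 20, 9, 12, 38), "face-B"),
    (datetime(2020, 5, 20, 9, 45, 0), "face-C"),
]
# Identifiers the attacker later learns belong to people who reported positive.
reported_positive = {"rpi-c921"}

WINDOW = timedelta(seconds=10)  # assumed tolerance for "seen at the same moment"


def link_positives(ble, camera, positives, window):
    """Pair each positive identifier with any face seen within the time window."""
    links = []
    for t_ble, rpi in ble:
        if rpi not in positives:
            continue
        for t_cam, face in camera:
            if abs(t_ble - t_cam) <= window:
                links.append((rpi, face))
    return links


print(link_positives(ble_sightings, camera_sightings, reported_positive, WINDOW))
```

It prints `[('rpi-c921', 'face-B')]`. The computation is trivial; the expensive part is the one Smith identifies, which is deploying cameras and Bluetooth receivers widely enough to make the attack pay off.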

But for Smith, the biggest security challenge will be the critical Bluetooth vulnerability known as BlueFrag. Reported only in February, the flaw has still not been fixed on some Android devices, and it could allow a remote threat actor to silently execute arbitrary code when Bluetooth is enabled.


And with the widespread adoption of Bluetooth-based contact tracing apps, the number of Bluetooth-activated devices would also increase, as would the risk surrounding existing Bluetooth vulnerabilities, explained Smith.

There are well-documented flaws in Bluetooth technology that make it an exploitable channel for attackers, and some of those flaws are found in medical devices. Coleman said that because these apps plan to leverage Bluetooth for tracing, hackers will certainly launch cyberattacks against the technology.

Key Security Requirements

To protect privacy and reduce cybersecurity risks, app developers will need to implement key security requirements. Coleman explained that those measures must be built upon compliance with privacy regulations.

Once compliance is established, the biggest necessity will be for developers to actively push users to adopt personal security measures, “especially given the dependence on Bluetooth with these apps.”

“Google and Apple have already taken a good first set of steps to better ensure privacy by barring the use of location data tracking in their contact tracing API,” Coleman said. “Other government agencies or private sector developers should ideally follow the same example.”

“They should also be transparent in communicating to users the vulnerabilities surrounding Bluetooth functionality, why enabling it on devices should be done on an as-needed basis, and the importance of using encryption measures and enabling MFA for any apps that use or collect personally identifiable information.”

Further, given the Bluetooth flaws, users will also need to be cautious when using the technology, and developers will need to encourage users to keep their smartphone software up to date and fully patched.

Coleman explained that hackers commonly launch malicious clones in app stores, and such clones are highly probable once these apps officially launch. That means users will also need to be educated on the importance of downloading only official apps.

Valdetero added that it’s easy to “see how threat actors could lure unsuspecting users into downloading fake ones.” Users must also make sure their firmware is up to date, while developers must ensure individuals understand the importance of using the most current version of the application.

Those designing these apps should also constantly check the platforms for any vulnerabilities.

“Taking those precautions into account, Bluetooth tracing methodologies have won favor over location-based contact tracing alternatives, which would leave these tools more easily exploitable for mass surveillance uses,” Coleman said.

“Location data would allow active mapping to ID who might be meeting who, as well as when or where meetings between individuals are taking place,” he added. “Google and Apple have already ensured that governments using their joint API to develop contact tracing tools are barred from enabling location data tracking. This also mitigates the potential impacts of breaches against centralized data servers.”

Further, developers will need to monitor the contact tracing apps for vulnerabilities and issue software updates on a routine basis. Coleman stressed the need for vigilance and rapid response to overall security gaps to keep people safe at scale.

If contact tracing apps are expected to be the key tool for reducing the spread of COVID-19, Coleman added, these measures will be critical.

Valdetero made another crucial point: “Unless the apps have the ability to cross-communicate, the best chance of the apps meeting their stated purpose is to have everyone utilize the same app.”

“My understanding is that cross-communication functionality is part of the plan, particularly as people begin to travel between various countries and it will be important to trace travelers’ movements to identify whether and how the virus is spreading,” she added.

Ensuring the data is anonymized will also be crucial, as will data minimization, Smith explained. Developers must commit to collecting only the data contact tracing actually requires and sharing only the minimum information necessary to help contain the spread, which reduces the potential attack surface.

Fortunately, Smith explained that “many Bluetooth-based contact tracing apps have taken this to heart, using anonymized tokens and RPIDs to implement tracing and eschewing GPS-based solutions.”
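The anonymized tokens and rolling proximity identifiers (RPIDs) Smith mentions work roughly like this in Google and Apple’s design: each phone generates a random daily key, derives a short-lived identifier from it for every 10-minute interval, and broadcasts only that identifier. Matching happens on the phone, against daily keys that people who test positive choose to publish. The Python sketch below follows that outline, with HKDF key derivation and labels intended to mirror the published Exposure Notification cryptography specification (v1.2). One step is deliberately swapped: the spec encrypts each padded interval number with AES-128, while this sketch uses truncated HMAC-SHA256 because Python’s standard library has no AES, so its identifiers will not match real devices. All function names are hypothetical.

```python
import hashlib
import hmac
import secrets
import struct

INTERVAL_SECONDS = 600    # each identifier is broadcast for 10 minutes
INTERVALS_PER_KEY = 144   # one daily key covers 144 ten-minute intervals


def hkdf_sha256(ikm: bytes, info: bytes, length: int, salt: bytes = b"") -> bytes:
    """HKDF extract-then-expand (RFC 5869) built from the standard library."""
    prk = hmac.new(salt or bytes(32), ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def rolling_identifier(daily_key: bytes, interval: int) -> bytes:
    """Derive the 16-byte identifier broadcast during one 10-minute interval.

    STAND-IN: the published spec encrypts the padded block with AES-128; this
    sketch uses truncated HMAC-SHA256 because Python's stdlib has no AES.
    """
    id_key = hkdf_sha256(daily_key, b"EN-RPIK", 16)
    padded = b"EN-RPI" + bytes(6) + struct.pack("<I", interval)
    return hmac.new(id_key, padded, hashlib.sha256).digest()[:16]


def identifiers_for_day(daily_key: bytes, first_interval: int) -> set:
    """Every identifier a phone would have broadcast under one daily key."""
    return {rolling_identifier(daily_key, first_interval + i)
            for i in range(INTERVALS_PER_KEY)}


def find_exposures(observed: set, published_keys: list) -> set:
    """On-device matching: re-derive identifiers from the daily keys that
    positive users chose to publish and intersect them with what this phone
    overheard. The phone's own observations are never uploaded."""
    matches = set()
    for daily_key, first_interval in published_keys:
        matches |= observed & identifiers_for_day(daily_key, first_interval)
    return matches


# Usage with made-up values.
alice_key = secrets.token_bytes(16)  # Alice's random daily key
day_start = (1_590_000_000 // INTERVAL_SECONDS) // INTERVALS_PER_KEY * INTERVALS_PER_KEY
bob_heard = {  # Bob's phone logged two of Alice's broadcasts and a stranger's
    rolling_identifier(alice_key, day_start + 50),
    rolling_identifier(alice_key, day_start + 51),
    bytes(16),
}
published = [(alice_key, day_start)]  # Alice tests positive and uploads her key
print(len(find_exposures(bob_heard, published)))  # 2
```

This is why the design limits the impact of the server breaches Coleman raised: the server holds only the daily keys that positive users uploaded, and the question of who was near whom is answered on each phone.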

In the end, cybersecurity can be maintained by developers opening up their code to scrutiny from outside groups that can verify the app’s security, explained Pendergast. This should include auditing, pen testing, and an array of other security practices that will prove crucial to the app’s development.

It’s critical that developers adopt the highest level of data protection practices, following the General Data Protection Regulation (GDPR) and HIPAA practices. Pendergast added that developers must be “totally transparent and above board.”

“Practically speaking, app developers should work with privacy counsel to develop clear consent mechanisms, plain-language privacy notices, and easy-to-use opt-out procedures. These are critical to ensuring transparency and fully informed consent from users,” Smith concluded.