
Hard Stops in EHRs, Clinical Decision Support Can Improve Care

The judicious use of hard stops in EHR workflows and clinical decision support systems can improve performance on process measures and lead to better care.

Clinical decision support systems that use hard stops, in which a response is required before a user can move forward with a task, are associated with higher performance on both process and outcomes measures, according to a new study published in JAMIA.

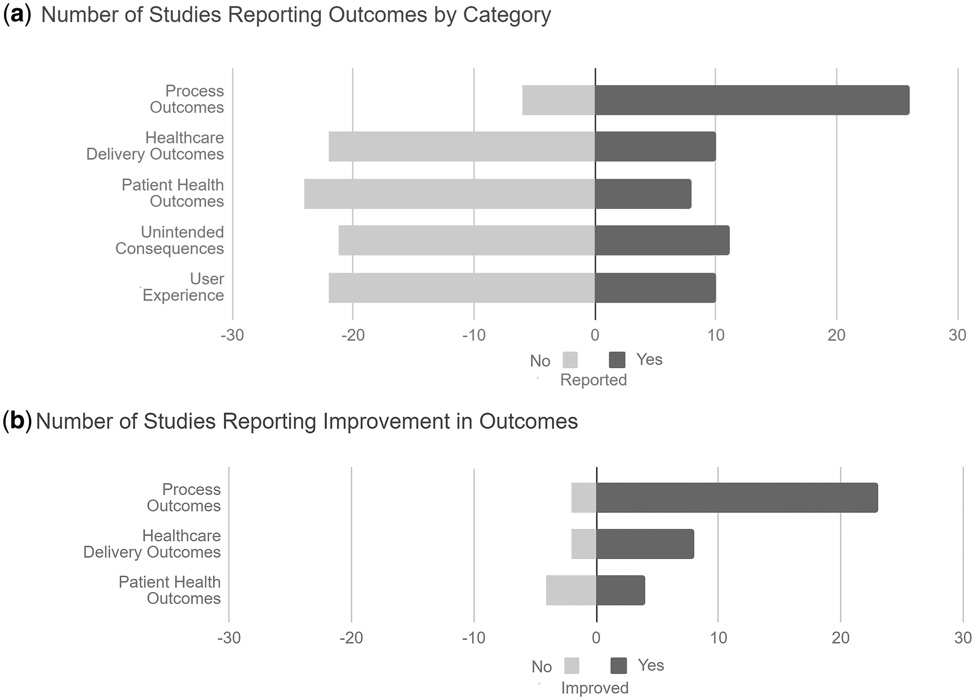

The literature review of 32 articles found that well-designed CDS workflows with hard stops improved performance on process measures in 79 percent of studies, while outcomes improved in 88 percent of the articles included in the sample.

Hard stops were also found to be more effective than soft stops, which allow the user to override the suggestion, in three of the four studies that compared the two.

The authors are quick to note, however, that performance improvements were only achieved in organizations that embraced user feedback and leveraged iterative design to create intuitive and streamlined workflows.

“An alert—an automatic warning message meant to communicate essential information to the clinician using an EHR—is now generated for 6 percent to 8 percent of all orders entered into an EHR by providers,” explained the team of researchers from Yale University.

“Each of these alerts represents an intention to provide useful information to the clinician, shape clinician behavior, and positively impact patient safety and outcomes.”

However, EHR alerts have also produced serious unintended consequences: alarm fatigue and physician burnout.

Previous studies have shown that EHR users receive dozens of notifications during the course of a typical day, many of which are low-value or not relevant to the task at hand. Providers may spend up to an hour a day simply sorting through these alerts, which produces a highly frustrating user experience.

The high number of notifications that add little value to the clinical decision-making process leads to desensitization.

Users are more apt to ignore important alerts when they are overwhelmed with pointless data. With clinicians immediately dismissing up to 95 percent of all alerts, noted the Yale team, vital information is easy to miss.

To combat desensitization and fatigue, EHR developers and clinical decision support designers have created three tiers of alerts: hard stops, soft stops, and passive alerts.

“We define hard-stop alerts as those in which the user is either prevented from taking an action altogether or allowed to proceed only with the external override of a third party,” said the team. “The most common alert trigger was order placement for the medication or test that was the CDS target.”

In the studies included in the review, hard stops were programmed into workflows as interruptive pop-up notifications or less intrusive inline requirements to fill out specific data elements before being allowed to continue to another task.

“Soft-stop alerts are those in which the user is allowed to proceed against the recommendations presented in the alert as long as an active acknowledgement reason is entered,” the study continued.

Passive alerts present information to the user, but do not require any action and do not interrupt the workflow.
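The three tiers can be read as a simple rule for whether a user may continue past an alert. The following is a minimal illustrative sketch based only on the definitions quoted above, not code from the study or from any EHR product; the names `AlertTier`, `may_proceed`, `acknowledgement_reason`, and `third_party_override` are hypothetical.

```python
from enum import Enum
from typing import Optional


class AlertTier(Enum):
    """Hypothetical labels for the three alert tiers described in the article."""
    HARD_STOP = "hard_stop"
    SOFT_STOP = "soft_stop"
    PASSIVE = "passive"


def may_proceed(
    tier: AlertTier,
    acknowledgement_reason: Optional[str] = None,
    third_party_override: bool = False,
) -> bool:
    """Return True if the user may continue with the action that triggered the alert."""
    if tier is AlertTier.PASSIVE:
        # Passive alerts only present information; they never block the workflow.
        return True
    if tier is AlertTier.SOFT_STOP:
        # Soft stops let the user proceed once a non-empty acknowledgement reason is entered.
        return bool(acknowledgement_reason and acknowledgement_reason.strip())
    # Hard stops block the action unless a third party overrides the alert.
    # The user's own acknowledgement reason is deliberately ignored here.
    return third_party_override


# Example: a soft stop with a documented reason passes; a hard stop with the same reason does not.
print(may_proceed(AlertTier.SOFT_STOP, "Patient previously tolerated this drug"))
print(may_proceed(AlertTier.HARD_STOP, "Patient previously tolerated this drug"))
```

In this sketch, the only difference between a hard stop and a soft stop is who can clear the alert: the ordering clinician, by documenting a reason, or someone else entirely.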

The key to creating a workflow that meets the needs of the user while preventing potential harm to patients is carefully balancing hard stops with other methods of communication.

Most of the implementations included in the review managed to achieve this goal well enough to improve the delivery of care, the researchers said.

Of the 15 studies that evaluated healthcare delivery outcomes, 11 showed improvement in the target metric. Half of the studies focusing on patient health outcomes also showed positive results from hard stops in CDS tools.

These gains were notable across multiple clinical disciplines and settings of care. Separate studies reported improvements in deep venous thrombosis (DVT) rate and preventable venous thromboembolism (VTE) events in trauma patients, decreases in drug hypersensitivity reactions, increases in the detection of ankle fractures in urgent care settings, and decreases in unnecessary MRI testing for lower back pain.

Four studies specifically compared the use of hard stops to soft stops. Three of those studies indicated that hard stops are superior to less interruptive alerts for achieving desired outcomes.

“The fourth study found that both soft-stop and hard-stop alerts reminding the providers to complete restraint renewal orders improved ordering rates over the alert-free baseline but found no difference between the two alert styles in median restraint order renewal time or number of restraint orders,” the team observed.

“Only one study directly compared the impact of a hard-stop vs. soft-stop alert on a healthcare delivery outcome and showed a significant cost savings using the hard-stop alert over the soft-stop alert to prevent unnecessary duplicate lab orders. No studies compared adverse event rates between the two alert styles.”

However, the results were less uniformly positive when researchers examined the impact of hard stops on user satisfaction with workflows.

Three of the ten studies reporting on user experiences found poor acceptance of hard stops within the workflow, which resulted in creative workarounds to dodge interruptions.

In one study, “after implementation of a hard stop on documentation of chemotherapy intent in a clinical note template, no increase in documentation was shown, and users reported avoiding use of the template because of the hard stops.”

In another, the authors theorized that users were actively entering incorrect data in certain fields simply to avoid the threshold that would trigger a hard stop alert.

The third study found that a mandatory pain assessment protocol did not fare well with physicians, who felt the tool added administrative burdens without improving their decision-making.

“Many providers chose the fastest way through the module, even if it produced inaccurate and contradictory clinical documentation to limit time spent in the tool,” the study said.

The studies that saw higher rates of user acceptance “attributed this to comprehensive implementation plans involving user engagement, multiple iterations, and rapid response to feedback,” said the Yale team. “Two additional studies stated their interventions were well accepted with one specifying that acceptance was likely tied to low frequency of hard-stop alerts.”

Overall, the team believes that hard stops have an important role to play in clinical decision support systems and electronic health records, but more study will be required to identify the ideal deployment of interruptive alerts.

“Because [hard stops] can be such powerful tools, effectively prohibiting specific actions on the part of the provider, they need to be carefully implemented with diligent assessment of possible harms and continuous user involvement in the design and implementation process,” the authors cautioned.

“A lack of user testing and iterative design is more likely to lead to unintended consequences and error-prone systems and must be addressed in accordance with the growing body of literature on this topic. It is imperative that we approach the study of CDS with the same rigor with which we approach more traditional healthcare interventions.”