What Is Deep Learning and How Will It Change Healthcare?

What is deep learning, why is it significant, and how will this innovative artificial intelligence strategy change the healthcare industry?

Healthcare organizations of all sizes, types, and specialties are becoming increasingly interested in how artificial intelligence can support better patient care while reducing costs and improving efficiencies.

Over a relatively short period of time, the availability and sophistication of AI have exploded, leaving providers, payers, and other stakeholders with a dizzying array of tools, technologies, and strategies to choose from.

Just learning the lingo has been a top challenge for many organizations.

There are subtle but significant differences between key terms such as AI, machine learning, deep learning, and semantic computing.

Understanding exactly how data is ingested, analyzed, and returned to the end user can have a big impact on expectations for accuracy and reliability, not to mention influencing any investments necessary to whip an organization’s data assets into shape.

In order to efficiently and effectively choose between vendor products or hire the right data science staff to develop algorithms in-house, healthcare organizations should feel confident that they have a firm grasp on the different flavors of artificial intelligence and how they can apply to specific use cases.

Deep learning is a good place to start. This branch of artificial intelligence has very quickly become transformative for healthcare, offering the ability to analyze data with a speed and precision never seen before.

But what exactly is deep learning, how does it differ from other machine learning strategies, and how can healthcare organizations leverage deep learning techniques to solve some of the most pressing problems in patient care?

Deep learning in a nutshell

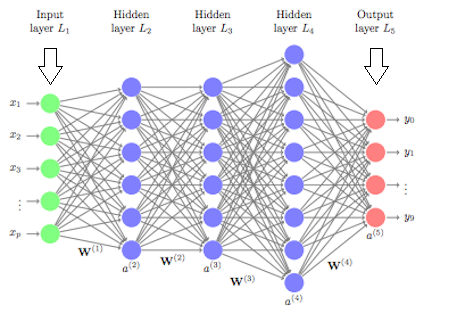

Deep learning, also known as hierarchical learning or deep structured learning, is a type of machine learning that uses a layered algorithmic architecture to analyze data.

In deep learning models, data is filtered through a cascade of multiple layers, with each successive layer using the output from the previous one to inform its results. Deep learning models can become more and more accurate as they process more data, essentially learning from previous results to refine their ability to make correlations and connections.

Deep learning is loosely based on the way biological neurons connect with one another to process information in the brains of animals. Similar to the way electrical signals travel across the cells of living creatures, each subsequent layer of nodes is activated when it receives stimuli from its neighboring neurons.

In artificial neural networks (ANNs), the basis for deep learning models, each layer may be assigned a specific portion of a transformation task, and data might traverse the layers multiple times to refine and optimize the ultimate output.

These “hidden” layers serve to perform the mathematical translation tasks that turn raw input into meaningful output.
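
The layered cascade described above can be sketched in a few lines of Python. This is a minimal illustration, not a production model: the layer sizes, the weights, and the choice of `tanh` as the non-linearity are all assumptions made for the example, and a real network would learn its weights from training data rather than have them written by hand.

```python
import math

def dense_layer(inputs, weights, biases):
    """One layer: a weighted sum of the inputs plus a bias, then a non-linearity."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(math.tanh(total))  # simple non-linear activation
    return outputs

def forward(inputs, layers):
    """Pass data through each layer in turn; each layer consumes the previous output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical, hand-picked weights: 3 inputs -> 2 hidden nodes -> 1 output.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                              # output layer
]
prediction = forward([1.0, 0.5, -1.0], layers)
print(prediction)
```

The example covers only the forward direction, in which raw input becomes output. Training, the step that adjusts the weights so the output improves over time, is left out for brevity.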

“Deep learning methods are representation-learning methods with multiple levels of representation, obtained by composing simple but non-linear modules that each transform the representation at one level (starting with the raw input) into a representation at a higher, slightly more abstract level,” explains a 2015 article published in Nature, authored by engineers from Facebook, Google, the University of Toronto, and Université de Montréal.

“With the composition of enough such transformations, very complex functions can be learned. Higher layers of representation amplify aspects of the input that are important for discrimination and suppress irrelevant variations.”

This multi-layered strategy allows deep learning models to complete classification tasks such as identifying subtle abnormalities in medical images, clustering patients with similar characteristics into risk-based cohorts, or highlighting relationships between symptoms and outcomes within vast quantities of unstructured data.

Unlike other types of machine learning, deep learning has the added benefit of being able to make decisions with significantly less involvement from human trainers.

While basic machine learning requires a programmer to identify whether a conclusion is correct or not, deep learning can gauge the accuracy of its answers on its own due to the nature of its multi-layered structure.

Deep learning also requires less preprocessing of data. The network itself takes care of many of the filtering and normalization tasks that must be completed by human programmers when using other machine learning techniques.

“Conventional machine-learning techniques are limited in their ability to process natural data in their raw form,” said the article from Nature.

“For decades, constructing a pattern-recognition or machine-learning system required careful engineering and considerable domain expertise to design a feature extractor that transformed the raw data (such as the pixel values of an image) into a suitable internal representation or feature vector from which the learning subsystem, often a classifier, could detect or classify patterns in the input.”

Deep learning networks, however, “automatically discover the representations needed for detection or classification,” reducing the need for supervision and speeding up the process of extracting actionable insights from datasets that have not been as extensively curated.

Naturally, the mathematics involved in developing deep learning models are extraordinarily intricate, and there are many different variations of networks that leverage different sub-strategies within the field.

The science of deep learning is evolving very quickly to power some of the most advanced computing capabilities in the world, spanning every industry and adding significant value to user experiences and competitive decision-making.

What are the use cases for deep learning in healthcare?

Many of the industry’s deep learning headlines are currently related to small-scale pilots or research projects in their pre-commercialized phases.

However, deep learning is steadily finding its way into innovative tools that have high-value applications in the real-world clinical environment.

Some of the most promising use cases include innovative patient-facing applications as well as a few surprisingly established strategies for improving the health IT user experience.

Imaging analytics and diagnostics

One type of deep learning, known as convolutional neural networks (CNNs), is particularly well-suited to analyzing images, such as MRI results or x-rays.

CNNs are designed with the assumption that they will be processing images, according to computer science experts at Stanford University, allowing the networks to operate more efficiently and handle larger images.
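
One way to see why the image assumption helps is to look at the core operation of a CNN: a small filter slides across the picture, and the same few weights are reused at every position. The sketch below is a simplified illustration under stated assumptions. The 4x4 "image" and the edge-detecting filter are invented for the example, and a real CNN would learn its filter values from data and stack many such layers.

```python
def convolve2d(image, kernel):
    """Slide a small kernel over a 2D image (no padding, stride 1).

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Hypothetical 4x4 grayscale patch: dark on the left, bright on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-written vertical-edge filter; a trained CNN would learn these values.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = convolve2d(image, kernel)
print(feature_map)  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The filter has only four weights regardless of how large the image is, and the output is strongest exactly where the dark region meets the bright one.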

As a result, some CNNs are approaching – or even surpassing – the accuracy of human diagnosticians when identifying important features in diagnostic imaging studies.

In June of 2018, a study in the Annals of Oncology showed that a convolutional neural network trained to analyze dermatology images identified melanoma with ten percent more specificity than human clinicians.

Even when human clinicians were equipped with background information on patients, such as age, sex, and the body site of the suspect feature, the CNN outperformed the dermatologists by nearly 7 percent.
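
For readers unfamiliar with the metric, specificity is the share of truly benign cases that a reader correctly leaves unflagged, while sensitivity is the share of true disease cases that are caught. The short sketch below shows the arithmetic. The counts are hypothetical and chosen only to produce round numbers; they are not the study's data, and the example assumes the gap is read in percentage points.

```python
def sensitivity(true_pos, false_neg):
    """Share of actual disease cases that were correctly flagged."""
    return true_pos / (true_pos + false_neg)

def specificity(true_neg, false_pos):
    """Share of actual benign cases that were correctly left unflagged."""
    return true_neg / (true_neg + false_pos)

# Hypothetical counts for 100 benign lesions, invented for illustration.
reader_a = specificity(true_neg=70, false_pos=30)  # 0.7
reader_b = specificity(true_neg=80, false_pos=20)  # 0.8

# Difference expressed in percentage points.
gap_points = round((reader_b - reader_a) * 100, 1)
print(reader_a, reader_b, gap_points)  # 0.7 0.8 10.0
```

In this made-up scenario, the second reader would spare ten more patients per hundred from an unnecessary follow-up on a benign lesion.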

“Our data clearly show that a CNN algorithm may be a suitable tool to aid physicians in melanoma detection irrespective of their individual level of experience and training,” said the team of researchers from a number of German academic institutions.

In addition to being highly accurate, deep learning tools are fast.

Researchers at the Icahn School of Medicine at Mount Sinai have developed a deep neural network capable of diagnosing crucial neurological conditions, such as stroke and brain hemorrhage, 150 times faster than human radiologists.

The tool took just 1.2 seconds to process the image, analyze its contents, and alert providers of a problematic clinical finding.

“The expression ‘time is brain’ signifies that rapid response is critical in the treatment of acute neurological illnesses, so any tools that decrease time to diagnosis may lead to improved patient outcomes,” said Joshua Bederson, MD, Professor and System Chair for the Department of Neurosurgery at Mount Sinai Health System and Clinical Director of the Neurosurgery Simulation Core.

Deep learning is so adept at image work that some AI scientists are using neural networks to create medical images, not just read them.

A team from NVIDIA, the Mayo Clinic, and the MGH & BWH Center for Clinical Data Science has developed a method of using generative adversarial networks (GANs), another type of deep learning, which can create stunningly realistic medical images from scratch.

The images use patterns learned from real scans to create synthetic versions of CT or MRI images. The data can be randomly generated and endlessly diverse, allowing researchers to access large volumes of necessary data without any concerns around patient privacy or consent.

These simulated images are so accurate that they can help train future deep learning models to diagnose clinical findings.

“Medical imaging data sets are often imbalanced as pathologic findings are generally rare, which introduces significant challenges when training deep learning models,” said the team. “We propose a method to generate synthetic abnormal MRI images with brain tumors by training a generative adversarial network.”

“This offers an automatable, low-cost source of diverse data that can be used to supplement the training set. For example, we can alter a tumor’s size, change its location, or place a tumor in an otherwise healthy brain, to systematically have the image and the corresponding annotation.”

Such a strategy could significantly reduce one of AI’s biggest sticking points: a lack of reliable, sharable, high-volume datasets to use for training and validating machine learning models.

Natural language processing

Deep learning and neural networks already underpin many of the natural language processing tools that have become popular in the healthcare industry for dictating documentation and translating speech-to-text.

Because neural networks are designed for classification, they can identify individual linguistic or grammatical elements by “grouping” similar words together and mapping them in relation to one another.

This helps the network understand complex semantic meaning. But the task is complicated by the nuances of common speech and communication. For example, words that always appear next to each other in an idiomatic phrase may end up meaning something very different than if those same words appeared in another context (think “kick the bucket” or “barking up the wrong tree”).
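
The “grouping” of similar words mentioned above is commonly implemented by representing each word as a list of numbers (a vector) and measuring how closely two vectors point in the same direction. The sketch below assumes that approach. The three-dimensional vectors are invented for illustration, whereas a real system would learn vectors with hundreds of dimensions from large volumes of text.

```python
import math

def cosine_similarity(a, b):
    """Return 1.0 for vectors pointing the same way, near 0.0 for unrelated ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical word vectors, hand-written for this example only.
vectors = {
    "fever": [0.9, 0.1, 0.0],
    "chills": [0.8, 0.2, 0.1],
    "invoice": [0.0, 0.1, 0.9],
}

def most_similar(word):
    """Find the other word whose vector lies closest to the given word's vector."""
    others = [w for w in vectors if w != word]
    return max(others, key=lambda w: cosine_similarity(vectors[word], vectors[w]))

print(most_similar("fever"))  # chills
```

A single fixed vector per word is also a reason idioms are hard: the vector for “bucket” is the same whether or not the word appears in “kick the bucket.”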

While acceptably accurate speech-to-text has become a relatively common competency for dictation tools, generating reliable and actionable insights from free-text medical data is significantly more challenging.

Unlike images, which consist of defined rows and columns of pixels, the free-text clinical notes in electronic health records (EHRs) are notoriously messy, incomplete, inconsistent, full of cryptic abbreviations, and loaded with jargon.

Currently, most deep learning tools still struggle with the task of identifying important clinical elements, establishing meaningful relationships between them, and translating those relationships into some sort of actionable information for an end user.

A recent literature review from JAMIA found that while deep learning surpasses other machine learning methods for processing unstructured text, several significant challenges, including the quality of EHR data, are holding these tools back from true success.

“Researchers have confirmed that finding patterns among multimodal data can increase the accuracy of diagnosis, prediction, and overall performance of the learning system. However, multimodal learning is challenging due to the heterogeneity of the data,” the authors observed.

Accessing enough high-quality data to train models accurately is also problematic, the article continued. Data that is biased or skewed towards particular age groups, ethnicities, or other characteristics could create models that are not equipped to accurately assess a broad variety of real-life patients.

Still, deep learning represents the most promising pathway forward into trustworthy free-text analytics, and a handful of pioneering developers are finding ways to break through the existing barriers.

A team from Google, UC San Francisco, Stanford Medicine, and the University of Chicago Medicine, for example, developed a deep learning and natural language processing algorithm that analyzed more than 46 billion data points from more than 216,000 EHRs across two hospitals.

The tool was able to improve on the accuracy of traditional approaches for identifying unexpected hospital readmissions, predicting length of stay, and forecasting inpatient mortality.

“This predictive performance was achieved without hand-selection of variables deemed important by an expert, similar to other applications of deep learning to EHR data,” the researchers said.

“Instead, our model had access to tens of thousands of predictors for each patient, including free-text notes, and identified which data were important for a particular prediction.”

While the project is only a proof-of-concept study, Google researchers said, the findings could have dramatic implications for hospitals and health systems looking to reduce negative outcomes and become more proactive about delivering critical care.

Drug discovery and precision medicine

Precision medicine and drug discovery are also on the agenda for deep learning developers. Both tasks require processing truly enormous volumes of genomic, clinical, and population-level data with the goal of identifying hitherto unknown associations between genes, pharmaceuticals, and physical environments.

Deep learning is an ideal strategy for researchers and pharmaceutical stakeholders looking to highlight new patterns in these relatively unexplored data sets – especially because many precision medicine researchers don’t yet know exactly what they should be looking for.

The world of genetic medicine is so new that unexpected discoveries are commonplace, creating an exciting proving ground for innovative approaches to targeted care.

The National Cancer Institute and the Department of Energy are embracing this spirit of exploration through a number of joint projects focused on leveraging machine learning for cancer discoveries.

The combination of predictive analytics and molecular modeling will hopefully uncover new insights into how and why certain cancers form in certain patients.

Deep learning technologies will accelerate the process of analyzing data, the two agencies said, shrinking the processing time for key components from weeks or months to just a few hours.

The private sector is similarly committed to illustrating how powerful deep learning can be for precision medicine.

A partnership by GE Healthcare and Roche Diagnostics, announced in January of 2018, will focus on using deep learning and other machine learning strategies to synthesize disparate data sets critical to developing precision medicine insights.

The two companies will work to combine in-vivo and in-vitro data, EHR data, clinical guidelines, and real-time monitoring data to support clinical decision-making and the creation of more effective, less invasive therapeutic pathways.

“By leveraging this combined data set using machine learning and deep learning, it may be possible in the future to reduce the number of unnecessary biopsies that are performed due to suspicious findings in the mammograms and possibly also reduce mastectomies that are performed to combat ductal carcinoma in situ, a condition that may evolve into invasive breast cancer in some cases,” said Nadeem Ishaque, Chief Innovation Officer, GE Healthcare Imaging.

A separate study, conducted by researchers from the University of Massachusetts and published in JMIR Medical Informatics, found that deep learning could also identify adverse drug events (ADEs) with much greater accuracy than traditional models.

The tool combines deep learning with natural language processing to comb through unstructured EHR data, highlighting worrisome associations between the type, frequency, and dosage of medications. The results could be used for monitoring the safety of novel therapies or understanding how new pharmaceuticals are being prescribed in the real-world clinical environment.

Clinical decision support and predictive analytics

In a similar vein, the industry has high hopes for the role of deep learning in clinical decision support and predictive analytics for a wide variety of conditions.

Deep learning may soon be a handy diagnostic companion in the inpatient setting, where it can alert providers to changes in high-risk conditions such as sepsis and respiratory failure.

Researchers from the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) have created a project called ICU Intervene, which leverages deep learning to alert clinicians to patient downturns in the critical care unit.

“Much of the previous work in clinical decision-making has focused on outcomes such as mortality (likelihood of death), while this work predicts actionable treatments,” said PhD student and lead author Harini Suresh. “In addition, the system is able to use a single model to predict many outcomes.”

The tool offers human clinicians a detailed rationale for its recommendations, helping to foster trust and allowing providers to have confidence in their own decision-making when potentially overruling the algorithm.

Google is also on the leading edge of clinical decision support, this time for eye diseases. The company’s UK-based subsidiary, DeepMind, is working to develop a commercialized deep learning CDS tool that can identify more than 50 different eye diseases – and provide treatment recommendations for each one.

In a supporting study published in Nature, DeepMind and Moorfields Eye Hospital found that the tool is just as accurate as a human clinician, and has the potential to significantly expand access to care by reducing the time it takes for an exam and diagnosis.

“Currently, eye care professionals use optical coherence tomography (OCT) scans to help diagnose eye conditions. These 3D images provide a detailed map of the back of the eye, but they are hard to read and need expert analysis to interpret,” explained DeepMind.

“The time it takes to analyze these scans, combined with the sheer number of scans that healthcare professionals have to go through (over 1,000 a day at Moorfields alone), can lead to lengthy delays between scan and treatment – even when someone needs urgent care. If they develop a sudden problem, such as a bleed at the back of the eye, these delays could even cost patients their sight.”

With deep learning, the triage process is nearly instantaneous, the company asserted, and patients do not have to sacrifice quality of care.

“This is a hugely exciting milestone, and another indication of what is possible when clinicians and technologists work together,” DeepMind said.

What is the future of deep learning in healthcare?

As intriguing as these pilots and projects can be, they represent only the very beginning of deep learning’s role in healthcare analytics.

Excitement and interest about deep learning are everywhere, capturing the imaginations of regulators and rule makers, private companies, care providers, and even patients.

The Office of the National Coordinator (ONC) is one organization with particularly high hopes for deep learning, and it is already applauding some developers for achieving remarkable results.

In a recent report on the state of AI in the healthcare setting, the agency noted that some deep learning algorithms have already produced “transformational” outcomes.

“There have been significant demonstrations of the potential utility of artificial Intelligence approaches based on deep learning for use in medical diagnostics,” the report said.

“Where good training sets represent the highest levels of medical expertise, applications of deep learning algorithms in clinical settings provide the potential of consistently delivering high quality results.”

The report highlighted early successes in diabetic retinal screenings and the classification of skin cancer as two areas where deep learning may already be changing the status quo.

On the clinical side, imaging analytics is likely to be the focal point for the near future, due to the fact that deep learning already has a head start on many high-value applications.

But purely clinical applications are only one small part of how deep learning is preparing to change the way the healthcare system functions.

The strategy is integral to many consumer-facing technologies, such as chatbots, mHealth apps, and virtual personalities like Alexa, Siri, and Google Assistant.

These tools have the potential to radically alter the way patients interact with the healthcare system, offering home-based chronic disease management programming, 24/7 access to basic triage, and new ways to complete administrative tasks.

By 2019, up to 40 percent of businesses are planning to integrate one or more of these popular consumer technologies into their internal or external workflows.

Customer support and communication are two early implementations. But with market-movers like Amazon rumored to start rolling out more consumer-facing health options to patients, it may only be a matter of time before chatting with Alexa becomes as common as shooting the breeze with a medical assistant.

Voice recognition and other analytics based on deep learning also have the near-term potential to provide some relief to physicians and nurses struggling with their EHRs.

Google appears particularly interested in capturing medical conversations in the clinic and using deep learning to reduce administrative burdens on providers.

One recent research paper illustrated the potential to use deep learning and NLP to understand casual conversation in a noisy environment, giving rise to the possibility of using an ambient, intelligent scribe to shoulder the onus of documentation.

“We wondered: could the voice recognition technologies already available in Google Assistant, Google Home, and Google Translate be used to document patient-doctor conversations and help doctors and scribes summarize notes more quickly?” a Google team posited.

“While most of the current automatic speech recognition (ASR) solutions in medical domain focus on transcribing doctor dictations (i.e., single speaker speech consisting of predictable medical terminology), our research shows that it is possible to build an ASR model which can handle multiple speaker conversations covering everything from weather to complex medical diagnosis,” the blog post says.

Google will work with physicians and data scientists at Stanford to refine the technology and understand how it can be best applied to the clinical setting.

“We hope these technologies will not only help return joy to practice by facilitating doctors and scribes with their everyday workload, but also help the patients get more dedicated and thorough medical attention, ideally, leading to better care,” the team said.

EHR vendors are also taking a hard look at how machine learning can streamline the user experience by eliminating wasteful interactions and presenting relevant data more intuitively within the workflow.

“Taking out the trash” by using artificial intelligence to learn a user’s habits, anticipate their needs, and display the right data at the right time is a top priority for nearly all of the major health IT vendors – vendors who are finding themselves in the hot seat as unhappy customers plead for better solutions for their daily tasks.

Both patients and providers are demanding much more consumer-centered tools and interactions from the healthcare industry, and artificial intelligence may now be mature enough to start delivering.

“We finally have enough affordable computing power to get the answers we’re looking for,” said James Golden, PhD, Senior Managing Director for PwC’s Healthcare Advisory Group, to HealthITAnalytics.com in February of 2018.

“When I did my PhD in the 90s on back propagation neural networks, we were working with an input layer, an output layer, and two middle layers,” he recalled.

“That’s not extremely complex. But it ran for four days on an Apple Lisa before producing results. I can do the same computation today in a picosecond on an iPhone. That is an enormous, staggering leap in our capabilities.”

The intersection of more advanced methods, improved processing power, and growing interest in innovative ways of predicting and preventing illness and lowering the cost of healthcare will likely bode well for deep learning.

With an extremely high number of promising use cases, strong investment from major players in the industry, and a growing amount of data to support cutting-edge analytics, deep learning will no doubt play a central role in the quest to deliver the highest possible quality care to consumers for decades to come.